Teresa Vidal-Calleja

Centre for Autonomous Systems, School of Mechanical and Mechatronic Engineering, University of Technology Sydney, Sydney, New South Wales, Australia

A Scene Representation for Online Spatial Sonification

Dec 07, 2024

Abstract: Robotic perception is emerging as a crucial technology for navigation aids, particularly benefiting individuals with visual impairments through sonification. This paper presents a novel mapping framework that accurately represents spatial geometry for sonification, transforming physical spaces into auditory experiences. By leveraging depth sensors, we convert incrementally built 3D scenes into a compact 360-degree representation based on angular and distance information, aligning with human auditory perception. Our proposed mapping framework utilises a sensor-centric structure, maintaining 2D circular or 3D cylindrical representations, and employs the VDB-GPDF for efficient online mapping. We introduce two sonification modes, circular ranging and circular ranging of objects, along with real-time user control over auditory filters. Incorporating binaural room impulse responses, our framework provides perceptually robust auditory feedback. Quantitative and qualitative evaluations demonstrate superior performance in accuracy, coverage, and timing compared to existing approaches, with effective handling of dynamic objects. The accompanying video showcases the practical application of spatial sonification in room-like environments.
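As a rough illustration of a 2D circular representation built from angular and distance information, the sketch below bins sensor-centric points by azimuth and keeps the closest range per bin. The function name `circular_ranging`, the bin count, and the range cap are assumptions made for this example; the abstract does not describe the actual data structure at this level of detail.

```python
import math

def circular_ranging(points, num_bins=36, max_range=10.0):
    """Collapse sensor-centric 3D points into per-azimuth minimum distances.

    Hypothetical helper: each bin covers 360/num_bins degrees around the
    sensor and stores the closest horizontal range seen in that direction.
    """
    ranges = [max_range] * num_bins          # empty bins report max_range
    bin_width = 2.0 * math.pi / num_bins
    for x, y, _z in points:                  # height is ignored in the 2D variant
        distance = math.hypot(x, y)
        if distance == 0.0 or distance > max_range:
            continue                         # skip the origin and far points
        azimuth = math.atan2(y, x) % (2.0 * math.pi)
        index = min(int(azimuth / bin_width), num_bins - 1)
        ranges[index] = min(ranges[index], distance)
    return ranges
```

A 3D cylindrical variant could, under the same assumptions, add a second index over height bands instead of discarding `z`.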

Gaussian Process Distance Fields Obstacle and Ground Constraints for Safe Navigation

Oct 23, 2024

Abstract: Navigating cluttered environments is a challenging task for any mobile system. Existing approaches for ground-based mobile systems primarily focus on small wheeled robots, which face minimal constraints with overhanging obstacles and cannot manage steps or stairs, making the problem effectively 2D. However, navigation for legged robots (or even humans) has to consider an extra dimension. This paper proposes a tailored scene representation coupled with an advanced trajectory optimisation algorithm to enable safe navigation. Our 3D navigation approach is suitable for any ground-based mobile robot, whether wheeled or legged, as well as for human assistance. Given a 3D point cloud of the scene and the segmentation of the ground and non-ground points, we formulate two Gaussian Process distance fields to ensure a collision-free path and maintain distance to the ground constraints. Our method adeptly handles uneven terrain, steps, and overhanging objects through an innovative use of a quadtree structure, constructing a multi-resolution map of the free space and its connectivity graph based on a 2D projection of the relevant scene. Evaluations with both synthetic and real-world datasets demonstrate that this approach provides safe and smooth paths, accommodating a wide range of ground-based mobile systems.
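The multi-resolution free-space idea can be sketched with a plain quadtree subdivision of a 2D occupancy grid. This is only an illustration of the data structure: the function `free_leaves`, the grid encoding (1 = occupied, 0 = free), and the power-of-two grid size are assumptions for the example, and the ground and overhang handling described in the abstract is not modelled.

```python
def free_leaves(grid, x=0, y=0, size=None):
    """Return free quadtree leaves as (x, y, size) squares.

    Assumes `grid` is a square list of lists whose side is a power of two,
    with 1 marking occupied cells and 0 marking free cells.
    """
    if size is None:
        size = len(grid)
    cells = [grid[row][col]
             for row in range(y, y + size)
             for col in range(x, x + size)]
    if not any(cells):
        return [(x, y, size)]   # uniformly free: keep one coarse leaf
    if all(cells) or size == 1:
        return []               # fully occupied: contributes no free space
    half = size // 2
    leaves = []
    for dy in (0, half):        # mixed region: recurse into the four quadrants
        for dx in (0, half):
            leaves += free_leaves(grid, x + dx, y + dy, half)
    return leaves
```

Large open areas end up as a few coarse leaves while cluttered areas are refined down to single cells; a connectivity graph could then link leaves that share an edge.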

Real-Time Truly-Coupled Lidar-Inertial Motion Correction and Spatiotemporal Dynamic Object Detection

Oct 07, 2024

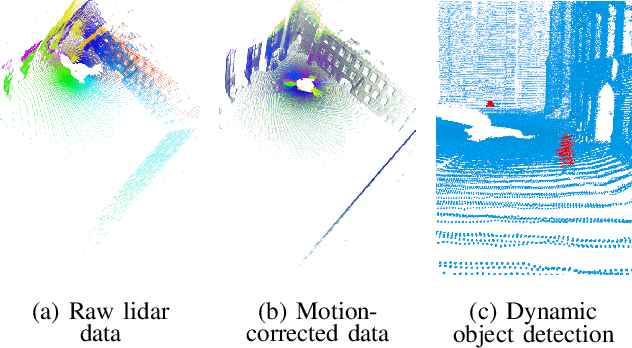

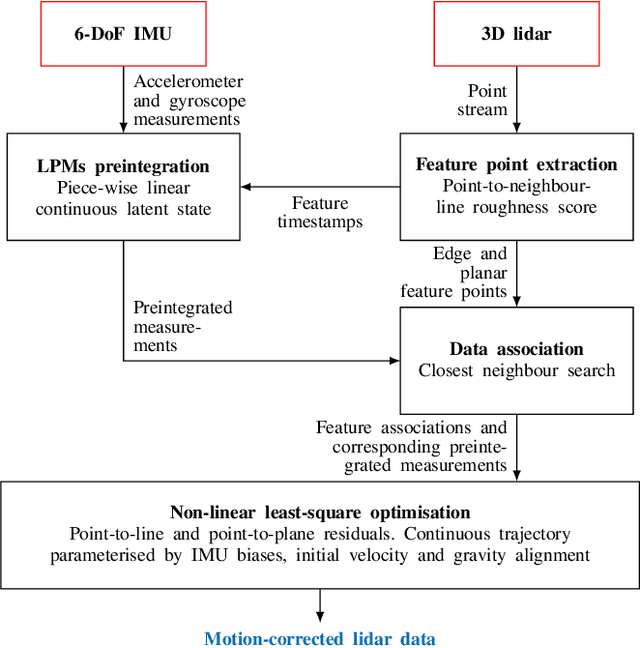

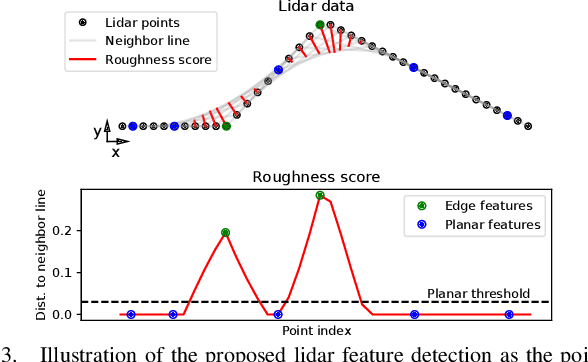

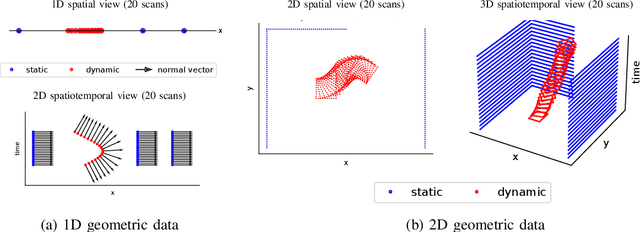

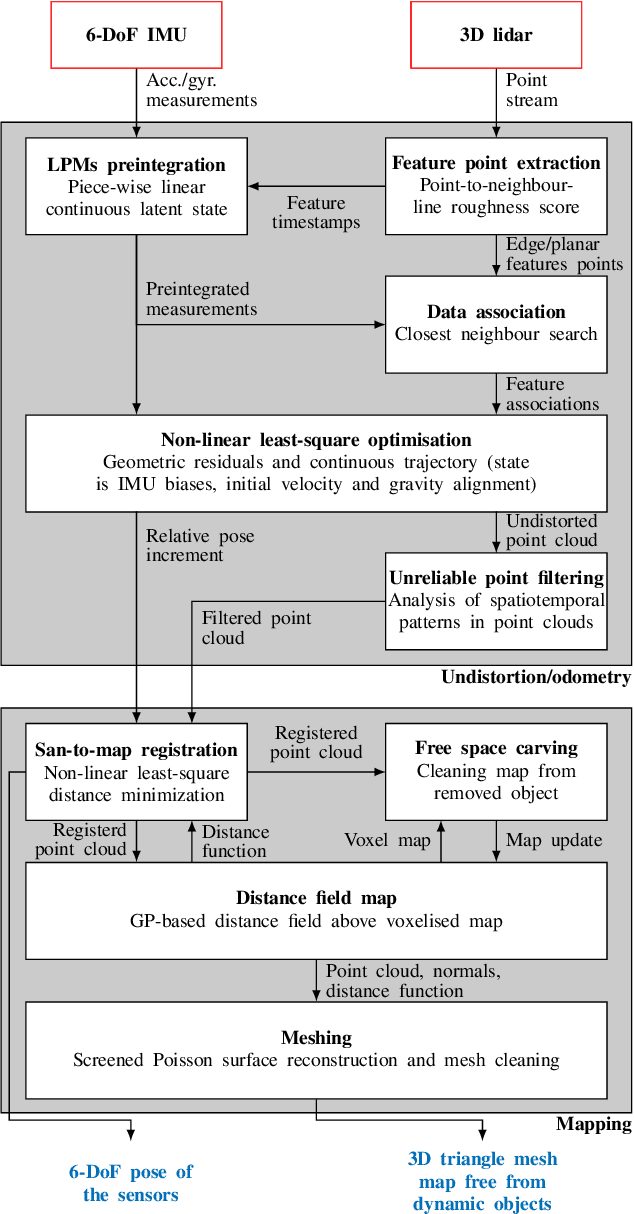

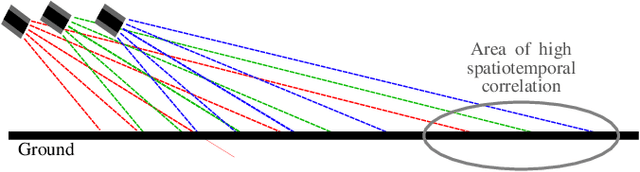

Abstract: Over the past decade, lidars have become a cornerstone of robotics state estimation and perception thanks to their ability to provide accurate geometric information about their surroundings in the form of 3D scans. Unfortunately, most current lidars do not take snapshots of the environment but sweep the environment over a period of time (typically around 100 ms). Such a rolling-shutter-like mechanism introduces motion distortion into the collected lidar scan, thus hindering downstream perception applications. In this paper, we present a novel method for motion distortion correction of lidar data by tightly coupling lidar with Inertial Measurement Unit (IMU) data. The motivation of this work is map-free dynamic object detection based on lidar. The proposed lidar data undistortion method relies on continuous preintegration of IMU measurements, which allows parameterising the sensors' continuous 6-DoF trajectory using solely eleven discrete state variables (biases, initial velocity, and gravity direction). The undistortion consists of feature-based distance minimisation of point-to-line and point-to-plane residuals in a non-linear least-squares formulation. Given undistorted geometric data over a short temporal window, the proposed pipeline computes the spatiotemporal normal vector of each of the lidar points. The temporal component of the normals is a proxy for the corresponding point's velocity, therefore allowing for learning-free dynamic object classification without the need for registration in a global reference frame. We demonstrate the soundness of the proposed method and its different components using public datasets and compare them with state-of-the-art lidar-inertial state estimation and dynamic object detection algorithms.
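To see why the temporal component of a spatiotemporal normal relates to velocity, consider a deliberately reduced toy case with one spatial dimension plus time. A surface point moving as x = x0 + v t traces a line in (x, t) whose normal is proportional to (1, -v), so v = -n_t / n_x. The sketch below fits that line with a closed-form principal-axis computation; the function names and the 1D simplification are assumptions for illustration and do not reproduce the paper's pipeline.

```python
import math

def spacetime_normal(samples):
    """Fit a line to (x, t) samples and return its unit normal (n_x, n_t)."""
    n = len(samples)
    mean_x = sum(x for x, _ in samples) / n
    mean_t = sum(t for _, t in samples) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in samples)
    stt = sum((t - mean_t) ** 2 for _, t in samples)
    sxt = sum((x - mean_x) * (t - mean_t) for x, t in samples)
    # Angle of the dominant direction of the 2x2 scatter matrix.
    theta = 0.5 * math.atan2(2.0 * sxt, sxx - stt)
    # The normal is the dominant direction rotated by 90 degrees.
    return (-math.sin(theta), math.cos(theta))

def normal_speed(normal):
    """Speed implied by the normal; undefined when n_x is zero."""
    n_x, n_t = normal
    return -n_t / n_x
```

For a static point the fitted normal has a (near) zero temporal component, so thresholding the implied speed gives a simple dynamic/static split in this toy setting.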

2FAST-2LAMAA: A Lidar-Inertial Localisation and Mapping Framework for Non-Static Environments

Oct 07, 2024

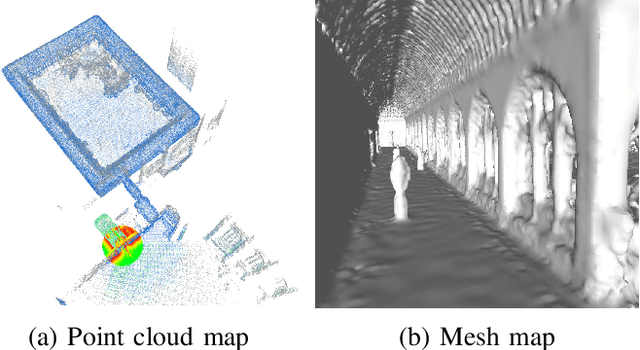

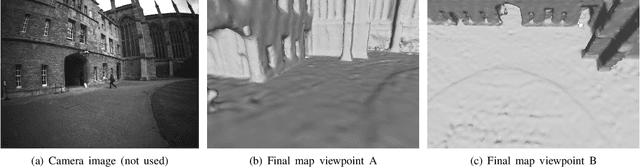

Abstract: This document presents a framework for lidar-inertial localisation and mapping named 2Fast-2Lamaa. The method revolves around two main steps: the inertial-aided undistortion of the lidar data and the scan-to-map registration using a distance-field representation of the environment. The initialisation-free undistortion uses inertial data to constrain the continuous trajectory of the sensor during the lidar scan. The eleven DoFs that fully characterise the trajectory are estimated by minimising lidar point-to-line and point-to-plane distances in a non-linear least-squares formulation. The registration uses a map that provides a distance field for the environment based on Gaussian Process regression. The pose of an undistorted lidar scan is optimised to minimise the distance field queries of its points with respect to the map. After registration, the new geometric information is efficiently integrated into the map. The soundness of 2Fast-2Lamaa is demonstrated over several datasets (qualitative evaluation only). The real-time implementation is made publicly available at https://github.com/UTS-RI/2fast2lamaa.

DynORecon: Dynamic Object Reconstruction for Navigation

Sep 30, 2024

Abstract: This paper presents DynORecon, a Dynamic Object Reconstruction system that leverages the information provided by Dynamic SLAM to simultaneously generate a volumetric map of observed moving entities while estimating free space to support navigation. By capitalising on the motion estimations provided by Dynamic SLAM, DynORecon continuously refines the representation of dynamic objects to eliminate residual artefacts from past observations and incrementally reconstructs each object, seamlessly integrating new observations to capture previously unseen structures. Our system is highly efficient (~20 FPS) and produces accurate (~10 cm) reconstructions of dynamic objects using simulated and real-world outdoor datasets.

Safe Bubble Cover for Motion Planning on Distance Fields

Aug 23, 2024

Abstract: We consider the problem of planning collision-free trajectories on distance fields. Our key observation is that querying a distance field at one configuration reveals a region of safe space whose radius is given by the distance value, obviating the need for additional collision checking within the safe region. We refer to such regions as safe bubbles, and show that safe bubbles can be obtained from any Lipschitz-continuous safety constraint. Inspired by sampling-based planning algorithms, we present three algorithms for constructing a safe bubble cover of free space, named bubble roadmap (BRM), rapidly exploring bubble graph (RBG), and expansive bubble graph (EBG). The bubble sampling algorithms are combined with a hierarchical planning method that first computes a discrete path of bubbles, followed by a continuous path within the bubbles computed via convex optimization. Experimental results show that the bubble-based methods yield up to 5-10 times cost reduction relative to conventional baselines while simultaneously reducing computational efforts by orders of magnitude.
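The key observation can be made concrete with a small 2D sketch: one distance query at a point yields a ball of that radius that is guaranteed free, and any straight segment with both endpoints inside the ball needs no further collision checks because a ball is convex. The circular obstacles and the function names below are assumptions for the example, not the planners (BRM, RBG, EBG) from the paper.

```python
import math

def distance_to_obstacles(point, obstacles):
    """Distance from a 2D point to the nearest circular obstacle.

    `obstacles` is a list of ((cx, cy), radius) pairs (hypothetical scene).
    """
    return min(math.dist(point, centre) - radius for centre, radius in obstacles)

def safe_bubble(point, obstacles):
    """One distance query gives a free ball: (centre, radius)."""
    return point, distance_to_obstacles(point, obstacles)

def segment_in_bubble(start, end, bubble):
    """A segment lies inside a ball when both endpoints do (convexity)."""
    centre, radius = bubble
    return math.dist(start, centre) <= radius and math.dist(end, centre) <= radius
```

With an obstacle of radius 1 centred at (5, 0), the bubble at the origin has radius 4, so the segment from (0, 0) to (3, 0) is certified safe by that single query while the segment to (4.5, 0) is not.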

VDB-GPDF: Online Gaussian Process Distance Field with VDB Structure

Jul 12, 2024

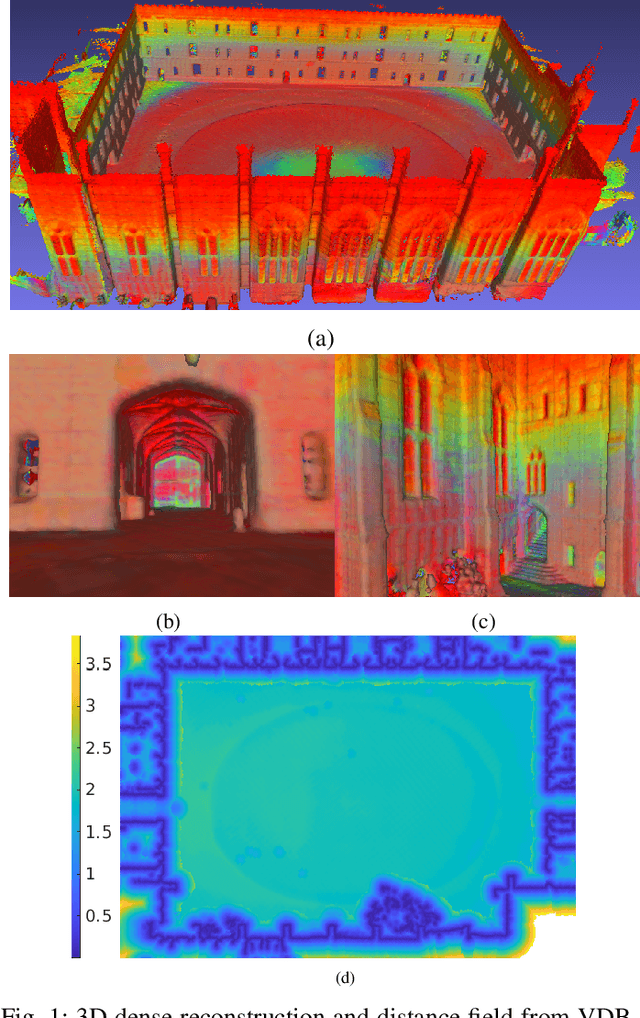

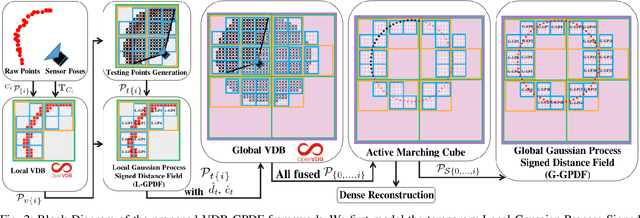

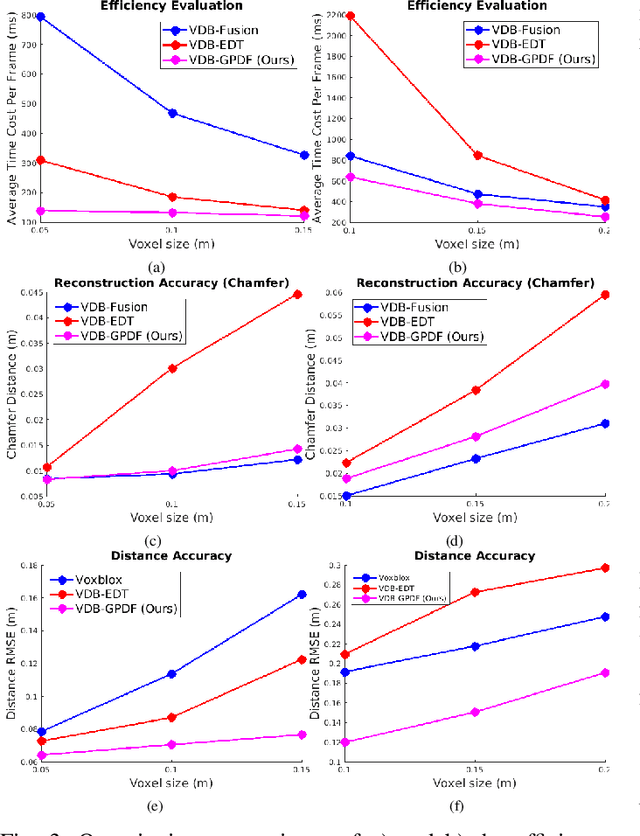

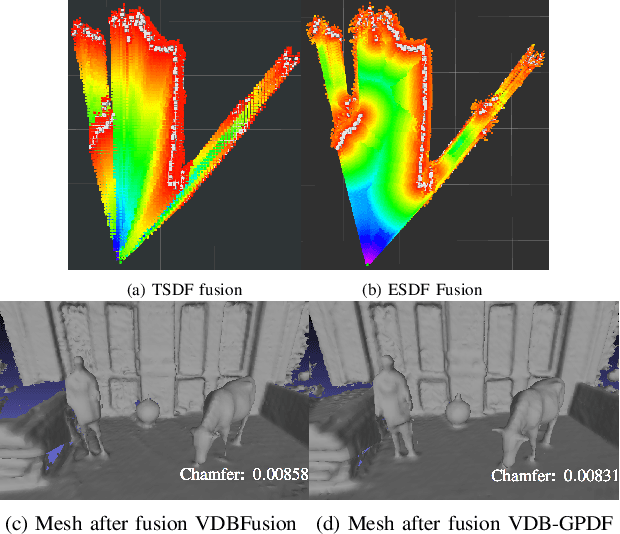

Abstract: Robots reason about the environment through dedicated representations. Popular choices for dense representations exploit Truncated Signed Distance Functions (TSDF) and Octree data structures. However, TSDF is a projective signed distance obtained directly from depth measurements that overestimates the Euclidean distance. Octrees, despite being memory efficient, require tree traversal and can lead to increased runtime in large scenarios. Other representations based on Gaussian Process (GP) distance fields are appealing due to their probabilistic and continuous nature, but the computational complexity is a concern. In this paper, we present an efficient online mapping framework that seamlessly couples GP distance fields and the fast-access VDB data structure. This framework incrementally builds the Euclidean distance field and fuses other surface properties, like intensity or colour, into a global scene representation that can cater for large-scale scenarios. The key aspect is a latent Local GP Signed Distance Field (L-GPDF) contained in a local VDB structure that allows fast queries of the Euclidean distance, surface properties and their uncertainties for arbitrary points in the field of view. Probabilistic fusion is then performed by merging the inferred values of these points into a global VDB structure that is efficiently maintained over time. After fusion, the surface mesh is recovered, and a global GP Signed Distance Field (G-GPDF) is generated and made available for downstream applications to query accurate distance and gradients. A comparison with the state-of-the-art frameworks shows superior efficiency and accuracy of the inferred distance field and comparable reconstruction performance. The accompanying code will be publicly available at https://github.com/UTS-RI/VDB_GPDF.
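The abstract does not state the fusion rule, so the following is only one common way to merge two estimates that carry uncertainties: inverse-variance weighting, assuming independent Gaussian estimates of the same quantity. The function name `fuse` and its signature are hypothetical.

```python
def fuse(mean_a, var_a, mean_b, var_b):
    """Inverse-variance fusion of two independent Gaussian estimates.

    Assumption for illustration only; the paper's fusion rule may differ.
    Returns the fused (mean, variance).
    """
    weight_a = 1.0 / var_a      # more certain estimates get larger weights
    weight_b = 1.0 / var_b
    var = 1.0 / (weight_a + weight_b)
    mean = var * (weight_a * mean_a + weight_b * mean_b)
    return mean, var
```

Under this rule the fused variance is never larger than either input variance, which is the behaviour one would want when a voxel is observed repeatedly.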

Interactive Distance Field Mapping and Planning to Enable Human-Robot Collaboration

Mar 15, 2024

Abstract: Human-robot collaborative applications require scene representations that are kept up-to-date and facilitate safe motions in dynamic scenes. In this letter, we present an interactive distance field mapping and planning (IDMP) framework that handles dynamic objects and collision avoidance through an efficient representation. We define *interactive* mapping and planning as the process of creating and updating the representation of the scene online while simultaneously planning and adapting the robot's actions based on that representation. Given depth sensor data, our framework builds a continuous field that allows querying the distance and gradient to the closest obstacle at any required position in 3D space. The key aspect of this work is an efficient Gaussian Process field that performs incremental updates and implicitly handles dynamic objects with a simple and elegant formulation based on a temporary latent model. In terms of mapping, IDMP is able to fuse point cloud data from single and multiple sensors, query the free space at any spatial resolution, and deal with moving objects without semantics. In terms of planning, IDMP allows seamless integration with gradient-based motion planners, facilitating fast re-planning for collision-free navigation. The framework is evaluated on both real and synthetic datasets. A comparison with similar state-of-the-art frameworks shows superior performance when handling dynamic objects and comparable or better performance in the accuracy of the computed distance and gradient field. Finally, we show how the framework can be used for fast motion planning in the presence of moving objects. An accompanying video, code, and datasets are made publicly available at https://uts-ri.github.io/IDMP.

Continuous-Time Gaussian Process Motion-Compensation for Event-vision Pattern Tracking with Distance Fields

Mar 05, 2023

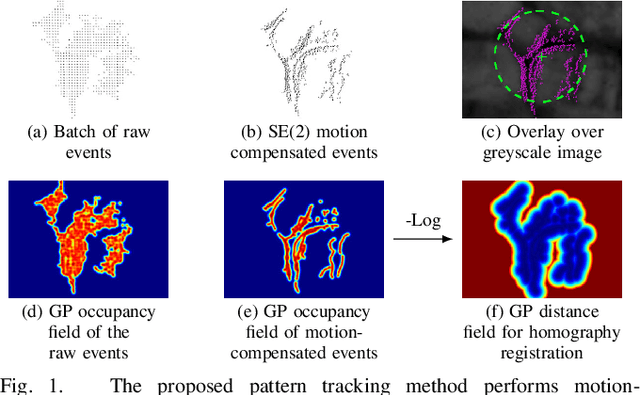

Abstract: This work addresses the issue of motion compensation and pattern tracking in event camera data. An event camera generates asynchronous streams of events triggered independently by each of the pixels upon changes in the observed intensity. Providing great advantages in low-light and rapid-motion scenarios, such unconventional data present significant research challenges as traditional vision algorithms are not directly applicable to this sensing modality. The proposed method decomposes the tracking problem into a local SE(2) motion-compensation step followed by a homography registration of small motion-compensated event batches. The first component relies on Gaussian Process (GP) theory to model the continuous occupancy field of the events in the image plane and embed the camera trajectory in the covariance kernel function. In doing so, estimating the trajectory is done similarly to GP hyperparameter learning by maximising the log marginal likelihood of the data. The continuous occupancy fields are turned into distance fields and used as templates for homography-based registration. By benchmarking the proposed method against other state-of-the-art techniques, we show that our open-source implementation performs high-accuracy motion compensation and produces high-quality tracks in real-world scenarios.

Accurate Gaussian Process Distance Fields with applications to Echolocation and Mapping

Feb 25, 2023

Abstract: This paper introduces a novel method to estimate distance fields from noisy point clouds using Gaussian Process (GP) regression. Distance fields, or distance functions, have gained popularity for applications like point cloud registration, odometry, SLAM, path planning, shape reconstruction, etc. A distance field provides a continuous representation of the scene. It is defined as the shortest distance between any query point and the closest surface. The key concept of the proposed method is a reverting function used to turn a GP-inferred occupancy field into an accurate distance field. The reverting function is specific to the chosen GP kernel. This paper provides the theoretical derivation of the proposed method and its relationship to existing techniques. The improved accuracy compared with existing distance fields is demonstrated with extensive simulated experiments. The level of accuracy of the proposed approach allows for novel applications that rely on precise distance estimation. Thus, alongside 3D point cloud registration, this work presents echolocation and mapping frameworks using ultrasonic guided waves sensing metallic structures. These methods leverage the proposed distance field in physics-based models to simulate the signal propagation and compare it with the actual signal received. Both simulated and real-world experiments are conducted to demonstrate the soundness of these frameworks.
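To illustrate what a kernel-specific reverting function looks like, the sketch below inverts a squared-exponential kernel: if an occupancy value behaves like k(d) = exp(-d^2 / (2 l^2)), then d = sqrt(-2 l^2 ln k). The choice of kernel, the names `se_kernel` and `revert_se`, and the clamping constant are assumptions for this example; the abstract does not say which kernel the paper uses, and the GP regression step that produces the occupancy is omitted here.

```python
import math

def se_kernel(distance, length_scale):
    """Squared-exponential kernel value for a given distance."""
    return math.exp(-distance ** 2 / (2.0 * length_scale ** 2))

def revert_se(occupancy, length_scale):
    """Invert the squared-exponential kernel to recover a distance.

    The occupancy is clamped to (0, 1] so the logarithm stays defined
    (the clamp bounds are an assumption of this sketch).
    """
    occupancy = min(max(occupancy, 1e-300), 1.0)
    return math.sqrt(-2.0 * length_scale ** 2 * math.log(occupancy))
```

For a single noise-free surface point the round trip is exact: an occupancy of `se_kernel(d, l)` reverts to `d`. With many surface points the inferred occupancy only approximates this form, which is where the accuracy analysis in the paper comes in.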
