Cedric Pradalier

Towards Efficient Occupancy Mapping via Gaussian Process Latent Field Shaping

Jun 16, 2025

Abstract:Occupancy mapping has been a key enabler of mobile robotics. Originally based on a discrete grid representation, occupancy mapping has evolved towards continuous representations that can predict the occupancy status at any location and account for occupancy correlations between neighbouring areas. Gaussian Process (GP) approaches treat this task as a binary classification problem using observations of both occupied and free space. Conceptually, a GP latent field is passed through a logistic function to obtain the output class without actually manipulating the GP latent field. In this work, we propose to act directly on the latent function to efficiently integrate free space information as a prior based on the shape of the sensor's field-of-view. A major difference with existing methods is the change in the classification problem, as we distinguish between free and unknown space. The 'occupied' area is the infinitesimally thin location where the class transitions from free to unknown. We demonstrate in simulated environments that our approach is sound and leads to competitive reconstruction accuracy.

NavBench: A Unified Robotics Benchmark for Reinforcement Learning-Based Autonomous Navigation

May 20, 2025

Abstract:Autonomous robots must navigate and operate in diverse environments, from terrestrial and aquatic settings to aerial and space domains. While Reinforcement Learning (RL) has shown promise in training policies for specific autonomous robots, existing benchmarks are often constrained to unique platforms, limiting generalization and fair comparisons across different mobility systems. In this paper, we present NavBench, a multi-domain benchmark for training and evaluating RL-based navigation policies across diverse robotic platforms and operational environments. Built on IsaacLab, our framework standardizes task definitions, enabling different robots to tackle various navigation challenges without the need for ad-hoc task redesigns or custom evaluation metrics. Our benchmark addresses three key challenges: (1) Unified cross-medium benchmarking, enabling direct evaluation of diverse actuation methods (thrusters, wheels, water-based propulsion) in realistic environments; (2) Scalable and modular design, facilitating seamless robot-task interchangeability and reproducible training pipelines; and (3) Robust sim-to-real validation, demonstrated through successful policy transfer to multiple real-world robots, including a satellite robotic simulator, an unmanned surface vessel, and a wheeled ground vehicle. By ensuring consistency between simulation and real-world deployment, NavBench simplifies the development of adaptable RL-based navigation strategies. Its modular design allows researchers to easily integrate custom robots and tasks by following the framework's predefined templates, making it accessible for a wide range of applications. Our code is publicly available at NavBench.

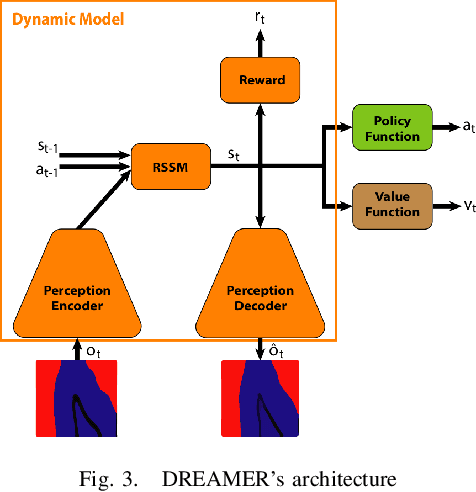

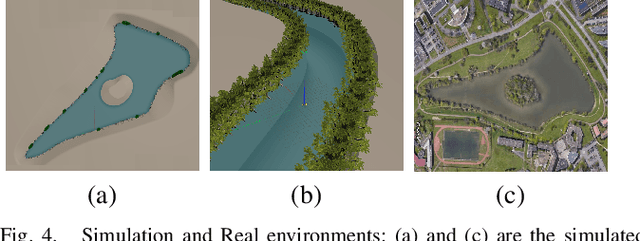

A Deep Reinforcement Learning Framework and Methodology for Reducing the Sim-to-Real Gap in ASV Navigation

Jul 11, 2024

Abstract:Despite the increasing adoption of Deep Reinforcement Learning (DRL) for Autonomous Surface Vehicles (ASVs), there still remain challenges limiting real-world deployment. In this paper, we first integrate buoyancy and hydrodynamics models into a modern Reinforcement Learning framework to reduce training time. Next, we show how system identification coupled with domain randomization improves the RL agent performance and narrows the sim-to-real gap. Real-world experiments for the task of capturing floating waste show that our approach lowers energy consumption by 13.1% while reducing task completion time by 7.4%. These findings, supported by sharing our open-source implementation, hold the potential to impact the efficiency and versatility of ASVs, contributing to environmental conservation efforts.

Self-Supervised Gaussian Regularization of Deep Classifiers for Mahalanobis-Distance-Based Uncertainty Estimation

May 23, 2023

Abstract:Recent works show that the data distribution in a network's latent space is useful for estimating classification uncertainty and detecting out-of-distribution (OOD) samples. To obtain a well-regularized latent space that is conducive to uncertainty estimation, existing methods bring in significant changes to model architectures and training procedures. In this paper, we present a lightweight, fast, and high-performance regularization method for Mahalanobis-distance-based uncertainty prediction that requires minimal changes to the network's architecture. To derive Gaussian latent representations favourable for Mahalanobis distance calculation, we introduce a self-supervised representation learning method that separates in-class representations into multiple Gaussians. Classes with non-Gaussian representations are automatically identified and dynamically clustered into multiple new classes that are approximately Gaussian. Evaluation on standard OOD benchmarks shows that our method achieves state-of-the-art results on OOD detection with minimal inference time, and is very competitive on predictive probability calibration. Finally, we show the applicability of our method to a real-life computer vision use case on microorganism classification.
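As a rough illustration of the Mahalanobis-distance scoring that the abstract builds on, the sketch below fits one Gaussian per class to latent features and scores a sample by its distance to the nearest class. It shows only the generic scoring step, not the paper's self-supervised regularization or dynamic class clustering. The function names, the shared (tied) covariance, and the ridge term `1e-6` are assumptions made for this example.

```python
import numpy as np

def fit_class_gaussians(features, labels):
    """Estimate per-class means and a shared precision matrix (assumed tied covariance)."""
    classes = np.unique(labels)
    means = {c: features[labels == c].mean(axis=0) for c in classes}
    # Center every sample on its own class mean, then pool for one covariance.
    centered = np.vstack([features[labels == c] - means[c] for c in classes])
    cov = centered.T @ centered / len(features)
    # Small ridge (illustrative value) keeps the covariance invertible.
    precision = np.linalg.inv(cov + 1e-6 * np.eye(cov.shape[0]))
    return means, precision

def mahalanobis_score(x, means, precision):
    """Squared Mahalanobis distance to the closest class; larger suggests OOD."""
    return min(float((x - m) @ precision @ (x - m)) for m in means.values())

# Toy latent features: two well-separated synthetic classes.
rng = np.random.default_rng(0)
feats = np.vstack([rng.normal(0.0, 1.0, (200, 2)), rng.normal(5.0, 1.0, (200, 2))])
labels = np.array([0] * 200 + [1] * 200)
means, precision = fit_class_gaussians(feats, labels)
score_in = mahalanobis_score(np.array([0.0, 0.0]), means, precision)
score_out = mahalanobis_score(np.array([20.0, -20.0]), means, precision)
```

In this toy setup, a point near a class mean receives a much smaller score than a point far from both classes, which is the property an OOD detector thresholds on.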

Accurate Gaussian Process Distance Fields with applications to Echolocation and Mapping

Feb 25, 2023

Abstract:This paper introduces a novel method to estimate distance fields from noisy point clouds using Gaussian Process (GP) regression. Distance fields, or distance functions, have gained popularity for applications like point cloud registration, odometry, SLAM, path planning, shape reconstruction, etc. A distance field provides a continuous representation of the scene. It is defined as the shortest distance between any query point and the closest surface. The key concept of the proposed method is a reverting function used to turn a GP-inferred occupancy field into an accurate distance field. The reverting function is specific to the chosen GP kernel. This paper provides the theoretical derivation of the proposed method and its relationship to existing techniques. The improved accuracy compared with existing distance fields is demonstrated with extensive simulated experiments. The level of accuracy of the proposed approach allows for novel applications that rely on precise distance estimation. Thus, alongside 3D point cloud registration, this work presents echolocation and mapping frameworks using ultrasonic guided waves sensing metallic structures. These methods leverage the proposed distance field in physics-based models to simulate the signal propagation and compare it with the actual signal received. Both simulated and real-world experiments are conducted to demonstrate the soundness of these frameworks.
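To make the idea of a kernel-specific reverting function more concrete, here is a minimal sketch under stated assumptions. The abstract does not give the function's form; the example assumes a squared-exponential kernel, surface points observed with occupancy 1, and a reverting function obtained by inverting that kernel, d = sqrt(-2 l^2 log(o)). The names `se_kernel` and `gp_distance` and the default `lengthscale` and `noise` values are hypothetical.

```python
import numpy as np

def se_kernel(a, b, lengthscale):
    """Squared-exponential kernel between two point sets of shape (n, dim) and (m, dim)."""
    d2 = np.sum((a[:, None, :] - b[None, :, :]) ** 2, axis=-1)
    return np.exp(-d2 / (2.0 * lengthscale ** 2))

def gp_distance(query, points, lengthscale=0.5, noise=1e-3):
    """Infer a latent occupancy field with GP regression, then revert it to a distance."""
    # GP regression with every surface point observed as occupancy 1 (assumption).
    K = se_kernel(points, points, lengthscale) + noise * np.eye(len(points))
    alpha = np.linalg.solve(K, np.ones(len(points)))
    occupancy = se_kernel(query, points, lengthscale) @ alpha
    # Clip so the logarithm below stays finite and non-positive.
    occupancy = np.clip(occupancy, 1e-12, 1.0)
    # Invert the squared-exponential kernel to recover a distance (assumed form).
    return np.sqrt(-2.0 * lengthscale ** 2 * np.log(occupancy))

# One surface point at the origin, one query point at unit distance.
surface = np.array([[0.0, 0.0]])
query = np.array([[1.0, 0.0]])
dist = gp_distance(query, surface)
```

With a single surface point, the recovered value is close to the true Euclidean distance, differing only through the noise term added to the kernel matrix.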

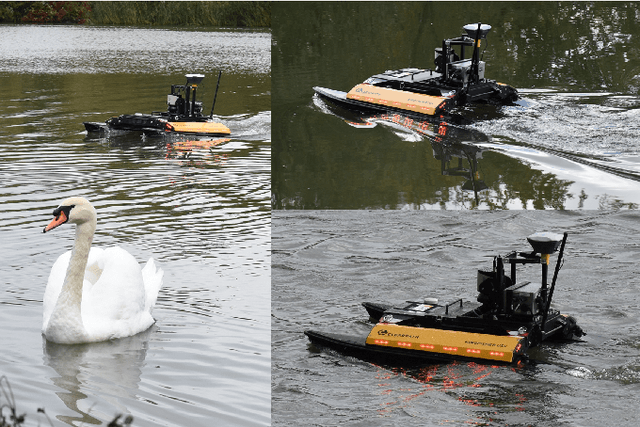

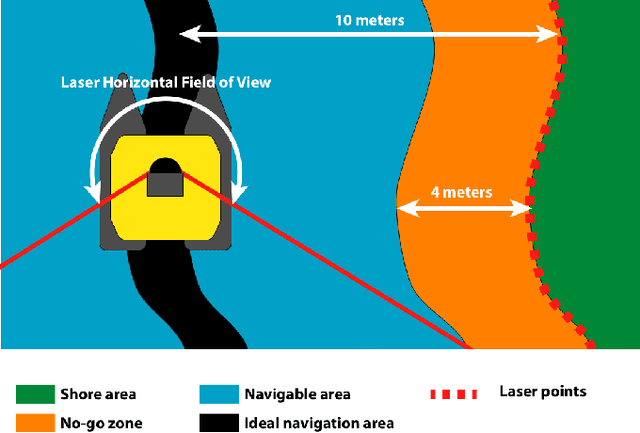

How To Train Your HERON

Feb 20, 2021

Abstract:In this paper we apply Deep Reinforcement Learning (Deep RL) and Domain Randomization to solve a navigation task in a natural environment relying solely on a 2D laser scanner. We train a model-based RL agent in simulation to follow lake and river shores and apply it on a real Unmanned Surface Vehicle in a zero-shot setup. We demonstrate that even though the agent has not been trained in the real world, it can fulfill its task successfully and adapt to changes in the robot's environment and dynamics. Finally, we show that the RL agent is more robust, faster, and more accurate than a state-aware Model-Predictive-Controller.

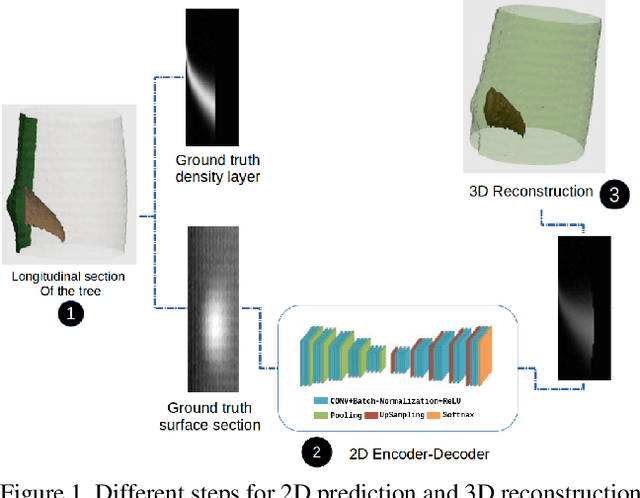

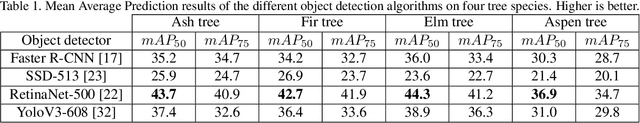

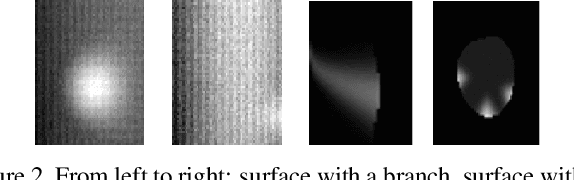

A Study on Trees's Knots Prediction from their Bark Outer-Shape

Oct 05, 2020

Abstract:In industry, the value of wood logs strongly depends on their internal structure, and more specifically on the distribution of knots inside the trees. As of today, CT scanners are the prevalent tool to acquire accurate images of the trees' internal structure. However, CT scanners are expensive and slow, making their use impractical for most industrial applications. Knowing where the knots are within a tree could improve the efficiency of the overall tree industry by reducing waste and improving the quality of wood-log by-products. In this paper we evaluate different deep-learning-based architectures to predict the internal knot distribution of a tree from its outer shape, something that has never been done before. Three types of techniques based on Convolutional Neural Networks (CNNs) are studied. The architectures are tested on both real and synthetic CT-scanned trees. With these experiments, we demonstrate that CNNs can be used to predict the internal knot distribution based on the external surface of the trees, the goal being to show that these inexpensive and fast methods could be used to replace CT scanners. Additionally, we look into the performance of several off-the-shelf object detectors to detect knots inside CT-scanned images. This method is used to autonomously label part of our real CT-scanned trees, alleviating the need to manually segment the whole of the images.

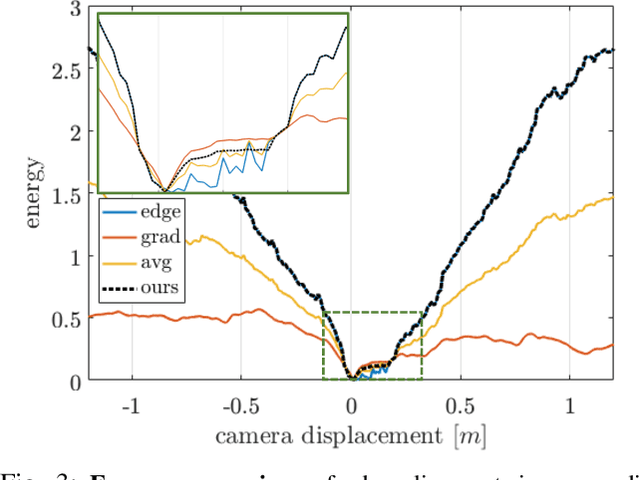

Robust Semi-Direct Monocular Visual Odometry Using Edge and Illumination-Robust Cost

Sep 25, 2019

Abstract:In this work, we propose a monocular semi-direct visual odometry framework, which is capable of exploiting the best attributes of edge features and local photometric information for illumination-robust camera motion estimation and scene reconstruction. In the tracking layer, the edge alignment error and image gradient error are jointly optimized through a convergence-preserved reweighting strategy, which not only preserves the property of illumination invariance but also leads to a larger convergence basin and higher tracking accuracy compared with individual approaches. In the mapping layer, a fast probabilistic 1D search strategy is proposed to locate the best photometrically matched point along all geometrically possible edges, which enables real-time edge point correspondence generation using merely high-frequency components of the image. The resultant reprojection error is then used to substitute edge alignment error for joint optimization in local bundle adjustment, avoiding the partial observability issue of monocular edge mapping as well as improving the stability of optimization. We present extensive analysis and evaluation of our proposed system on synthetic and real-world benchmark datasets under the influence of illumination changes and large camera motions, where our proposed system outperforms current state-of-the-art algorithms.

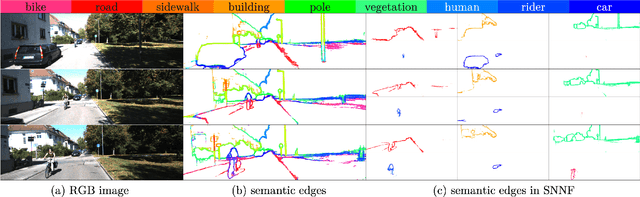

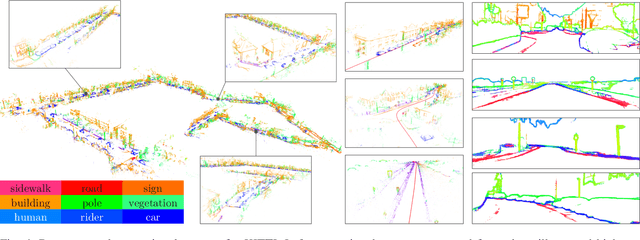

Semantic Nearest Neighbor Fields Monocular Edge Visual-Odometry

Apr 01, 2019

Abstract:Recent advances in deep learning for edge detection and segmentation open up a new path for semantic-edge-based ego-motion estimation. In this work, we propose a robust monocular visual odometry (VO) framework using category-aware semantic edges. It can reconstruct large-scale semantic maps in challenging outdoor environments. The core of our approach is a semantic nearest neighbor field that facilitates a robust data association of edges across frames using semantics. This significantly enlarges the convergence radius during tracking phases. The proposed edge registration method can be easily integrated into direct VO frameworks to estimate photometrically, geometrically, and semantically consistent camera motions. Different types of edges are evaluated and extensive experiments demonstrate that our proposed system outperforms state-of-the-art indirect, direct, and semantic monocular VO systems.
