Cedric Le Gentil

Autonomous Systems Lab, ETH Zurich, Switzerland; Centre for Autonomous Systems, School of Mechanical and Mechatronic Engineering, University of Technology Sydney, Sydney, New South Wales, Australia

Revisiting Continuous-Time Trajectory Estimation via Gaussian Processes and the Magnus Expansion

Jan 06, 2026

Abstract:Continuous-time state estimation has been shown to be an effective means of (i) handling asynchronous and high-rate measurements, (ii) introducing smoothness to the estimate, (iii) post hoc querying the estimate at times other than those of the measurements, and (iv) addressing certain observability issues related to scanning-while-moving sensors. A popular means of representing the trajectory in continuous time is via a Gaussian process (GP) prior, with the prior's mean and covariance functions generated by a linear time-varying (LTV) stochastic differential equation (SDE) driven by white noise. When the state comprises elements of Lie groups, previous works have resorted to a patchwork of local GPs each with a linear time-invariant SDE kernel, which while effective in practice, lacks theoretical elegance. Here we revisit the full LTV GP approach to continuous-time trajectory estimation, deriving a global GP prior on Lie groups via the Magnus expansion, which offers a more elegant and general solution. We provide a numerical comparison between the two approaches and discuss their relative merits.

Towards Efficient Occupancy Mapping via Gaussian Process Latent Field Shaping

Jun 16, 2025

Abstract:Occupancy mapping has been a key enabler of mobile robotics. Originally based on a discrete grid representation, occupancy mapping has evolved towards continuous representations that can predict the occupancy status at any location and account for occupancy correlations between neighbouring areas. Gaussian Process (GP) approaches treat this task as a binary classification problem using observations of both occupied and free space. Conceptually, a GP latent field is passed through a logistic function to obtain the output class without actually manipulating the GP latent field. In this work, we propose to act directly on the latent function to efficiently integrate free space information as a prior based on the shape of the sensor's field-of-view. A major difference with existing methods is the change in the classification problem, as we distinguish between free and unknown space. The 'occupied' area is the infinitesimally thin location where the class transitions from free to unknown. We demonstrate in simulated environments that our approach is sound and leads to competitive reconstruction accuracy.

Do We Still Need to Work on Odometry for Autonomous Driving?

May 07, 2025

Abstract:Over the past decades, a tremendous amount of work has addressed the topic of ego-motion estimation of moving platforms based on various proprioceptive and exteroceptive sensors. At the cost of ever-increasing computational load and sensor complexity, odometry algorithms have reached impressive levels of accuracy with minimal drift in various conditions. In this paper, we question the need for more research on odometry for autonomous driving by assessing the accuracy of one of the simplest algorithms: the direct integration of wheel encoder data and yaw rate measurements from a gyroscope. We denote this algorithm as Odometer-Gyroscope (OG) odometry. This work shows that OG odometry can outperform current state-of-the-art radar-inertial SE(2) odometry for a fraction of the computational cost in most scenarios. For example, the OG odometry is on top of the Boreas leaderboard with a relative translation error of 0.20%, while the second-best method displays an error of 0.26%. Lidar-inertial approaches can provide more accurate estimates, but the computational load is three orders of magnitude higher than the OG odometry. To further the analysis, we have pushed the limits of the OG odometry by purposely violating its fundamental no-slip assumption using data collected during a heavy snowstorm with different driving behaviours. Our conclusion shows that a significant amount of slippage is required to result in non-satisfactory pose estimates from the OG odometry.
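The direct integration described in this abstract can be illustrated with a minimal planar dead-reckoning sketch. It is not the authors' implementation: the function name `integrate_og`, the `(dt, speed, yaw_rate)` sample layout, and the midpoint-heading update are assumptions made here for illustration, and the sketch ignores sensor biases, calibration, and time synchronisation.

```python
import math


def integrate_og(samples, x=0.0, y=0.0, yaw=0.0):
    """Dead-reckon a planar pose from speed and yaw-rate samples.

    `samples` is an iterable of (dt, speed, yaw_rate) tuples, where `speed`
    would come from a wheel encoder and `yaw_rate` from a gyroscope.
    This layout is a hypothetical choice made for this sketch.
    """
    for dt, speed, yaw_rate in samples:
        # Use the heading at the middle of the interval so that the
        # rotation during the step is accounted for to first order.
        yaw_mid = yaw + 0.5 * yaw_rate * dt
        x += speed * dt * math.cos(yaw_mid)
        y += speed * dt * math.sin(yaw_mid)
        yaw += yaw_rate * dt
    return x, y, yaw


if __name__ == "__main__":
    # Constant speed of 1 m/s and yaw rate of 1 rad/s: a quarter turn on a
    # unit circle should end close to x = 1, y = 1, yaw = pi/2.
    n = 1000
    dt = (math.pi / 2) / n
    print(integrate_og([(dt, 1.0, 1.0)] * n))
```

The sketch relies on the same no-slip assumption the abstract mentions: wheel speed is taken to be the true forward speed of the vehicle.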

DRO: Doppler-Aware Direct Radar Odometry

Apr 29, 2025

Abstract:A renaissance in radar-based sensing for mobile robotic applications is underway. Compared to cameras or lidars, millimetre-wave radars have the ability to 'see' through thin walls, vegetation, and adverse weather conditions such as heavy rain, fog, snow, and dust. In this paper, we propose a novel SE(2) odometry approach for spinning frequency-modulated continuous-wave radars. Our method performs scan-to-local-map registration of the incoming radar data in a direct manner using all the radar intensity information without the need for feature or point cloud extraction. The method performs locally continuous trajectory estimation and accounts for both motion and Doppler distortion of the radar scans. If the radar possesses a specific frequency modulation pattern that makes radial Doppler velocities observable, an additional Doppler-based constraint is formulated to improve the velocity estimate and enable odometry in geometrically feature-deprived scenarios (e.g., featureless tunnels). Our method has been validated on over 250 km of on-road data sourced from public datasets (Boreas and MulRan) and collected using our automotive platform. With the aid of a gyroscope, it outperforms state-of-the-art methods and achieves an average relative translation error of 0.26% on the Boreas leaderboard. When using data with the appropriate Doppler-enabling frequency modulation pattern, the translation error is reduced to 0.18% in similar environments. We also benchmarked our algorithm using 1.5 hours of data collected with a mobile robot in off-road environments with various levels of structure to demonstrate its versatility. Our real-time implementation is publicly available: https://github.com/utiasASRL/dro.

Gaussian Process Distance Fields Obstacle and Ground Constraints for Safe Navigation

Oct 23, 2024

Abstract:Navigating cluttered environments is a challenging task for any mobile system. Existing approaches for ground-based mobile systems primarily focus on small wheeled robots, which face minimal constraints with overhanging obstacles and cannot manage steps or stairs, making the problem effectively 2D. However, navigation for legged robots (or even humans) has to consider an extra dimension. This paper proposes a tailored scene representation coupled with an advanced trajectory optimisation algorithm to enable safe navigation. Our 3D navigation approach is suitable for any ground-based mobile robot, whether wheeled or legged, as well as for human assistance. Given a 3D point cloud of the scene and the segmentation of the ground and non-ground points, we formulate two Gaussian Process distance fields to ensure a collision-free path and maintain distance to the ground constraints. Our method adeptly handles uneven terrain, steps, and overhanging objects through an innovative use of a quadtree structure, constructing a multi-resolution map of the free space and its connectivity graph based on a 2D projection of the relevant scene. Evaluations with both synthetic and real-world datasets demonstrate that this approach provides safe and smooth paths, accommodating a wide range of ground-based mobile systems.

Real-Time Truly-Coupled Lidar-Inertial Motion Correction and Spatiotemporal Dynamic Object Detection

Oct 07, 2024

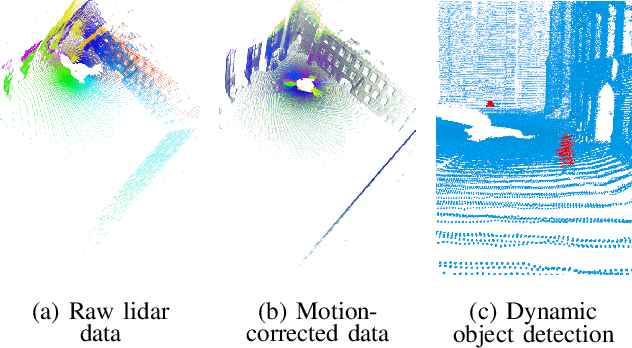

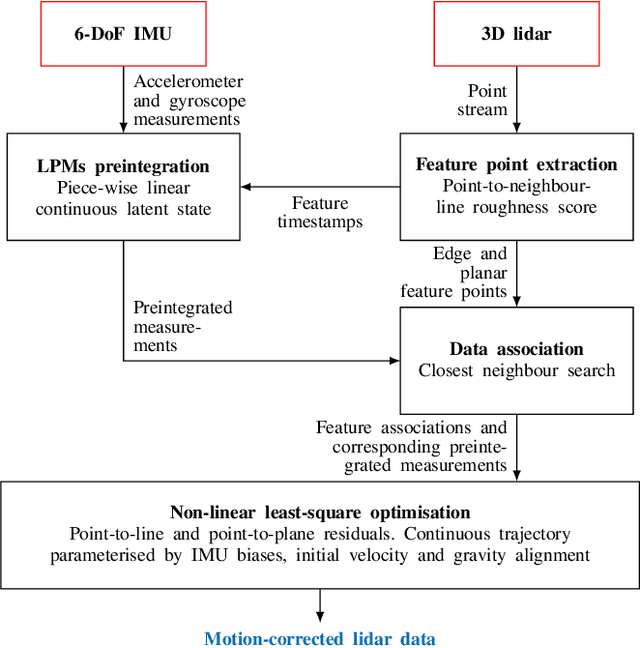

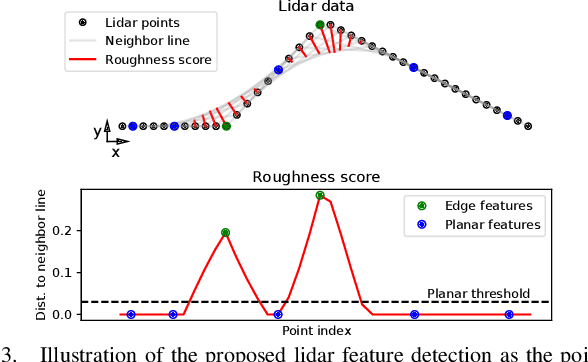

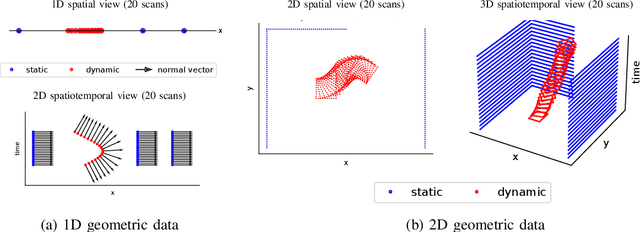

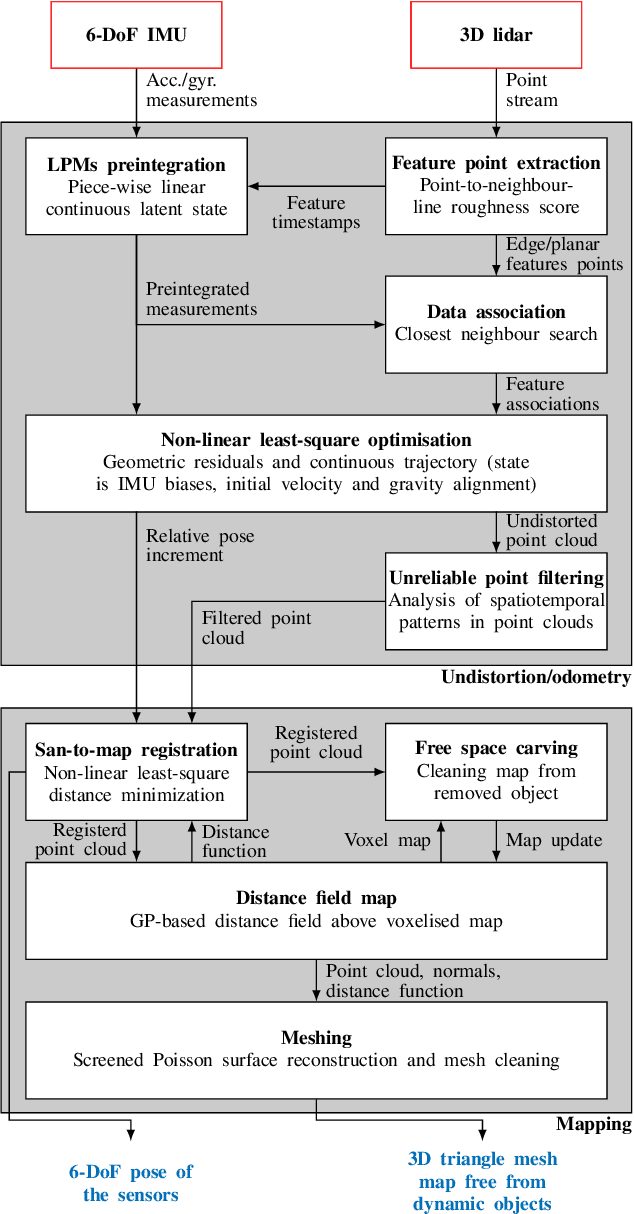

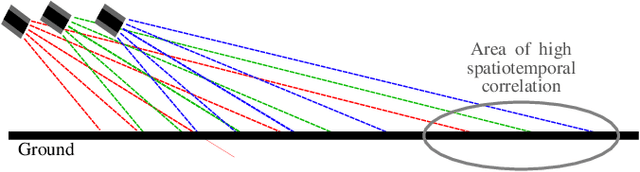

Abstract:Over the past decade, lidars have become a cornerstone of robotics state estimation and perception thanks to their ability to provide accurate geometric information about their surroundings in the form of 3D scans. Unfortunately, most current lidars do not take snapshots of the environment but sweep the environment over a period of time (typically around 100 ms). Such a rolling-shutter-like mechanism introduces motion distortion into the collected lidar scan, thus hindering downstream perception applications. In this paper, we present a novel method for motion distortion correction of lidar data by tightly coupling lidar with Inertial Measurement Unit (IMU) data. The motivation of this work is a map-free dynamic object detection based on lidar. The proposed lidar data undistortion method relies on continuous preintegration of IMU measurements that allows parameterising the sensors' continuous 6-DoF trajectory using solely eleven discrete state variables (biases, initial velocity, and gravity direction). The undistortion consists of feature-based distance minimisation of point-to-line and point-to-plane residuals in a non-linear least-squares formulation. Given undistorted geometric data over a short temporal window, the proposed pipeline computes the spatiotemporal normal vector of each of the lidar points. The temporal component of the normals is a proxy for the corresponding point's velocity, therefore allowing for learning-free dynamic object classification without the need for registration in a global reference frame. We demonstrate the soundness of the proposed method and its different components using public datasets and compare them with state-of-the-art lidar-inertial state estimation and dynamic object detection algorithms.

2FAST-2LAMAA: A Lidar-Inertial Localisation and Mapping Framework for Non-Static Environments

Oct 07, 2024

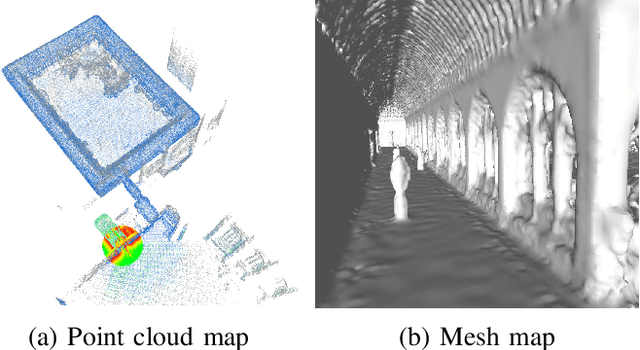

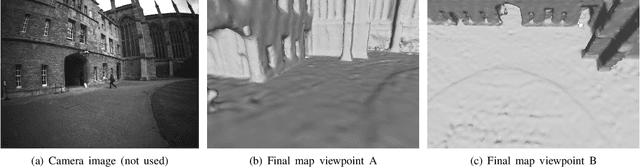

Abstract:This document presents a framework for lidar-inertial localisation and mapping named 2Fast-2Lamaa. The method revolves around two main steps: the inertial-aided undistortion of the lidar data and the scan-to-map registration using a distance-field representation of the environment. The initialisation-free undistortion uses inertial data to constrain the continuous trajectory of the sensor during the lidar scan. The eleven DoFs that fully characterise the trajectory are estimated by minimising lidar point-to-line and point-to-plane distances in a non-linear least-squares formulation. The registration uses a map that provides a distance field for the environment based on Gaussian Process regression. The pose of an undistorted lidar scan is optimised to minimise the distance field queries of its points with respect to the map. After registration, the new geometric information is efficiently integrated into the map. The soundness of 2Fast-2Lamaa is demonstrated over several datasets (qualitative evaluation only). The real-time implementation is made publicly available at https://github.com/UTS-RI/2fast2lamaa.

Safe Bubble Cover for Motion Planning on Distance Fields

Aug 23, 2024

Abstract:We consider the problem of planning collision-free trajectories on distance fields. Our key observation is that querying a distance field at one configuration reveals a region of safe space whose radius is given by the distance value, obviating the need for additional collision checking within the safe region. We refer to such regions as safe bubbles, and show that safe bubbles can be obtained from any Lipschitz-continuous safety constraint. Inspired by sampling-based planning algorithms, we present three algorithms for constructing a safe bubble cover of free space, named bubble roadmap (BRM), rapidly exploring bubble graph (RBG), and expansive bubble graph (EBG). The bubble sampling algorithms are combined with a hierarchical planning method that first computes a discrete path of bubbles, followed by a continuous path within the bubbles computed via convex optimization. Experimental results show that the bubble-based methods yield up to 5-10 times cost reduction relative to conventional baselines while simultaneously reducing computational efforts by orders of magnitude.
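The key observation in this abstract, that a single distance query certifies a whole ball of free space, can be sketched in a few lines. This is only an illustration of the safe-bubble idea, not the BRM, RBG, or EBG algorithms from the paper. The scene (`OBSTACLES`), the analytic `distance` function, and the helper names `safe_bubble` and `in_bubble` are all hypothetical and introduced here for the example.

```python
import math

# Hypothetical 2D scene: circular obstacles given as (cx, cy, radius).
OBSTACLES = [(2.0, 0.0, 0.5), (0.0, 3.0, 1.0)]


def distance(p):
    """Euclidean distance from point p to the nearest obstacle surface.

    This function is 1-Lipschitz: moving p by a distance r changes the
    returned value by at most r.
    """
    return min(math.hypot(p[0] - cx, p[1] - cy) - r for cx, cy, r in OBSTACLES)


def safe_bubble(p):
    """Return (centre, radius) of the collision-free ball around p."""
    d = distance(p)
    if d <= 0.0:
        raise ValueError("query point is not in free space")
    return (p, d)


def in_bubble(bubble, q):
    """True if q lies inside the bubble, so no further collision check is needed."""
    (cx, cy), radius = bubble
    return math.hypot(q[0] - cx, q[1] - cy) <= radius


if __name__ == "__main__":
    bubble = safe_bubble((0.0, 0.0))
    print(bubble)
    print(in_bubble(bubble, (1.0, 0.5)), in_bubble(bubble, (2.0, 0.0)))
```

Because the distance function is 1-Lipschitz, every point inside the returned ball has a non-negative distance to the obstacles, which is why a planner can skip collision checks there.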

Towards Robust Perception for Assistive Robotics: An RGB-Event-LiDAR Dataset and Multi-Modal Detection Pipeline

Aug 23, 2024

Abstract:The increasing adoption of human-robot interaction presents opportunities for technology to positively impact lives, particularly those with visual impairments, through applications such as guide-dog-like assistive robotics. We present a pipeline exploring the perception and "intelligent disobedience" required by such a system. A dataset of two people moving in and out of view has been prepared to compare RGB-based and event-based multi-modal dynamic object detection using LiDAR data for 3D position localisation. Our analysis highlights challenges in accurate 3D localisation using 2D image-LiDAR fusion, indicating the need for further refinement. Compared to the performance of the frame-based detection algorithm utilised (YOLOv4), current cutting-edge event-based detection models appear limited to contextual scenarios, such as for automotive platforms. This is highlighted by weak precision and recall over varying confidence and Intersection over Union (IoU) thresholds when using frame-based detections as a ground truth. Therefore, we have publicly released this dataset to the community, containing RGB, event, point cloud and Inertial Measurement Unit (IMU) data along with ground truth poses for the two people in the scene to fill a gap in the current landscape of publicly available datasets and provide a means to assist in the development of safer and more robust algorithms in the future: https://uts-ri.github.io/revel/.

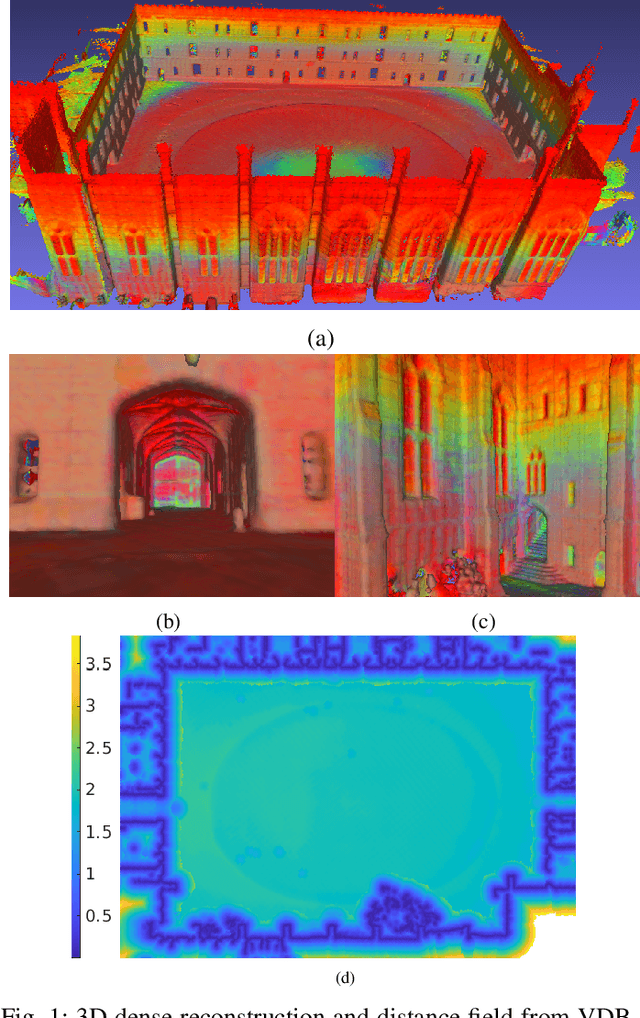

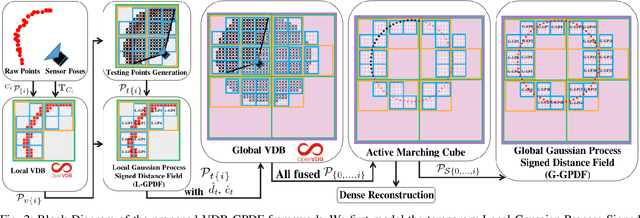

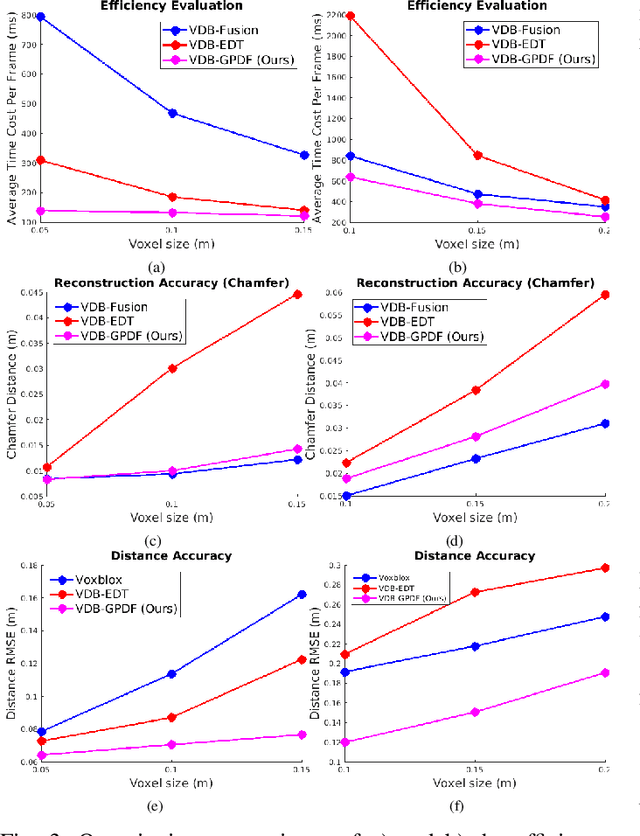

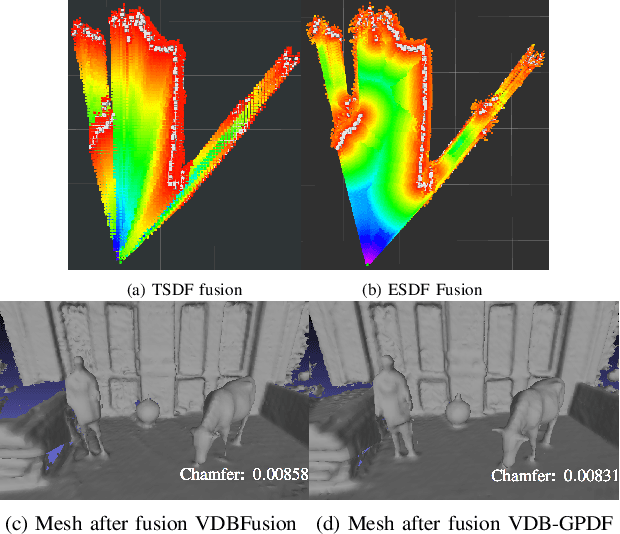

VDB-GPDF: Online Gaussian Process Distance Field with VDB Structure

Jul 12, 2024

Abstract:Robots reason about the environment through dedicated representations. Popular choices for dense representations exploit Truncated Signed Distance Functions (TSDF) and Octree data structures. However, TSDF is a projective signed distance obtained directly from depth measurements that overestimates the Euclidean distance. Octrees, despite being memory efficient, require tree traversal and can lead to increased runtime in large scenarios. Other representations based on Gaussian Process (GP) distance fields are appealing due to their probabilistic and continuous nature, but the computational complexity is a concern. In this paper, we present an online efficient mapping framework that seamlessly couples GP distance fields and the fast-access VDB data structure. This framework incrementally builds the Euclidean distance field and fuses other surface properties, like intensity or colour, into a global scene representation that can cater for large-scale scenarios. The key aspect is a latent Local GP Signed Distance Field (L-GPDF) contained in a local VDB structure that allows fast queries of the Euclidean distance, surface properties and their uncertainties for arbitrary points in the field of view. Probabilistic fusion is then performed by merging the inferred values of these points into a global VDB structure that is efficiently maintained over time. After fusion, the surface mesh is recovered, and a global GP Signed Distance Field (G-GPDF) is generated and made available for downstream applications to query accurate distance and gradients. A comparison with the state-of-the-art frameworks shows superior efficiency and accuracy of the inferred distance field and comparable reconstruction performance. The accompanying code will be publicly available. https://github.com/UTS-RI/VDB_GPDF
