Gavin Paul

Overcoming Dynamic Environments: A Hybrid Approach to Motion Planning for Manipulators

Apr 09, 2025

Abstract: Robotic manipulators operating in dynamic and uncertain environments require efficient motion planning to navigate obstacles while maintaining smooth trajectories. Velocity Potential Field (VPF) planners offer real-time adaptability but struggle with complex constraints and local minima, leading to suboptimal performance in cluttered spaces. Traditional approaches rely on pre-planned trajectories, but frequent recomputation is computationally expensive. This study proposes a hybrid motion planning approach, integrating an improved VPF with a Sampling-Based Motion Planner (SBMP). The SBMP ensures optimal path generation, while VPF provides real-time adaptability to dynamic obstacles. This combination enhances motion planning efficiency, stability, and computational feasibility, addressing key challenges in uncertain environments such as warehousing and surgical robotics.

Towards Robust Perception for Assistive Robotics: An RGB-Event-LiDAR Dataset and Multi-Modal Detection Pipeline

Aug 23, 2024

Abstract: The increasing adoption of human-robot interaction presents opportunities for technology to positively impact lives, particularly those of people with visual impairments, through applications such as guide-dog-like assistive robotics. We present a pipeline exploring the perception and "intelligent disobedience" required by such a system. A dataset of two people moving in and out of view has been prepared to compare RGB-based and event-based multi-modal dynamic object detection using LiDAR data for 3D position localisation. Our analysis highlights challenges in accurate 3D localisation using 2D image-LiDAR fusion, indicating the need for further refinement. Compared to the performance of the frame-based detection algorithm utilised (YOLOv4), current cutting-edge event-based detection models appear limited to contextual scenarios, such as for automotive platforms. This is highlighted by weak precision and recall over varying confidence and Intersection over Union (IoU) thresholds when using frame-based detections as a ground truth. Therefore, we have publicly released this dataset to the community, containing RGB, event, point cloud and Inertial Measurement Unit (IMU) data along with ground truth poses for the two people in the scene, to fill a gap in the current landscape of publicly available datasets and provide a means to assist in the development of safer and more robust algorithms in the future: https://uts-ri.github.io/revel/.
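As a reading aid for the evaluation described in this abstract, the following is a minimal sketch of how precision and recall can be computed at a given confidence and IoU threshold when one detector's boxes are treated as the reference. It is not the authors' evaluation code: the function names, the `(x_min, y_min, x_max, y_max)` box format, the greedy one-to-one matching, and the default thresholds are all assumptions made for illustration.

```python
def iou(box_a, box_b):
    """Intersection over Union of two axis-aligned boxes.

    Boxes are (x_min, y_min, x_max, y_max); this format is an assumption.
    """
    ix_min = max(box_a[0], box_b[0])
    iy_min = max(box_a[1], box_b[1])
    ix_max = min(box_a[2], box_b[2])
    iy_max = min(box_a[3], box_b[3])
    # Clamp at zero so non-overlapping boxes give an empty intersection.
    inter = max(0.0, ix_max - ix_min) * max(0.0, iy_max - iy_min)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def precision_recall(detections, references, iou_threshold=0.5, conf_threshold=0.5):
    """Precision and recall of `detections` against `references`.

    detections: list of (box, confidence) pairs from the detector under test.
    references: list of boxes treated as ground truth (hypothetically, the
                frame-based detections).
    Each reference box can be matched by at most one detection.
    """
    # Keep confident detections, highest confidence first.
    kept = sorted(
        (d for d in detections if d[1] >= conf_threshold),
        key=lambda d: d[1],
        reverse=True,
    )
    matched = set()
    true_positives = 0
    for box, _conf in kept:
        best_index, best_iou = None, iou_threshold
        for j, ref in enumerate(references):
            if j in matched:
                continue
            overlap = iou(box, ref)
            if overlap >= best_iou:
                best_index, best_iou = j, overlap
        if best_index is not None:
            matched.add(best_index)
            true_positives += 1
    precision = true_positives / len(kept) if kept else 0.0
    recall = true_positives / len(references) if references else 0.0
    return precision, recall
```

Sweeping `conf_threshold` and `iou_threshold` over a range of values and recording the resulting pairs gives the kind of precision and recall curves the abstract refers to.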

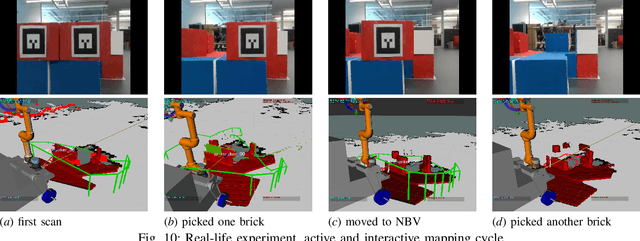

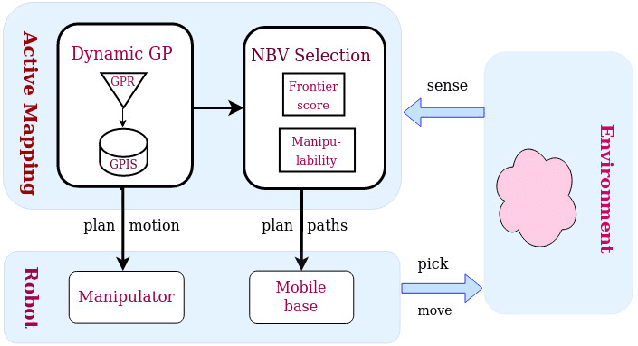

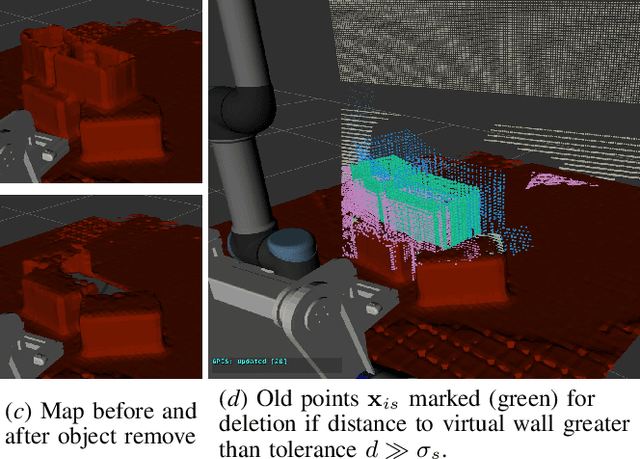

Active and Interactive Mapping with Dynamic Gaussian Process Implicit Surfaces for Mobile Manipulators

Oct 25, 2020

Abstract: In this paper, we present an interactive probabilistic framework for a mobile manipulator that moves through the environment, makes changes to it, and maps the changing scene as it goes. The framework is motivated by interactive robotic applications found in warehouses, construction sites and additive manufacturing, where a mobile robot manipulates objects in the scene. The proposed framework uses a novel dynamic Gaussian Process (GP) Implicit Surface method to incrementally build and update a scene map that reflects environment changes. The framework actively provides the next-best-view (NBV), balancing the reachability of the object to be picked against the map's information gain (IG). To prioritise visiting boundary segments over unknown regions, the IG formulation includes an uncertainty-gradient-based frontier score that exploits the GP kernel derivative. This leads to an efficient strategy that addresses the often conflicting requirements of exploring an unknown environment and exploiting it for object picking, given a limited execution horizon. We demonstrate the effectiveness of our framework with software simulation and real-life experiments.
