Fouad Sukkar

Long-Term, Store-Front Robotics: Interactive Music for Robotic Arm, Caxixi and Frame Drums

Jul 24, 2024

Abstract: This paper presents an innovative exploration into the integration of interactive robotic musicianship within a commercial retail environment, specifically through a three-week-long in-store installation featuring a UR3 robotic arm, custom-built frame drums, and an adaptive music generation system. Situated in a prominent storefront in one of the world's largest cities, this project aimed to enhance the shopping experience by creating dynamic, engaging musical interactions that respond to the store's ambient soundscape. Key contributions include the novel application of industrial robotics in artistic expression, the deployment of interactive music to enrich retail ambiance, and the demonstration of continuous robotic operation in a public setting over an extended period. Challenges such as system reliability, variation in musical output, safety in interactive contexts, and brand alignment were addressed to ensure the installation's success. The project not only showcased the technical feasibility and artistic potential of robotic musicianship in retail spaces but also offered insights into the practical implications of such integration, including system reliability, the dynamics of human-robot interaction, and the impact on store operations. This exploration opens new avenues for enhancing consumer retail experiences through the intersection of technology, music, and interactive art, suggesting a future where robotic musicianship contributes meaningfully to public and commercial spaces.

Interactive Distance Field Mapping and Planning to Enable Human-Robot Collaboration

Mar 15, 2024

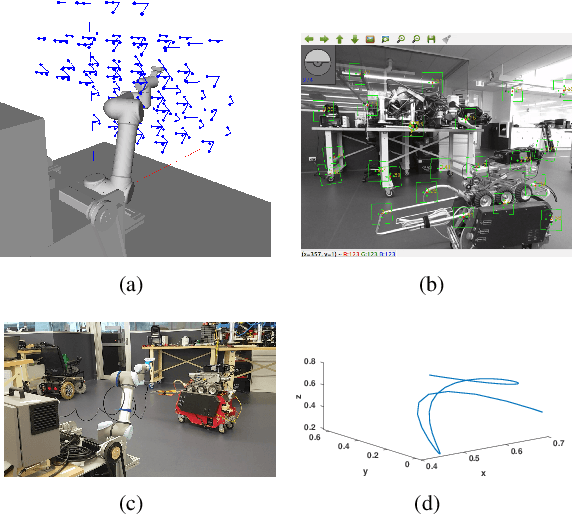

Abstract: Human-robot collaborative applications require scene representations that are kept up-to-date and facilitate safe motions in dynamic scenes. In this letter, we present an interactive distance field mapping and planning (IDMP) framework that handles dynamic objects and collision avoidance through an efficient representation. We define *interactive* mapping and planning as the process of creating and updating the representation of the scene online while simultaneously planning and adapting the robot's actions based on that representation. Given depth sensor data, our framework builds a continuous field that allows querying the distance and gradient to the closest obstacle at any required position in 3D space. The key aspect of this work is an efficient Gaussian Process field that performs incremental updates and implicitly handles dynamic objects with a simple and elegant formulation based on a temporary latent model. In terms of mapping, IDMP is able to fuse point cloud data from single and multiple sensors, query the free space at any spatial resolution, and deal with moving objects without semantics. In terms of planning, IDMP allows seamless integration with gradient-based motion planners, facilitating fast re-planning for collision-free navigation. The framework is evaluated on both real and synthetic datasets. A comparison with similar state-of-the-art frameworks shows superior performance when handling dynamic objects and comparable or better performance in the accuracy of the computed distance and gradient field. Finally, we show how the framework can be used for fast motion planning in the presence of moving objects. An accompanying video, code, and datasets are made publicly available at https://uts-ri.github.io/IDMP.

Dynamic Object Detection in Range data using Spatiotemporal Normals

Oct 20, 2023

Abstract: On the journey to enable robots to interact with the real world, where humans, animals, and unpredictable elements act as independent agents, it is crucial for robots to have the capability to detect dynamic objects. In this paper, we argue that the detection of dynamic objects can be solved by computing the spatiotemporal normals of a point cloud. In our experiments, we demonstrate that this method can be used robustly for LiDAR and depth cameras, with performance similar to the state of the art while being significantly simpler.

Guided Learning from Demonstration for Robust Transferability

Feb 08, 2023

Abstract: Learning from demonstration (LfD) has the potential to greatly increase the applicability of robotic manipulators in modern industrial applications. Recent progress in LfD methods has put more emphasis on learning robustness than on guiding the demonstration itself in order to improve robustness. The latter is particularly important to consider when the target system reproducing the motion is structurally different from the demonstration system, as some demonstrated motions may not be reproducible. In light of this, this paper introduces a new guided learning from demonstration paradigm where an interactive graphical user interface (GUI) guides the user during demonstration, preventing them from demonstrating non-reproducible motions. The key aspect of our approach is determining the space of reproducible motions based on a motion planning framework which finds regions in the task space where trajectories are guaranteed to be of bounded length. We evaluate our method on two different setups with a six-degree-of-freedom (DOF) UR5 as the target system. First, our method is validated using a seven-DOF Sawyer as the demonstration system. Then an extensive user study is carried out where several participants are asked to demonstrate, with and without guidance, a mock weld task using a handheld tool tracked by a VICON system. With guidance, users were always able to carry out the task successfully, compared with only 44% of the time without guidance.

Continuous Planning for Inertial-Aided Systems

Sep 12, 2022

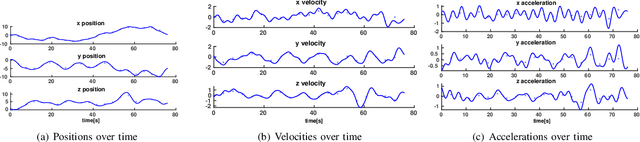

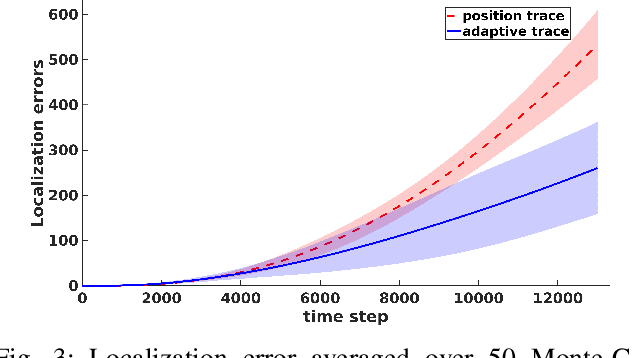

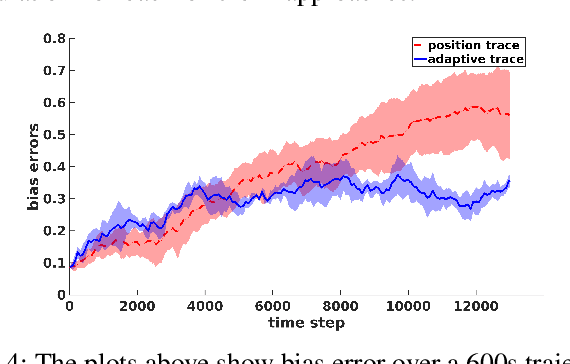

Abstract: Inertial-aided systems require continuous motion excitation, among other reasons, to characterize the measurement biases that enable the accurate integration required by localization frameworks. This paper proposes the use of informative path planning to find the best trajectory for minimizing the uncertainty of IMU biases and an adaptive traces method to guide the planner towards trajectories which aid convergence. The key contribution is a novel regression method based on a Gaussian Process (GP) to enforce continuity and differentiability between waypoints from a variant of the RRT* planning algorithm. We employ linear operators applied to the GP kernel function to infer not only continuous position trajectories, but also velocities and accelerations. The use of linear functionals enables velocity and acceleration constraints given by the IMU measurements to be imposed on the position GP model. The results from both simulation and real-world experiments show that planning for IMU bias convergence helps minimize localization errors in state estimation frameworks.

Motion planning in task space with Gromov-Hausdorff approximations

Sep 11, 2022

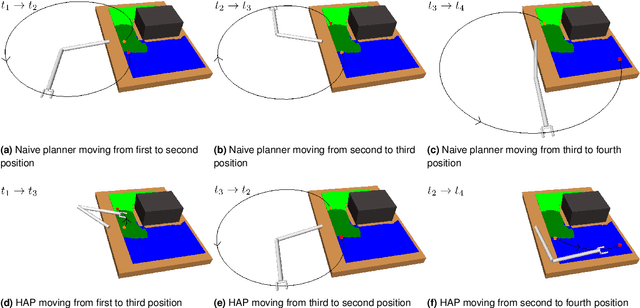

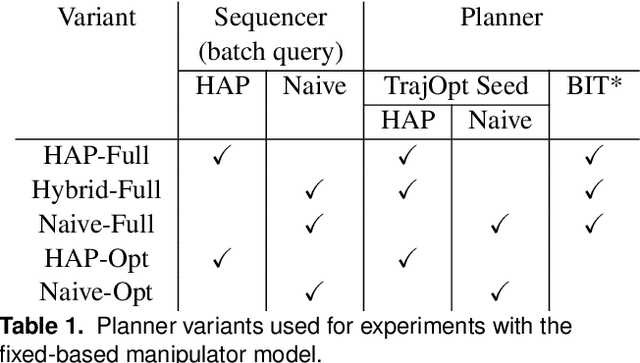

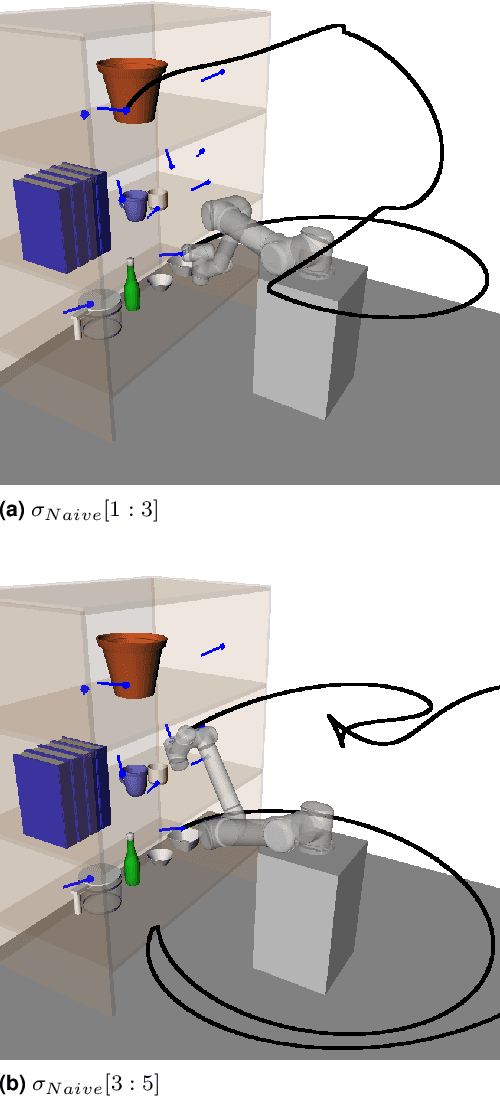

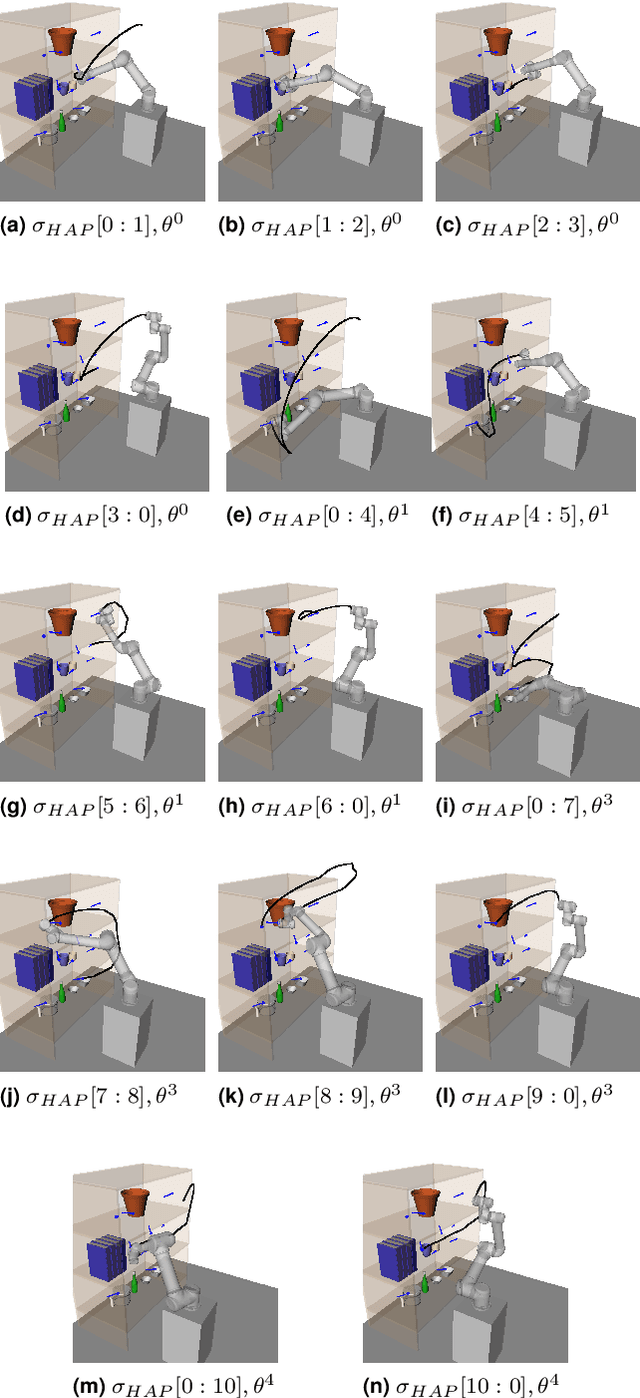

Abstract: Applications of industrial robotic manipulators such as cobots can require efficient online motion planning in environments that have a combination of static and non-static obstacles. Existing general-purpose planning methods often produce poor-quality solutions when available computation time is restricted, or fail to produce a solution entirely. We propose a new motion planning framework designed to operate in a user-defined task space, as opposed to the robot's workspace, that intentionally trades off workspace generality for planning and execution time efficiency. Our framework automatically constructs trajectory libraries that are queried online, similar to previous methods that exploit offline computation. Importantly, our method also offers bounded suboptimality guarantees on trajectory length. The key idea is to establish approximate isometries known as $\epsilon$-Gromov-Hausdorff approximations such that points that are close by in task space are also close in configuration space. These bounding relations further imply that trajectories can be smoothly concatenated, which enables our framework to address batch-query scenarios where the objective is to find a minimum-length sequence of trajectories that visit an unordered set of goals. We evaluate our framework in simulation with several kinematic configurations, including a manipulator mounted to a mobile base. Results demonstrate that our method achieves feasible real-time performance for practical applications and suggest interesting opportunities for extending its capabilities.
