Real-Time Truly-Coupled Lidar-Inertial Motion Correction and Spatiotemporal Dynamic Object Detection


Oct 07, 2024

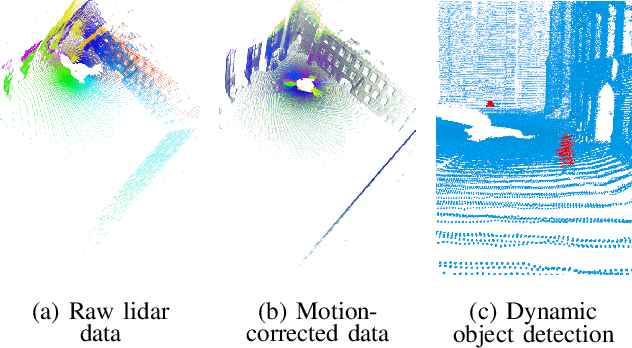

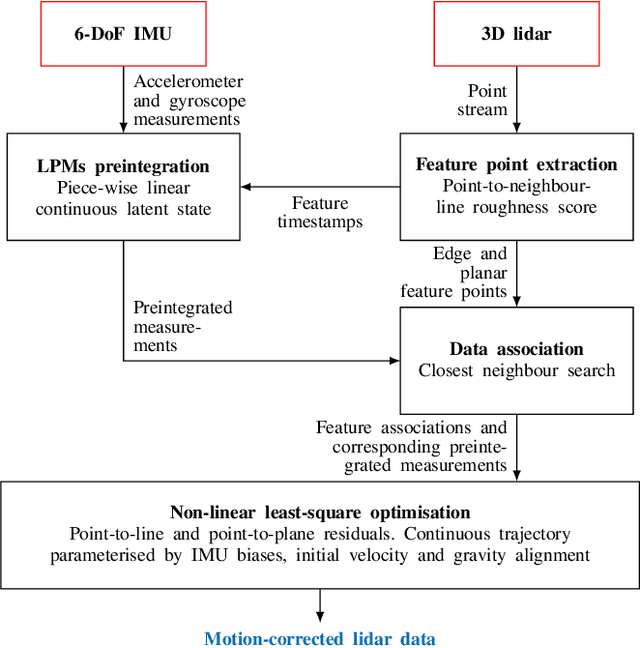

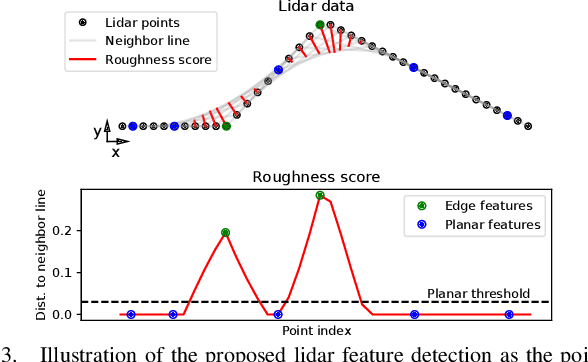

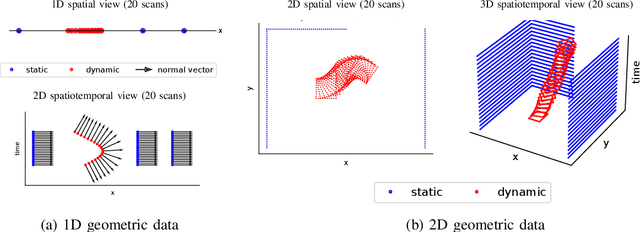

Over the past decade, lidars have become a cornerstone of robotics state estimation and perception thanks to their ability to provide accurate geometric information about their surroundings in the form of 3D scans. Unfortunately, most current lidars do not take snapshots of the environment but sweep it over a period of time (typically around 100 ms). Such a rolling-shutter-like mechanism introduces motion distortion into the collected lidar scan, thus hindering downstream perception applications. In this paper, we present a novel method for motion distortion correction of lidar data by tightly coupling lidar with Inertial Measurement Unit (IMU) data. The motivation for this work is map-free, lidar-based dynamic object detection. The proposed lidar data undistortion method relies on continuous preintegration of IMU measurements, which allows parameterising the sensors' continuous 6-DoF trajectory using only eleven discrete state variables (biases, initial velocity, and gravity direction). The undistortion consists of feature-based distance minimisation of point-to-line and point-to-plane residuals in a non-linear least-squares formulation. Given undistorted geometric data over a short temporal window, the proposed pipeline computes the spatiotemporal normal vector of each lidar point. The temporal component of each normal is a proxy for the corresponding point's velocity, therefore allowing for learning-free dynamic object classification without the need for registration in a global reference frame. We demonstrate the soundness of the proposed method and its different components using public datasets and compare them with state-of-the-art lidar-inertial state estimation and dynamic object detection algorithms.
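
To illustrate why the temporal component of a spatiotemporal normal can act as a velocity proxy, the following is a minimal sketch and not the authors' implementation. It assumes a plain PCA hyperplane fit over (x, y, z, t) samples of a local surface patch; the function names, the synthetic patch, and the 0.5 m/s threshold are all hypothetical. For a planar patch with spatial normal n_s moving at velocity v, the fitted 4D normal is proportional to (n_s, -n_s·v), so the velocity component along the surface normal can be read off directly.

```python
import numpy as np

def spatiotemporal_normal(points_xyzt):
    """Fit a hyperplane to an (N, 4) array of [x, y, z, t] samples
    and return its unit 4D normal (sign is arbitrary)."""
    centred = points_xyzt - points_xyzt.mean(axis=0)
    # The right singular vector with the smallest singular value is the
    # direction of least variance, i.e. the hyperplane normal.
    _, _, vt = np.linalg.svd(centred, full_matrices=False)
    return vt[-1]

def normal_velocity(normal_xyzt):
    """Velocity component along the spatial normal, in m/s.
    Invariant to the sign ambiguity of the fitted normal."""
    n_s, n_t = normal_xyzt[:3], normal_xyzt[3]
    return -n_t * n_s / np.dot(n_s, n_s)

def make_plane_patch(speed, n=200, seed=0):
    """Synthetic patch (assumption): plane x = 5 + speed * t,
    sampled over a 0.3 s window."""
    rng = np.random.default_rng(seed)
    t = rng.uniform(0.0, 0.3, n)
    y = rng.uniform(-1.0, 1.0, n)
    z = rng.uniform(-1.0, 1.0, n)
    x = 5.0 + speed * t
    return np.column_stack([x, y, z, t])

static_v = normal_velocity(spatiotemporal_normal(make_plane_patch(0.0)))
moving_v = normal_velocity(spatiotemporal_normal(make_plane_patch(2.0)))

# Hypothetical threshold: flag the patch as dynamic above 0.5 m/s.
is_dynamic = np.linalg.norm(moving_v) > 0.5
```

Only the velocity component along the surface normal is observable from such a fit; motion tangential to a flat patch leaves the spatiotemporal normal unchanged.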
