Riccardo Giubilato

The S3LI Vulcano Dataset: A Dataset for Multi-Modal SLAM in Unstructured Planetary Environments

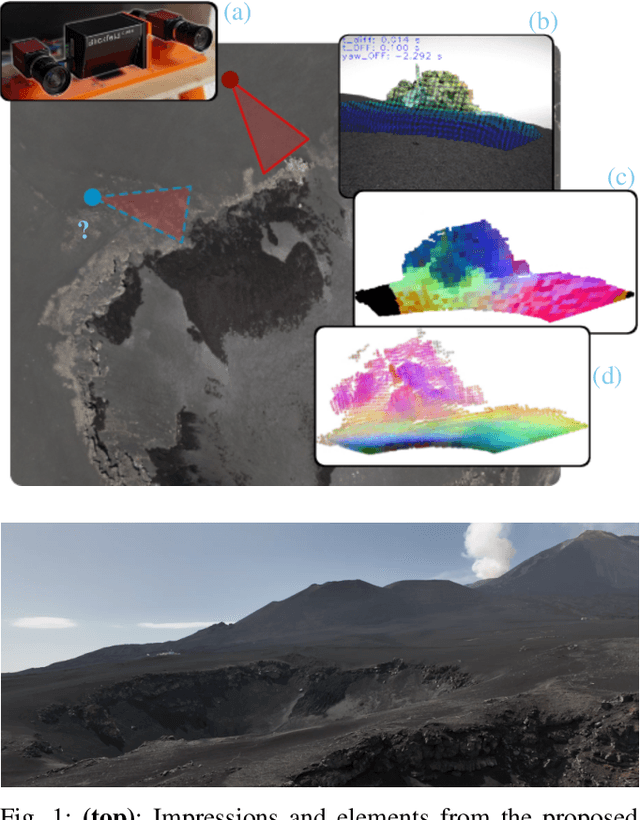

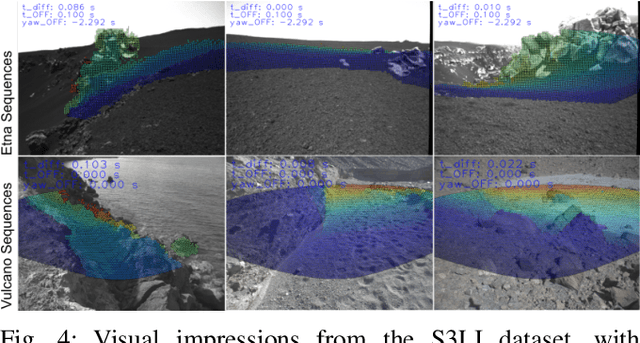

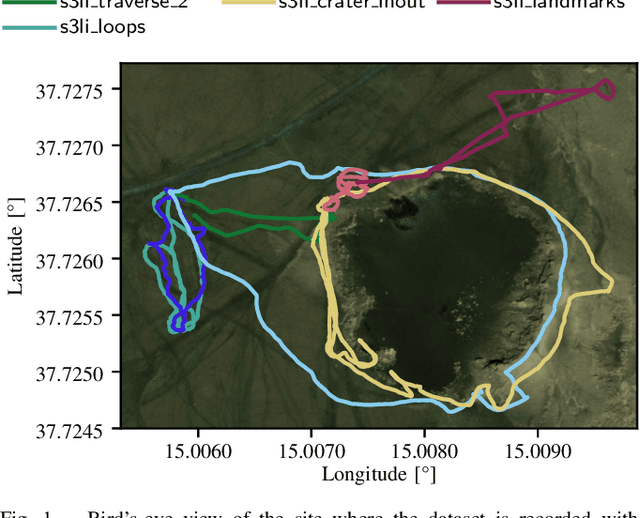

Jan 27, 2026

Abstract:We release the S3LI Vulcano dataset, a multi-modal dataset for the development and benchmarking of Simultaneous Localization and Mapping (SLAM) and place recognition algorithms that rely on visual and LiDAR modalities. Several sequences are recorded on the volcanic island of Vulcano, one of the Aeolian Islands in Sicily, Italy. The sequences provide users with data from a variety of environments, textures and terrains, including basaltic or iron-rich rocks, geological formations from old lava channels, as well as dry vegetation and water. The data (rmc.dlr.de/s3li_dataset) is accompanied by an open source toolkit (github.com/DLR-RM/s3li-toolkit) providing tools for generating ground truth poses as well as for preparing labelled samples for place recognition tasks.

Multi-modal Loop Closure Detection with Foundation Models in Severely Unstructured Environments

Nov 07, 2025

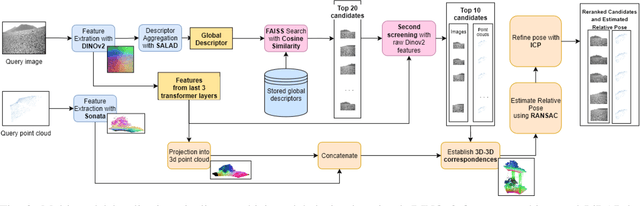

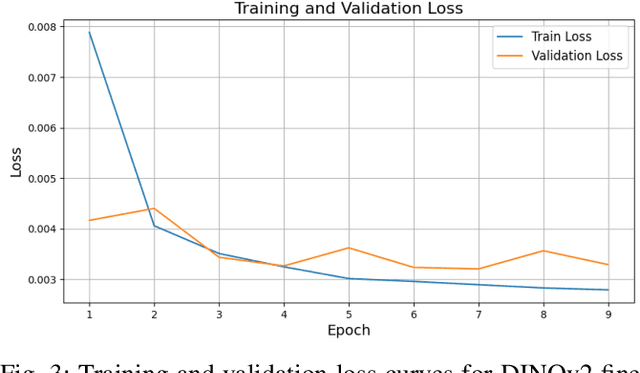

Abstract:Robust loop closure detection is a critical component of Simultaneous Localization and Mapping (SLAM) algorithms in GNSS-denied environments, such as in the context of planetary exploration. In these settings, visual place recognition often fails due to aliasing and weak textures, while LiDAR-based methods suffer from sparsity and ambiguity. This paper presents MPRF, a multimodal pipeline that leverages transformer-based foundation models for both vision and LiDAR modalities to achieve robust loop closure in severely unstructured environments. Unlike prior work limited to retrieval, MPRF integrates a two-stage visual retrieval strategy with explicit 6-DoF pose estimation, combining DINOv2 features with SALAD aggregation for efficient candidate screening and SONATA-based LiDAR descriptors for geometric verification. Experiments on the S3LI dataset and S3LI Vulcano dataset show that MPRF outperforms state-of-the-art retrieval methods in precision while enhancing pose estimation robustness in low-texture regions. By providing interpretable correspondences suitable for SLAM back-ends, MPRF achieves a favorable trade-off between accuracy, efficiency, and reliability, demonstrating the potential of foundation models to unify place recognition and pose estimation. Code and models will be released at github.com/DLR-RM/MPRF.

Unifying Local and Global Multimodal Features for Place Recognition in Aliased and Low-Texture Environments

Mar 20, 2024

Abstract:Perceptual aliasing and weak textures pose significant challenges to the task of place recognition, hindering the performance of Simultaneous Localization and Mapping (SLAM) systems. This paper presents a novel model, called UMF (standing for Unifying Local and Global Multimodal Features), that 1) leverages multi-modality through cross-attention blocks between vision and LiDAR features, and 2) includes a re-ranking stage that re-orders, based on local feature matching, the top-k candidates retrieved using a global representation. Our experiments, particularly on sequences captured in a planetary-analogous environment, show that UMF significantly outperforms previous baselines in those challenging aliased environments. Since our work aims to enhance the reliability of SLAM in all situations, we also explore its performance on the widely used RobotCar dataset, for broader applicability. Code and models are available at https://github.com/DLR-RM/UMF
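The retrieve-then-re-rank idea described in this abstract can be illustrated with a minimal sketch. This is not the UMF implementation: the descriptors are plain Python lists, the global score is cosine similarity, and the local score is a count of mutual nearest neighbours. The function names, the dictionary layout (`id`, `global`, `local`) and the scoring choices are all assumptions made for illustration.

```python
import math


def cosine(a, b):
    """Cosine similarity between two global descriptors."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def nearest(desc, pool):
    """Index of the descriptor in `pool` closest to `desc` (squared L2)."""
    return min(
        range(len(pool)),
        key=lambda j: sum((x - y) ** 2 for x, y in zip(desc, pool[j])),
    )


def mutual_matches(query_local, cand_local):
    """Count mutual nearest-neighbour pairs between two local descriptor sets."""
    if not query_local or not cand_local:
        return 0
    forward = [nearest(d, cand_local) for d in query_local]
    backward = [nearest(d, query_local) for d in cand_local]
    return sum(1 for i, j in enumerate(forward) if backward[j] == i)


def retrieve_and_rerank(query, database, k=3):
    """Stage 1: keep the top-k entries by global similarity.
    Stage 2: re-order those k entries by local match count."""
    top_k = sorted(
        database,
        key=lambda e: cosine(query["global"], e["global"]),
        reverse=True,
    )[:k]
    return sorted(
        top_k,
        key=lambda e: mutual_matches(query["local"], e["local"]),
        reverse=True,
    )
```

In this sketch a candidate that looks similar globally but shares few local matches drops below a candidate with strong local agreement, which is the effect a re-ranking stage is meant to have in aliased scenes.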

Challenges of SLAM in extremely unstructured environments: the DLR Planetary Stereo, Solid-State LiDAR, Inertial Dataset

Jul 14, 2022

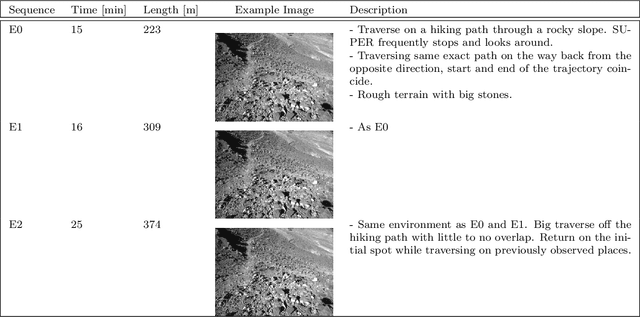

Abstract:We present the DLR Planetary Stereo, Solid-State LiDAR, Inertial (S3LI) dataset, recorded on Mt. Etna, Sicily, an environment analogous to the Moon and Mars, using a hand-held sensor suite with attributes suitable for implementation on a space-like mobile rover. The environment is characterized by challenging conditions regarding both the visual and structural appearance: severe visual aliasing poses significant limitations to the ability of visual SLAM systems to perform place recognition, while the absence of outstanding structural details, combined with the limited Field-of-View of the utilized Solid-State LiDAR sensor, challenges traditional LiDAR SLAM for the task of pose estimation using point clouds alone. With this data, which covers more than 4 kilometers of travel on soft volcanic slopes, we aim to: 1) provide a tool to expose limitations of state-of-the-art SLAM systems with respect to environments that are not present in widely available datasets and 2) motivate the development of novel localization and mapping approaches that rely efficiently on the complementary capabilities of the two sensors. The dataset is accessible at the following URL: https://rmc.dlr.de/s3li_dataset

GPGM-SLAM: a Robust SLAM System for Unstructured Planetary Environments with Gaussian Process Gradient Maps

Sep 14, 2021

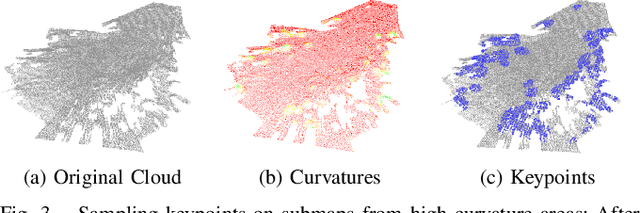

Abstract:Simultaneous Localization and Mapping (SLAM) techniques play a key role towards long-term autonomy of mobile robots due to the ability to correct localization errors and produce consistent maps of an environment over time. In contrast to urban or man-made environments, where the presence of unique objects and structures offers distinctive cues for localization, the appearance of unstructured natural environments is often ambiguous and self-similar, hindering the performance of loop closure detection. In this paper, we present an approach to improve the robustness of place recognition in the context of a submap-based stereo SLAM system based on Gaussian Process Gradient Maps (GPGMaps). GPGMaps embed a continuous representation of the gradients of the local terrain elevation by means of Gaussian Process regression and Structured Kernel Interpolation, given solely noisy elevation measurements. We leverage the image-like structure of GPGMaps to detect loop closures using traditional visual features and Bag of Words. GPGMap matching is performed as an SE(2) alignment to establish loop closure constraints within a pose graph. We evaluate the proposed pipeline on a variety of datasets recorded on Mt. Etna, Sicily and in the Moroccan desert, respectively Moon- and Mars-like environments, and we compare the localization performance with state-of-the-art approaches for visual SLAM and visual loop closure detection.
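As a rough illustration of what an SE(2) alignment between two matched maps involves, the sketch below estimates a planar rotation and translation from matched 2D keypoints in closed form (least squares). It is not the GPGM-SLAM code: the function name `estimate_se2` and the assumption that matches are already outlier-free are ours.

```python
import math


def estimate_se2(src, dst):
    """Least-squares rigid 2D transform (theta, tx, ty) mapping src onto dst.

    src, dst: equally long lists of matched (x, y) points.
    Assumes the matches contain no outliers (hypothetical simplification).
    """
    n = len(src)
    if n < 2 or n != len(dst):
        raise ValueError("need at least two matched point pairs")

    # Centroids of both point sets.
    sx = sum(p[0] for p in src) / n
    sy = sum(p[1] for p in src) / n
    dx = sum(p[0] for p in dst) / n
    dy = sum(p[1] for p in dst) / n

    # Accumulate dot and cross products of the centred points.
    s_dot = 0.0
    s_cross = 0.0
    for (x, y), (u, v) in zip(src, dst):
        ax, ay = x - sx, y - sy
        bx, by = u - dx, v - dy
        s_dot += ax * bx + ay * by
        s_cross += ax * by - ay * bx

    # Optimal rotation angle, then the translation that aligns the centroids.
    theta = math.atan2(s_cross, s_dot)
    c, s = math.cos(theta), math.sin(theta)
    tx = dx - (c * sx - s * sy)
    ty = dy - (s * sx + c * sy)
    return theta, tx, ty
```

In practice a robust wrapper such as RANSAC would usually surround an estimator like this, since feature matches between self-similar terrain maps are likely to contain outliers.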

Design of a user-friendly control system for planetary rovers with CPS feature

Jun 28, 2021

Abstract:In this paper, we present a user-friendly control system for planetary rovers, designed for low-latency surface telerobotics. Thanks to the proposed system, an operator can comfortably give commands through the control base station to a rover using commercially available off-the-shelf (COTS) joysticks or by command sequencing with interactive monitoring on the sensed map of the environment. During operations, high situational awareness is made possible thanks to 3D map visualization. The map of the environment is built on the on-board computer by processing the rover's camera images with a visual Simultaneous Localization and Mapping (SLAM) algorithm. It is transmitted via Wi-Fi and displayed on the control base station screen in near real-time. The navigation stack takes the visual SLAM data as input to build a cost map and find the minimum cost path. By interacting with the virtual map, the rover exhibits properties of a Cyber Physical System (CPS) for its self-awareness capabilities. The software architecture is based on the Robot Operating System (ROS) middleware. The system design and the preliminary field test results are shown in the paper.
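The step of finding a minimum cost path on a cost map can be sketched with Dijkstra's algorithm on a 4-connected grid. This is a generic illustration, not the navigation stack used in the paper: the grid layout, the convention that `None` marks an untraversable cell, and the function name `min_cost_path` are assumptions.

```python
import heapq


def min_cost_path(cost_map, start, goal):
    """Dijkstra on a 4-connected grid.

    cost_map: list of rows; each cell holds the cost of entering it,
              or None if the cell is untraversable (assumed convention).
    start, goal: (row, col) tuples.
    Returns (path, total_cost), or (None, inf) if the goal is unreachable.
    """
    rows, cols = len(cost_map), len(cost_map[0])
    inf = float("inf")
    dist = {start: 0.0}
    parent = {}
    heap = [(0.0, start)]

    while heap:
        d, cell = heapq.heappop(heap)
        if cell == goal:
            break
        if d > dist.get(cell, inf):
            continue  # stale queue entry
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols and cost_map[nr][nc] is not None:
                nd = d + cost_map[nr][nc]
                if nd < dist.get((nr, nc), inf):
                    dist[(nr, nc)] = nd
                    parent[(nr, nc)] = cell
                    heapq.heappush(heap, (nd, (nr, nc)))

    if goal not in dist:
        return None, inf

    # Walk back from the goal to recover the path.
    path = [goal]
    while path[-1] != start:
        path.append(parent[path[-1]])
    path.reverse()
    return path, dist[goal]
```

Here the cost of a path is the sum of the costs of the cells entered after the start cell, so high-cost cells are avoided whenever a cheaper detour exists.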

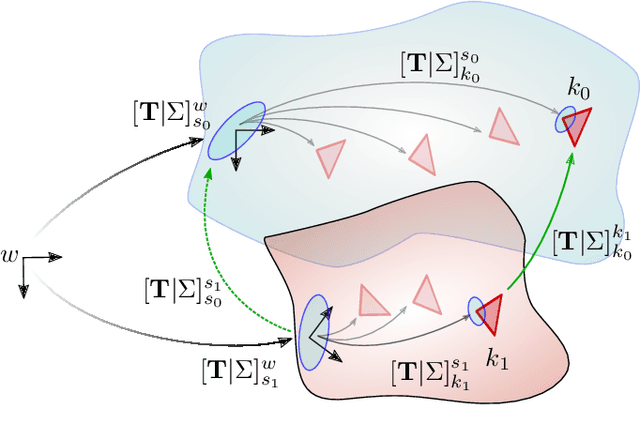

Multi-Modal Loop Closing in Unstructured Planetary Environments with Visually Enriched Submaps

May 05, 2021

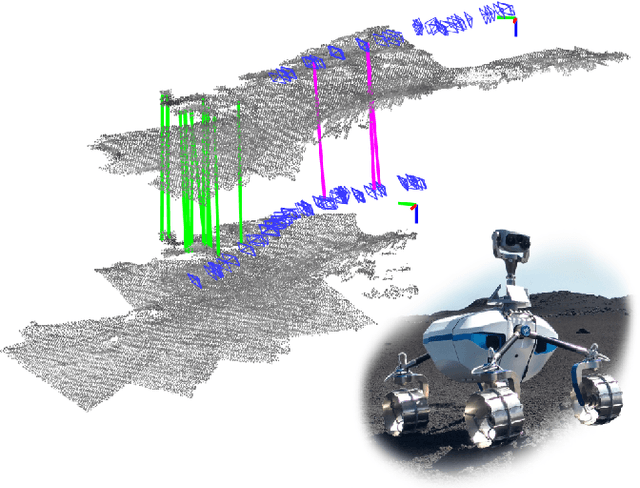

Abstract:Future planetary missions will rely on rovers that can autonomously explore and navigate in unstructured environments. An essential element is the ability to recognize places that were already visited or mapped. In this work we leverage the ability of stereo cameras to provide both visual and depth information, guiding the search and validation of loop closures from a multi-modal perspective. We propose to augment submaps, which are created by aggregating stereo point clouds, with visual keyframes. Point cloud matches are found by comparing CSHOT descriptors and validated by clustering, while visual matches are established by comparing keyframes using Bag-of-Words (BoW) and ORB descriptors. The relative transformations resulting from both keyframe and point cloud matches are then fused to provide pose constraints between submaps in our graph-based SLAM framework. Using the LRU rover, we performed several tests in both an indoor laboratory environment and a challenging planetary analog environment on Mount Etna, Italy. These environments consist of areas where either keyframes or point clouds alone fail to provide adequate matches, thus demonstrating the benefit of the proposed multi-modal approach.

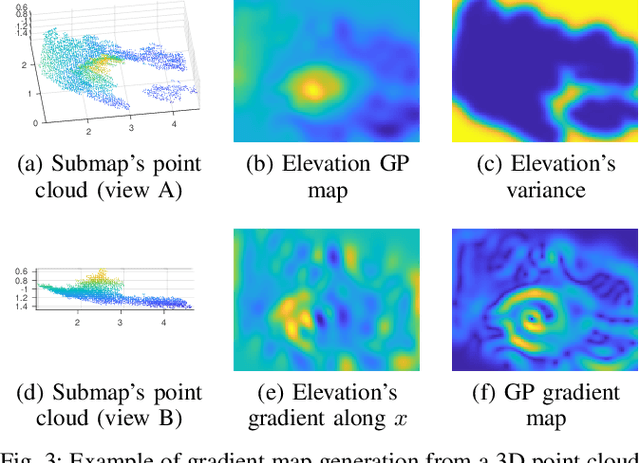

Gaussian Process Gradient Maps for Loop-Closure Detection in Unstructured Planetary Environments

Sep 01, 2020

Abstract:The ability to recognize previously mapped locations is an essential feature for autonomous systems. Unstructured planetary-like environments pose a major challenge to these systems due to the similarity of the terrain. As a result, the ambiguity of the visual appearance makes state-of-the-art visual place recognition approaches less effective than in urban or man-made environments. This paper presents a method to solve the loop closure problem using only spatial information. The key idea is to use a novel continuous and probabilistic representation of terrain elevation maps. Given 3D point clouds of the environment, the proposed approach exploits Gaussian Process (GP) regression with linear operators to generate continuous gradient maps of the terrain elevation information. Traditional image registration techniques are then used to search for potential matches. Loop closures are verified by leveraging both the spatial characteristics of the elevation maps (SE(2) registration) and the probabilistic nature of the GP representation. A submap-based localization and mapping framework is used to demonstrate the validity of the proposed approach. The performance of this pipeline is evaluated and benchmarked using real data from a rover that is equipped with a stereo camera and navigates in challenging, unstructured planetary-like environments in Morocco and on Mt. Etna.
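To make the phrase "GP regression with linear operators" concrete: differentiation is a linear operator, so the gradient of a GP is again a GP and its posterior mean follows from differentiating the kernel. The block below is a sketch under stated assumptions, not the paper's exact formulation: a zero-mean prior, a squared-exponential kernel with hyperparameters $\sigma_f$ and $\ell$, and i.i.d. Gaussian noise with variance $\sigma_n^2$ are all assumed. Here $\mathbf{x}_i$ are 2D ground-plane coordinates, $\mathbf{z}$ stacks the noisy elevation measurements, $K_{ij} = k(\mathbf{x}_i, \mathbf{x}_j)$, and $\mathbf{k}_*$ stacks $k(\mathbf{x}_*, \mathbf{x}_i)$.

```latex
% Assumptions: zero-mean GP prior, squared-exponential kernel, i.i.d. Gaussian noise.
\begin{aligned}
k(\mathbf{x}, \mathbf{x}') &= \sigma_f^2 \exp\!\left(-\frac{\lVert \mathbf{x} - \mathbf{x}' \rVert^2}{2\ell^2}\right), \\
\mathbb{E}\!\left[f(\mathbf{x}_*)\right] &= \mathbf{k}_*^\top \left(K + \sigma_n^2 I\right)^{-1} \mathbf{z}, \\
\mathbb{E}\!\left[\nabla f(\mathbf{x}_*)\right] &= \left(\nabla_{\mathbf{x}_*} \mathbf{k}_*\right)^\top \left(K + \sigma_n^2 I\right)^{-1} \mathbf{z}, \\
\nabla_{\mathbf{x}_*} k(\mathbf{x}_*, \mathbf{x}_i) &= -\frac{\mathbf{x}_* - \mathbf{x}_i}{\ell^2}\, k(\mathbf{x}_*, \mathbf{x}_i).
\end{aligned}
```

Evaluating the third line on a regular grid of query points $\mathbf{x}_*$ yields a two-channel, image-like gradient map, which is consistent with the abstract's use of image registration techniques for matching.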

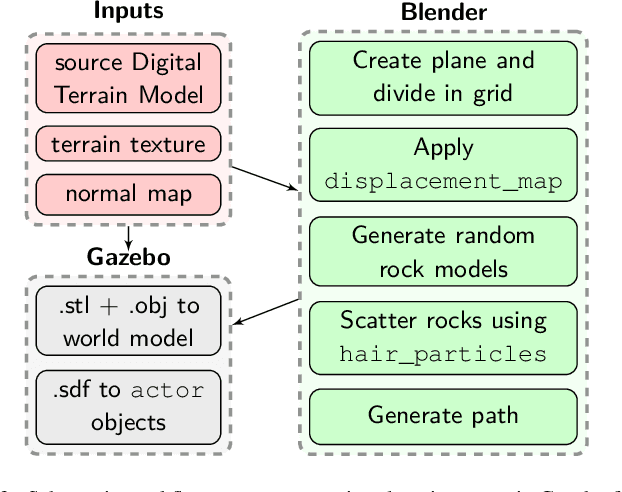

Simulation Framework for Mobile Robots in Planetary-Like Environments

Jun 17, 2020

Abstract:In this paper we present a simulation framework for evaluating the metrological performance of navigation and localization on a robotic platform. The simulator, based on ROS (Robot Operating System) and Gazebo, targets a planetary-like research vehicle and allows various perception and navigation approaches to be tested under specific environmental conditions. The possibility of simulating arbitrary sensor setups comprising cameras, LiDARs (Light Detection and Ranging) and IMUs makes Gazebo an excellent resource for rapid prototyping. In this work we evaluate a variety of open-source visual and LiDAR SLAM (Simultaneous Localization and Mapping) algorithms in a simulated Martian environment. Datasets are captured by driving the rover and recording sensor outputs as well as the ground truth for a precise performance evaluation.
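One common way to score an estimated trajectory against simulator ground truth is the root-mean-square of the position errors. The abstract does not state which metric the authors used, so the sketch below is only an illustration: it assumes both trajectories are already time-synchronized and expressed in the same world frame, and the function name `ate_rmse` is hypothetical.

```python
import math


def ate_rmse(estimated, ground_truth):
    """Root-mean-square position error between two trajectories.

    estimated, ground_truth: equally long lists of (x, y, z) positions,
    assumed time-synchronized and expressed in the same frame.
    """
    if not estimated or len(estimated) != len(ground_truth):
        raise ValueError("trajectories must be non-empty and equally long")
    squared_errors = [
        sum((e - g) ** 2 for e, g in zip(pe, pg))
        for pe, pg in zip(estimated, ground_truth)
    ]
    return math.sqrt(sum(squared_errors) / len(squared_errors))
```

When the estimate lives in a different frame from the ground truth, a rigid alignment step would normally precede this computation.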
