Marco Pertile

Experimental Evaluation of Pose Initialization Methods for Relative Navigation Between Non-Cooperative Satellites

Jun 21, 2022

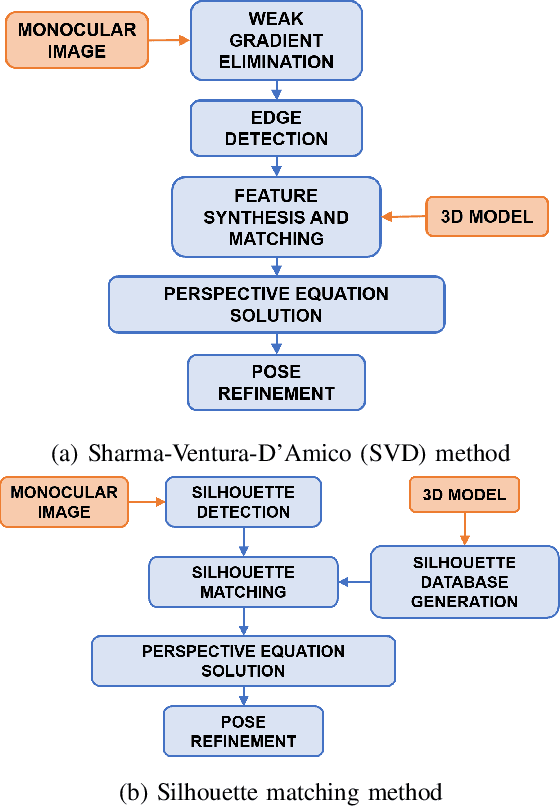

Abstract: In this work, we analyze the problem of relative pose initialization between two satellites: a chaser and a non-cooperative target. The analysis targets two close-range methods based on a monocular camera system: the Sharma-Ventura-D'Amico (SVD) method and the silhouette matching method. Both methods rely on a priori knowledge of the target geometry, but require neither fiducial markers nor a priori range measurements or state information. The tests were carried out using a 2U CubeSat mock-up as the target, attached to a motorized rotary stage to simulate its relative motion with respect to the chaser camera. A motion capture system was used as the reference instrument: it provides the reference relative motion between the two mock-ups, against which the performance of the analyzed initialization algorithms is evaluated.
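As an illustration of how an estimated pose might be scored against a motion-capture reference, the sketch below computes a rotation error (the angle of the residual rotation) and a translation error (Euclidean distance). The abstract does not state which error metrics were used, so the function names `rotation_error_deg` and `translation_error` and the choice of metrics are assumptions made for this example only.

```python
import math

def rotation_error_deg(R_est, R_ref):
    """Angle (degrees) of the residual rotation R_est^T * R_ref.

    Both inputs are 3x3 rotation matrices given as nested lists.
    trace(R_est^T R_ref) equals the element-wise product sum.
    """
    trace = sum(R_est[i][j] * R_ref[i][j] for i in range(3) for j in range(3))
    # Clamp to guard against rounding slightly outside [-1, 1].
    cos_angle = max(-1.0, min(1.0, (trace - 1.0) / 2.0))
    return math.degrees(math.acos(cos_angle))

def translation_error(t_est, t_ref):
    """Euclidean distance between estimated and reference positions."""
    return math.dist(t_est, t_ref)
```

In practice the estimate and the reference would first have to be expressed in the same frame and time-synchronized; that step is outside this sketch.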

Design of a user-friendly control system for planetary rovers with CPS feature

Jun 28, 2021

Abstract: In this paper, we present a user-friendly control system for planetary rovers, intended for low-latency surface telerobotics. With the proposed system, an operator at the control base station can command a rover either with commercial off-the-shelf (COTS) joysticks or by command sequencing with interactive monitoring on the sensed map of the environment. During operations, 3D map visualization provides high situational awareness. The map of the environment is built on the on-board computer by processing the rover's camera images with a visual Simultaneous Localization and Mapping (SLAM) algorithm. It is transmitted via Wi-Fi and displayed on the control base station screen in near real-time. The navigation stack takes the visual SLAM data as input to build a cost map, on which it searches for the minimum-cost path. By interacting with the virtual map, the rover exhibits properties of a Cyber-Physical System (CPS) through its self-awareness capabilities. The software architecture is based on the Robot Operating System (ROS) middleware. The paper presents the system design and the preliminary field test results.
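The idea of searching a cost map for a minimum-cost path can be sketched with Dijkstra's algorithm on a small 2D grid. This is a generic illustration, not the planner used in the paper or in the ROS navigation stack; the grid layout, the 4-connected neighborhood, the use of `None` for untraversable cells, and the function name `min_cost_path` are all assumptions.

```python
import heapq

def min_cost_path(cost, start, goal):
    """Dijkstra on a 4-connected grid.

    cost[r][c] is the cost of entering cell (r, c); None marks an obstacle.
    Returns (path, total_cost), or (None, inf) if the goal is unreachable.
    """
    rows, cols = len(cost), len(cost[0])
    dist = {start: 0}
    prev = {}
    heap = [(0, start)]
    while heap:
        d, cell = heapq.heappop(heap)
        if cell == goal:
            break
        if d > dist[cell]:
            continue  # stale queue entry
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols and cost[nr][nc] is not None:
                nd = d + cost[nr][nc]
                if nd < dist.get((nr, nc), float("inf")):
                    dist[(nr, nc)] = nd
                    prev[(nr, nc)] = cell
                    heapq.heappush(heap, (nd, (nr, nc)))
    if goal not in dist:
        return None, float("inf")
    # Walk back from the goal to reconstruct the path.
    path = [goal]
    while path[-1] != start:
        path.append(prev[path[-1]])
    path.reverse()
    return path, dist[goal]
```

For example, on a 3x3 grid with an expensive center cell, the returned path goes around the center rather than through it.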

Evaluation of 3D CNN Semantic Mapping for Rover Navigation

Jun 17, 2020

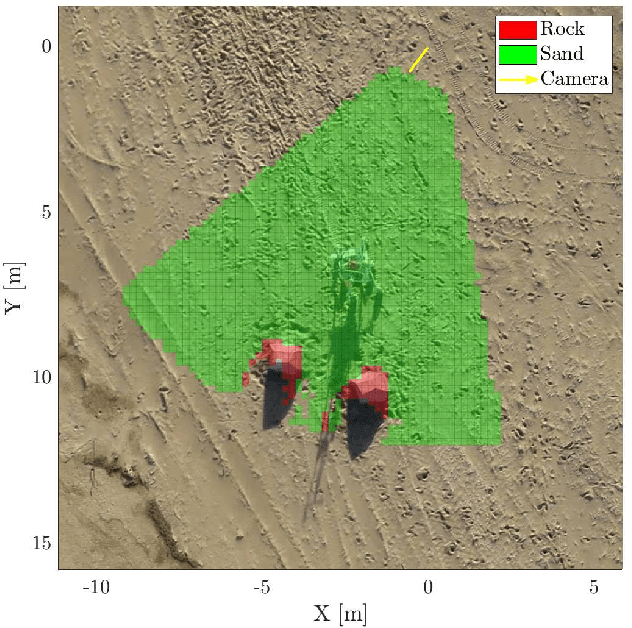

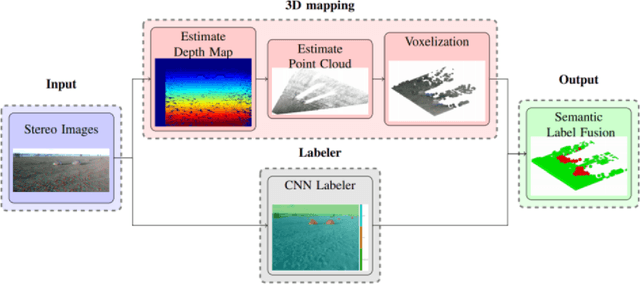

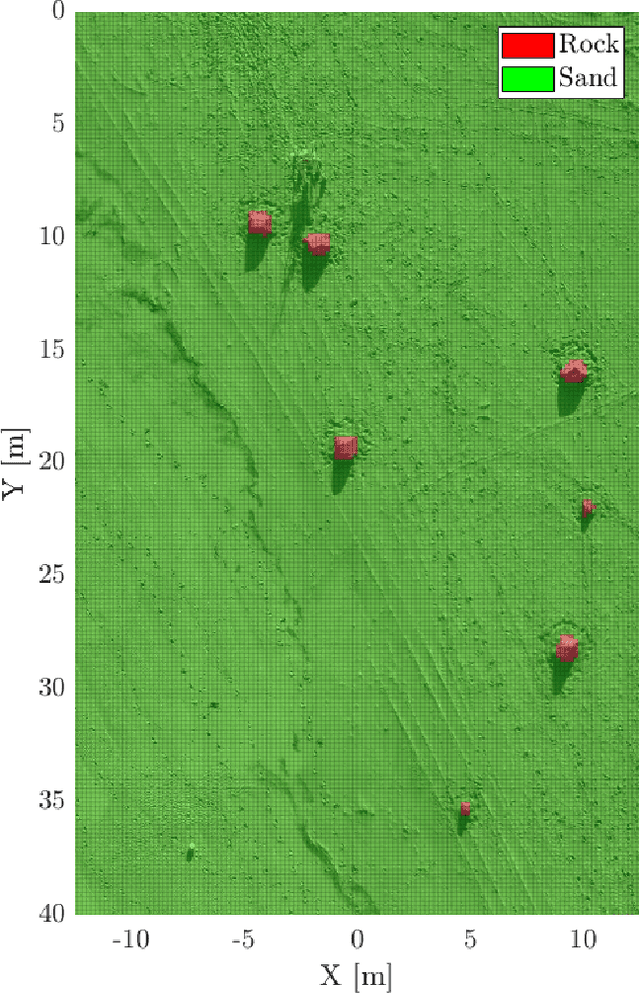

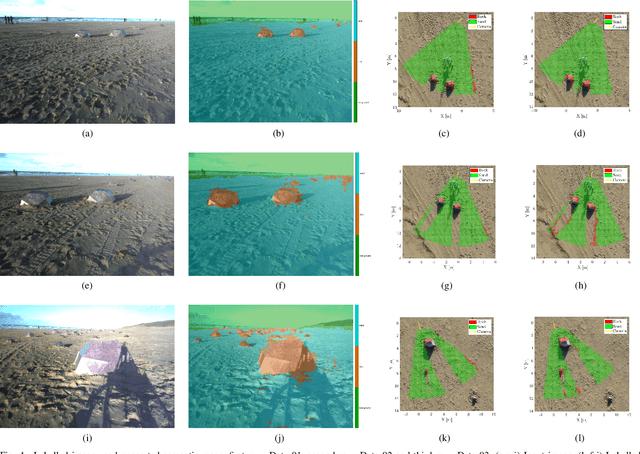

Abstract: Terrain assessment is a key aspect for autonomous exploration rovers: recognition of the surrounding environment is required for multiple purposes, such as optimal trajectory planning and autonomous target identification. In this work, we present a technique to generate accurate three-dimensional semantic maps of the Martian environment. The algorithm takes as input a stereo image acquired by a camera mounted on a rover. First, images are labeled with DeepLabv3+, an encoder-decoder Convolutional Neural Network (CNN). Then, the labels obtained from the semantic segmentation are combined with stereo depth maps in a voxel representation. We evaluate our approach on the ESA Katwijk Beach Planetary Rover Dataset.
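One way to combine per-pixel labels with depth in a voxel representation is to back-project each labeled pixel to 3D with a pinhole camera model and let the points falling in each voxel vote on its label. The sketch below is a minimal illustration under those assumptions; the abstract does not describe the fusion rule, and the names `back_project` and `build_semantic_voxels`, the intrinsics `fx, fy, cx, cy`, and the majority vote are hypothetical.

```python
import math
from collections import Counter, defaultdict

def back_project(u, v, depth, fx, fy, cx, cy):
    """Pinhole back-projection of pixel (u, v) at the given depth (camera frame)."""
    return ((u - cx) * depth / fx, (v - cy) * depth / fy, depth)

def voxel_key(point, voxel_size):
    """Integer index of the voxel containing a 3D point."""
    return tuple(math.floor(c / voxel_size) for c in point)

def build_semantic_voxels(points, labels, voxel_size=0.1):
    """Assign each occupied voxel the most frequent label among its points."""
    votes = defaultdict(Counter)
    for point, label in zip(points, labels):
        votes[voxel_key(point, voxel_size)][label] += 1
    return {key: counter.most_common(1)[0][0] for key, counter in votes.items()}
```

A usage example: feed the pixels of a segmented image, each with its stereo depth, through `back_project`, then pass the resulting points and their labels to `build_semantic_voxels`.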

Simulation Framework for Mobile Robots in Planetary-Like Environments

Jun 17, 2020

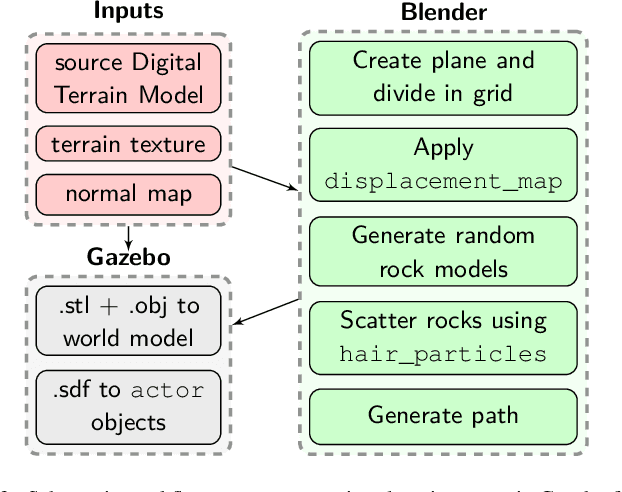

Abstract: In this paper, we present a simulation framework for evaluating the navigation and localization metrological performance of a robotic platform. The simulator, based on ROS (Robot Operating System) and Gazebo, targets a planetary-like research vehicle and makes it possible to test various perception and navigation approaches under specific environmental conditions. The possibility of simulating arbitrary sensor setups comprising cameras, LiDARs (Light Detection and Ranging) and IMUs makes Gazebo an excellent resource for rapid prototyping. In this work, we evaluate a variety of open-source visual and LiDAR SLAM (Simultaneous Localization and Mapping) algorithms in a simulated Martian environment. Datasets are captured by driving the rover and recording the sensor outputs together with the ground truth, enabling a precise performance evaluation.
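Comparing a SLAM trajectory with simulator ground truth is often summarized by the root-mean-square of the position errors (commonly called absolute trajectory error). The abstract does not name the metric used, so the following is only a sketch of one common choice; it assumes the two trajectories are already time-synchronized and expressed in the same frame, and the function name `ate_rmse` is hypothetical.

```python
import math

def ate_rmse(estimated, ground_truth):
    """RMSE of position errors between two equally sampled trajectories.

    Each trajectory is a sequence of (x, y, z) positions; no alignment
    is performed here.
    """
    if not estimated or len(estimated) != len(ground_truth):
        raise ValueError("trajectories must be non-empty and of equal length")
    squared_errors = [
        sum((e - g) ** 2 for e, g in zip(p_est, p_gt))
        for p_est, p_gt in zip(estimated, ground_truth)
    ]
    return math.sqrt(sum(squared_errors) / len(squared_errors))
```

A full evaluation would typically add timestamp association and a rigid alignment step before computing the error.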
