Robust Semi-Direct Monocular Visual Odometry Using Edge and Illumination-Robust Cost

Sep 25, 2019

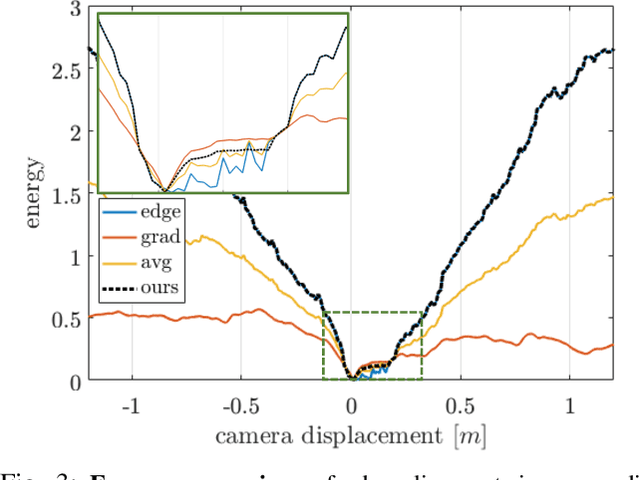

In this work, we propose a monocular semi-direct visual odometry framework that exploits the best attributes of edge features and local photometric information for illumination-robust camera motion estimation and scene reconstruction. In the tracking layer, the edge alignment error and the image gradient error are jointly optimized through a convergence-preserved reweighting strategy. This strategy preserves illumination invariance, and it also yields a larger convergence basin and higher tracking accuracy than either approach used alone. In the mapping layer, we propose a fast probabilistic 1D search strategy that locates the best photometrically matched point along all geometrically possible edges. This enables real-time generation of edge point correspondences using only the high-frequency components of the image. The resulting reprojection error then replaces the edge alignment error in the joint optimization of local bundle adjustment. This avoids the partial observability issue of monocular edge mapping and improves the stability of the optimization. We present extensive analysis and evaluation of the proposed system on synthetic and real-world benchmark datasets under illumination changes and large camera motions, where it outperforms current state-of-the-art algorithms.
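
The abstract does not give the exact form of the convergence-preserved reweighting, so the following is only a minimal sketch of the general idea of jointly weighting two residual types. It assumes a generic stand-in rather than the authors' method: each residual set (hypothetical `edge_res` and `grad_res` lists) is normalized by its own robust scale, a median-absolute-deviation estimate, so that neither term dominates. Huber IRLS weights then down-weight outliers. The sketch also checks one common reason gradient-based residuals tolerate lighting changes. Under an assumed affine brightness change `I' = a*I + b` with `a > 0`, the normalized gradient of a patch is unchanged. All function names here are hypothetical.

```python
import math
from statistics import median


def huber_weight(z, k):
    """IRLS weight of the Huber loss for a normalized residual z."""
    a = abs(z)
    return 1.0 if a <= k else k / a


def robust_scale(residuals):
    """Robust scale estimate: 1.4826 * median absolute deviation (MAD)."""
    m = median(residuals)
    mad = median(abs(r - m) for r in residuals)
    return 1.4826 * mad if mad > 0 else 1.0


def joint_weighted_cost(edge_res, grad_res, k=1.345):
    """Hypothetical joint cost over edge-alignment and gradient residuals.

    Each residual set is divided by its own robust scale so the two terms are
    comparable, then Huber IRLS weights are applied. Returns the weighted sum
    of squared normalized residuals at the current estimate, plus the weights.
    """
    cost = 0.0
    weights = []
    for res in (edge_res, grad_res):
        s = robust_scale(res)
        w = []
        for r in res:
            z = r / s
            wi = huber_weight(z, k)
            w.append(wi)
            cost += wi * z * z
        weights.append(w)
    return cost, weights


def normalized_gradient(profile):
    """Central-difference gradient of a 1D intensity profile, unit-normalized.

    Normalization removes the gain a, and differencing removes the offset b,
    so the result is invariant to I' = a*I + b for a > 0.
    """
    g = [(profile[i + 1] - profile[i - 1]) / 2.0 for i in range(1, len(profile) - 1)]
    n = math.sqrt(sum(x * x for x in g))
    return [x / n for x in g] if n > 0 else g
```

Normalizing each term by its own scale reflects the intent described in the abstract, where neither cue should swamp the other. An actual tracker would recompute these weights at every Gauss-Newton or Levenberg-Marquardt iteration.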
