Antoine Richard

Isaac Lab: A GPU-Accelerated Simulation Framework for Multi-Modal Robot Learning

Nov 06, 2025

Abstract: We present Isaac Lab, the natural successor to Isaac Gym, which extends the paradigm of GPU-native robotics simulation into the era of large-scale multi-modal learning. Isaac Lab combines high-fidelity GPU parallel physics, photorealistic rendering, and a modular, composable architecture for designing environments and training robot policies. Beyond physics and rendering, the framework integrates actuator models, multi-frequency sensor simulation, data collection pipelines, and domain randomization tools, unifying best practices for reinforcement and imitation learning at scale within a single extensible platform. We highlight its application to a diverse set of challenges, including whole-body control, cross-embodiment mobility, contact-rich and dexterous manipulation, and the integration of human demonstrations for skill acquisition. Finally, we discuss upcoming integration with the differentiable, GPU-accelerated Newton physics engine, which promises new opportunities for scalable, data-efficient, and gradient-based approaches to robot learning. We believe Isaac Lab's combination of advanced simulation capabilities, rich sensing, and data-center scale execution will help unlock the next generation of breakthroughs in robotics research.

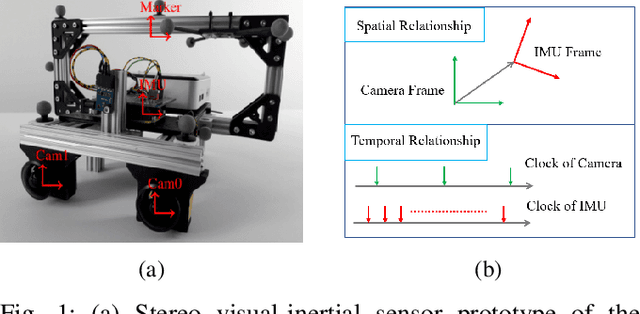

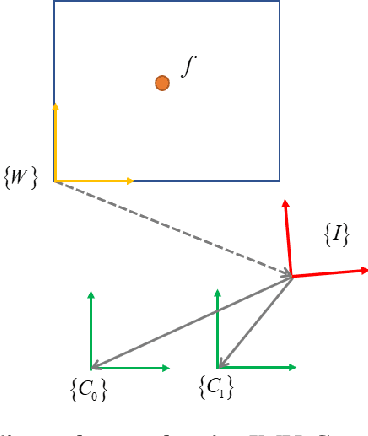

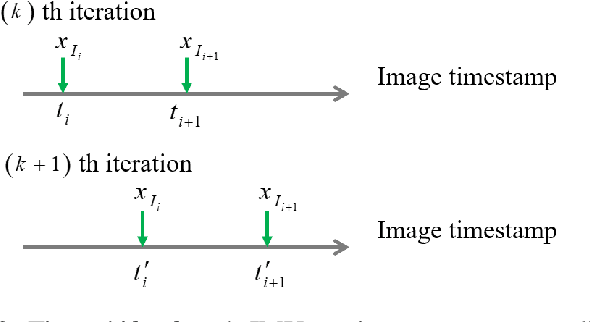

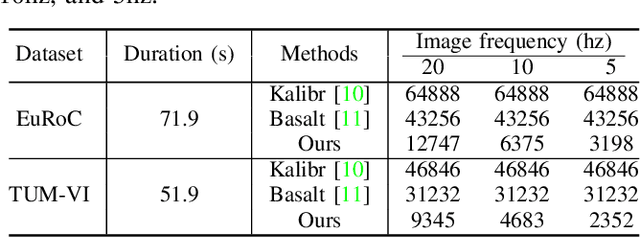

Unleashing the Power of Discrete-Time State Representation: Ultrafast Target-based IMU-Camera Spatial-Temporal Calibration

Sep 16, 2025

Abstract: Visual-inertial fusion is crucial for a large number of intelligent and autonomous applications, such as robot navigation and augmented reality. To bootstrap and achieve optimal state estimation, the spatial-temporal displacements between the IMU and cameras must be calibrated in advance. Most existing calibration methods adopt continuous-time state representation, more specifically the B-spline. Although these methods achieve precise spatial-temporal calibration, they suffer from high computational cost caused by continuous-time state representation. To this end, we propose a novel and extremely efficient calibration method that unleashes the power of discrete-time state representation. Moreover, the weakness of discrete-time state representation in temporal calibration is tackled in this paper. With the increasing production of drones, cellphones and other visual-inertial platforms, if one million devices need calibration around the world, saving one minute for the calibration of each device means saving 2083 work days in total. To benefit both the research and industry communities, our code will be open-sourced.
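The 2083 work-day figure can be reproduced with simple arithmetic. The sketch below assumes an 8-hour work day, which the abstract does not state; the function name and its parameters are illustrative only.

```python
def work_days_saved(devices: int,
                    minutes_saved_per_device: float,
                    hours_per_work_day: float = 8.0) -> float:
    """Convert per-device time savings into total work days.

    hours_per_work_day defaults to 8.0, an assumption not stated in the abstract.
    """
    total_minutes = devices * minutes_saved_per_device
    total_hours = total_minutes / 60.0
    return total_hours / hours_per_work_day


if __name__ == "__main__":
    # One million devices, one minute saved per device.
    days = work_days_saved(1_000_000, 1.0)
    print(int(days))  # whole work days saved
```

Under that assumption, one million minutes is about 16,667 hours, which rounds down to 2083 eight-hour work days.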

PLUME: Procedural Layer Underground Modeling Engine

Aug 28, 2025

Abstract: As space exploration advances, underground environments are becoming increasingly attractive due to their potential to provide shelter, easier access to resources, and enhanced scientific opportunities. Although such environments exist on Earth, they are often not easily accessible and do not accurately represent the diversity of underground environments found throughout the solar system. This paper presents PLUME, a procedural generation framework aimed at easily creating 3D underground environments. Its flexible structure allows for the continuous enhancement of various underground features, aligning with our expanding understanding of the solar system. The environments generated using PLUME can be used for AI training, evaluating robotics algorithms, 3D rendering, and facilitating rapid iteration on developed exploration algorithms. In this paper, we demonstrate the use of PLUME alongside a robotic simulator. PLUME is open source and has been released on GitHub: https://github.com/Gabryss/P.L.U.M.E

NavBench: A Unified Robotics Benchmark for Reinforcement Learning-Based Autonomous Navigation

May 20, 2025

Abstract: Autonomous robots must navigate and operate in diverse environments, from terrestrial and aquatic settings to aerial and space domains. While Reinforcement Learning (RL) has shown promise in training policies for specific autonomous robots, existing benchmarks are often constrained to unique platforms, limiting generalization and fair comparisons across different mobility systems. In this paper, we present NavBench, a multi-domain benchmark for training and evaluating RL-based navigation policies across diverse robotic platforms and operational environments. Built on IsaacLab, our framework standardizes task definitions, enabling different robots to tackle various navigation challenges without the need for ad-hoc task redesigns or custom evaluation metrics. Our benchmark addresses three key challenges: (1) Unified cross-medium benchmarking, enabling direct evaluation of diverse actuation methods (thrusters, wheels, water-based propulsion) in realistic environments; (2) Scalable and modular design, facilitating seamless robot-task interchangeability and reproducible training pipelines; and (3) Robust sim-to-real validation, demonstrated through successful policy transfer to multiple real-world robots, including a satellite robotic simulator, an unmanned surface vessel, and a wheeled ground vehicle. By ensuring consistency between simulation and real-world deployment, NavBench simplifies the development of adaptable RL-based navigation strategies. Its modular design allows researchers to easily integrate custom robots and tasks by following the framework's predefined templates, making it accessible for a wide range of applications. Our code is publicly available at NavBench.

Improving Monocular Visual-Inertial Initialization with Structureless Visual-Inertial Bundle Adjustment

Feb 23, 2025

Abstract: Monocular visual inertial odometry (VIO) has facilitated a wide range of real-time motion tracking applications, thanks to the small size of the sensor suite and low power consumption. To successfully bootstrap VIO algorithms, the initialization module is extremely important. Most initialization methods rely on the reconstruction of 3D visual point clouds. These methods suffer from high computational cost because the state vector contains both motion states and 3D feature points. To address this issue, some researchers recently proposed a structureless initialization method, which can solve the initial state without recovering 3D structure. However, this method potentially compromises performance due to the decoupled estimation of rotation and translation, as well as linear constraints. To improve its accuracy, we propose a novel structureless visual-inertial bundle adjustment to further refine the previous structureless solution. Extensive experiments on real-world datasets show our method significantly improves the VIO initialization accuracy, while maintaining real-time performance.

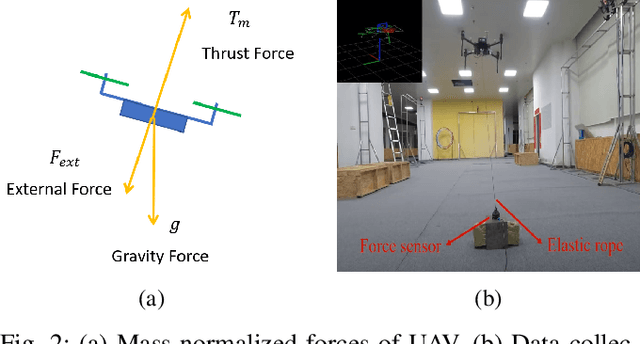

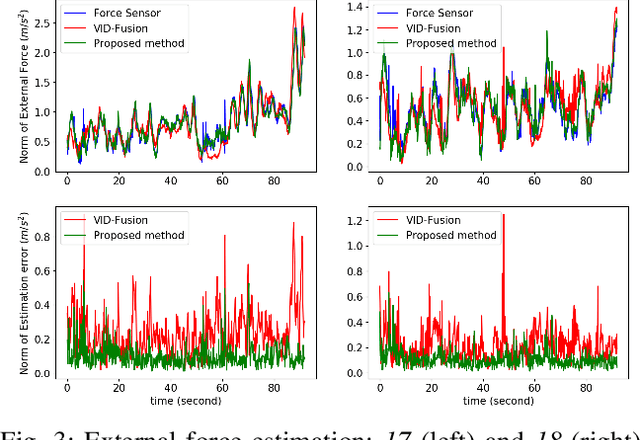

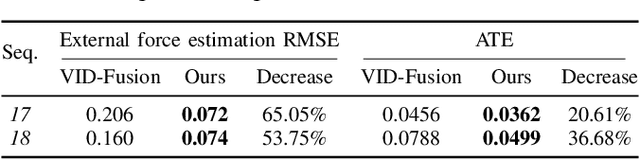

An Accurate Filter-based Visual Inertial External Force Estimator via Instantaneous Accelerometer Update

Aug 29, 2024

Abstract: Accurate disturbance estimation is crucial for reliable robotic physical interaction. To estimate environmental interference in a low-cost and sensorless way (without a force sensor), a variety of tightly-coupled visual inertial external force estimators have been proposed in the literature. However, existing solutions may suffer from relatively low-frequency preintegration. In this paper, a novel estimator is designed to overcome this issue via high-frequency instantaneous accelerometer update.

Modeling of Terrain Deformation by a Grouser Wheel for Lunar Rover Simulation

Aug 24, 2024

Abstract: Simulation of vehicle motion in planetary environments is challenging. This is due to the modeling of complex terrain, optical conditions, and terrain-aware vehicle dynamics. One of the critical issues of typical simulators is that they assume terrain is a rigid body, which limits their ability to render wheel traces and compute the wheel-terrain interactions. This prevents, for example, the use of wheel traces as landmarks for localization, as well as the accurate simulation of motion. In the context of lunar regolith, the surface is not rigid but granular. As such, there are differences in the rover's motion, such as sinkage and slippage, and a clear wheel trace left behind the rover, compared to that on a rigid terrain. This study presents a novel approach to integrating a terramechanics-aware terrain deformation engine to simulate a realistic wheel trace in a digital lunar environment. By leveraging Discrete Element Method simulation results alongside experimental single-wheel test data, we construct a regression model to derive deformation height as a function of contact normal force. The region of interest in a height map is retrieved from the wheel poses. The elevation values of corresponding pixels are subsequently modified using contact normal forces and the regression model. Finally, we apply the determined elevation change to each mesh vertex to render wheel traces during runtime. The deformation engine is integrated into our ongoing development of a lunar simulator based on NVIDIA's Omniverse IsaacSim. We hypothesize that our work will be crucial to testing perception and downstream navigation systems under conditions similar to outdoor or terrestrial fields. A demonstration video is available here: https://www.youtube.com/watch?v=TpzD0h-5hv4
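The height-map update described in this abstract (regression from contact normal force to deformation height, then modification of the pixels under the wheel) might look roughly like the sketch below. It is not the authors' implementation: the linear form of the regression, its coefficients, the square footprint, the grid resolution, and all function names are assumptions chosen for illustration.

```python
import numpy as np


def deformation_depth(normal_force_n: float,
                      slope: float = 1e-4,
                      intercept: float = 0.0) -> float:
    """Hypothetical linear regression: sinkage depth (m) from normal force (N).

    The abstract does not give the model form; slope/intercept are placeholders.
    """
    return max(slope * normal_force_n + intercept, 0.0)


def apply_wheel_trace(height_map: np.ndarray,
                      wheel_xy_m: tuple,
                      normal_force_n: float,
                      cell_size_m: float = 0.05,
                      footprint_m: float = 0.1) -> np.ndarray:
    """Return a copy of height_map lowered inside a square wheel footprint."""
    out = height_map.copy()
    rows, cols = out.shape
    # Half-width of the footprint, in grid cells.
    half = int(round(footprint_m / (2.0 * cell_size_m)))
    # Wheel position converted to (row, col) indices: x maps to columns, y to rows.
    r = int(round(wheel_xy_m[1] / cell_size_m))
    c = int(round(wheel_xy_m[0] / cell_size_m))
    # Clamp the region of interest to the map bounds on both sides.
    r0, r1 = min(max(r - half, 0), rows), min(max(r + half + 1, 0), rows)
    c0, c1 = min(max(c - half, 0), cols), min(max(c + half + 1, 0), cols)
    out[r0:r1, c0:c1] -= deformation_depth(normal_force_n)
    return out


if __name__ == "__main__":
    flat = np.zeros((10, 10))
    deformed = apply_wheel_trace(flat, wheel_xy_m=(0.25, 0.25), normal_force_n=100.0)
    print(int(np.count_nonzero(deformed)), float(deformed.min()))
```

In a full pipeline, the per-cell elevation change would then be written to the corresponding mesh vertices, as the abstract describes; that step depends on the simulator's mesh API and is left out here.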

A Deep Reinforcement Learning Framework and Methodology for Reducing the Sim-to-Real Gap in ASV Navigation

Jul 11, 2024

Abstract: Despite the increasing adoption of Deep Reinforcement Learning (DRL) for Autonomous Surface Vehicles (ASVs), there still remain challenges limiting real-world deployment. In this paper, we first integrate buoyancy and hydrodynamics models into a modern Reinforcement Learning framework to reduce training time. Next, we show how system identification coupled with domain randomization improves the RL agent performance and narrows the sim-to-real gap. Real-world experiments for the task of capturing floating waste show that our approach lowers energy consumption by 13.1% while reducing task completion time by 7.4%. These findings, supported by sharing our open-source implementation, hold the potential to impact the efficiency and versatility of ASVs, contributing to environmental conservation efforts.

Performance Comparison of ROS2 Middlewares for Multi-robot Mesh Networks in Planetary Exploration

Jul 03, 2024

Abstract: Recent advancements in Multi-Robot Systems (MRS) and mesh network technologies pave the way for innovative approaches to explore extreme environments. The Artemis Accords, a series of international agreements, have further catalyzed this progress by fostering cooperation in space exploration, emphasizing the use of cutting-edge technologies. In parallel, the widespread adoption of the Robot Operating System 2 (ROS 2) by companies across various sectors underscores its robustness and versatility. This paper evaluates the performance of available ROS 2 MiddleWare (RMW), such as FastRTPS, CycloneDDS and Zenoh, over a mesh network with a dynamic topology. The final choice of RMW is the one that best fits the scenario: an exploration of the extreme extra-terrestrial environment using an MRS. The study, conducted in a real environment, highlights Zenoh as a potential solution for future applications, showing reduced delay and CPU usage and improved reachability while being competitive on data overhead and RAM usage over a dynamic mesh topology.

Object-centric Reconstruction and Tracking of Dynamic Unknown Objects using 3D Gaussian Splatting

May 30, 2024

Abstract: Generalizable perception is one of the pillars of high-level autonomy in space robotics. Estimating the structure and motion of unknown objects in dynamic environments is fundamental for such autonomous systems. Traditionally, the solutions have relied on prior knowledge of target objects, multiple disparate representations, or low-fidelity outputs unsuitable for robotic operations. This work proposes a novel approach to incrementally reconstruct and track a dynamic unknown object using a unified representation -- a set of 3D Gaussian blobs that describe its geometry and appearance. The differentiable 3D Gaussian Splatting framework is adapted to a dynamic object-centric setting. The input to the pipeline is a sequential set of RGB-D images. 3D reconstruction and 6-DoF pose tracking tasks are tackled using first-order gradient-based optimization. The formulation is simple, requires no pre-training, assumes no prior knowledge of the object or its motion, and is suitable for online applications. The proposed approach is validated on a dataset of 10 unknown spacecraft of diverse geometry and texture under arbitrary relative motion. The experiments demonstrate successful 3D reconstruction and accurate 6-DoF tracking of the target object in proximity operations over a short to medium duration. The causes of tracking drift are discussed and potential solutions are outlined.