David Morilla-Cabello

Instituto de Investigación en Ingeniería de Aragón, Universidad de Zaragoza, Spain

CHORAL: Traversal-Aware Planning for Safe and Efficient Heterogeneous Multi-Robot Routing

Jan 15, 2026

Abstract:Monitoring large, unknown, and complex environments with autonomous robots poses significant navigation challenges, where deploying teams of heterogeneous robots with complementary capabilities can substantially improve both mission performance and feasibility. However, effectively modeling how different robotic platforms interact with the environment requires rich, semantic scene understanding. Despite this, existing approaches often assume homogeneous robot teams or focus on discrete task compatibility rather than continuous routing. Consequently, scene understanding is not fully integrated into routing decisions, limiting their ability to adapt to the environment and to leverage each robot's strengths. In this paper, we propose an integrated semantic-aware framework for coordinating heterogeneous robots. Starting from a reconnaissance flight, we build a metric-semantic map using open-vocabulary vision models and use it to identify regions requiring closer inspection and capability-aware paths for each platform to reach them. These are then incorporated into a heterogeneous vehicle routing formulation that jointly assigns inspection tasks and computes robot trajectories. Experiments in simulation and in a real inspection mission with three robotic platforms demonstrate the effectiveness of our approach in planning safer and more efficient routes by explicitly accounting for each platform's navigation capabilities. We release our framework, CHORAL, as open source to support reproducibility and deployment of diverse robot teams.

vS-Graphs: Integrating Visual SLAM and Situational Graphs through Multi-level Scene Understanding

Mar 03, 2025

Abstract:Current Visual Simultaneous Localization and Mapping (VSLAM) systems often struggle to create maps that are both semantically rich and easily interpretable. While incorporating semantic scene knowledge helps build richer maps with contextual associations among mapped objects, representing them in structured formats such as scene graphs has not been widely addressed, which leaves maps hard to comprehend and limits scalability. This paper introduces visual S-Graphs (vS-Graphs), a novel real-time VSLAM framework that integrates vision-based scene understanding with map reconstruction and a comprehensible graph-based representation. The framework infers structural elements (i.e., rooms and corridors) from detected building components (i.e., walls and ground surfaces) and incorporates them into optimizable 3D scene graphs. This solution enhances the reconstructed map's semantic richness, comprehensibility, and localization accuracy. Extensive experiments on standard benchmarks and real-world datasets demonstrate that vS-Graphs outperforms state-of-the-art VSLAM methods, reducing trajectory error by an average of 3.38% and up to 9.58% on real-world data. Furthermore, the proposed framework achieves environment-driven semantic entity detection accuracy comparable to precise LiDAR-based frameworks using only visual features. A web page containing more media and evaluation outcomes is available at https://snt-arg.github.io/vsgraphs-results/.

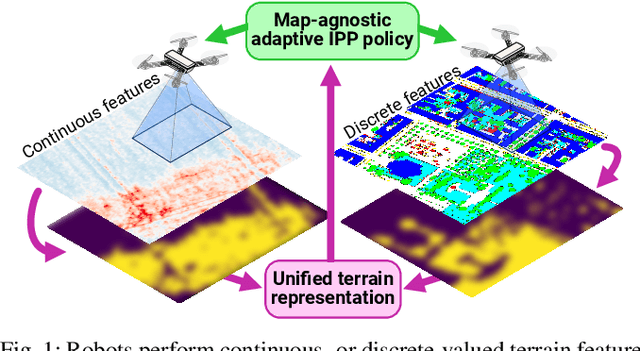

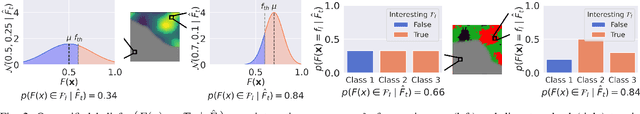

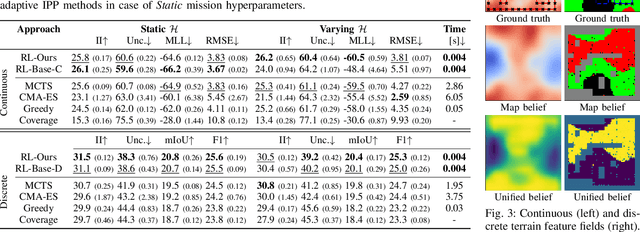

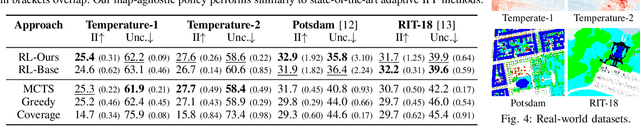

Towards Map-Agnostic Policies for Adaptive Informative Path Planning

Oct 22, 2024

Abstract:Robots are frequently tasked with gathering relevant sensor data in unknown terrains. A key challenge for classical path planning algorithms used for autonomous information gathering is adaptively replanning paths online as the terrain is explored, given limited onboard compute resources. Recently, learning-based approaches have emerged that train planning policies offline and enable computationally efficient online replanning through policy inference. These approaches are designed and trained for terrain monitoring missions assuming a single specific map representation, which limits their applicability to different terrains. To address this limitation, we propose a novel formulation of the adaptive informative path planning problem unified across different map representations, enabling training and deploying planning policies in a larger variety of monitoring missions. Experimental results validate that our novel formulation easily integrates with classical non-learning-based planning approaches while maintaining their performance. Our trained planning policy performs similarly to state-of-the-art policies trained for a specific map representation. We validate our learned policy on unseen real-world terrain datasets.

Perceptual Factors for Environmental Modeling in Robotic Active Perception

Sep 19, 2023

Abstract:Accurately assessing the potential value of new sensor observations is a critical aspect of planning for active perception. This task is particularly challenging when reasoning about high-level scene understanding using measurements from vision-based neural networks. Because these networks reason from appearance, the measurements are susceptible to several environmental effects such as the presence of occluders, variations in lighting conditions, and redundancy of information due to similarity in appearance between nearby viewpoints. To address this, we propose a new active perception framework incorporating an arbitrary number of perceptual effects in planning and fusion. Our method models the correlation with the environment by a set of general functions termed perceptual factors to construct a perceptual map, which quantifies the aggregated influence of the environment on candidate viewpoints. This information is incorporated into the planning and fusion processes by adjusting the uncertainty associated with measurements to weigh their contributions. We evaluate our perceptual maps in a simulated environment that reproduces environmental conditions common in robotics applications. Our results show that, by accounting for environmental effects within our perceptual maps, we improve state estimation by selecting better viewpoints and correctly accounting for measurement noise when it is affected by environmental factors. We furthermore deploy our approach on a ground robot to showcase its applicability for real-world active perception missions.
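The idea of adjusting measurement uncertainty according to environmental conditions can be illustrated with a minimal sketch. It is not the paper's formulation: the multiplicative combination of factors, the variance inflation rule, and the names `perceptual_score` and `fuse_measurements` are all assumptions made for illustration.

```python
def perceptual_score(factors):
    """Combine factor values in (0, 1] into one score by multiplication.

    Assumption: 1.0 means ideal conditions; smaller values mean worse
    conditions (e.g. occlusion, poor lighting, redundant viewpoint).
    """
    score = 1.0
    for f in factors:
        score *= f
    return score


def fuse_measurements(measurements):
    """Inverse-variance fusion of (value, base_variance, factors) tuples.

    Each measurement's variance is inflated by dividing it by its
    perceptual score, so measurements taken in poor conditions
    contribute less to the fused estimate.
    """
    num = 0.0
    den = 0.0
    for value, base_var, factors in measurements:
        var = base_var / perceptual_score(factors)
        num += value / var
        den += 1.0 / var
    return num / den, 1.0 / den


# Hypothetical data: one clean measurement, one degraded by two factors.
measurements = [
    (1.0, 0.1, [1.0, 1.0]),   # ideal conditions, variance stays 0.1
    (3.0, 0.1, [0.5, 0.2]),   # degraded, variance inflated to 1.0
]
mean, variance = fuse_measurements(measurements)
```

In this toy setup the fused mean stays close to the clean measurement because the degraded one is down-weighted by a factor of ten.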

Robust Fusion for Bayesian Semantic Mapping

Mar 14, 2023

Abstract:The integration of semantic information in a map allows robots to better understand their environment and make high-level decisions. In the last few years, neural networks have shown enormous progress in their perception capabilities. However, when fusing multiple observations from a neural network in a semantic map, its inherent overconfidence with unknown data gives too much weight to the outliers and decreases the robustness of the resulting map. In this work, we propose a novel robust fusion method to combine multiple Bayesian semantic predictions. Our method uses the uncertainty estimation provided by a Bayesian neural network to calibrate the way in which the measurements are fused. This is done by regularizing the observations to mitigate the problem of overconfident outlier predictions and using the epistemic uncertainty to weigh their influence in the fusion, resulting in a different formulation of the probability distributions. We validate our robust fusion strategy by performing experiments on photo-realistic simulated environments and real scenes. In both cases, we use a network trained on different data to expose the model to varying data distributions. The results show that considering the model's uncertainty and regularizing the probability distribution of the observations results in better semantic segmentation performance and more robustness to outliers, compared with other methods.
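As a rough illustration of the two ingredients named in the abstract, regularizing overconfident observations and weighting them by uncertainty, the following sketch fuses categorical predictions for a single map cell. It is an assumption-laden toy, not the paper's method: uniform smoothing stands in for the regularization, a weighted log-linear product stands in for the fusion rule, and the weights are hypothetical values that would in practice be derived from epistemic uncertainty.

```python
import math


def smooth(probs, eps=0.1):
    """Mix a categorical distribution with the uniform one to cap its confidence."""
    k = len(probs)
    return [(1.0 - eps) * p + eps / k for p in probs]


def fuse(prior, observations, weights, eps=0.1):
    """Weighted log-linear fusion.

    The posterior is proportional to prior * prod(obs_i ** w_i), where
    each observation is smoothed first so no class gets zero probability.
    """
    log_post = [math.log(p) for p in prior]
    for obs, w in zip(observations, weights):
        for c, p in enumerate(smooth(obs, eps)):
            log_post[c] += w * math.log(p)
    # Normalize in a numerically stable way.
    m = max(log_post)
    unnorm = [math.exp(v - m) for v in log_post]
    total = sum(unnorm)
    return [u / total for u in unnorm]


prior = [1 / 3, 1 / 3, 1 / 3]
observations = [
    [0.7, 0.2, 0.1],
    [0.6, 0.3, 0.1],
    [0.0, 0.0, 1.0],   # overconfident outlier
]
# Hypothetical weights: the outlier is assumed to carry high epistemic
# uncertainty and therefore receives a low weight.
weights = [1.0, 1.0, 0.2]
fused = fuse(prior, observations, weights)
```

Without the smoothing step, the outlier's zero probabilities would eliminate the first two classes outright in a plain product fusion; with smoothing and a low weight, the first class remains the most probable.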
