Jonas Westheider

Institute of Geodesy and Geoinformation, University of Bonn, Germany

Perceptual Factors for Environmental Modeling in Robotic Active Perception

Sep 19, 2023

Abstract: Accurately assessing the potential value of new sensor observations is a critical aspect of planning for active perception. This task is particularly challenging when reasoning about high-level scene understanding using measurements from vision-based neural networks. Due to appearance-based reasoning, the measurements are susceptible to several environmental effects such as the presence of occluders, variations in lighting conditions, and redundancy of information due to similarity in appearance between nearby viewpoints. To address this, we propose a new active perception framework incorporating an arbitrary number of perceptual effects in planning and fusion. Our method models the correlation with the environment by a set of general functions termed perceptual factors to construct a perceptual map, which quantifies the aggregated influence of the environment on candidate viewpoints. This information is incorporated into the planning and fusion processes by adjusting the uncertainty associated with measurements to weigh their contributions. We evaluate our perceptual maps in a simulated environment that reproduces environmental conditions common in robotics applications. Our results show that, by accounting for environmental effects within our perceptual maps, we improve state estimation by selecting viewpoints correctly and by properly accounting for measurement noise when it is affected by environmental factors. We furthermore deploy our approach on a ground robot to showcase its applicability for real-world active perception missions.

Multi-UAV Adaptive Path Planning Using Deep Reinforcement Learning

Mar 02, 2023

Abstract: Efficient aerial data collection is important in many remote sensing applications. In large-scale monitoring scenarios, deploying a team of unmanned aerial vehicles (UAVs) offers improved spatial coverage and robustness against individual failures. However, a key challenge is cooperative path planning for the UAVs to efficiently achieve a joint mission goal. We propose a novel multi-agent informative path planning approach based on deep reinforcement learning for adaptive terrain monitoring scenarios using UAV teams. We introduce new network feature representations to effectively learn path planning in a 3D workspace. By leveraging a counterfactual baseline, our approach explicitly addresses credit assignment to learn cooperative behaviour. Our experimental evaluation shows improved planning performance, i.e. regions of interest are mapped more quickly, compared with non-counterfactual variants. Results on synthetic and real-world data show that our approach has superior performance compared to state-of-the-art non-learning-based methods, while being transferable to varying team sizes and communication constraints.

Adaptive Path Planning for UAVs for Multi-Resolution Semantic Segmentation

Mar 03, 2022

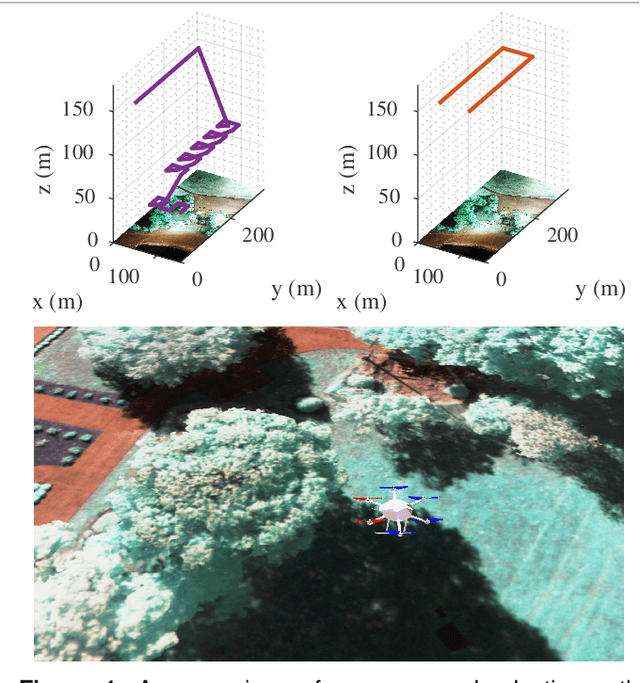

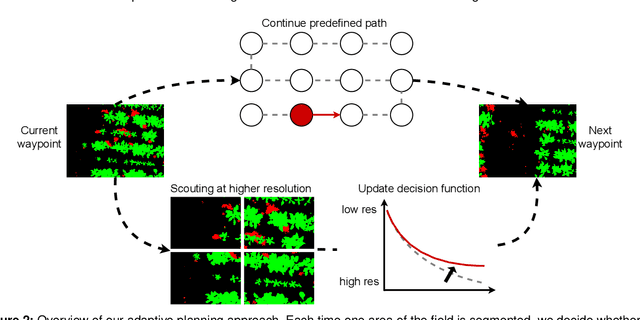

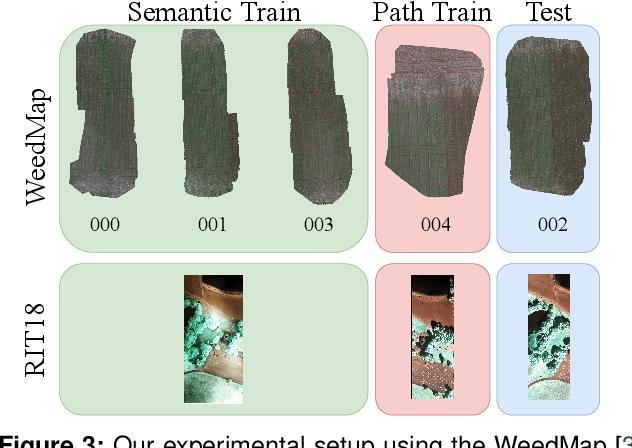

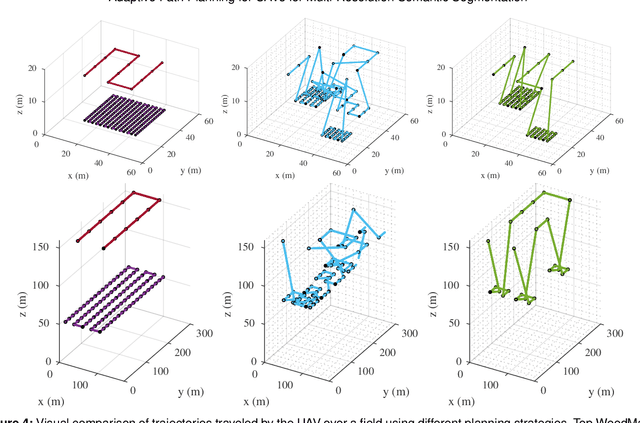

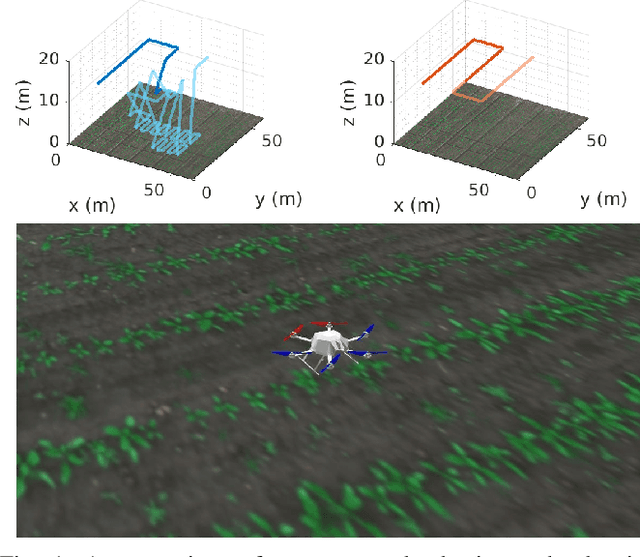

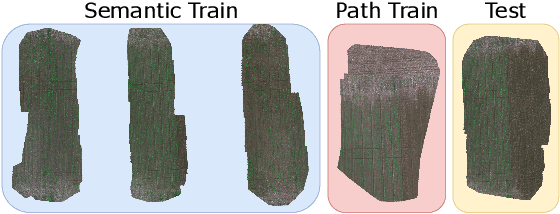

Abstract: Efficient data collection methods play a major role in helping us better understand the Earth and its ecosystems. In many applications, the usage of unmanned aerial vehicles (UAVs) for monitoring and remote sensing is rapidly gaining momentum due to their high mobility, low cost, and flexible deployment. A key challenge is planning missions to maximize the value of acquired data in large environments given flight time limitations. This is, for example, relevant for monitoring agricultural fields. This paper addresses the problem of adaptive path planning for accurate semantic segmentation using UAVs. We propose an online planning algorithm which adapts the UAV paths to obtain high-resolution semantic segmentations necessary in areas with fine details as they are detected in incoming images. This enables us to perform close inspections at low altitudes only where required, without wasting energy on exhaustive mapping at maximum image resolution. A key feature of our approach is a new accuracy model for deep learning-based architectures that captures the relationship between UAV altitude and semantic segmentation accuracy. We evaluate our approach on different domains using real-world data, demonstrating the efficacy and generalizability of our solution.

Adaptive Path Planning for UAV-based Multi-Resolution Semantic Segmentation

Aug 04, 2021

Abstract: In this paper, we address the problem of adaptive path planning for accurate semantic segmentation of terrain using unmanned aerial vehicles (UAVs). The usage of UAVs for terrain monitoring and remote sensing is rapidly gaining momentum due to their high mobility, low cost, and flexible deployment. However, a key challenge is planning missions to maximize the value of acquired data in large environments given flight time limitations. To address this, we propose an online planning algorithm which adapts the UAV paths to obtain high-resolution semantic segmentations necessary in areas of the terrain with fine details as they are detected in incoming images. This enables us to perform close inspections at low altitudes only where required, without wasting energy on exhaustive mapping at maximum resolution. A key feature of our approach is a new accuracy model for deep learning-based architectures that captures the relationship between UAV altitude and semantic segmentation accuracy. We evaluate our approach on the application of crop/weed segmentation in precision agriculture using real-world field data.
