Shehryar Khattak

CompSLAM: Complementary Hierarchical Multi-Modal Localization and Mapping for Robot Autonomy in Underground Environments

May 10, 2025

Abstract:Robot autonomy in unknown, GPS-denied, and complex underground environments requires real-time, robust, and accurate onboard pose estimation and mapping for reliable operations. This becomes particularly challenging in perception-degraded subterranean conditions under harsh environmental factors, including darkness, dust, and geometrically self-similar structures. This paper details CompSLAM, a highly resilient and hierarchical multi-modal localization and mapping framework designed to address these challenges. Its flexible architecture achieves resilience through redundancy by leveraging the complementary nature of pose estimates derived from diverse sensor modalities. Developed during the DARPA Subterranean Challenge, CompSLAM was successfully deployed on all aerial, legged, and wheeled robots of Team Cerberus during their competition-winning final run. Furthermore, it has proven to be a reliable odometry and mapping solution in various subsequent projects, with extensions enabling multi-robot map sharing for marsupial robotic deployments and collaborative mapping. This paper also introduces a comprehensive dataset acquired by a manually teleoperated quadrupedal robot, covering a significant portion of the DARPA Subterranean Challenge finals course. This dataset evaluates CompSLAM's robustness to sensor degradations as the robot traverses 740 meters in an environment characterized by highly variable geometries and demanding lighting conditions. The CompSLAM code and the DARPA SubT Finals dataset are made publicly available for the benefit of the robotics community.

Holistic Fusion: Task- and Setup-Agnostic Robot Localization and State Estimation with Factor Graphs

Apr 08, 2025

Abstract:Seamless operation of mobile robots in challenging environments requires low-latency local motion estimation (e.g., dynamic maneuvers) and accurate global localization (e.g., wayfinding). While most existing sensor-fusion approaches are designed for specific scenarios, this work introduces a flexible open-source solution for task- and setup-agnostic multimodal sensor fusion that is distinguished by its generality and usability. Holistic Fusion formulates sensor fusion as a combined estimation problem of i) the local and global robot state and ii) a (theoretically unlimited) number of dynamic context variables, including automatic alignment of reference frames; this formulation fits countless real-world applications without any conceptual modifications. The proposed factor-graph solution enables the direct fusion of an arbitrary number of absolute, local, and landmark measurements expressed with respect to different reference frames by explicitly including them as states in the optimization and modeling their evolution as random walks. Moreover, local smoothness and consistency receive particular attention to prevent jumps in the robot state belief. HF enables low-latency and smooth online state estimation on typical robot hardware while simultaneously providing low-drift global localization at the IMU measurement rate. The efficacy of this released framework is demonstrated in five real-world scenarios on three robotic platforms, each with distinct task requirements.

Autonomous Robotic Radio Source Localization via a Novel Gaussian Mixture Filtering Approach

Mar 13, 2025

Abstract:This study proposes a new Gaussian Mixture Filter (GMF) to improve the estimation performance for the autonomous robotic radio signal source search and localization problem in unknown environments. The proposed filter is first tested on a benchmark numerical problem to validate its performance against other state-of-practice approaches such as Particle Gaussian Mixture (PGM) filters and the Particle Filter (PF). The proposed approach is then tested and compared against PF and PGM filters in real-world robotic field experiments to validate its impact on real-world robotic applications. The considered real-world scenarios have partial observability due to range-only measurements, as well as uncertainty in the measurement model. The results show that the proposed filter can handle this partial observability effectively whilst showing improved performance compared to PF, reducing the computation requirements while demonstrating improved robustness over compared techniques.

Few-shot Semantic Learning for Robust Multi-Biome 3D Semantic Mapping in Off-Road Environments

Nov 10, 2024

Abstract:Off-road environments pose significant perception challenges for high-speed autonomous navigation due to unstructured terrain, degraded sensing conditions, and domain-shifts among biomes. Learning semantic information across these conditions and biomes can be challenging when a large amount of ground truth data is required. In this work, we propose an approach that leverages a pre-trained Vision Transformer (ViT) with fine-tuning on a small (<500 images), sparse and coarsely labeled (<30% pixels) multi-biome dataset to predict 2D semantic segmentation classes. These classes are fused over time via a novel range-based metric and aggregated into a 3D semantic voxel map. We demonstrate zero-shot out-of-biome 2D semantic segmentation on the Yamaha (52.9 mIoU) and Rellis (55.5 mIoU) datasets along with few-shot coarse sparse labeling with existing data for improved segmentation performance on Yamaha (66.6 mIoU) and Rellis (67.2 mIoU). We further illustrate the feasibility of using a voxel map with a range-based semantic fusion approach to handle common off-road hazards like pop-up hazards, overhangs, and water features.
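The mIoU figures quoted in this abstract are mean intersection-over-union scores. As a minimal sketch of how such a score is commonly computed (not the authors' evaluation code), the example below works on flat lists of per-pixel class ids. The function name `mean_iou`, the `ignore_label` convention for unlabeled pixels, and the choice to average only over classes that actually occur are all assumptions made for illustration.

```python
def mean_iou(pred, target, num_classes, ignore_label=-1):
    """Mean intersection-over-union across classes (hypothetical helper).

    pred, target: equal-length sequences of integer class ids per pixel.
    Pixels whose target equals ignore_label are skipped, which mirrors how
    sparse labels are often handled (an assumption, not the paper's protocol).
    """
    intersection = [0] * num_classes
    union = [0] * num_classes
    for p, t in zip(pred, target):
        if t == ignore_label:
            continue  # unlabeled pixel: contributes to no class
        if p == t:
            # Correct pixel: counts once in both intersection and union.
            intersection[t] += 1
            union[t] += 1
        else:
            # Wrong pixel: enlarges the union of both classes involved.
            union[p] += 1
            union[t] += 1
    # Average only over classes that occur in the prediction or the labels.
    ious = [i / u for i, u in zip(intersection, union) if u > 0]
    return sum(ious) / len(ious) if ious else float("nan")


if __name__ == "__main__":
    pred = [0, 0, 1, 1, 2, 2]
    target = [0, 1, 1, 1, 2, -1]  # last pixel is unlabeled
    print(f"mIoU: {100.0 * mean_iou(pred, target, num_classes=3):.1f}")
```

Benchmarks differ in how they treat absent classes and unlabeled pixels, so scores computed this way are only comparable to published numbers when the same conventions are used.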

Enabling Novel Mission Operations and Interactions with ROSA: The Robot Operating System Agent

Oct 09, 2024

Abstract:The advancement of robotic systems has revolutionized numerous industries, yet their operation often demands specialized technical knowledge, limiting accessibility for non-expert users. This paper introduces ROSA (Robot Operating System Agent), an AI-powered agent that bridges the gap between the Robot Operating System (ROS) and natural language interfaces. By leveraging state-of-the-art language models and integrating open-source frameworks, ROSA enables operators to interact with robots using natural language, translating commands into actions and interfacing with ROS through well-defined tools. ROSA's design is modular and extensible, offering seamless integration with both ROS1 and ROS2, along with safety mechanisms like parameter validation and constraint enforcement to ensure secure, reliable operations. While ROSA was originally designed for ROS, it can be extended to work with other robotics middleware to maximize compatibility across missions. ROSA enhances human-robot interaction by democratizing access to complex robotic systems, empowering users of all expertise levels with multi-modal capabilities such as speech integration and visual perception. Ethical considerations are thoroughly addressed, guided by foundational principles like Asimov's Three Laws of Robotics, ensuring that AI integration promotes safety, transparency, privacy, and accountability. By making robotic technology more user-friendly and accessible, ROSA not only improves operational efficiency but also sets a new standard for responsible AI use in robotics and potentially future mission operations. This paper introduces ROSA's architecture and showcases initial mock-up operations in JPL's Mars Yard, a laboratory, and a simulation using three different robots. The core ROSA library is available as open-source.

Robust High-Speed State Estimation for Off-road Navigation using Radar Velocity Factors

Sep 17, 2024

Abstract:Enabling robot autonomy in complex environments for mission-critical applications requires robust state estimation, particularly under conditions where the exteroceptive sensors on which navigation depends can be degraded by environmental challenges, leading to mission failure. It is precisely under such challenges that the potential of FMCW radar sensors is highlighted: as a complementary exteroceptive sensing modality with direct velocity measuring capabilities. In this work we integrate radial speed measurements from an FMCW radar sensor, using a radial speed factor, to provide linear velocity updates into a sliding-window state estimator for fusion with LiDAR pose and IMU measurements. We demonstrate that this augmentation increases the robustness of the state estimator to challenging conditions present in the environment and the negative effects they can pose to vulnerable exteroceptive modalities. The proposed method is extensively evaluated using robotic field experiments conducted using an autonomous, full-scale, off-road vehicle operating at high speeds (~12 m/s) in complex desert environments. Furthermore, the robustness of the approach is demonstrated for cases of both simulated and real-world degradation of the LiDAR odometry performance along with comparison against state-of-the-art methods for radar-inertial odometry on public datasets.
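To illustrate why radial speed measurements constrain linear velocity, the sketch below uses a common Doppler model for static targets: a target seen along unit direction d from a sensor moving with velocity v produces a radial speed of -d·v. This is a standalone least-squares illustration, not the paper's radial speed factor or its sliding-window estimator. The sign convention, the static-scene assumption, and all function names (`radial_speed`, `estimate_velocity`, `solve3`, `normalize`) are assumptions introduced here.

```python
import math


def normalize(vec):
    """Return vec scaled to unit length."""
    norm = math.sqrt(sum(c * c for c in vec))
    return [c / norm for c in vec]


def radial_speed(direction, velocity):
    """Predicted radial speed of a static target along unit vector `direction`.

    Assumed convention: a target the sensor moves toward has negative speed.
    """
    return -sum(d * v for d, v in zip(direction, velocity))


def radial_speed_residual(direction, velocity, measured):
    """Measured minus predicted radial speed; the quantity a factor would penalize."""
    return measured - radial_speed(direction, velocity)


def solve3(a, b):
    """Solve a 3x3 linear system by Gaussian elimination with partial pivoting."""
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("directions do not constrain all three velocity axes")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, 3):
            factor = m[r][col] / m[col][col]
            for c in range(col, 4):
                m[r][c] -= factor * m[col][c]
    x = [0.0, 0.0, 0.0]
    for i in reversed(range(3)):
        tail = sum(m[i][j] * x[j] for j in range(i + 1, 3))
        x[i] = (m[i][3] - tail) / m[i][i]
    return x


def estimate_velocity(directions, speeds):
    """Least-squares sensor velocity from radial speeds of static targets.

    Solves the normal equations (D^T D) v = -D^T r, where the rows of D are
    the unit directions and r holds the measured radial speeds.
    """
    dtd = [[sum(d[i] * d[j] for d in directions) for j in range(3)] for i in range(3)]
    dtr = [-sum(d[i] * s for d, s in zip(directions, speeds)) for i in range(3)]
    return solve3(dtd, dtr)


if __name__ == "__main__":
    v_true = [12.0, 0.5, -0.2]  # m/s, illustrative values only
    dirs = [normalize(d) for d in ([1, 0, 0], [0, 1, 0], [0, 0, 1], [1, 1, 0], [1, 0, 1])]
    speeds = [radial_speed(d, v_true) for d in dirs]
    print(estimate_velocity(dirs, speeds))
```

In a real pipeline the detections would be noisy and may include moving objects, so an outlier-rejection step would normally precede any such fit; that step is omitted here.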

RoadRunner M&M -- Learning Multi-range Multi-resolution Traversability Maps for Autonomous Off-road Navigation

Sep 17, 2024

Abstract:Autonomous robot navigation in off-road environments requires a comprehensive understanding of the terrain geometry and traversability. The degraded perceptual conditions and sparse geometric information at longer ranges make the problem challenging, especially when driving at high speeds. Furthermore, the sensing-to-mapping latency and the look-ahead map range can limit the maximum speed of the vehicle. Building on top of the recent work RoadRunner, in this work, we address the challenge of long-range (100 m) traversability estimation. Our RoadRunner (M&M) is an end-to-end learning-based framework that directly predicts the traversability and elevation maps at multiple ranges (50 m, 100 m) and resolutions (0.2 m, 0.8 m) taking as input multiple images and a LiDAR voxel map. Our method is trained in a self-supervised manner by leveraging the dense supervision signal generated by fusing predictions from an existing traversability estimation stack (X-Racer) in hindsight and satellite Digital Elevation Maps. RoadRunner M&M achieves a significant improvement of up to 50% for elevation mapping and 30% for traversability estimation over RoadRunner, and is able to predict in 30% more regions compared to X-Racer while achieving real-time performance. Experiments on various out-of-distribution datasets also demonstrate that our data-driven approach starts to generalize to novel unstructured environments. We integrate our proposed framework in closed-loop with the path planner to demonstrate autonomous high-speed off-road robotic navigation in challenging real-world environments. Project Page: https://leggedrobotics.github.io/roadrunner_mm/

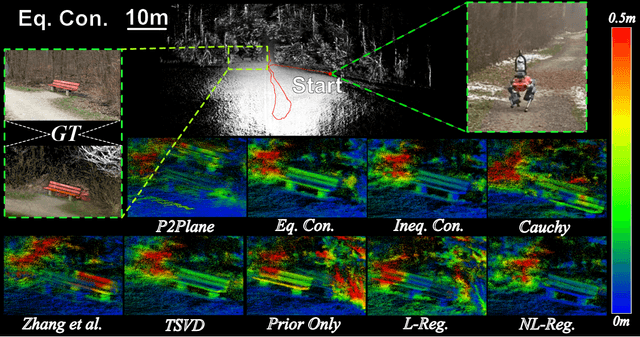

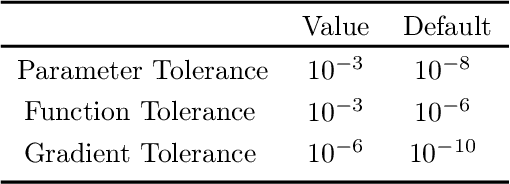

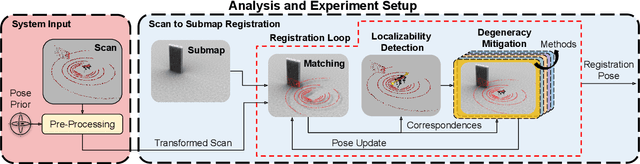

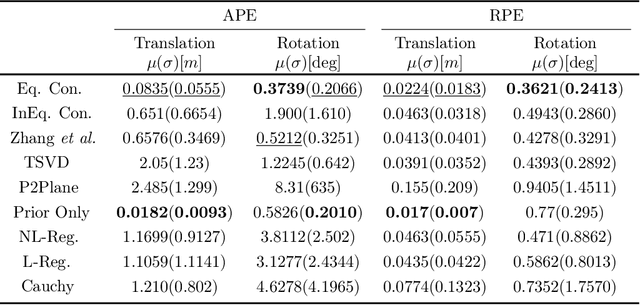

Informed, Constrained, Aligned: A Field Analysis on Degeneracy-aware Point Cloud Registration in the Wild

Aug 21, 2024

Abstract:The ICP registration algorithm has been a preferred method for LiDAR-based robot localization for nearly a decade. However, even in modern SLAM solutions, ICP can degrade and become unreliable in geometrically ill-conditioned environments. Current solutions primarily focus on utilizing additional sources of information, such as external odometry, to either replace the degenerate directions of the optimization solution or add additional constraints in a sensor-fusion setup afterward. In response, this work investigates and compares new and existing degeneracy mitigation methods for robust LiDAR-based localization and analyzes the efficacy of these approaches in degenerate environments for the first time in the literature at this scale. Specifically, this work proposes and investigates i) the incorporation of different types of constraints into the ICP algorithm, ii) the effect of using active or passive degeneracy mitigation techniques, and iii) the choice of utilizing global point cloud registration methods on the ill-conditioned ICP problem in LiDAR degenerate environments. The study results are validated through multiple real-world field and simulated experiments. The analysis shows that active optimization degeneracy mitigation is necessary and advantageous in the absence of reliable external estimate assistance for LiDAR-SLAM. Furthermore, introducing degeneracy-aware hard constraints in the optimization before or during the optimization is shown to perform better in the wild than by including the constraints after. Moreover, with heuristic fine-tuned parameters, soft constraints can provide equal or better results in complex ill-conditioned scenarios. The implementations used in the analysis of this work are made publicly available to the community.

RoadRunner - Learning Traversability Estimation for Autonomous Off-road Driving

Mar 03, 2024

Abstract:Autonomous navigation at high speeds in off-road environments necessitates robots to comprehensively understand their surroundings using onboard sensing only. The extreme conditions posed by the off-road setting can cause degraded camera image quality due to poor lighting and motion blur, as well as limited sparse geometric information available from LiDAR sensing when driving at high speeds. In this work, we present RoadRunner, a novel framework capable of predicting terrain traversability and an elevation map directly from camera and LiDAR sensor inputs. RoadRunner enables reliable autonomous navigation, by fusing sensory information, handling of uncertainty, and generation of contextually informed predictions about the geometry and traversability of the terrain while operating at low latency. In contrast to existing methods relying on classifying handcrafted semantic classes and using heuristics to predict traversability costs, our method is trained end-to-end in a self-supervised fashion. The RoadRunner network architecture builds upon popular sensor fusion network architectures from the autonomous driving domain, which embed LiDAR and camera information into a common Bird's Eye View perspective. Training is enabled by utilizing an existing traversability estimation stack to generate training data in hindsight in a scalable manner from real-world off-road driving datasets. Furthermore, RoadRunner improves the system latency by a factor of roughly 4, from 500 ms to 140 ms, while improving the accuracy for traversability costs and elevation map predictions. We demonstrate the effectiveness of RoadRunner in enabling safe and reliable off-road navigation at high speeds in multiple real-world driving scenarios through unstructured desert environments.

Pixel to Elevation: Learning to Predict Elevation Maps at Long Range using Images for Autonomous Offroad Navigation

Jan 30, 2024

Abstract:Understanding terrain topology at long range is crucial for the success of off-road robotic missions, especially when navigating at high speeds. LiDAR sensors, which are currently heavily relied upon for geometric mapping, provide sparse measurements when mapping at greater distances. To address this challenge, we present a novel learning-based approach capable of predicting terrain elevation maps at long range using only onboard egocentric images in real-time. Our proposed method comprises three main elements. First, a transformer-based encoder is introduced that learns cross-view associations between the egocentric views and prior bird-eye-view elevation map predictions. Second, an orientation-aware positional encoding is proposed to incorporate the 3D vehicle pose information over complex unstructured terrain with multi-view visual image features. Lastly, a history-augmented learnable map embedding is proposed to achieve better temporal consistency between elevation map predictions to facilitate the downstream navigational tasks. We experimentally validate the applicability of our proposed approach for autonomous offroad robotic navigation in complex and unstructured terrain using real-world offroad driving data. Furthermore, the method is qualitatively and quantitatively compared against the current state-of-the-art methods. Extensive field experiments demonstrate that our method surpasses baseline models in accurately predicting terrain elevation while effectively capturing the overall terrain topology at long range. Finally, ablation studies are conducted to highlight and understand the effect of key components of the proposed approach and validate their suitability to improve offroad robotic navigation capabilities.
