Rohan Thakker

Risk-aware Integrated Task and Motion Planning for Versatile Snake Robots under Localization Failures

Feb 27, 2025

Abstract:Snake robots enable mobility through extreme terrains and confined environments in terrestrial and space applications. However, robust perception and localization for snake robots remain an open challenge due to the proximity of the sensor payload to the ground coupled with a limited field of view. To address this issue, we propose Blind-motion with Intermittently Scheduled Scans (BLISS) which combines proprioception-only mobility with intermittent scans to be resilient against both localization failures and collision risks. BLISS is formulated as an integrated Task and Motion Planning (TAMP) problem that leads to a Chance-Constrained Hybrid Partially Observable Markov Decision Process (CC-HPOMDP), known to be computationally intractable due to the curse of history. Our novelty lies in reformulating CC-HPOMDP as a tractable, convex Mixed Integer Linear Program. This allows us to solve BLISS-TAMP significantly faster and jointly derive optimal task-motion plans. Simulations and hardware experiments on the EELS snake robot show our method achieves over an order of magnitude computational improvement compared to state-of-the-art POMDP planners and more than 50% better navigation time optimality versus classical two-stage planners.

Enabling Novel Mission Operations and Interactions with ROSA: The Robot Operating System Agent

Oct 09, 2024

Abstract:The advancement of robotic systems has revolutionized numerous industries, yet their operation often demands specialized technical knowledge, limiting accessibility for non-expert users. This paper introduces ROSA (Robot Operating System Agent), an AI-powered agent that bridges the gap between the Robot Operating System (ROS) and natural language interfaces. By leveraging state-of-the-art language models and integrating open-source frameworks, ROSA enables operators to interact with robots using natural language, translating commands into actions and interfacing with ROS through well-defined tools. ROSA's design is modular and extensible, offering seamless integration with both ROS1 and ROS2, along with safety mechanisms like parameter validation and constraint enforcement to ensure secure, reliable operations. While ROSA was originally designed for ROS, it can be extended to work with other robotics middleware to maximize compatibility across missions. ROSA enhances human-robot interaction by democratizing access to complex robotic systems, empowering users of all expertise levels with multi-modal capabilities such as speech integration and visual perception. Ethical considerations are thoroughly addressed, guided by foundational principles like Asimov's Three Laws of Robotics, ensuring that AI integration promotes safety, transparency, privacy, and accountability. By making robotic technology more user-friendly and accessible, ROSA not only improves operational efficiency but also sets a new standard for responsible AI use in robotics and potentially future mission operations. This paper introduces ROSA's architecture and showcases initial mock-up operations in JPL's Mars Yard, a laboratory, and a simulation using three different robots. The core ROSA library is available as open-source.

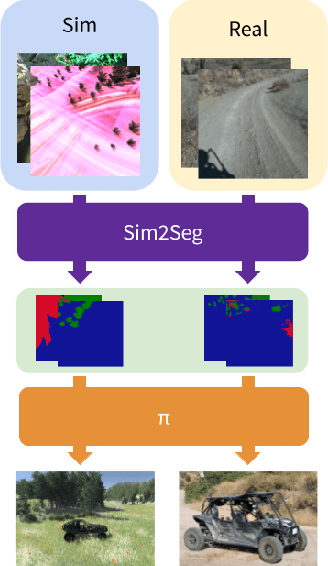

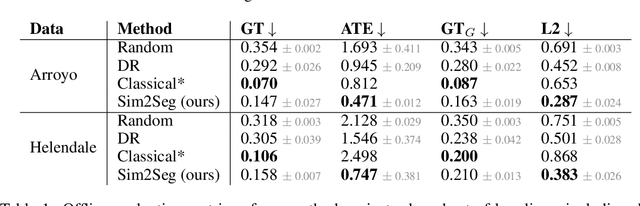

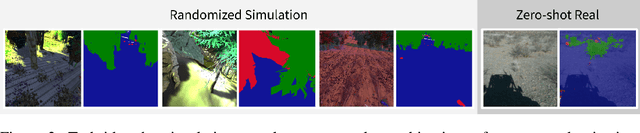

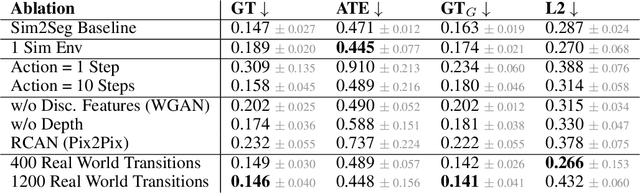

Sim-to-Real via Sim-to-Seg: End-to-end Off-road Autonomous Driving Without Real Data

Oct 25, 2022

Abstract:Autonomous driving is complex, requiring sophisticated 3D scene understanding, localization, mapping, and control. Rather than explicitly modelling and fusing each of these components, we instead consider an end-to-end approach via reinforcement learning (RL). However, collecting exploration driving data in the real world is impractical and dangerous. While training in simulation and deploying visual sim-to-real techniques has worked well for robot manipulation, deploying beyond controlled workspace viewpoints remains a challenge. In this paper, we address this challenge by presenting Sim2Seg, a re-imagining of RCAN that crosses the visual reality gap for off-road autonomous driving, without using any real-world data. This is done by learning to translate randomized simulation images into simulated segmentation and depth maps, subsequently enabling real-world images to also be translated. This allows us to train an end-to-end RL policy in simulation, and directly deploy it in the real world. Our approach, which can be trained in 48 hours on 1 GPU, can perform as well as a classical perception and control stack that took thousands of engineering hours over several months to build. We hope this work motivates future end-to-end autonomous driving research.

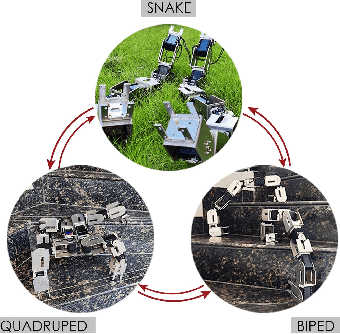

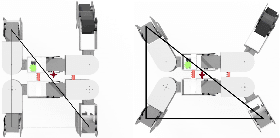

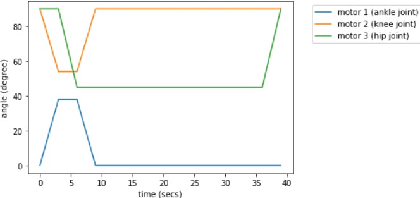

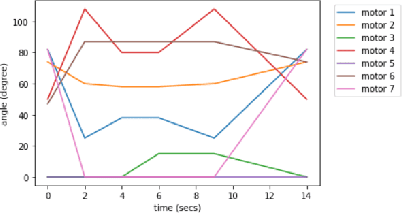

ReQuBiS -- Reconfigurable Quadrupedal-Bipedal Snake Robots

Jul 02, 2021

Abstract:The selection of mobility modes for robot navigation involves various trade-offs. Snake robots are ideal for traversing through constrained environments such as pipes, cluttered and rough terrain, whereas bipedal robots are more suited for structured environments such as stairs. Finally, quadruped robots are more stable than bipeds and can carry larger payloads than snakes and bipeds but struggle to navigate soft soil, sand, ice, and constrained environments. A reconfigurable robot can achieve the best of all worlds. Unfortunately, state-of-the-art reconfigurable robots rely on the rearrangement of modules through complicated mechanisms to disassemble and assemble at different places, increasing the size, weight, and power (SWaP) requirements. We propose Reconfigurable Quadrupedal-Bipedal Snake Robots (ReQuBiS), which can transform between mobility modes without rearranging modules and hence require just a single modification mechanism. Furthermore, our design allows the robot to split into two agents to perform tasks in parallel for biped and snake mobility. Experimental results demonstrate these mobility capabilities in snake, quadruped, and biped modes and transitions between them.

NeBula: Quest for Robotic Autonomy in Challenging Environments; TEAM CoSTAR at the DARPA Subterranean Challenge

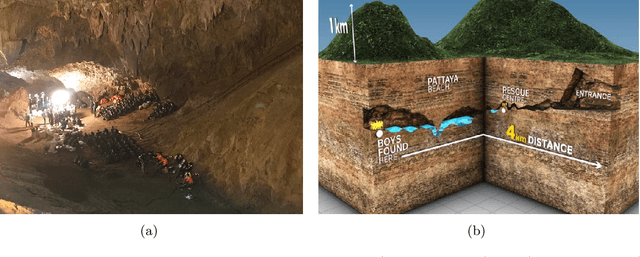

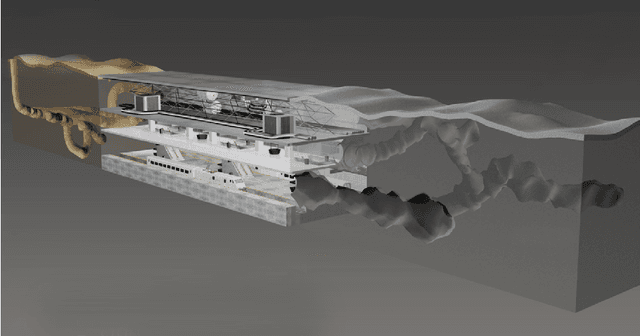

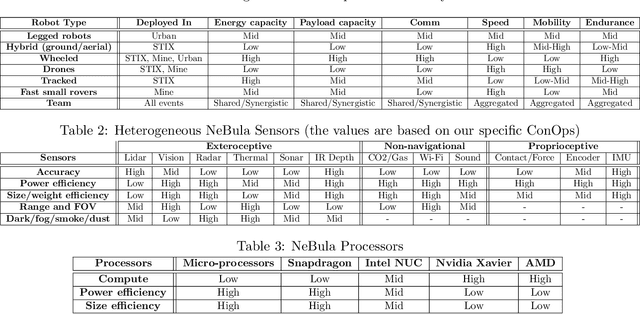

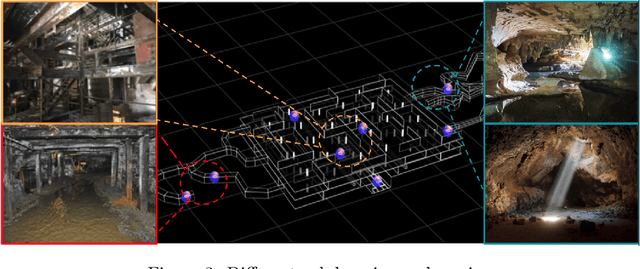

Mar 28, 2021

Abstract:This paper presents and discusses algorithms, hardware, and software architecture developed by TEAM CoSTAR (Collaborative SubTerranean Autonomous Robots), competing in the DARPA Subterranean Challenge. Specifically, it presents the techniques utilized within the Tunnel (2019) and Urban (2020) competitions, where CoSTAR achieved 2nd and 1st place, respectively. We also discuss CoSTAR's demonstrations in Martian-analog surface and subsurface (lava tubes) exploration. The paper introduces our autonomy solution, referred to as NeBula (Networked Belief-aware Perceptual Autonomy). NeBula is an uncertainty-aware framework that aims at enabling resilient and modular autonomy solutions by performing reasoning and decision making in the belief space (space of probability distributions over the robot and world states). We discuss various components of the NeBula framework, including: (i) geometric and semantic environment mapping; (ii) a multi-modal positioning system; (iii) traversability analysis and local planning; (iv) global motion planning and exploration behavior; (v) risk-aware mission planning; (vi) networking and decentralized reasoning; and (vii) learning-enabled adaptation. We discuss the performance of NeBula on several robot types (e.g. wheeled, legged, flying), in various environments. We discuss the specific results and lessons learned from fielding this solution in the challenging courses of the DARPA Subterranean Challenge competition.

Autonomous Off-road Navigation over Extreme Terrains with Perceptually-challenging Conditions

Jan 26, 2021

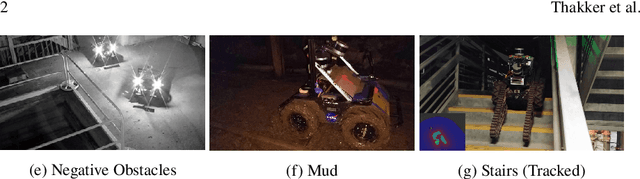

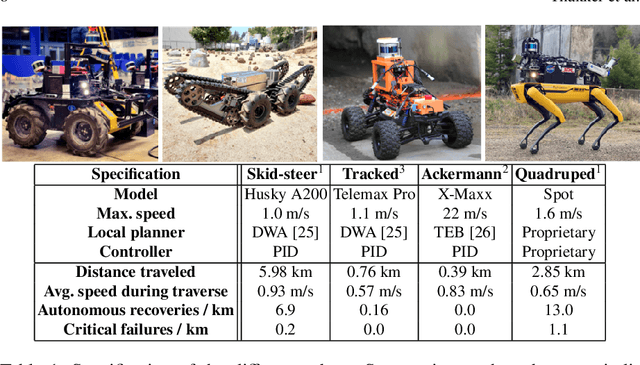

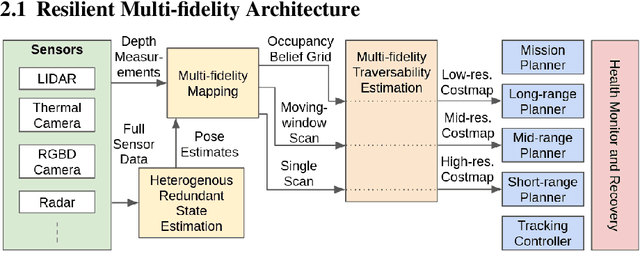

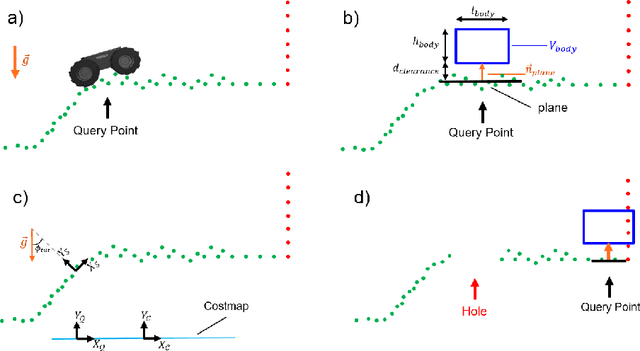

Abstract:We propose a framework for resilient autonomous navigation in perceptually challenging unknown environments with mobility-stressing elements such as uneven surfaces with rocks and boulders, steep slopes, negative obstacles like cliffs and holes, and narrow passages. Environments are GPS-denied and perceptually degraded, with variable lighting from dark to lit and obscurants (dust, fog, smoke). Lack of prior maps and degraded communication eliminate the possibility of prior or off-board computation or operator intervention. This necessitates real-time on-board computation using noisy sensor data. To address these challenges, we propose a resilient architecture that exploits redundancy and heterogeneity in sensing modalities. Further resilience is achieved by triggering recovery behaviors upon failure. We propose a fast settling algorithm to generate robust multi-fidelity traversability estimates in real-time. The proposed approach was deployed on multiple physical systems including skid-steer and tracked robots, a high-speed RC car and legged robots, as part of Team CoSTAR's effort in the DARPA Subterranean Challenge, where the team won 2nd and 1st place in the Tunnel and Urban Circuits, respectively.

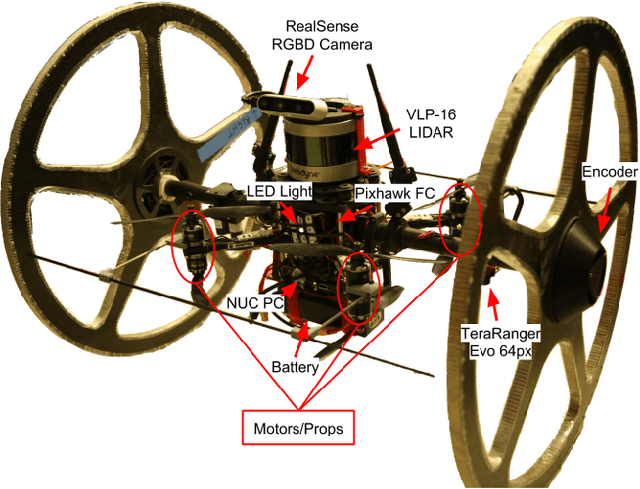

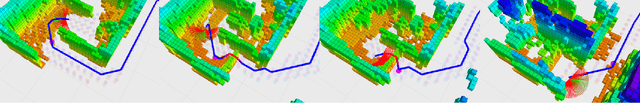

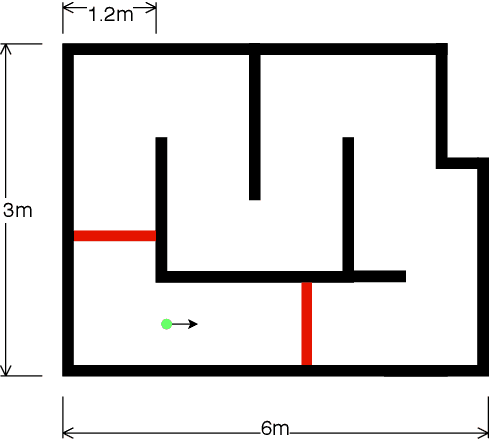

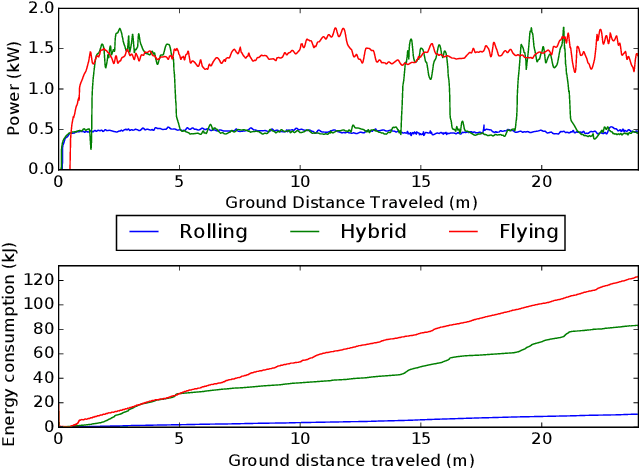

Autonomous Hybrid Ground/Aerial Mobility in Unknown Environments

Sep 11, 2020

Abstract:Hybrid ground and aerial vehicles can possess distinct advantages over ground-only or flight-only designs in terms of energy savings and increased mobility. In this work we outline our unified framework for controls, planning, and autonomy of hybrid ground/air vehicles. Our contribution is three-fold: 1) We develop a control scheme for the control of passive two-wheeled hybrid ground/aerial vehicles. 2) We present a unified planner for both rolling and flying by leveraging differential flatness mappings. 3) We conduct experiments leveraging mapping and global planning for hybrid mobility in unknown environments, showing that hybrid mobility uses up to five times less energy than flying only.

Towards Resilient Autonomous Navigation of Drones

Aug 21, 2020

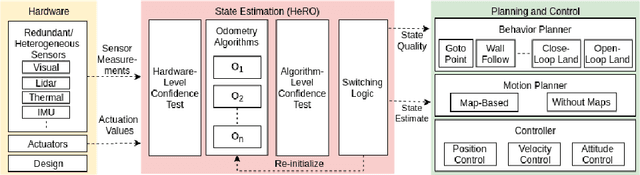

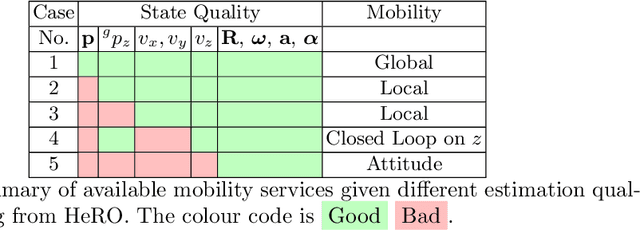

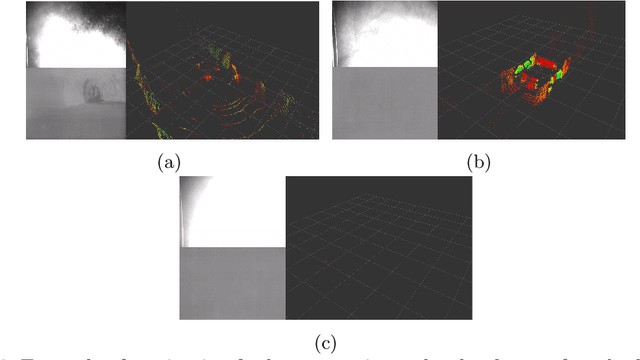

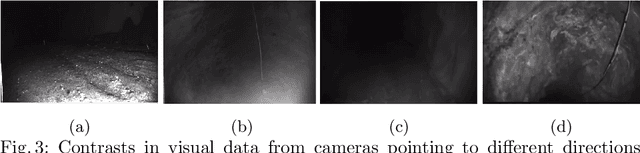

Abstract:Robots and particularly drones are especially useful in exploring extreme environments that pose hazards to humans. To ensure safe operations in these situations, usually perceptually degraded and without good GNSS, it is critical to have a reliable and robust state estimation solution. The main body of literature in robot state estimation focuses on developing complex algorithms favoring accuracy. Typically, these approaches rely on a strong underlying assumption: the main estimation engine will not fail during operation. In contrast, we propose an architecture that pursues robustness in state estimation by considering redundancy and heterogeneity in both sensing and estimation algorithms. The architecture is designed to expect and detect failures and adapt the behavior of the system to ensure safety. To this end, we present HeRO (Heterogeneous Redundant Odometry): a stack of estimation algorithms running in parallel supervised by a resiliency logic. This logic carries out three main functions: a) perform confidence tests both in data quality and algorithm health; b) re-initialize those algorithms that might be malfunctioning; c) generate a smooth state estimate by multiplexing the inputs based on their quality. The state and quality estimates are used by the guidance and control modules to adapt the mobility behaviors of the system. The validation and utility of the approach are shown with real experiments on a flying robot for the use case of autonomous exploration of subterranean environments, with particular results from the STIX event of the DARPA Subterranean Challenge.
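The three functions of the resiliency logic described in this abstract can be illustrated with a minimal Python sketch. This is not the HeRO implementation: the `OdometryReport` structure, the scalar `quality` score in [0, 1], the `min_quality` threshold, and the `multiplex` function are all hypothetical names introduced here for illustration. The sketch also picks the single best source rather than producing a smoothed estimate.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


@dataclass
class OdometryReport:
    """Latest output of one estimator (hypothetical structure)."""
    position: Tuple[float, float, float]
    quality: float  # assumed confidence score in [0, 1]


def multiplex(
    reports: Dict[str, OdometryReport],
    min_quality: float = 0.5,  # assumed health threshold
) -> Tuple[Optional[str], List[str]]:
    """Return (selected source name, names of sources to re-initialize)."""
    # a) Confidence test: keep only sources whose quality passes the threshold.
    healthy = {name: r for name, r in reports.items() if r.quality >= min_quality}
    # b) Flag every source that failed the test for re-initialization.
    to_reinit = sorted(name for name in reports if name not in healthy)
    # c) Multiplex: forward the healthy source with the highest quality.
    selected = max(healthy, key=lambda n: healthy[n].quality) if healthy else None
    return selected, to_reinit


reports = {
    "visual": OdometryReport((0.0, 0.0, 1.0), 0.2),
    "lidar": OdometryReport((0.1, 0.0, 1.0), 0.9),
    "wheel": OdometryReport((0.0, 0.1, 1.0), 0.6),
}
selected, to_reinit = multiplex(reports)
print(selected, to_reinit)
```

When no source passes the test, `selected` is `None`, which a guidance module could treat as a trigger for a conservative fallback behavior.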

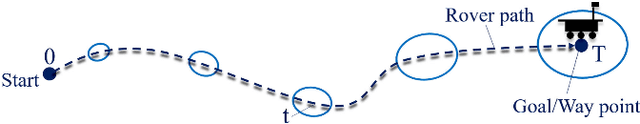

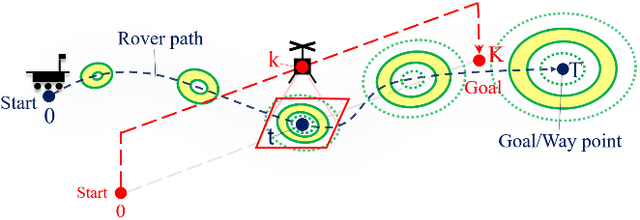

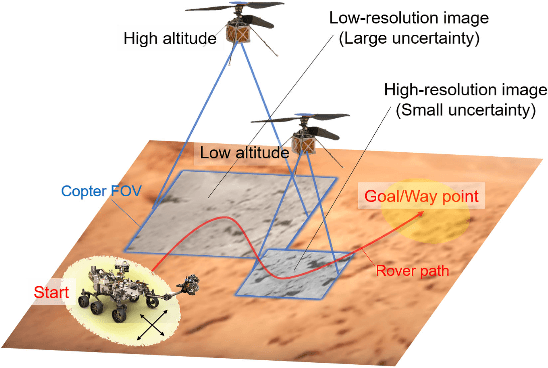

Where to Map? Iterative Rover-Copter Path Planning for Mars Exploration

Aug 17, 2020

Abstract:In addition to conventional ground rovers, the Mars 2020 mission will send a helicopter to Mars. The copter's high-resolution data helps the rover identify small hazards such as steps and pointy rocks, and provides rich textural information useful for predicting perception performance. In this paper, we consider a three-agent system composed of a Mars rover, copter, and orbiter. The objective is to provide good localization to the rover by selecting an optimal path that minimizes the localization uncertainty accumulation during the rover's traverse. To achieve this goal, we quantify the localizability as a goodness measure associated with the map, and conduct a joint-space search over the rover's path and the copter's perceptual actions given prior information from the orbiter. We jointly address where to map by the copter and where to drive by the rover using the proposed iterative copter-rover path planner. We conducted numerical simulations using a map of the Mars 2020 landing site to demonstrate the effectiveness of the proposed planner.

* 8 pages, 7 figures
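The idea of choosing a rover path that minimizes accumulated localization uncertainty can be sketched as a shortest-path search. The example below is a simplified illustration, not the paper's iterative copter-rover planner: it assumes a fixed grid in which each cell carries a non-negative uncertainty growth value (the `growth` grid and the `min_uncertainty_path` function are hypothetical), and it runs Dijkstra's algorithm over 4-connected cells. The copter's mapping actions, which the paper searches over jointly, are not modeled.

```python
import heapq
from typing import Dict, List, Tuple

Cell = Tuple[int, int]


def min_uncertainty_path(
    growth: List[List[float]], start: Cell, goal: Cell
) -> Tuple[float, List[Cell]]:
    """Dijkstra over a grid; entering a cell adds its uncertainty growth."""
    rows, cols = len(growth), len(growth[0])
    best: Dict[Cell, float] = {start: 0.0}
    parent: Dict[Cell, Cell] = {}
    frontier: List[Tuple[float, Cell]] = [(0.0, start)]
    while frontier:
        cost, cell = heapq.heappop(frontier)
        if cell == goal:
            break
        if cost > best[cell]:
            continue  # stale queue entry
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nxt[0] < rows and 0 <= nxt[1] < cols:
                new_cost = cost + growth[nxt[0]][nxt[1]]
                if new_cost < best.get(nxt, float("inf")):
                    best[nxt] = new_cost
                    parent[nxt] = cell
                    heapq.heappush(frontier, (new_cost, nxt))
    # Walk parents back from the goal to recover the path.
    path = [goal]
    while path[-1] != start:
        path.append(parent[path[-1]])
    path.reverse()
    return best[goal], path


# Middle column is poorly localizable (high growth), so the path detours.
growth = [
    [1.0, 9.0, 1.0],
    [1.0, 9.0, 1.0],
    [1.0, 1.0, 1.0],
]
total, path = min_uncertainty_path(growth, (0, 0), (0, 2))
print(total, path)
```

In this toy map the direct route crosses a high-growth cell, so the search returns the longer detour along the bottom row with lower accumulated uncertainty.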

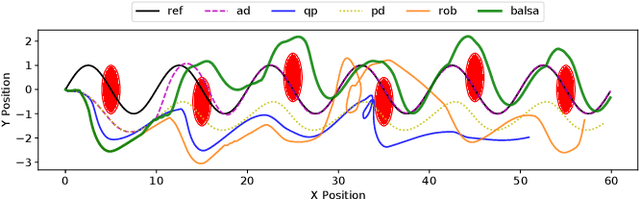

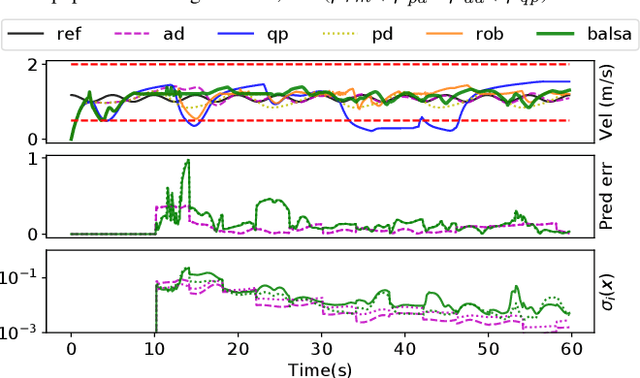

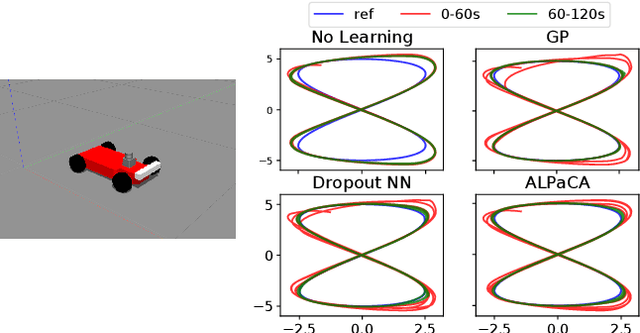

Bayesian Learning-Based Adaptive Control for Safety Critical Systems

Oct 05, 2019

Abstract:Deep learning has enjoyed much recent success, and applying state-of-the-art model learning methods to controls is an exciting prospect. However, there is a strong reluctance to use these methods on safety-critical systems, which have constraints on safety, stability, and real-time performance. We propose a framework which satisfies these constraints while allowing the use of deep neural networks for learning model uncertainties. Central to our method is the use of Bayesian model learning, which provides an avenue for maintaining appropriate degrees of caution in the face of the unknown. In the proposed approach, we develop an adaptive control framework leveraging the theory of stochastic CLFs (Control Lyapunov Functions) and stochastic CBFs (Control Barrier Functions) along with tractable Bayesian model learning via Gaussian Processes or Bayesian neural networks. Under reasonable assumptions, we guarantee stability and safety while adapting to unknown dynamics with probability 1. We demonstrate this architecture for high-speed terrestrial mobility targeting potential applications in safety-critical high-speed Mars rover missions.
