Ali-akbar Agha-mohammadi

Jet Propulsion Lab., California Institute of Technology and

Delay-Aware Diffusion Policy: Bridging the Observation-Execution Gap in Dynamic Tasks

Dec 08, 2025

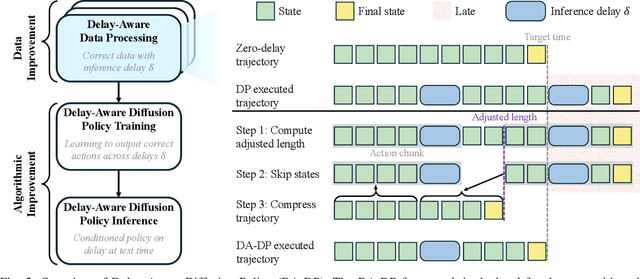

Abstract:As a robot senses and selects actions, the world keeps changing. This inference delay creates a gap of tens to hundreds of milliseconds between the observed state and the state at execution. In this work, we take the natural generalization from zero delay to measured delay during training and inference. We introduce Delay-Aware Diffusion Policy (DA-DP), a framework for explicitly incorporating inference delays into policy learning. DA-DP corrects zero-delay trajectories to their delay-compensated counterparts, and augments the policy with delay conditioning. We empirically validate DA-DP on a variety of tasks, robots, and delays and find its success rate more robust to delay than delay-unaware methods. DA-DP is architecture agnostic and transfers beyond diffusion policies, offering a general pattern for delay-aware imitation learning. More broadly, DA-DP encourages evaluation protocols that report performance as a function of measured latency, not just task difficulty.

StageACT: Stage-Conditioned Imitation for Robust Humanoid Door Opening

Sep 16, 2025Abstract:Humanoid robots promise to operate in everyday human environments without requiring modifications to the surroundings. Among the many skills needed, opening doors is essential, as doors are the most common gateways in built spaces and often limit where a robot can go. Door opening, however, poses unique challenges as it is a long-horizon task under partial observability, such as reasoning about the door's unobservable latch state that dictates whether the robot should rotate the handle or push the door. This ambiguity makes standard behavior cloning prone to mode collapse, yielding blended or out-of-sequence actions. We introduce StageACT, a stage-conditioned imitation learning framework that augments low-level policies with task-stage inputs. This effective addition increases robustness to partial observability, leading to higher success rates and shorter completion times. On a humanoid operating in a real-world office environment, StageACT achieves a 55% success rate on previously unseen doors, more than doubling the best baseline. Moreover, our method supports intentional behavior guidance through stage prompting, enabling recovery behaviors. These results highlight stage conditioning as a lightweight yet powerful mechanism for long-horizon humanoid loco-manipulation.

FALCON: Learning Force-Adaptive Humanoid Loco-Manipulation

May 10, 2025Abstract:Humanoid loco-manipulation holds transformative potential for daily service and industrial tasks, yet achieving precise, robust whole-body control with 3D end-effector force interaction remains a major challenge. Prior approaches are often limited to lightweight tasks or quadrupedal/wheeled platforms. To overcome these limitations, we propose FALCON, a dual-agent reinforcement-learning-based framework for robust force-adaptive humanoid loco-manipulation. FALCON decomposes whole-body control into two specialized agents: (1) a lower-body agent ensuring stable locomotion under external force disturbances, and (2) an upper-body agent precisely tracking end-effector positions with implicit adaptive force compensation. These two agents are jointly trained in simulation with a force curriculum that progressively escalates the magnitude of external force exerted on the end effector while respecting torque limits. Experiments demonstrate that, compared to the baselines, FALCON achieves 2x more accurate upper-body joint tracking, while maintaining robust locomotion under force disturbances and achieving faster training convergence. Moreover, FALCON enables policy training without embodiment-specific reward or curriculum tuning. Using the same training setup, we obtain policies that are deployed across multiple humanoids, enabling forceful loco-manipulation tasks such as transporting payloads (0-20N force), cart-pulling (0-100N), and door-opening (0-40N) in the real world.

SayComply: Grounding Field Robotic Tasks in Operational Compliance through Retrieval-Based Language Models

Nov 18, 2024

Abstract:This paper addresses the problem of task planning for robots that must comply with operational manuals in real-world settings. Task planning under these constraints is essential for enabling autonomous robot operation in domains that require adherence to domain-specific knowledge. Current methods for generating robot goals and plans rely on common sense knowledge encoded in large language models. However, these models lack grounding of robot plans to domain-specific knowledge and are not easily transferable between multiple sites or customers with different compliance needs. In this work, we present SayComply, which enables grounding robotic task planning with operational compliance using retrieval-based language models. We design a hierarchical database of operational, environment, and robot embodiment manuals and procedures to enable efficient retrieval of the relevant context under the limited context length of the LLMs. We then design a task planner using a tree-based retrieval augmented generation (RAG) technique to generate robot tasks that follow user instructions while simultaneously complying with the domain knowledge in the database. We demonstrate the benefits of our approach through simulations and hardware experiments in real-world scenarios that require precise context retrieval across various types of context, outperforming the standard RAG method. Our approach bridges the gap in deploying robots that consistently adhere to operational protocols, offering a scalable and edge-deployable solution for ensuring compliance across varied and complex real-world environments. Project website: saycomply.github.io.

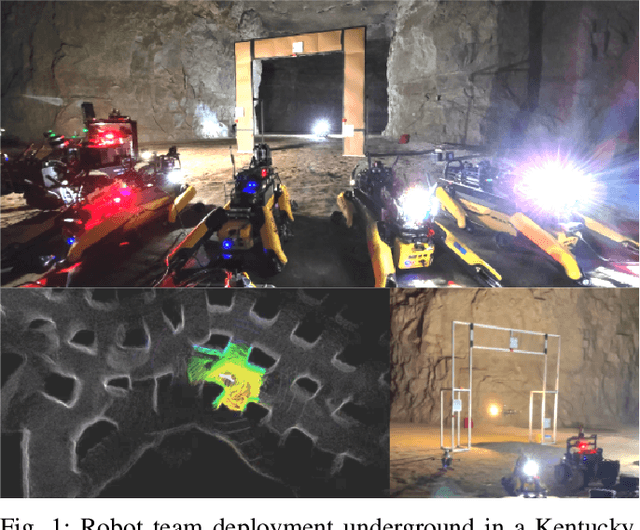

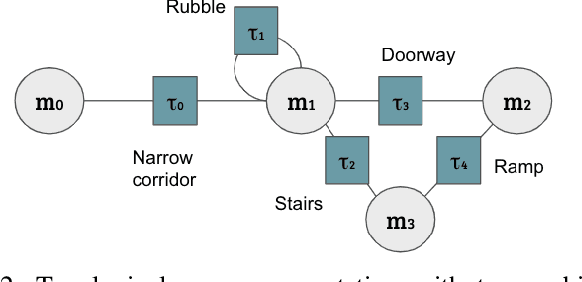

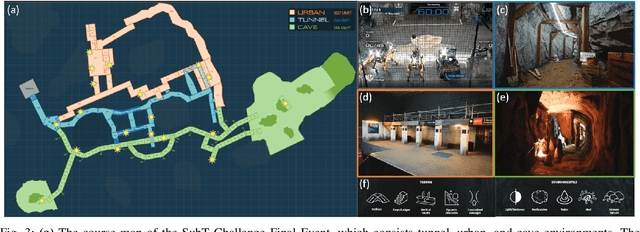

Capability-aware Task Allocation and Team Formation Analysis for Cooperative Exploration of Complex Environments

Nov 01, 2024

Abstract:To achieve autonomy in complex real-world exploration missions, we consider deployment strategies for a team of robots with heterogeneous autonomy capabilities. In this work, we formulate a multi-robot exploration mission and compute an operation policy to maintain robot team productivity and maximize mission rewards. The environment description, robot capability, and mission outcome are modeled as a Markov decision process (MDP). We also include constraints in real-world operation, such as sensor failures, limited communication coverage, and mobility-stressing elements. Then, we study the proposed operation model on a real-world scenario in the context of the DARPA Subterranean (SubT) Challenge. The computed deployment policy is also compared against the human-based operation strategy in the final competition of the SubT Challenge. Finally, using the proposed model, we discuss the design trade-off on building a multi-robot team with heterogeneous capabilities.

FRAME: A Modular Framework for Autonomous Map-merging: Advancements in the Field

Apr 27, 2024

Abstract:In this article, a novel approach for merging 3D point cloud maps in the context of egocentric multi-robot exploration is presented. Unlike traditional methods, the proposed approach leverages state-of-the-art place recognition and learned descriptors to efficiently detect overlap between maps, eliminating the need for the time-consuming global feature extraction and feature matching process. The estimated overlapping regions are used to calculate a homogeneous rigid transform, which serves as an initial condition for the GICP point cloud registration algorithm to refine the alignment between the maps. The advantages of this approach include faster processing time, improved accuracy, and increased robustness in challenging environments. Furthermore, the effectiveness of the proposed framework is successfully demonstrated through multiple field missions of robot exploration in a variety of different underground environments.

Low Frequency Sampling in Model Predictive Path Integral Control

Apr 03, 2024Abstract:Sampling-based model-predictive controllers have become a powerful optimization tool for planning and control problems in various challenging environments. In this paper, we show how the default choice of uncorrelated Gaussian distributions can be improved upon with the use of a colored noise distribution. Our choice of distribution allows for the emphasis on low frequency control signals, which can result in smoother and more exploratory samples. We use this frequency-based sampling distribution with Model Predictive Path Integral (MPPI) in both hardware and simulation experiments to show better or equal performance on systems with various speeds of input response.

Staircase Localization for Autonomous Exploration in Urban Environments

Mar 26, 2024

Abstract:A staircase localization method is proposed for robots to explore urban environments autonomously. The proposed method employs a modular design in the form of a cascade pipeline consisting of three modules of stair detection, line segment detection, and stair localization modules. The stair detection module utilizes an object detection algorithm based on deep learning to generate a region of interest (ROI). From the ROI, line segment features are extracted using a deep line segment detection algorithm. The extracted line segments are used to localize a staircase in terms of position, orientation, and stair direction. The stair detection and localization are performed only with a single RGB-D camera. Each component of the proposed pipeline does not need to be designed particularly for staircases, which makes it easy to maintain the whole pipeline and replace each component with state-of-the-art deep learning detection techniques. The results of real-world experiments show that the proposed method can perform accurate stair detection and localization during autonomous exploration for various structured and unstructured upstairs and downstairs with shadows, dirt, and occlusions by artificial and natural objects.

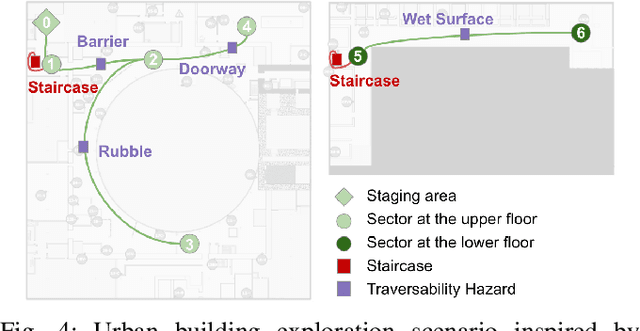

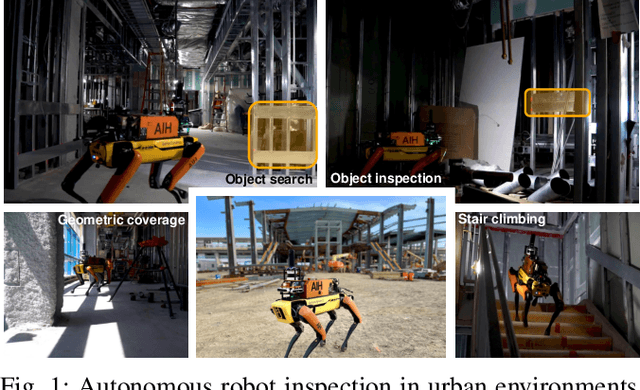

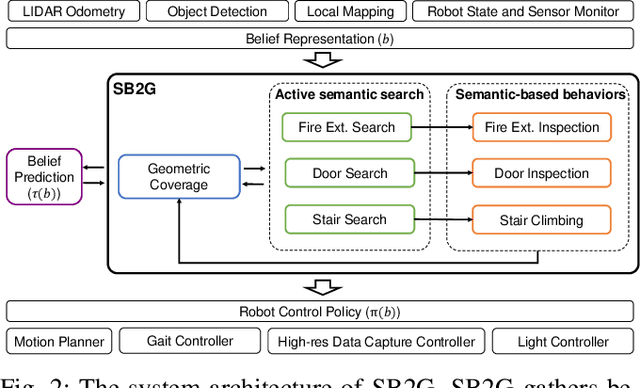

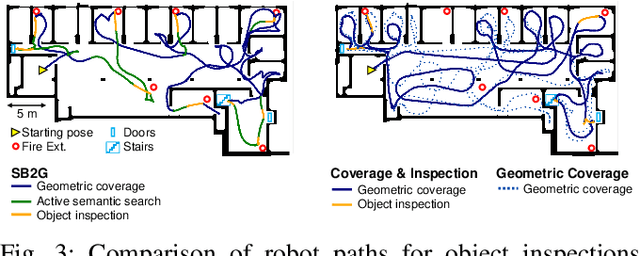

Semantic Belief Behavior Graph: Enabling Autonomous Robot Inspection in Unknown Environments

Jan 30, 2024

Abstract:This paper addresses the problem of autonomous robotic inspection in complex and unknown environments. This capability is crucial for efficient and precise inspections in various real-world scenarios, even when faced with perceptual uncertainty and lack of prior knowledge of the environment. Existing methods for real-world autonomous inspections typically rely on predefined targets and waypoints and often fail to adapt to dynamic or unknown settings. In this work, we introduce the Semantic Belief Behavior Graph (SB2G) framework as a novel approach to semantic-aware autonomous robot inspection. SB2G generates a control policy for the robot, featuring behavior nodes that encapsulate various semantic-based policies designed for inspecting different classes of objects. We design an active semantic search behavior to guide the robot in locating objects for inspection while reducing semantic information uncertainty. The edges in the SB2G encode transitions between these behaviors. We validate our approach through simulation and real-world urban inspections using a legged robotic platform. Our results show that SB2G enables a more efficient inspection policy, exhibiting performance comparable to human-operated inspections.

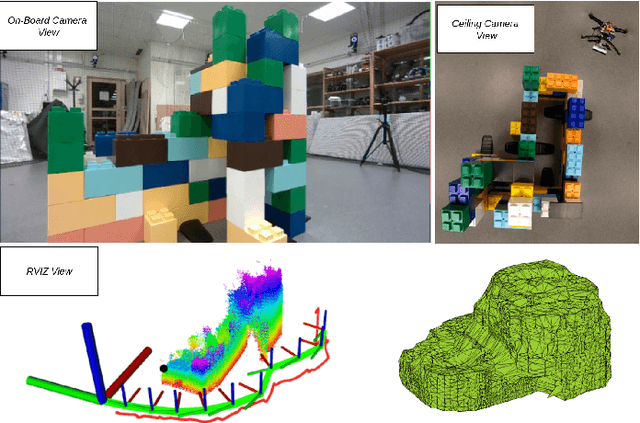

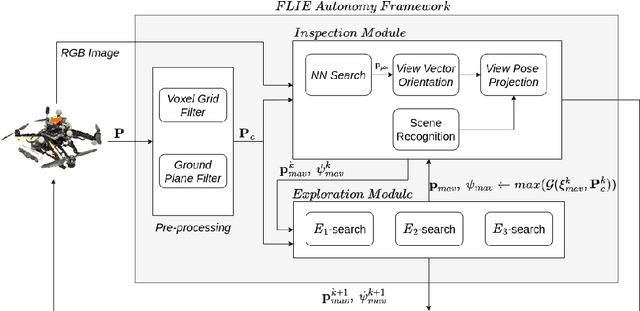

Towards a Reduced Dependency Framework for Autonomous Unified Inspect-Explore Missions

Sep 01, 2023

Abstract:The task of establishing and maintaining situational awareness in an unknown environment is a critical step to fulfil in a mission related to the field of rescue robotics. Predominantly, the problem of visual inspection of urban structures is dealt with view-planning being addressed by map-based approaches. In this article, we propose a novel approach towards effective use of Micro Aerial Vehicles (MAVs) for obtaining a 3-D shape of an unknown structure of objects utilizing a map-independent planning framework. The problem is undertaken via a bifurcated approach to address the task of executing a closer inspection of detected structures with a wider exploration strategy to identify and locate nearby structures, while being equipped with limited sensing capability. The proposed framework is evaluated experimentally in a controlled indoor environment in presence of a mock-up environment validating the efficacy of the proposed inspect-explore policy.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge