Vignesh Kottayam Viswanathan

SPADE: Towards Scalable Path Planning Architecture on Actionable Multi-Domain 3D Scene Graphs

May 25, 2025

Abstract:In this work, we introduce SPADE, a path planning framework designed for autonomous navigation in dynamic environments using 3D scene graphs. SPADE combines hierarchical path planning with local geometric awareness to enable collision-free movement in dynamic scenes. The framework splits the planning problem into two parts: (a) solving the sparse abstract global layer plan and (b) iterative path refinement across denser lower local layers in step with local geometric scene navigation. To ensure efficient extraction of a feasible route in dense multi-task domain scene graphs, the framework enforces informed sampling of traversable edges prior to path planning. This removes information not relevant to path planning and reduces the overall planning complexity over the graph. Existing approaches address the problem of path planning over scene graphs by decoupling the hierarchical and geometric path evaluation processes. This decoupling results in inefficient replanning over the entire scene graph when an obstruction blocks the original route. In contrast, SPADE prioritizes local layer planning coupled with local geometric scene navigation, enabling navigation through dynamic scenes while maintaining efficiency in computing a traversable route. We validate SPADE through extensive simulation experiments and real-world deployment on a quadrupedal robot, demonstrating its efficacy in handling complex and dynamic scenarios.

Estimating Commonsense Scene Composition on Belief Scene Graphs

May 05, 2025

Abstract:This work establishes the concept of commonsense scene composition, with a focus on extending Belief Scene Graphs by estimating the spatial distribution of unseen objects. Specifically, the commonsense scene composition capability refers to the understanding of the spatial relationships among related objects in the scene, which in this article is modeled as a joint probability distribution for all possible locations of the semantic object class. The proposed framework includes two variants of a Correlation Information (CECI) model for learning probability distributions: (i) a baseline approach based on a Graph Convolutional Network, and (ii) a neuro-symbolic extension that integrates a spatial ontology based on Large Language Models (LLMs). Furthermore, this article provides a detailed description of the dataset generation process for such tasks. Finally, the framework has been validated through multiple runs on simulated data, as well as in a real-world indoor environment, demonstrating its ability to spatially interpret scenes across different room types.

xFLIE: Leveraging Actionable Hierarchical Scene Representations for Autonomous Semantic-Aware Inspection Missions

Dec 27, 2024

Abstract:This article presents xFLIE, a fully integrated autonomous inspection architecture based on 3D hierarchical scene graphs. Specifically, we present a tightly coupled solution combining incremental construction of 3D Layered Semantic Graphs (LSG) with their real-time exploitation by a multi-modal autonomy planner, the First-Look based Inspection and Exploration (FLIE) planner, to address the task of inspecting a priori unknown semantic targets of interest in unknown environments. This work aims to address the challenge of maintaining, in addition to or as an alternative to volumetric models, an intuitive scene representation during large-scale inspection missions. The proposed architecture aims to provide a high-level, multi-tiered abstract environment representation while maintaining a tractable foundation for rapid and informed decision-making, enhancing inspection planning through scene understanding (what should it inspect?) and reasoning (why should it inspect?). The proposed LSG framework is designed to leverage the concept of nesting lower local graphs, at multiple layers of abstraction, with the abstract concepts grounded on the functionality of the integrated FLIE planner. Through intuitive scene representation, the proposed architecture offers an easily digestible environment model for human operators, which helps to improve their situational awareness and understanding of the operating environment. We highlight the use-case benefits of hierarchical and semantic path-planning capability over LSG to address queries from the integrated planner as well as the human operator. The validity of the proposed architecture is evaluated in large-scale simulated outdoor urban scenarios and through deployment onboard a Boston Dynamics Spot quadruped robot in extensive outdoor field experiments.

An Actionable Hierarchical Scene Representation Enhancing Autonomous Inspection Missions in Unknown Environments

Dec 27, 2024

Abstract:In this article, we present Layered Semantic Graphs (LSG), a novel actionable hierarchical scene graph, fully integrated with a multi-modal mission planner, FLIE: a First-Look based Inspection and Exploration planner. The novelty of this work lies in maintaining an intuitive, multi-resolution scene representation while simultaneously offering a tractable foundation for planning and scene understanding during an ongoing inspection mission of a priori unknown targets of interest in an unknown environment. The proposed LSG scheme is composed of locally nested hierarchical graphs, at multiple layers of abstraction, with the abstract concepts grounded on the functionality of the integrated FLIE planner. Furthermore, LSG encapsulates real-time semantic segmentation models that offer extraction and localization of desired semantic elements within the hierarchical representation. This extends the capability of the inspection planner, which can then leverage LSG to make an informed decision to inspect a particular semantic of interest. We also emphasize the hierarchical and semantic path-planning capabilities of LSG, which can extend inspection missions by improving situational awareness for human operators in an unknown environment. The validity of the proposed scheme is demonstrated through extensive evaluations of the proposed architecture in simulations, as well as experimental field deployments on a Boston Dynamics Spot quadruped robot in urban outdoor environments.

A Surface Adaptive First-Look Inspection Planner for Autonomous Remote Sensing of Open-Pit Mines

Oct 14, 2024

Abstract:In this work, we present an autonomous inspection framework for remote sensing tasks in active open-pit mines. Specifically, the contributions focus on developing a methodology in which an initial, approximate, operator-defined inspection plan is exploited by an online view-planner to predict an inspection path that can adapt to changes in the current mine-face morphology caused by routine mining activities. The proposed inspection framework leverages instantaneous 3D LiDAR and localization measurements, coupled with a modelled sensor footprint, for view-planning that satisfies desired viewing and photogrammetric conditions. The efficacy of the proposed framework has been demonstrated through simulation in the Feiring-Bruk open-pit mine environment and through hardware-based outdoor experimental trials. A video showcasing the performance of the proposed work can be found here: https://youtu.be/uWWbDfoBvFc

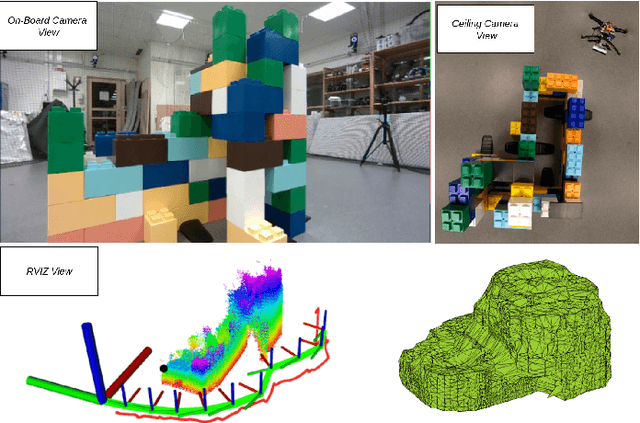

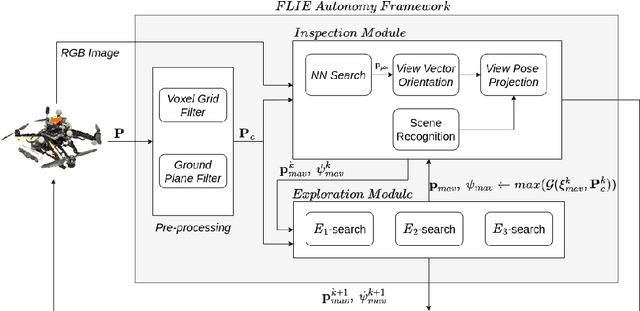

Towards a Reduced Dependency Framework for Autonomous Unified Inspect-Explore Missions

Sep 01, 2023

Abstract:Establishing and maintaining situational awareness in an unknown environment is a critical step in any rescue robotics mission. The problem of visual inspection of urban structures is predominantly handled by map-based view-planning approaches. In this article, we propose a novel approach towards the effective use of Micro Aerial Vehicles (MAVs) for obtaining the 3D shape of an unknown structure using a map-independent planning framework. The problem is tackled via a bifurcated approach that combines a closer inspection of detected structures with a wider exploration strategy to identify and locate nearby structures, while the vehicle is equipped with limited sensing capability. The proposed framework is evaluated experimentally in a controlled indoor environment containing a mock-up structure, validating the efficacy of the proposed inspect-explore policy.

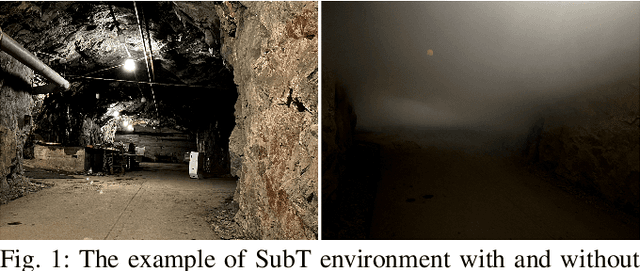

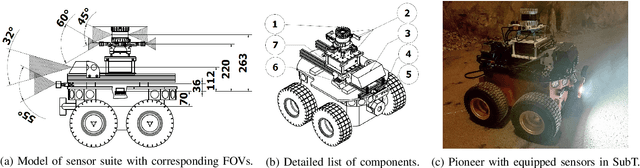

Multimodal Dataset from Harsh Sub-Terranean Environment with Aerosol Particles for Frontier Exploration

Apr 27, 2023

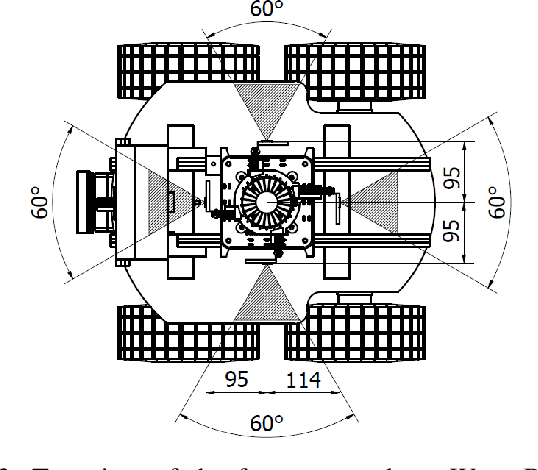

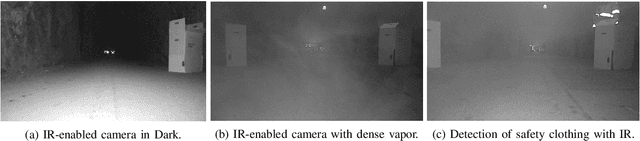

Abstract:Algorithms for autonomous navigation in environments without Global Navigation Satellite System (GNSS) coverage mainly rely on onboard perception systems. These systems commonly incorporate sensors like cameras and LiDARs, the performance of which may degrade in the presence of aerosol particles. Thus, there is a need to fuse data acquired from these sensors with data from RADARs, which can penetrate such particles. Overall, this will improve the performance of localization and collision avoidance algorithms under such environmental conditions. This paper introduces a multimodal dataset from a harsh and unstructured underground environment with aerosol particles. A detailed description of the onboard sensors and of the environment where the dataset was collected is presented to enable full evaluation of the acquired data. Furthermore, the dataset contains synchronized raw data measurements from all onboard sensors in Robot Operating System (ROS) format to facilitate the evaluation of navigation and localization algorithms in such environments. In contrast to existing datasets, the focus of this paper is not only to capture both temporal and spatial data diversity but also to present the impact of harsh conditions on the captured data. Therefore, to validate the dataset, a preliminary comparison of odometry from onboard LiDARs is presented.

Vision Based Docking of Multiple Satellites with an Uncooperative Target

Mar 16, 2023

Abstract:With the ever-growing amount of space debris in orbit, the need to prevent further space population is becoming more and more apparent. Refueling, servicing, inspection and deorbiting of spacecraft are some example missions that require precise navigation and docking in space. Having multiple collaborating robots handle these tasks can greatly increase the efficiency of the mission in terms of time and cost. This article introduces a modern and efficient control architecture for satellites on collaborative docking missions. The proposed architecture uses a centralized scheme that combines state-of-the-art, ad-hoc implementations of algorithms and techniques to maximize robustness and flexibility. It is based on a Model Predictive Controller (MPC) for which an efficient cost function and constraint sets are designed to ensure safe and accurate docking. A simulation environment is also presented to validate and test the proposed control scheme.
