Anton Koval

FRAME: A Modular Framework for Autonomous Map-merging: Advancements in the Field

Apr 27, 2024

Abstract:In this article, a novel approach for merging 3D point cloud maps in the context of egocentric multi-robot exploration is presented. Unlike traditional methods, the proposed approach leverages state-of-the-art place recognition and learned descriptors to efficiently detect overlap between maps, eliminating the need for the time-consuming global feature extraction and feature matching process. The estimated overlapping regions are used to calculate a homogeneous rigid transform, which serves as an initial condition for the GICP point cloud registration algorithm to refine the alignment between the maps. The advantages of this approach include faster processing time, improved accuracy, and increased robustness in challenging environments. Furthermore, the effectiveness of the proposed framework is successfully demonstrated through multiple field missions of robot exploration in a variety of different underground environments.
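As a rough illustration of what "a homogeneous rigid transform used as an initial condition" means, the sketch below builds a 4x4 transform from a yaw angle and a translation and applies it to a point cloud before any refinement step. The function names and the yaw-plus-translation parameterization are assumptions made for this example; the abstract does not state how the transform is estimated, and the GICP refinement itself is not shown.

```python
import math

def rigid_transform(yaw, tx, ty, tz):
    """Build a 4x4 homogeneous rigid transform (rotation about z, then translation).

    Hypothetical helper: the yaw-only rotation is an assumption for illustration.
    """
    c, s = math.cos(yaw), math.sin(yaw)
    return [
        [c, -s, 0.0, tx],
        [s, c, 0.0, ty],
        [0.0, 0.0, 1.0, tz],
        [0.0, 0.0, 0.0, 1.0],
    ]

def apply_transform(T, points):
    """Apply the transform T to a list of (x, y, z) points."""
    moved = []
    for x, y, z in points:
        moved.append(tuple(
            T[r][0] * x + T[r][1] * y + T[r][2] * z + T[r][3] for r in range(3)
        ))
    return moved

# Pre-align one map with the coarse guess; a registration algorithm would refine it.
initial_guess = rigid_transform(math.pi / 2, 1.0, 0.0, 0.0)
aligned = apply_transform(initial_guess, [(1.0, 0.0, 0.0)])
```

A registration algorithm started from such a pre-aligned cloud only has to correct the residual error, which is why a good initial guess matters.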

RecNet: An Invertible Point Cloud Encoding through Range Image Embeddings for Multi-Robot Map Sharing and Reconstruction

Feb 03, 2024

Abstract:In the field of resource-constrained robots and the need for effective place recognition in multi-robotic systems, this article introduces RecNet, a novel approach that concurrently addresses both challenges. The core of RecNet's methodology involves a transformative process: it projects 3D point clouds into depth images, compresses them using an encoder-decoder framework, and subsequently reconstructs the range image, seamlessly restoring the original point cloud. Additionally, RecNet utilizes the latent vector extracted from this process for efficient place recognition tasks. This unique approach not only achieves comparable place recognition results but also maintains a compact representation, suitable for seamless sharing among robots to reconstruct their collective maps. The evaluation of RecNet encompasses an array of metrics, including place recognition performance, structural similarity of the reconstructed point clouds, and the bandwidth transmission advantages, derived from sharing only the latent vectors. This reconstructed map paves a groundbreaking way for exploring its usability in navigation, localization, map-merging, and other relevant missions. Our proposed approach is rigorously assessed using both a publicly available dataset and field experiments, confirming its efficacy and potential for real-world applications.
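The first step described above, projecting a 3D point cloud into a range image, can be sketched with a standard spherical projection. This is a minimal illustration, not RecNet's implementation: the image size, the vertical field of view, and the function name are assumptions, and the encoder-decoder stage is omitted.

```python
import math

def project_to_range_image(points, height=4, width=8,
                           fov_up_deg=15.0, fov_down_deg=-15.0):
    """Project (x, y, z) points onto a height x width range image.

    Each pixel keeps the range of the closest point that lands in it;
    0.0 marks an empty pixel. All parameters are illustrative assumptions.
    """
    fov_up = math.radians(fov_up_deg)
    fov_down = math.radians(fov_down_deg)
    fov = fov_up - fov_down
    image = [[0.0] * width for _ in range(height)]
    for x, y, z in points:
        r = math.sqrt(x * x + y * y + z * z)
        if r == 0.0:
            continue
        yaw = math.atan2(y, x)        # horizontal angle in [-pi, pi]
        pitch = math.asin(z / r)      # vertical angle
        if not (fov_down <= pitch <= fov_up):
            continue                  # outside the sensor's vertical field of view
        u = int(0.5 * (1.0 - yaw / math.pi) * width)   # column index
        v = int((fov_up - pitch) / fov * height)       # row index
        u = min(width - 1, max(0, u))
        v = min(height - 1, max(0, v))
        if image[v][u] == 0.0 or r < image[v][u]:
            image[v][u] = r
    return image

image = project_to_range_image([(1.0, 0.0, 0.0), (2.0, 0.0, 0.0), (0.0, 0.0, 5.0)])
```

Because each pixel stores a range along a known viewing direction, the projection can be inverted approximately, which is what makes a compressed range image usable for reconstructing the point cloud.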

Efficient Real-time Smoke Filtration with 3D LiDAR for Search and Rescue with Autonomous Heterogeneous Robotic Systems

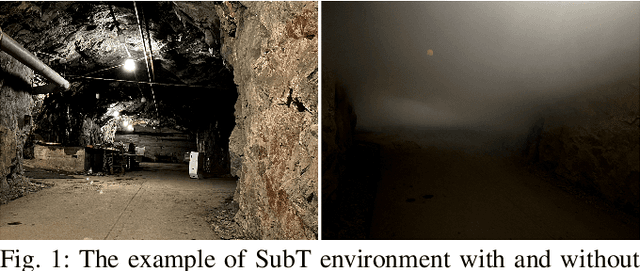

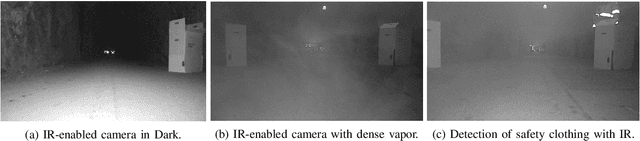

Aug 14, 2023

Abstract:Search and Rescue (SAR) missions in harsh and unstructured Sub-Terranean (Sub-T) environments in the presence of aerosol particles have recently become the main focus in the field of robotics. Aerosol particles such as smoke and dust directly affect the performance of any mobile robotic platform due to their reliance on their onboard perception systems for autonomous navigation and localization in Global Navigation Satellite System (GNSS)-denied environments. Although obstacle avoidance and object detection algorithms are robust to the presence of noise to some degree, their performance directly relies on the quality of captured data by onboard sensors such as Light Detection And Ranging (LiDAR) and camera. Thus, this paper proposes a novel modular agnostic filtration pipeline based on intensity and spatial information such as local point density for removal of detected smoke particles from Point Cloud (PCL) prior to its utilization for collision detection. Furthermore, the efficacy of the proposed framework in the presence of smoke during multiple frontier exploration missions is investigated while the experimental results are presented to facilitate comparison with other methodologies and their computational impact. This provides valuable insight to the research community for better utilization of filtration schemes based on available computation resources while considering the safe autonomous navigation of mobile robots.
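To make the idea of filtering on intensity and local point density concrete, here is a small sketch. The decision rule (a point is treated as smoke when its intensity is low and it has few neighbours within a radius), the thresholds, and the function name are all assumptions for illustration; the abstract does not specify the actual criteria used by the pipeline.

```python
import math

def filter_smoke(points, intensity_max=10.0, radius=0.5, min_neighbors=3):
    """Return the points kept after removing likely smoke returns.

    points: list of (x, y, z, intensity) tuples.
    Assumed rule: low intensity AND sparse neighbourhood => smoke.
    Brute-force neighbour search, fine for a small example only.
    """
    kept = []
    for i, (x, y, z, intensity) in enumerate(points):
        neighbors = sum(
            1
            for j, (qx, qy, qz, _) in enumerate(points)
            if j != i and math.dist((x, y, z), (qx, qy, qz)) <= radius
        )
        is_smoke = intensity < intensity_max and neighbors < min_neighbors
        if not is_smoke:
            kept.append((x, y, z, intensity))
    return kept

# A dense, bright wall segment plus one isolated, dim return.
wall = [(5.0, 0.1 * k, 0.0, 50.0) for k in range(4)]
smoke = [(1.0, 0.0, 0.0, 2.0)]
clean = filter_smoke(wall + smoke)
```

A real-time implementation would replace the quadratic neighbour search with a spatial index such as a voxel grid or Kd-tree.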

Autonomous Point Cloud Segmentation for Power Lines Inspection in Smart Grid

Aug 14, 2023

Abstract:LiDAR is currently one of the most utilized sensors to effectively monitor the status of power lines and facilitate the inspection of remote power distribution networks and related infrastructures. To ensure the safe operation of the smart grid, various remote data acquisition strategies, such as Airborne Laser Scanning (ALS), Mobile Laser Scanning (MLS), and Terrestrial Laser Scanning (TLS) have been leveraged to allow continuous monitoring of regional power networks, which are typically surrounded by dense vegetation. In this article, an unsupervised Machine Learning (ML) framework is proposed to detect, extract and analyze the characteristics of power lines of both high and low voltage, as well as the surrounding vegetation in a Power Line Corridor (PLC), solely from LiDAR data. Initially, the proposed approach separates the ground points from higher elevation points based on statistical analysis that applies density criteria and histogram thresholding. After denoising and transforming the remaining candidate points by applying Principal Component Analysis (PCA) and a Kd-tree, power line segmentation is achieved by utilizing a two-stage DBSCAN clustering to identify each power line individually. Finally, all high elevation points in the PLC are identified based on their distance to the newly segmented power lines. Conducted experiments illustrate that the proposed framework is an agnostic method that can efficiently detect the power lines and perform PLC-based hazard analysis.

Irregular Change Detection in Sparse Bi-Temporal Point Clouds using Learned Place Recognition Descriptors and Point-to-Voxel Comparison

Jul 04, 2023

Abstract:Change detection and irregular object extraction in 3D point clouds is a challenging task that is of high importance not only for autonomous navigation but also for updating existing digital twin models of various industrial environments. This article proposes an innovative approach for change detection in 3D point clouds using deep learned place recognition descriptors and irregular object extraction based on voxel-to-point comparison. The proposed method first aligns the bi-temporal point clouds using a map-merging algorithm in order to establish a common coordinate frame. Then, it utilizes deep learning techniques to extract robust and discriminative features from the 3D point cloud scans, which are used to detect changes between consecutive point cloud frames and therefore find the changed areas. Finally, the altered areas are sampled and compared between the two time instances to extract any obstructions that caused the area to change. The proposed method was successfully evaluated in real-world field experiments, where it was able to detect different types of changes in 3D point clouds, such as object or muck-pile addition and displacement, showcasing the effectiveness of the approach. The results of this study have important implications for various applications, including safety and security monitoring in construction sites, mapping and exploration, and suggest potential future research directions in this field.
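The final comparison step can be illustrated with a simple occupancy test: voxelize the earlier cloud, then flag points of the later cloud that fall into voxels the earlier cloud never occupied. This is only a sketch under the assumption that both clouds are already aligned in a common frame; the voxel size and function names are hypothetical, and the descriptor-based detection of changed areas is not shown.

```python
import math

def voxel_key(point, voxel_size):
    """Map an (x, y, z) point to the integer index of the voxel containing it."""
    return tuple(math.floor(c / voxel_size) for c in point)

def changed_points(reference, current, voxel_size=0.5):
    """Return points of `current` lying in voxels that `reference` does not occupy.

    Assumes both clouds are expressed in the same coordinate frame.
    """
    occupied = {voxel_key(p, voxel_size) for p in reference}
    return [p for p in current if voxel_key(p, voxel_size) not in occupied]

reference = [(0.1, 0.1, 0.1), (1.2, 0.1, 0.1)]
current = [(0.2, 0.2, 0.2), (3.0, 3.0, 0.1)]
added = changed_points(reference, current)
```

Swapping the two arguments yields the points that disappeared rather than the points that were added.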

Multimodal Dataset from Harsh Sub-Terranean Environment with Aerosol Particles for Frontier Exploration

Apr 27, 2023

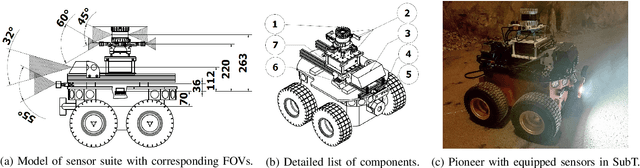

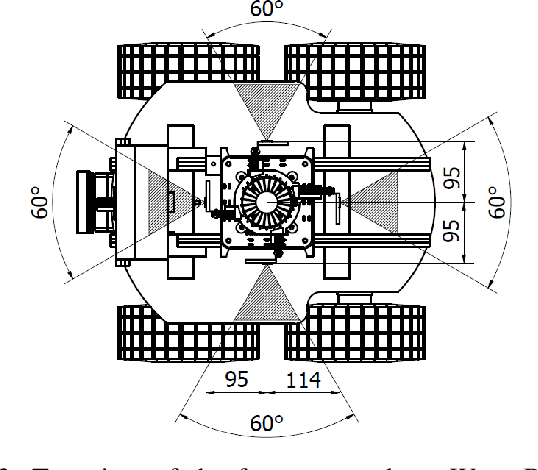

Abstract:Algorithms for autonomous navigation in environments without Global Navigation Satellite System (GNSS) coverage mainly rely on onboard perception systems. These systems commonly incorporate sensors like cameras and LiDARs, the performance of which may degrade in the presence of aerosol particles. Thus, there is a need to fuse the data acquired from these sensors with data from RADARs, which can penetrate such particles. Overall, this will improve the performance of localization and collision avoidance algorithms under such environmental conditions. This paper introduces a multimodal dataset from a harsh and unstructured underground environment with aerosol particles. A detailed description of the onboard sensors and of the environment where the dataset was collected is presented to enable full evaluation of the acquired data. Furthermore, the dataset contains synchronized raw data measurements from all onboard sensors in Robot Operating System (ROS) format to facilitate the evaluation of navigation and localization algorithms in such environments. In contrast to the existing datasets, the focus of this paper is not only to capture both temporal and spatial data diversities but also to present the impact of harsh conditions on captured data. Therefore, to validate the dataset, a preliminary comparison of odometry from onboard LiDARs is presented.

Evaluation of Lidar-based 3D SLAM algorithms in SubT environment

Mar 13, 2023

Abstract:Autonomous navigation of robots in harsh and GPS-denied subterranean (SubT) environments with poor or no natural illumination is a challenging task that fosters the development of algorithms for pose estimation and mapping. Inspired by the need for real-life deployment of autonomous robots in such environments, this article presents an experimental comparative study of 3D SLAM algorithms. The study focuses on state-of-the-art Lidar SLAM algorithms with open-source implementations that are i) lidar-only, like BLAM, LOAM, A-LOAM, ISC-LOAM and hdl graph slam, or ii) lidar-inertial, like LeGO-LOAM, Cartographer, LIO-mapping and LIO-SAM. The evaluation of the methods is performed based on a dataset collected from the Boston Dynamics Spot robot equipped with a Velodyne Puck Lite 3D lidar and a Vectornav VN-100 IMU during a mission in an underground tunnel. In the evaluation process, poses and 3D tunnel reconstructions from the SLAM algorithms are compared against each other to find the methods with the most solid performance in terms of pose accuracy and map quality.
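One simple way to compare the poses produced by two SLAM algorithms is the root-mean-square distance between their time-aligned positions. The sketch below shows that metric only as an example; the abstract does not state which metric the study uses, and the function name and the assumption that both trajectories are sampled at the same timestamps in the same frame are ours.

```python
import math

def translation_rmse(traj_a, traj_b):
    """RMSE between two trajectories given as equal-length lists of (x, y, z).

    Assumes the trajectories are already time-synchronized and expressed
    in a common coordinate frame.
    """
    if not traj_a or len(traj_a) != len(traj_b):
        raise ValueError("trajectories must be non-empty and of equal length")
    squared = [math.dist(a, b) ** 2 for a, b in zip(traj_a, traj_b)]
    return math.sqrt(sum(squared) / len(squared))

slam_a = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
slam_b = [(0.0, 0.0, 0.0), (1.0, 3.0, 4.0)]
error = translation_rmse(slam_a, slam_b)
```

When no ground truth is available, as in an underground tunnel, such pairwise comparisons indicate agreement between methods rather than absolute accuracy.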

FRAME: Fast and Robust Autonomous 3D point cloud Map-merging for Egocentric multi-robot exploration

Jan 24, 2023

Abstract:This article presents a 3D point cloud map-merging framework for egocentric heterogeneous multi-robot exploration, based on overlap detection and alignment, that is independent of a manual initial guess or prior knowledge of the robots' poses. The novel proposed solution utilizes state-of-the-art place recognition learned descriptors, that through the framework's main pipeline, offer a fast and robust region overlap estimation, hence eliminating the need for the time-consuming global feature extraction and feature matching process that is typically used in 3D map integration. The region overlap estimation provides a homogeneous rigid transform that is applied as an initial condition in the point cloud registration algorithm Fast-GICP, which provides the final and refined alignment. The efficacy of the proposed framework is experimentally evaluated based on multiple field multi-robot exploration missions in underground environments, where both ground and aerial robots are deployed, with different sensor configurations.

3DEG: Data-Driven Descriptor Extraction for Global re-localization in subterranean environments

Oct 13, 2022

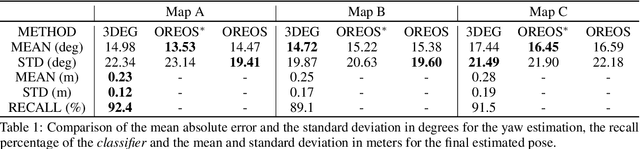

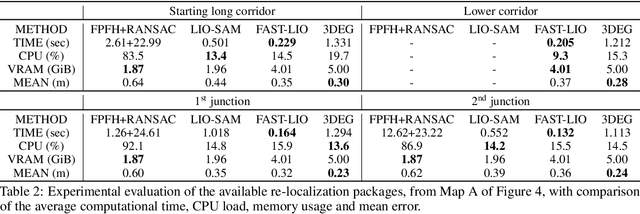

Abstract:Current global re-localization algorithms are built on top of localization and mapping methods and heavily rely on scan matching and direct point cloud feature extraction, and therefore are vulnerable in featureless, demanding environments like caves and tunnels. In this article, we propose a novel global re-localization framework that: a) does not require an initial guess, as most methods do, b) can offer the top-k candidates to choose from, and c) provides an event-based re-localization trigger module for enabling and supporting completely autonomous robotic missions. With the focus on subterranean environments with low features, we opt to use descriptors based on range images from 3D LiDAR scans in order to maintain the depth information of the environment. In our novel approach, we make use of a state-of-the-art data-driven descriptor extraction framework for place recognition and orientation regression and enhance it with the addition of a junction detection module that also utilizes the descriptors for classification purposes.
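Offering the top-k candidates amounts to ranking stored place descriptors by their distance to the query descriptor. The following sketch assumes Euclidean distance and a small in-memory database of (place_id, descriptor) pairs; both choices, and the function name, are illustrative assumptions rather than details taken from 3DEG.

```python
import heapq
import math

def top_k_candidates(query, database, k=3):
    """Return the k database entries whose descriptors are closest to `query`.

    database: list of (place_id, descriptor) pairs, descriptors as tuples.
    Euclidean distance is an assumption made for this example.
    """
    return heapq.nsmallest(k, database, key=lambda item: math.dist(query, item[1]))

database = [
    ("junction_a", (0.0, 0.0)),
    ("tunnel_b", (1.0, 0.0)),
    ("cave_c", (5.0, 5.0)),
]
candidates = top_k_candidates((0.9, 0.0), database, k=2)
best_ids = [place_id for place_id, _ in candidates]
```

Returning several ranked candidates instead of a single match lets a downstream module reject ambiguous hypotheses in self-similar tunnels.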

Edge Computing Architectures for Enabling the Realisation of the Next Generation Robotic Systems

Sep 17, 2022

Abstract:Edge Computing is a promising technology to provide new capabilities in technological fields that require instantaneous data processing. Researchers in areas such as machine and deep learning extensively use edge and cloud computing for their applications, mainly due to the significant computational and storage resources that they provide. Currently, Robotics is seeking to take advantage of these capabilities as well, and with the development of 5G networks, some existing limitations in the field can be overcome. In this context, it is important to know how to utilize the emerging edge architectures, what types of edge architectures and platforms exist today, and which of them can and should be used for each robotic application. In general, Edge platforms can be implemented and used differently, especially since there are several providers offering more or less the same set of services with some essential differences. Thus, this study addresses these questions for those who work on the development of next generation robotic systems and will help them understand the advantages and disadvantages of each edge computing architecture in order to choose the right one for each application.
