Akash Patel

SPADE: Towards Scalable Path Planning Architecture on Actionable Multi-Domain 3D Scene Graphs

May 25, 2025

Abstract: In this work, we introduce SPADE, a path planning framework designed for autonomous navigation in dynamic environments using 3D scene graphs. SPADE combines hierarchical path planning with local geometric awareness to enable collision-free movement in dynamic scenes. The framework splits the planning problem into two parts: (a) solving the sparse, abstract global-layer plan and (b) iteratively refining the path across the denser, lower local layers in step with local geometric scene navigation. To ensure efficient extraction of a feasible route from dense scene graphs spanning multiple task domains, the framework enforces informed sampling of traversable edges prior to path planning. This removes information that is irrelevant to path planning and reduces the overall planning complexity over the graph. Existing approaches address path planning over scene graphs by decoupling the hierarchical and geometric path evaluation processes. This decoupling leads to inefficient replanning over the entire scene graph whenever an obstruction blocks the original route. In contrast, SPADE prioritizes local layer planning coupled with local geometric scene navigation, enabling navigation through dynamic scenes while computing a traversable route efficiently. We validate SPADE through extensive simulation experiments and real-world deployment on a quadrupedal robot, demonstrating its efficacy in handling complex and dynamic scenarios.
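
The two-stage idea described in the SPADE abstract (prune non-traversable edges, plan on the sparse upper layer, then refine on the denser lower layer) can be illustrated with a minimal sketch. This is not the authors' implementation: the two-layer "room"/"place" structure, the boolean traversability flags, breadth-first search as the planner, and the helpers `prune_edges`, `bfs_path`, and `hierarchical_plan` are all hypothetical choices made for illustration.

```python
from collections import deque

def prune_edges(edges, traversable):
    """Informed pruning (hypothetical form): keep only edges flagged traversable."""
    adj = {}
    for u, v in edges:
        if traversable.get((u, v), False):
            adj.setdefault(u, []).append(v)
            adj.setdefault(v, []).append(u)
    return adj

def bfs_path(adj, start, goal, allowed=None):
    """Breadth-first search; if `allowed` is given, only those nodes may be expanded into."""
    parents = {start: None}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if node == goal:
            path = []
            while node is not None:
                path.append(node)
                node = parents[node]
            return path[::-1]
        for nxt in adj.get(node, ()):
            if nxt in parents or (allowed is not None and nxt not in allowed):
                continue
            parents[nxt] = node
            queue.append(nxt)
    return None

def hierarchical_plan(room_edges, room_trav, place_edges, place_trav, place_room, start, goal):
    # (a) Sparse global plan over the abstract (room) layer.
    room_path = bfs_path(prune_edges(room_edges, room_trav), place_room[start], place_room[goal])
    if room_path is None:
        return None
    # (b) Refinement on the denser (place) layer, restricted to rooms on the global plan.
    rooms_on_plan = set(room_path)
    allowed = {p for p, r in place_room.items() if r in rooms_on_plan}
    return bfs_path(prune_edges(place_edges, place_trav), start, goal, allowed)
```

Restricting the lower-layer search to the rooms selected by the upper layer is what keeps the refinement step small in this sketch; a real system would also use edge costs and geometric checks rather than plain BFS.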

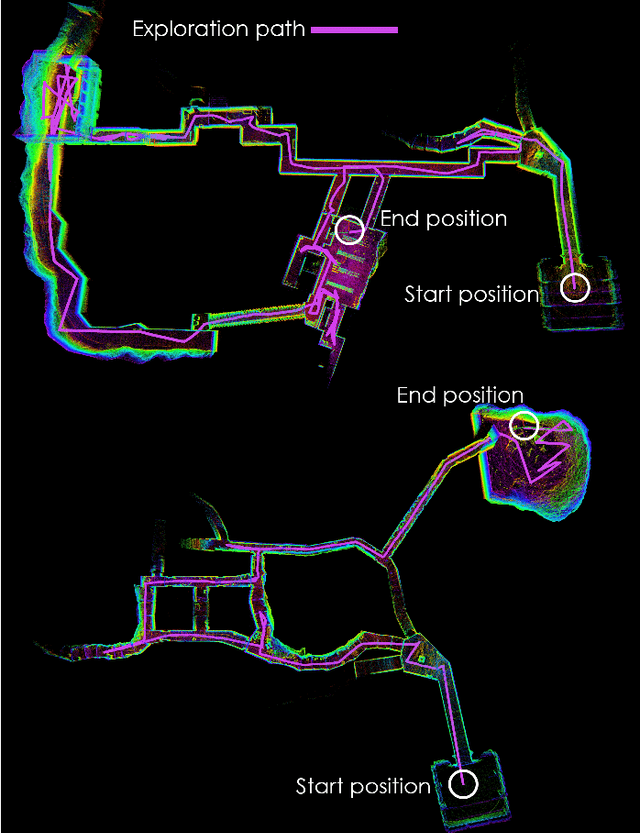

A Hierarchical Graph-Based Terrain-Aware Autonomous Navigation Approach for Complementary Multimodal Ground-Aerial Exploration

May 20, 2025

Abstract: Autonomous navigation in unknown environments is a fundamental challenge in robotics, particularly when coordinating ground and aerial robots to maximize exploration efficiency. This paper presents a novel approach that uses a hierarchical graph to represent the environment, encoding both geometric and semantic traversability. The framework enables the robots to compute a shared confidence metric, which helps the ground robot assess terrain and determine when deploying the aerial robot will extend exploration. The robot's confidence in traversing a path is based on factors such as predicted volumetric gain, path traversability, and collision risk. A hierarchy of graphs maintains an efficient representation of traversability and frontier information through multi-resolution maps. Evaluated in a real subterranean exploration scenario, the approach allows the ground robot to autonomously identify zones that are no longer traversable but are suitable for aerial deployment. By leveraging this hierarchical structure, the ground robot can selectively share graph information on confidence-assessed frontier targets from parts of the scene, enabling the aerial robot to navigate beyond obstacles and continue exploration.
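
The abstract above names the inputs to the confidence metric (predicted volumetric gain, path traversability, collision risk) but not how they are combined. The sketch below assumes a simple weighted sum and a threshold rule for handing a frontier to the aerial robot; the weights, thresholds, the `FrontierCandidate` type, and the `assign` helper are hypothetical and are not taken from the paper.

```python
from dataclasses import dataclass

@dataclass
class FrontierCandidate:
    name: str
    volumetric_gain: float  # predicted unseen volume, assumed normalized to [0, 1]
    traversability: float   # assumed: 1.0 = fully traversable for the ground robot
    collision_risk: float   # assumed: 0.0 = no collision risk

def ground_confidence(c, w_gain=0.4, w_trav=0.4, w_risk=0.2):
    """Hypothetical weighted confidence in [0, 1] for the ground robot."""
    return w_gain * c.volumetric_gain + w_trav * c.traversability + w_risk * (1.0 - c.collision_risk)

def assign(candidates, threshold=0.5, min_gain=0.3):
    """Assign each frontier to the ground robot, the aerial robot, or neither."""
    plan = {}
    for c in candidates:
        if ground_confidence(c) >= threshold:
            plan[c.name] = "ground"
        elif c.volumetric_gain >= min_gain:
            # Not confidently traversable on the ground, but still worth exploring.
            plan[c.name] = "aerial"
        else:
            plan[c.name] = "skip"
    return plan
```

For example, a frontier with high gain but zero ground traversability scores below the threshold and is handed to the aerial robot, mirroring the "no longer traversable but suitable for aerial deployment" case in the abstract.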

AlgoRxplorers | Precision in Mutation -- Enhancing Drug Design with Advanced Protein Stability Prediction Tools

Jan 13, 2025

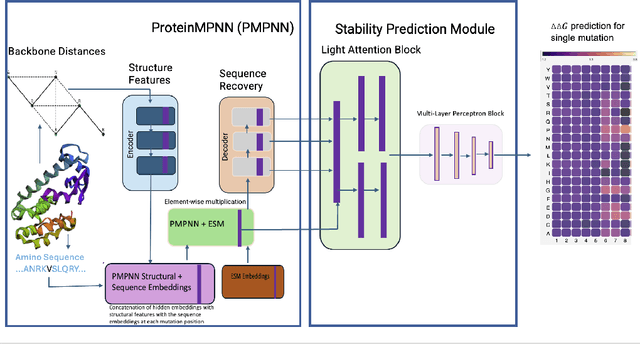

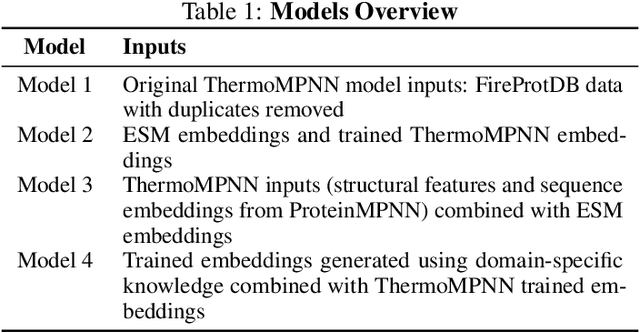

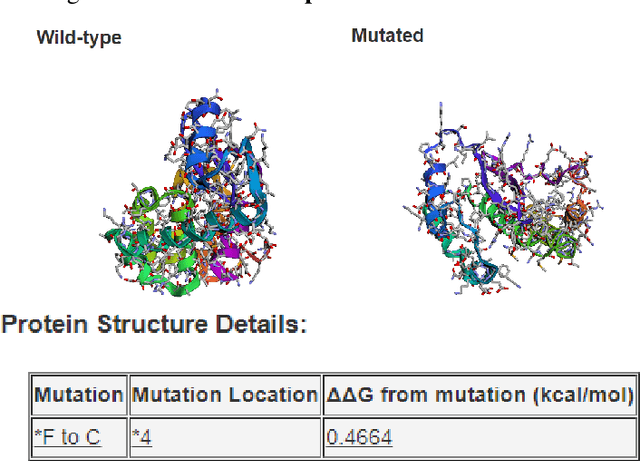

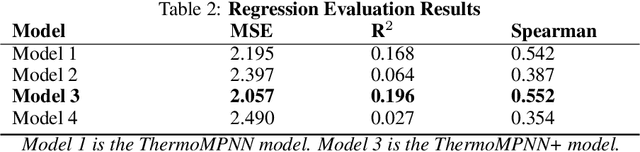

Abstract: Predicting the impact of single-point amino acid mutations on protein stability is essential for understanding disease mechanisms and advancing drug development. These mutations influence protein stability, which is quantified by the change in Gibbs free energy ($\Delta\Delta G$). However, the scarcity of data and the complexity of model interpretation make it challenging to predict stability changes accurately. This study proposes applying deep neural networks, leveraging transfer learning and fusing complementary information from different models, to create a feature-rich representation of the protein stability landscape. We developed four models, with our third model, ThermoMPNN+, demonstrating the best performance in predicting $\Delta\Delta G$ values. This approach, which integrates diverse feature sets and embeddings through latent transfusion techniques, aims to refine $\Delta\Delta G$ predictions and contribute to a deeper understanding of protein dynamics, potentially leading to advancements in disease research and drug discovery.

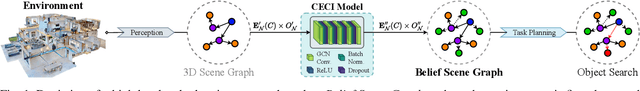

Leveraging Computation of Expectation Models for Commonsense Affordance Estimation on 3D Scene Graphs

Sep 09, 2024

Abstract: This article studies the concept of commonsense object affordance for enabling close-to-human task planning and task optimization of embodied robotic agents in urban environments. The focus is on reasoning about how to identify an object's inherent utility during task execution, which this work enables by analyzing the contextual relations in the sparse information of 3D scene graphs. The proposed framework develops a Computation of Expectation based on Correlation Information (CECI) model that learns probability distributions using a Graph Convolutional Network, allowing it to extract the commonsense affordance for individual members of a semantic class. The overall framework was experimentally validated in a real-world indoor environment, showing that the method can match human commonsense. A video of the experimental demonstration is available at https://youtu.be/BDCMVx2GiQE

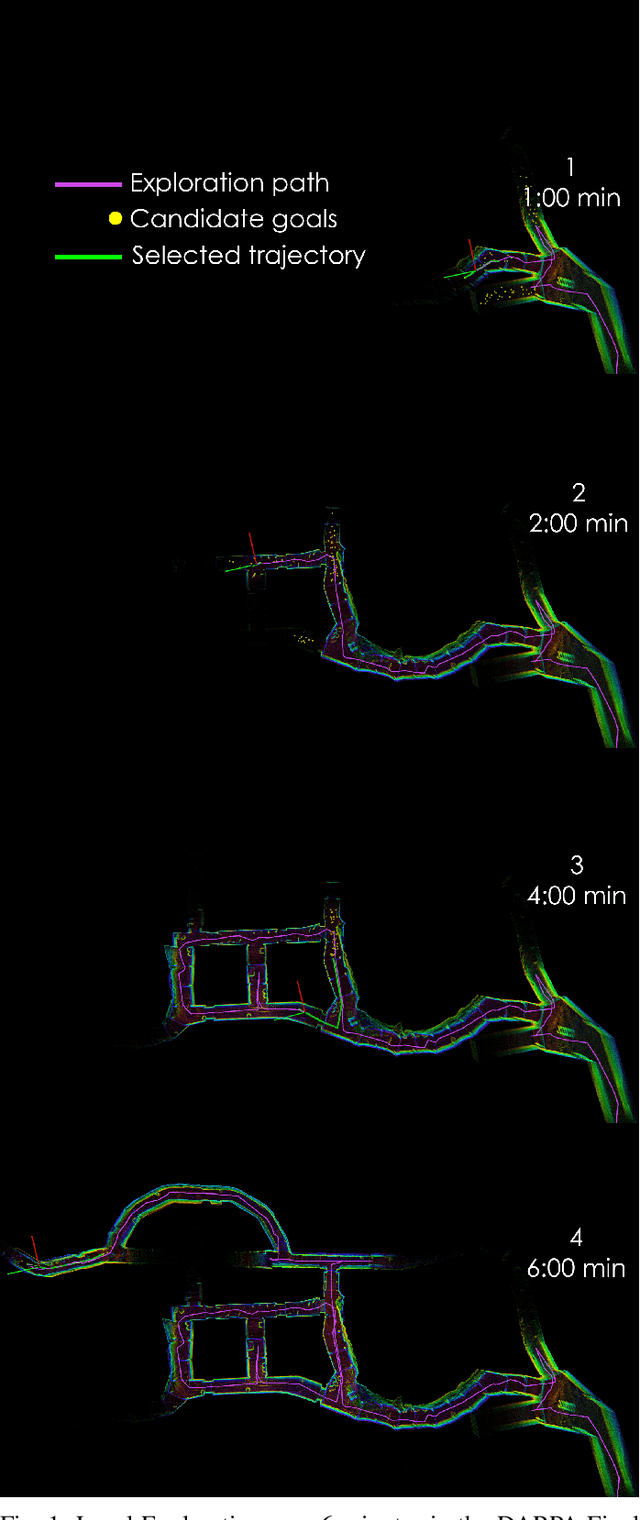

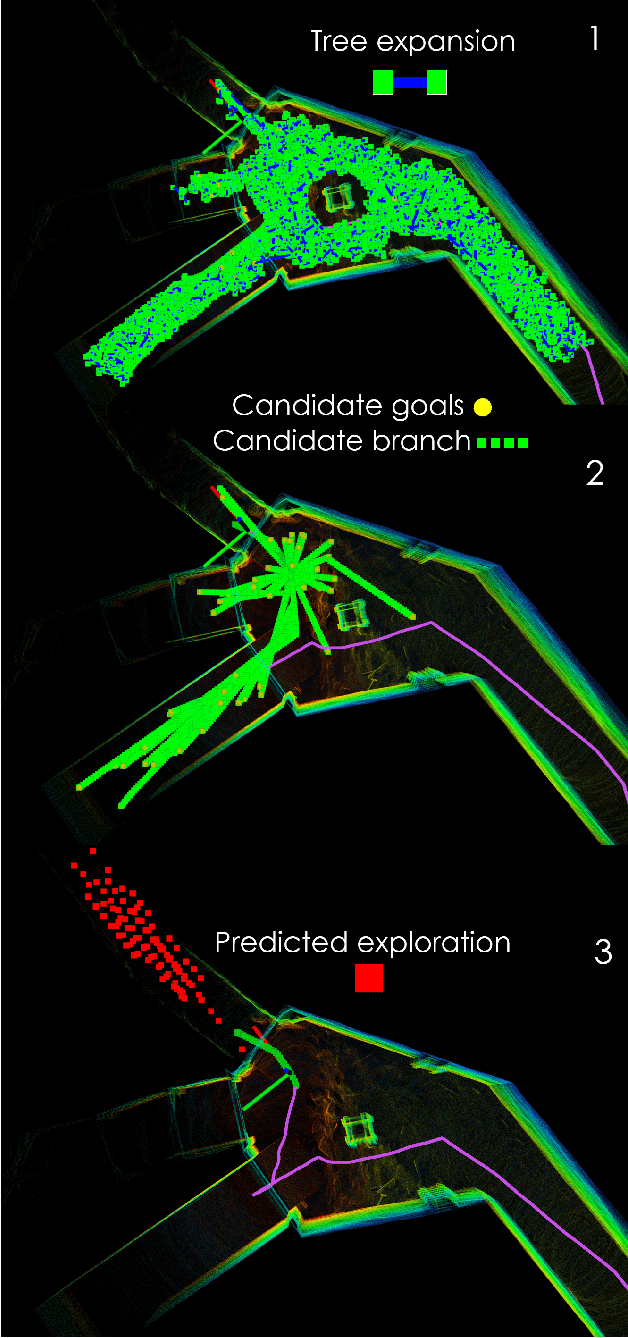

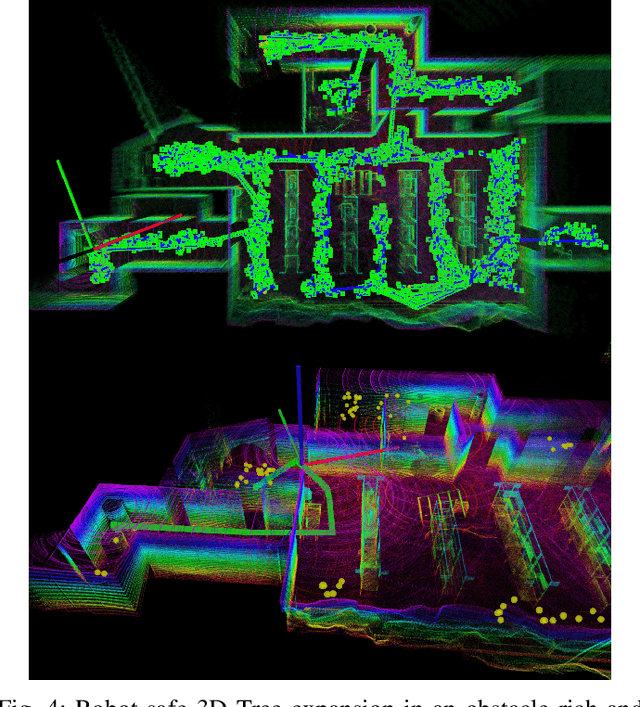

A Tree-based Next-best-trajectory Method for 3D UAV Exploration

Jul 05, 2024

Abstract: This work presents a fully integrated, tree-based, combined exploration-planning algorithm: Exploration-RRT (ERRT). The algorithm focuses on providing real-time solutions for local exploration in a fully unknown and unstructured environment while directly incorporating exploratory behavior, robot-safe path planning, and robot actuation into the central problem. ERRT provides a complete sampling- and tree-based solution for evaluating "where to go next" by considering a trade-off between maximizing information gain and minimizing both the distance travelled and the robot actuation along the path. The complete scheme is evaluated in extensive simulations and comparisons, as well as in real-world field experiments in constrained, narrow subterranean and GPS-denied environments. The framework is fully ROS-integrated and straightforward to use, and we open-source it at https://github.com/LTU-RAI/ExplorationRRT.
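
The "where to go next" trade-off in the ERRT abstract can be made concrete with a scalar cost that rewards information gain and penalizes path length and actuation effort. The sketch below assumes a linear cost with hypothetical weights `w_info`, `w_dist`, and `w_act` and a dictionary-based trajectory format; it illustrates the trade-off only and is not the cost function from the ERRT code base.

```python
import math

def path_length(points):
    """Sum of Euclidean segment lengths along a list of 3D waypoints."""
    return sum(math.dist(a, b) for a, b in zip(points, points[1:]))

def actuation_effort(inputs):
    """Sum of squared control inputs along the trajectory (assumed effort measure)."""
    return sum(sum(u * u for u in step) for step in inputs)

def trajectory_cost(traj, w_info=1.0, w_dist=0.5, w_act=0.1):
    # Lower is better: information gain is rewarded, distance and actuation are penalized.
    return (-w_info * traj["info_gain"]
            + w_dist * path_length(traj["points"])
            + w_act * actuation_effort(traj["inputs"]))

def next_best_trajectory(candidates, **weights):
    """Pick the candidate trajectory with the lowest hypothetical cost."""
    return min(candidates, key=lambda t: trajectory_cost(t, **weights))
```

Changing the weights shifts the behavior: with a strong distance penalty a short, low-gain branch wins, while a weaker distance penalty lets a long, high-gain branch win.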

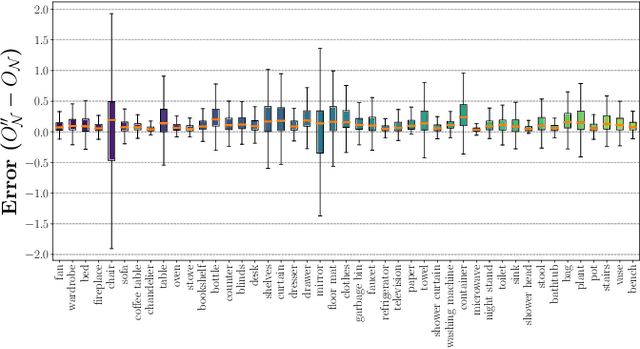

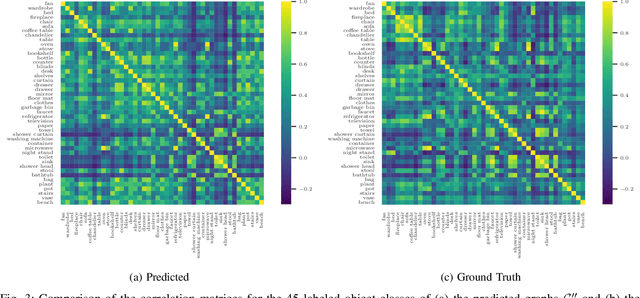

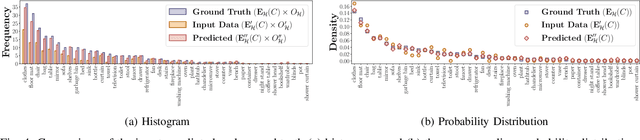

Belief Scene Graphs: Expanding Partial Scenes with Objects through Computation of Expectation

Feb 06, 2024

Abstract: In this article, we propose the novel concept of Belief Scene Graphs, which are utility-driven extensions of partial 3D scene graphs that enable efficient high-level task planning with partial information. We propose a graph-based learning methodology for the computation of belief (also referred to as expectation) on any given 3D scene graph, which is then used to strategically add new nodes (referred to as blind nodes) that are relevant to a robotic mission. We propose the method of Computation of Expectation based on Correlation Information (CECI) to reasonably approximate real belief/expectation by learning histograms from available training data. A novel Graph Convolutional Neural Network (GCN) model is developed to learn CECI from a repository of 3D scene graphs. As no database of 3D scene graphs exists for training the novel CECI model, we present a novel methodology for generating a 3D scene graph dataset based on semantically annotated real-life 3D spaces. The generated dataset is then used to train the proposed CECI model and to extensively validate the proposed method. We establish the novel concept of Belief Scene Graphs (BSG) as a core component for integrating expectations into abstract representations. This new concept is an evolution of the classical 3D scene graph concept and aims to enable high-level reasoning for the task planning and optimization of a variety of robotic missions. The efficacy of the overall framework has been evaluated in an object search scenario, and it has also been tested in a real-life experiment emulating human common sense about unseen objects.
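
The abstract describes approximating expectation by learning histograms from training data and then adding blind nodes. A plain frequency-counting sketch of that idea is shown below; it deliberately omits the GCN and only illustrates what a per-room-class histogram and a blind-node threshold could look like. The `{room_label: [object labels]}` graph format, the 0.5 threshold, and the helpers `learn_histograms` and `blind_nodes` are hypothetical.

```python
from collections import Counter, defaultdict

def learn_histograms(training_graphs):
    """Estimate P(object present | room class) by counting over training scene graphs.

    Each training graph is assumed (hypothetically) to be {room_label: [object labels]}.
    """
    presence = defaultdict(Counter)
    rooms_seen = Counter()
    for graph in training_graphs:
        for room, objects in graph.items():
            rooms_seen[room] += 1
            for obj in set(objects):  # count presence once per room instance
                presence[room][obj] += 1
    return {room: {obj: n / rooms_seen[room] for obj, n in counts.items()}
            for room, counts in presence.items()}

def blind_nodes(room, observed, histograms, threshold=0.5):
    """Objects expected in `room` (probability >= threshold) that have not been observed yet."""
    expected = histograms.get(room, {})
    return sorted(obj for obj, p in expected.items() if p >= threshold and obj not in observed)
```

For instance, if a fridge appears in two of three training kitchens, a partially observed kitchen containing only a sink would receive a blind "fridge" node under this sketch.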

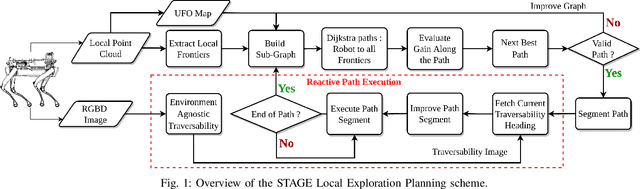

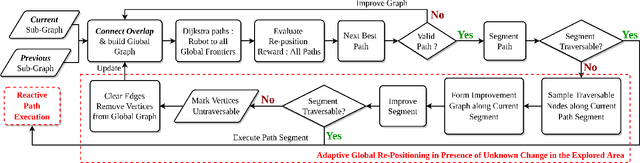

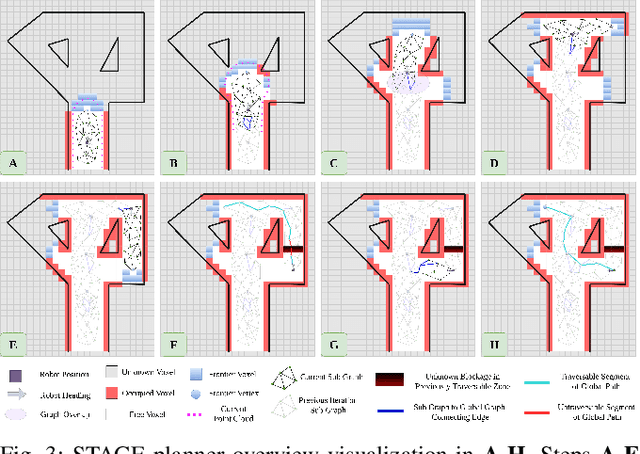

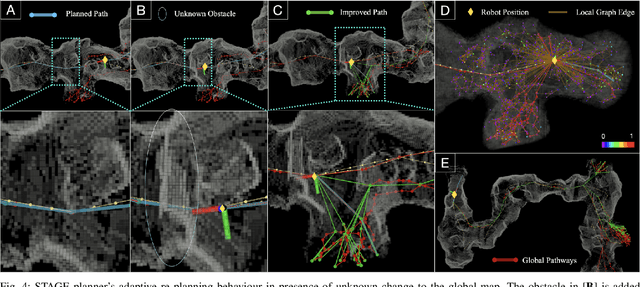

STAGE: Scalable and Traversability-Aware Graph based Exploration Planner for Dynamically Varying Environments

Feb 04, 2024

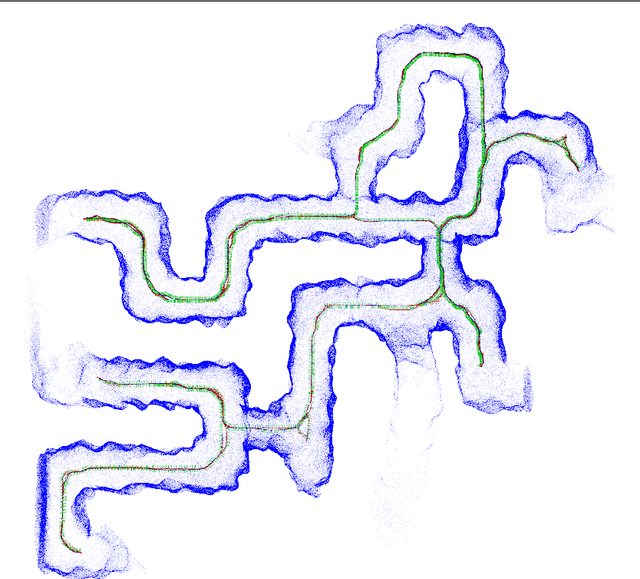

Abstract: In this article, we propose a novel navigation framework that leverages a two-layered graph representation of the environment for efficient large-scale exploration, while integrating a novel uncertainty-awareness scheme to handle dynamic scene changes in previously explored areas. The framework is structured around a novel goal-oriented graph representation that consists of (i) the local sub-graph and (ii) the global graph layer. The local sub-graphs encode local volumetric gain locations as frontiers, based on direct pointcloud visibility, allowing fast graph building and path planning. Additionally, the global graph is built efficiently, using node-edge information exchange only on overlapping regions of sequential sub-graphs. Unlike state-of-the-art graph-based exploration methods, the proposed approach efficiently re-uses sub-graphs built in previous iterations to construct the global navigation layer. Another merit of the proposed scheme is its ability to handle scene changes (e.g., blocked pathways) by adaptively updating the obstructed part of the global graph from traversable to not traversable. This operation involves an oriented sample space of a path segment in the global graph layer, while removing the respective edges from the connected nodes of the global graph in cases of obstruction. As a result, the exploration behavior directs the robot to follow another route during the global re-positioning phase through pathway updates in the global graph. Finally, we showcase the performance of the method both in simulation runs and in a real-world deployment involving a legged robot carrying a camera and a lidar sensor.
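
The obstruction-handling step in the STAGE abstract (remove the edges of a blocked path segment from the global graph, then re-route) can be sketched with Dijkstra's algorithm on a weighted adjacency map. This is an illustration under assumptions: the oriented sample-space check that decides an edge is blocked is not modeled, and the helpers `build_graph`, `dijkstra`, and `mark_obstructed` are hypothetical.

```python
import heapq

def build_graph(edges):
    """Undirected weighted adjacency map from (u, v, cost) triples."""
    adj = {}
    for u, v, w in edges:
        adj.setdefault(u, {})[v] = w
        adj.setdefault(v, {})[u] = w
    return adj

def dijkstra(adj, start, goal):
    """Shortest path and its cost; returns (None, inf) if the goal is unreachable."""
    dist = {start: 0.0}
    parents = {start: None}
    heap = [(0.0, start)]
    while heap:
        d, node = heapq.heappop(heap)
        if node == goal:
            path = []
            while node is not None:
                path.append(node)
                node = parents[node]
            return path[::-1], d
        if d > dist[node]:
            continue  # stale heap entry
        for nxt, w in adj.get(node, {}).items():
            nd = d + w
            if nd < dist.get(nxt, float("inf")):
                dist[nxt] = nd
                parents[nxt] = node
                heapq.heappush(heap, (nd, nxt))
    return None, float("inf")

def mark_obstructed(adj, u, v):
    """Mark the segment u-v as not traversable by deleting the edge in both directions."""
    adj.get(u, {}).pop(v, None)
    adj.get(v, {}).pop(u, None)
```

After `mark_obstructed` removes a blocked segment, the next `dijkstra` call naturally returns an alternative route, which is the behavior the abstract attributes to the global re-positioning phase.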

Event Camera and LiDAR based Human Tracking for Adverse Lighting Conditions in Subterranean Environments

Apr 18, 2023

Abstract: In this article, we propose a novel LiDAR and event camera fusion modality for subterranean (SubT) environments for fast and precise object and human detection in a wide variety of adverse lighting conditions, such as low or no light, high-contrast zones, and the presence of blinding light sources. In the proposed approach, information from the event camera and the LiDAR is fused to localize a human or an object of interest in the robot's local frame. The local detection is then transformed into the inertial frame and used to set references for a Nonlinear Model Predictive Controller (NMPC) for reactive tracking of humans or objects in SubT environments. The proposed fusion uses intensity filtering and K-means clustering on the LiDAR point cloud, and frequency filtering and connectivity clustering on the events induced in the event camera by the returning LiDAR beams. The centroids of the clusters in the event camera and LiDAR streams are then paired to localize reflective markers present on safety vests and signs in SubT environments. The efficacy of the proposed scheme has been experimentally validated in a real SubT environment (a mine) with a Pioneer 3AT mobile robot. The experimental results show real-time performance for human detection, and the NMPC-based controller allows reactive tracking of a human or object of interest, even in complete darkness.
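
The abstract states that LiDAR and event-camera cluster centroids are paired but does not specify the pairing rule. One plausible version, sketched below, projects each LiDAR centroid into the image with a pinhole model and greedily matches it to the nearest event centroid within a pixel gate. The pinhole intrinsics tuple `(fx, fy, cx, cy)`, the assumption that LiDAR centroids are already in the camera frame, the 20-pixel gate, and the greedy matching are all hypothetical choices, not the paper's method.

```python
import math

def project(point, fx, fy, cx, cy):
    """Pinhole projection of a camera-frame point (x right, y down, z forward, z > 0 assumed)."""
    x, y, z = point
    return (fx * x / z + cx, fy * y / z + cy)

def pair_centroids(lidar_centroids, event_centroids, intrinsics, gate_px=20.0):
    """Greedy nearest-neighbour pairing of projected LiDAR centroids with event centroids.

    Returns sorted (lidar_index, event_index) pairs; centroids with no partner
    within `gate_px` pixels are left unpaired.
    """
    projected = [project(p, *intrinsics) for p in lidar_centroids]
    candidates = sorted(
        (math.dist(pl, pe), i, j)
        for i, pl in enumerate(projected)
        for j, pe in enumerate(event_centroids)
    )
    used_lidar, used_event, pairs = set(), set(), []
    for d, i, j in candidates:
        if d > gate_px:
            break  # all remaining candidates are even farther apart
        if i in used_lidar or j in used_event:
            continue
        used_lidar.add(i)
        used_event.add(j)
        pairs.append((i, j))
    return sorted(pairs)
```

Greedy matching on globally sorted distances keeps the sketch short; with many markers in view, an optimal assignment (for example, the Hungarian algorithm) would be a more robust choice.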

Towards Energy Efficient Autonomous Exploration of Mars Lava Tube with a Martian Coaxial Quadrotor

Nov 13, 2022

Abstract: Mapping and exploration of Martian terrain with an aerial vehicle has become an emerging research direction since the successful flight demonstration of the Mars helicopter Ingenuity. Although the autonomy and navigation capability of the state-of-the-art Mars helicopter has proven efficient in open environments, the next areas of interest for exploration on Mars are caves and ancient lava-tube-like environments, especially in the never-ending search for life on other planets. This article presents an autonomous exploration mission based on a modified frontier approach, along with a risk-aware planning and integrated collision avoidance scheme, with a special focus on the energy aspects of a custom-designed Mars Coaxial Quadrotor (MCQ) in a simulated Martian lava tube. One of the main novelties of the article is how it addresses exploration capability: the MCQ rapidly explores local areas and intelligently re-positions globally when reaching dead ends, in order to use its battery energy efficiently while increasing the explored volume. The proposed three-layer, cost-based selection of global re-positioning points helps rapidly redirect the MCQ to previously partially seen areas that could lead to more unexplored parts of the lava tube. The fully simulated Martian mission presented in this article accounts for the physics of Martian conditions, namely the thin atmosphere, low surface pressure, and low gravity of the planet, and demonstrates the efficiency of the proposed scheme in exploring an area that is particularly challenging due to its subterranean-like environment. The proposed exploration-planning framework is also validated in simulation by comparing it against the graph-based exploration planner.

REF: A Rapid Exploration Framework for Deploying Autonomous MAVs in Unknown Environments

May 31, 2022

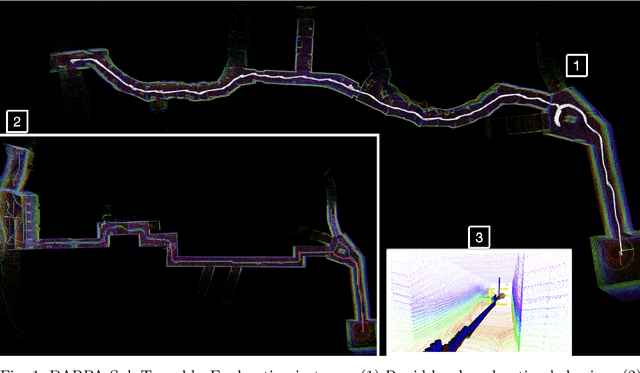

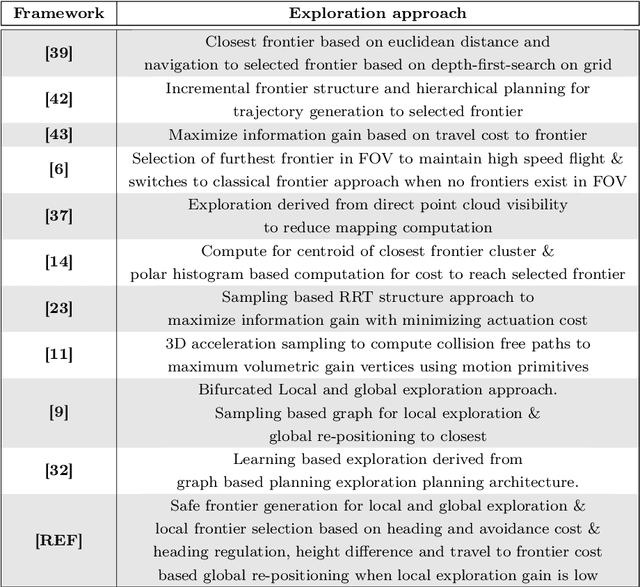

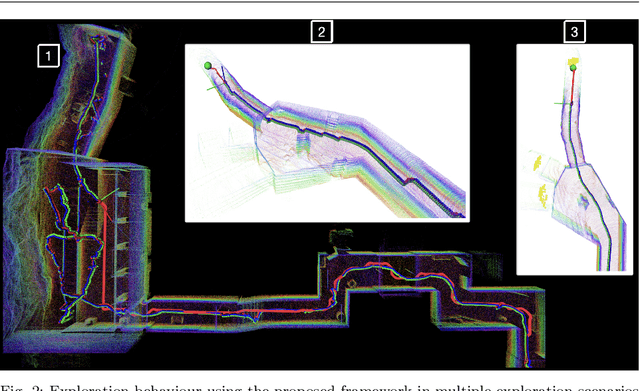

Abstract: Exploration and mapping of unknown environments is a fundamental task in applications of autonomous robots. In this article, we present a complete framework for deploying MAVs in autonomous exploration missions in unknown subterranean areas. The main goal of exploration algorithms is to identify the next best frontier for the robot so that new ground can be covered quickly, safely, and efficiently. The proposed framework uses a novel frontier selection method that also contributes to the safe navigation of autonomous robots in obstructed areas such as subterranean caves, mines, and urban areas. The framework presented in this work splits the exploration problem into local and global exploration. The proposed exploration framework can also be adapted to the computational resources available onboard the robot, allowing a trade-off between the speed of exploration and the quality of the map. This capability allows the proposed framework to be deployed in subterranean exploration and mapping, as well as in fast search-and-rescue scenarios. The overall system is considered a low-complexity, baseline solution for navigation and object localization in tunnel-like environments. The performance of the proposed framework is evaluated in detailed simulation studies, with comparisons against a high-level exploration-planning framework developed for the DARPA Sub-T Challenge.
