Akshit Saradagi

Collision-free landing of multiple UAVs on moving ground vehicles using time-varying control barrier functions

Apr 08, 2025

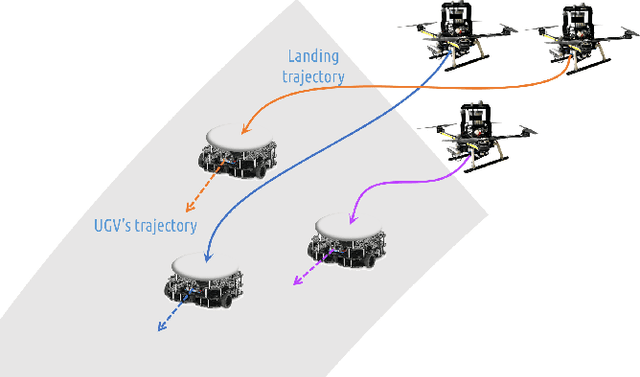

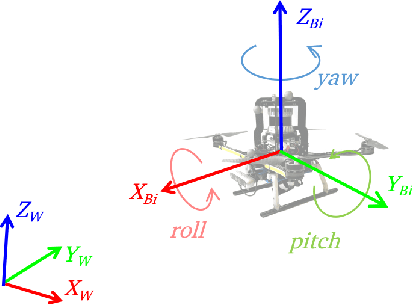

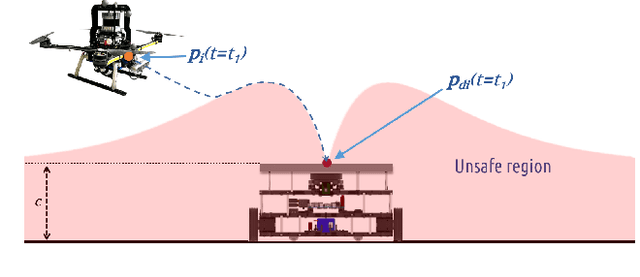

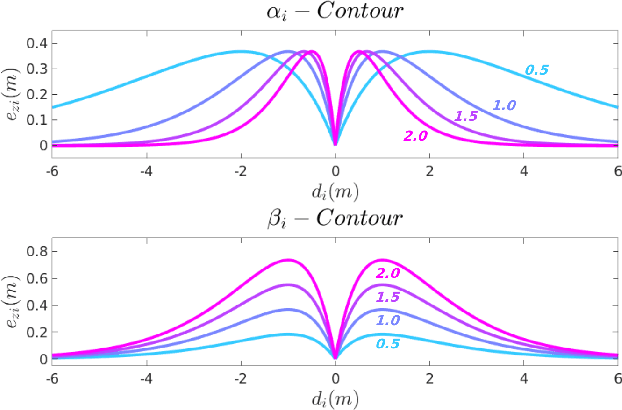

Abstract: In this article, we present a centralized approach for the control of multiple unmanned aerial vehicles (UAVs) for landing on moving unmanned ground vehicles (UGVs) using control barrier functions (CBFs). The proposed control framework employs two kinds of CBFs to impose safety constraints on the UAVs' motion. The first kind, the landing CBF (LCBF), is a three-dimensional exponentially decaying function centered above the landing platform, designed to safely and precisely land UAVs on the UGVs. The second kind, the spherical CBF (SCBF), is defined between every pair of UAVs and prevents collisions between them. The LCBF is time-varying and adapts to the motions of the UGVs. In the proposed CBF approach, the control input from the UAV's nominal tracking controller, designed to reach the landing platform, is filtered to choose a minimally deviating control input that ensures safety (as defined by the CBFs). As the control inputs of every UAV are shared in establishing multiple CBF constraints, we prove that the control inputs are shared without conflict in rendering the safe sets forward invariant. The performance of the control framework is validated through a simulated scenario involving three UAVs landing on three moving targets.
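The filtering step described in this abstract can be illustrated with a minimal sketch. It is not the paper's formulation: it assumes a single-integrator model (velocity as the control input), one static spherical barrier of the form h = ||p_i - p_j||^2 - r^2, and a linear class-K term `alpha * h`. The function names `sphere_barrier` and `cbf_filter` are hypothetical. With a single affine constraint, the minimally deviating input has a closed form, so no QP solver is needed.

```python
def sphere_barrier(p_i, p_j, radius):
    """Illustrative spherical barrier h = ||p_i - p_j||^2 - radius^2 (assumed form).

    Returns h and its gradient with respect to p_i.
    """
    diff = [a - b for a, b in zip(p_i, p_j)]
    h = sum(d * d for d in diff) - radius ** 2
    grad = [2.0 * d for d in diff]
    return h, grad


def cbf_filter(u_nom, grad_h, h, alpha=1.0):
    """Closest input to u_nom satisfying grad_h . u + alpha * h >= 0.

    Assumes single-integrator dynamics p_dot = u, so h_dot = grad_h . u.
    """
    residual = sum(a * u for a, u in zip(grad_h, u_nom)) + alpha * h
    if residual >= 0.0:
        return list(u_nom)  # nominal input is already safe
    norm_sq = sum(a * a for a in grad_h)
    if norm_sq == 0.0:
        return list(u_nom)  # constraint does not depend on u; nothing to project
    scale = residual / norm_sq
    # Project u_nom onto the boundary of the safe half-space.
    return [u - scale * a for u, a in zip(u_nom, grad_h)]


# A UAV at (1, 0, 0) commanded straight toward another at the origin.
h, grad = sphere_barrier([1.0, 0.0, 0.0], [0.0, 0.0, 0.0], radius=0.5)
u_safe = cbf_filter([-2.0, 0.0, 0.0], grad, h, alpha=1.0)
print(u_safe)  # [-0.375, 0.0, 0.0]
```

In this example the approach speed is reduced from 2 to 0.375 so that the barrier condition holds with equality. With several UAV pairs and a time-varying landing barrier, the constraints would be stacked into one quadratic program rather than solved in closed form.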

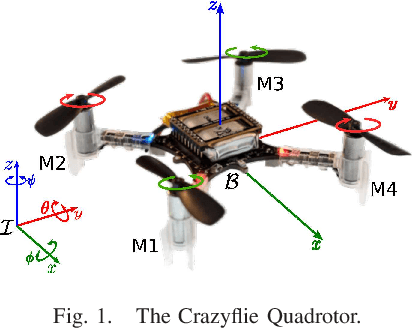

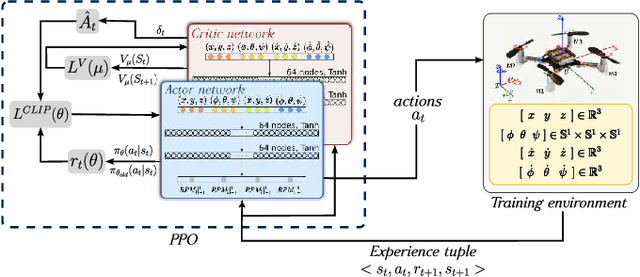

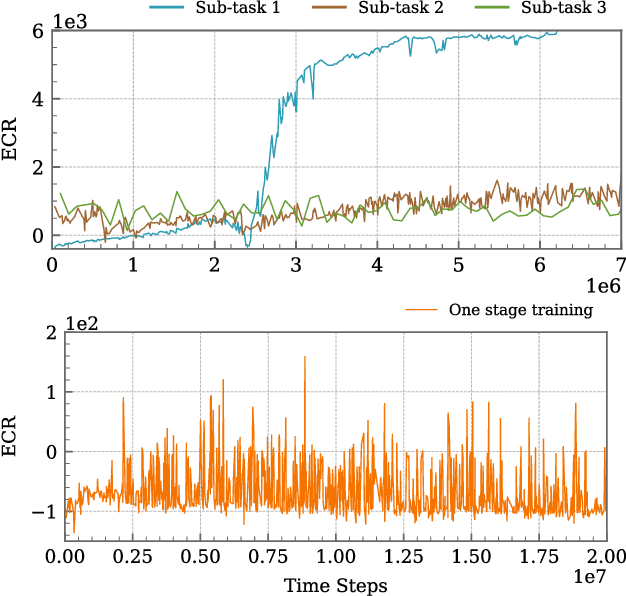

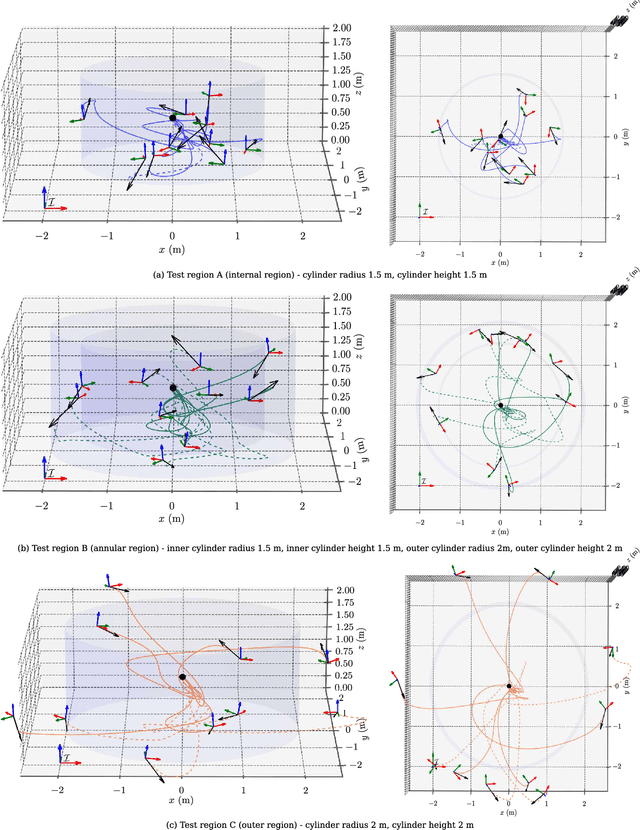

Curriculum-based Sample Efficient Reinforcement Learning for Robust Stabilization of a Quadrotor

Jan 30, 2025

Abstract: This article introduces a curriculum learning approach to develop a reinforcement learning-based robust stabilizing controller for a quadrotor that meets predefined performance criteria. The learning objective is to achieve desired positions from random initial conditions while adhering to both transient and steady-state performance specifications. This objective is challenging for conventional one-stage end-to-end reinforcement learning, due to the strong coupling between position and orientation dynamics, the complexity in designing and tuning the reward function, and poor sample efficiency, which necessitates substantial computational resources and leads to extended convergence times. To address these challenges, this work decomposes the learning objective into a three-stage curriculum that incrementally increases task complexity. The curriculum begins with learning to achieve stable hovering from a fixed initial condition, followed by progressively introducing randomization in initial positions, orientations and velocities. A novel additive reward function is proposed to incorporate transient and steady-state performance specifications. The results demonstrate that the Proximal Policy Optimization (PPO)-based curriculum learning approach, coupled with the proposed reward structure, achieves superior performance compared to a single-stage PPO-trained policy with the same reward function, while significantly reducing computational resource requirements and convergence time. The curriculum-trained policy's performance and robustness are thoroughly validated under random initial conditions and in the presence of disturbances.

Multi-Agent Path Finding Using Conflict-Based Search and Structural-Semantic Topometric Maps

Jan 29, 2025

Abstract: As industries increasingly adopt large robotic fleets, there is a pressing need for computationally efficient, practical, and optimal conflict-free path planning for multiple robots. Conflict-Based Search (CBS) is a popular method for multi-agent path finding (MAPF) due to its completeness and optimality; however, it is often impractical for real-world applications, as it is computationally intensive to solve and relies on assumptions about agents and operating environments that are difficult to realize. This article proposes a solution to overcome computational challenges and practicality issues of CBS by utilizing structural-semantic topometric maps. Instead of running CBS over large grid-based maps, the proposed solution runs CBS over a sparse topometric map containing structural-semantic cells representing intersections, pathways, and dead ends. This approach significantly accelerates the MAPF process and reduces the number of conflict resolutions handled by CBS while operating in continuous time. In the proposed method, robots are assigned time ranges to move between topometric regions, departing from the traditional CBS assumption that a robot can move to any connected cell in a single time step. The approach is validated through real-world multi-robot path-finding experiments and benchmarking simulations. The results demonstrate that the proposed MAPF method can be applied to real-world non-holonomic robots and yields significant improvement in computational efficiency compared to traditional CBS methods while improving conflict detection and resolution in cases of corridor symmetries.

Deployment of an Aerial Multi-agent System for Automated Task Execution in Large-scale Underground Mining Environments

Jan 17, 2025

Abstract: In this article, we present a framework for deploying an aerial multi-agent system in large-scale subterranean environments with minimal infrastructure for supporting multi-agent operations. The multi-agent objective is to optimally and reactively allocate and execute inspection tasks in a mine, which are entered by a mine operator on the fly. The assignment of currently available tasks to the team of agents is accomplished through an auction-based system, where the agents bid for available tasks and a central auctioneer uses these bids to optimally assign tasks to agents. A mobile Wi-Fi mesh supports inter-agent communication and bi-directional communication between the agents and the task allocator, while the task execution is performed completely infrastructure-free. Given a task to be accomplished, a reliable and modular agent behavior is synthesized by generating behavior trees from a pool of agent capabilities, using a back-chaining approach. The auction system in the proposed framework is reactive and supports the addition of new operator-specified tasks at any point through a user-friendly operator interface. The framework has been validated in a real underground mining environment using three aerial agents, with several inspection locations spread in an environment of almost 200 meters. The proposed framework can be utilized for missions involving rapid inspection, gas detection, distributed sensing, mapping, etc. in a subterranean environment. The proposed framework and its field deployment contribute towards furthering reliable automation in large-scale subterranean environments to offload both routine and dangerous tasks from human operators to autonomous aerial robots.
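As a rough illustration of the central auctioneer's role, the sketch below picks the assignment with the lowest total bid by exhaustive search. This is an assumption made for illustration only: the abstract does not state how the optimal assignment is computed, and the bid values, the cost interpretation of a bid, and the function name `assign_tasks` are all hypothetical. Exhaustive search is practical only for small teams such as the three agents mentioned above.

```python
from itertools import permutations


def assign_tasks(bids):
    """Return (task -> agent mapping, total cost) minimizing the summed bids.

    bids[a][t] is the cost agent a bids for task t (hypothetical convention).
    Each task gets a distinct agent, so this needs n_tasks <= n_agents.
    """
    n_agents = len(bids)
    n_tasks = len(bids[0])
    if n_tasks > n_agents:
        raise ValueError("more tasks than agents; queue the extra tasks first")

    best_cost, best_agents = float("inf"), None
    # Try every way of picking one distinct agent per task.
    for agents in permutations(range(n_agents), n_tasks):
        cost = sum(bids[a][t] for t, a in enumerate(agents))
        if cost < best_cost:
            best_cost, best_agents = cost, agents
    return {t: a for t, a in enumerate(best_agents)}, best_cost


# Three agents bidding on three inspection tasks (made-up costs).
bids = [
    [4, 1, 3],  # agent 0
    [2, 0, 5],  # agent 1
    [3, 2, 2],  # agent 2
]
assignment, total = assign_tasks(bids)
print(assignment, total)  # {0: 1, 1: 0, 2: 2} 5
```

A reactive auction would rerun this step whenever the operator adds a task or an agent becomes available. For larger teams, a polynomial-time assignment algorithm would replace the exhaustive search.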

Barriers on the EDGE: A scalable CBF architecture over EDGE for safe aerial-ground multi-agent coordination

Nov 25, 2024

Abstract: In this article, we address the problem of designing a scalable control architecture for the safe coordinated operation of a multi-agent system with aerial robots (UAVs) and ground robots (UGVs) in a confined task space. The proposed method uses Control Barrier Functions (CBFs) to impose constraints associated with (i) collision avoidance between agents, (ii) landing of UAVs on mobile UGVs, and (iii) task space restriction. Further, to account for the rapid increase in the number of constraints for a single agent with the increasing number of agents, the proposed architecture uses a centralized-decentralized Edge cluster, where a centralized node (Watcher) activates the relevant constraints, reducing the need for high onboard processing and network complexity. The distributed nodes run the controller locally to overcome latency and network issues. The proposed Edge architecture is experimentally validated using multiple aerial and ground robots in a confined environment performing a coordinated operation.

Robotic Exploration through Semantic Topometric Mapping

Jun 26, 2024

Abstract: In this article, we introduce a novel strategy for robotic exploration in unknown environments using a semantic topometric map. The semantic topometric map is generated by segmenting the grid map of the currently explored parts of the environment into regions, such as intersections, pathways, dead ends, and unexplored frontiers, which constitute the structural semantics of an environment. Like existing frameworks, the proposed exploration strategy leverages metric information about the frontier, such as the distance and angle to it. The key difference is that it also uses structural semantic information, such as properties of the intersections leading to frontiers. The algorithm for generating the semantic topometric map is lightweight, which makes the method's online execution both rapid and computationally efficient. Moreover, the proposed framework can be applied to both structured and unstructured indoor and outdoor environments, which enhances the versatility of the proposed exploration algorithm. We validate our exploration strategy and demonstrate the utility of structural semantics in exploration in two complex indoor environments, using a Turtlebot3 as the robotic agent. Compared to traditional frontier-based methods, our findings indicate that the proposed approach leads to faster exploration and requires less computation time.

GRID-FAST: A Grid-based Intersection Detection for Fast Semantic Topometric Mapping

Jun 17, 2024

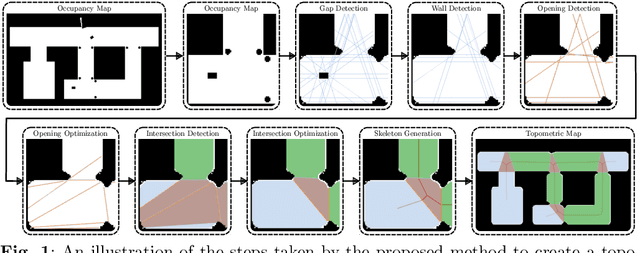

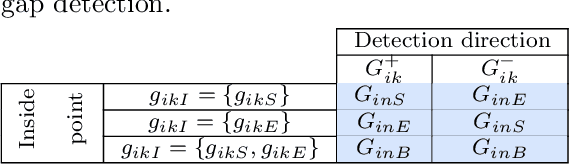

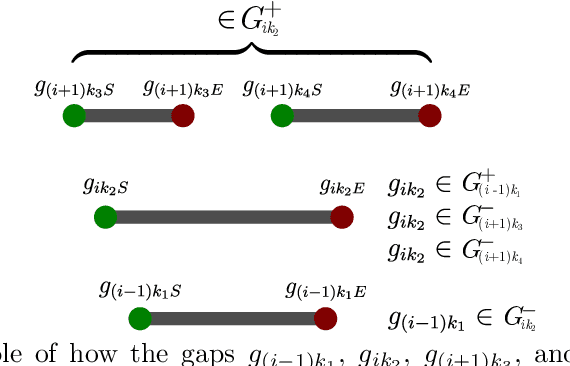

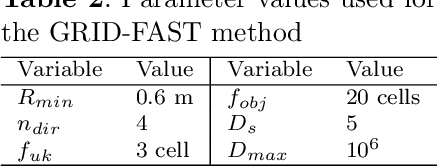

Abstract: This article introduces a novel approach to constructing a topometric map that allows for efficient navigation and decision-making in mobile robotics applications. The method generates the topometric map from a 2D grid-based map. The topometric map segments areas of the input map into different structural-semantic classes: intersections, pathways, dead ends, and pathways leading to unexplored areas. This method is grounded in a new technique for intersection detection that identifies the area and the openings of intersections in a semantically meaningful way. The framework introduces two levels of pre-filtering with minimal computational cost to eliminate small openings and objects from the map which are unimportant in the context of high-level map segmentation and decision making. The topological map generated by GRID-FAST enables fast navigation in large-scale environments, and the structural semantics can aid in mission planning, autonomous exploration, and human-to-robot cooperation. The efficacy of the proposed method is demonstrated through validation on real maps gathered from robotic experiments: 1) a structured indoor environment, 2) an unstructured cave-like subterranean environment, and 3) a large-scale outdoor environment, which comprises pathways, buildings, and scattered objects. Additionally, the proposed framework has been compared with state-of-the-art topological mapping solutions and is able to produce a topometric and topological map with up to 92% fewer nodes than the next best solution.

3D Voxel Maps to 2D Occupancy Maps for Efficient Path Planning for Aerial and Ground Robots

Jun 11, 2024

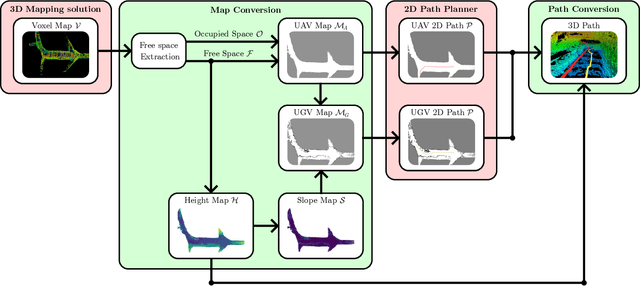

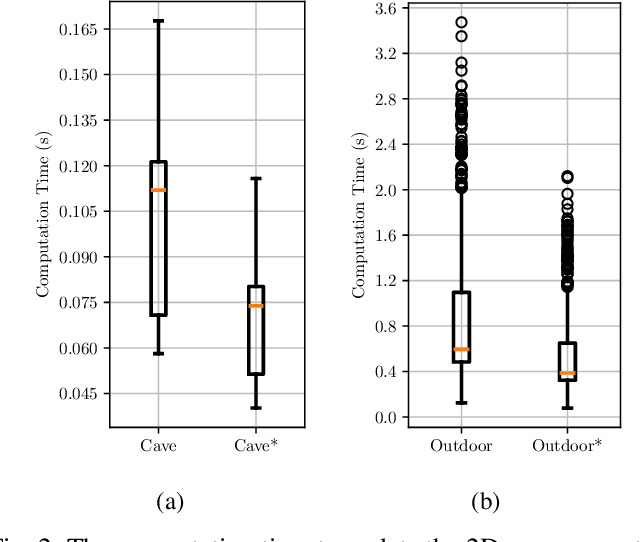

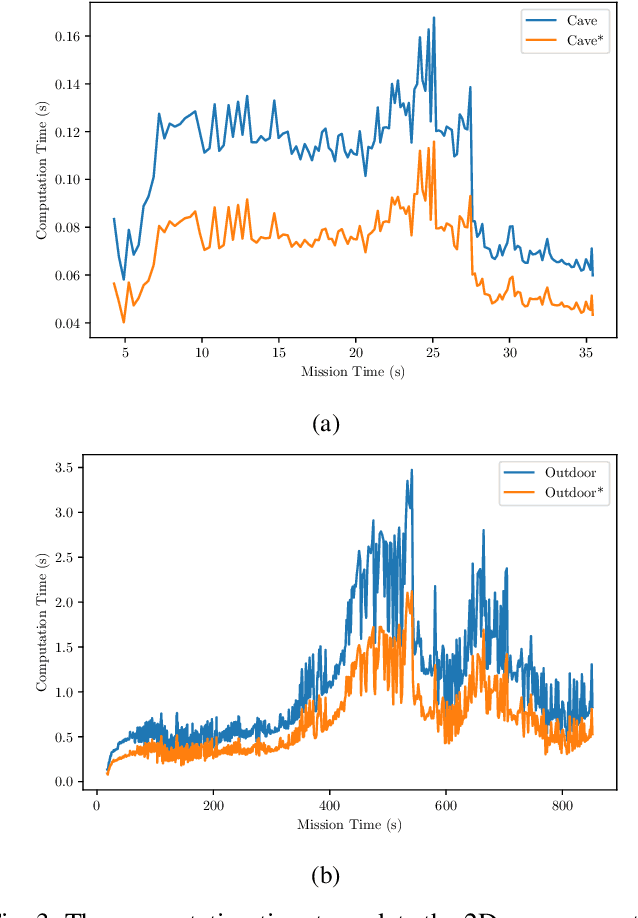

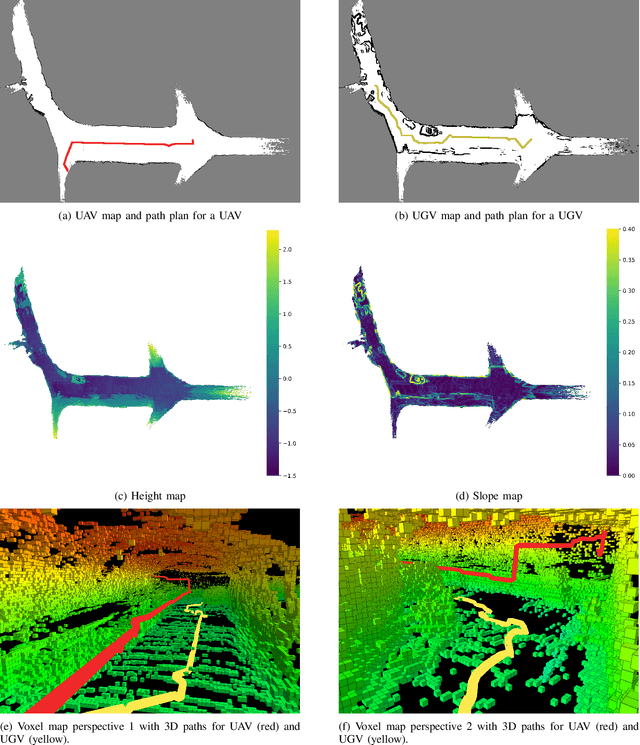

Abstract: This article introduces a novel method for converting 3D voxel maps, commonly utilized by robots for localization and navigation, into 2D occupancy maps that can be used for more computationally efficient large-scale navigation, both in the sense of computation time and memory usage. The main aim is to effectively integrate the distinct mapping advantages of 2D and 3D maps to enable efficient path planning for both unmanned aerial vehicles (UAVs) and unmanned ground vehicles (UGVs). The proposed method uses the free space representation in the UFOMap mapping solution to generate 2D occupancy maps with height and slope information. In the process of 3D to 2D map conversion, the proposed method conducts safety checks and eliminates free spaces in the map with dimensions (in the height axis) lower than the robot's safety margins. This allows an aerial or ground robot to navigate safely, relying primarily on the 2D map generated by the method. Additionally, the method extracts height and slope data from the 3D voxel map. The slope data identifies areas too steep for a ground robot to traverse, marking them as occupied, thus enabling a more accurate representation of the terrain for ground robots. The height data is utilized to convert paths generated using the 2D map into paths in 3D space for both UAVs and UGVs. The effectiveness of the proposed method is evaluated in two different environments.

A CBF-Adaptive Control Architecture for Visual Navigation for UAV in the Presence of Uncertainties

Feb 16, 2024

Abstract: In this article, we propose a control solution for the safe transfer of a quadrotor UAV between two surface robots, where the UAV positions itself using only the visual features on the surface robots. The solution enforces safety constraints for precise landing and visual locking in the presence of modeling uncertainties and external disturbances. The controller handles the ascending and descending phases of the navigation using a visual locking control barrier function (VCBF) and a parametrizable switching descending CBF (DCBF), respectively, eliminating the need for an external planner. The control scheme uses a backstepping approach for the position controller, with the CBF filter acting on the position kinematics to produce a filtered virtual velocity control input, which is tracked by an adaptive controller to overcome modeling uncertainties and external disturbances. The experimental validation is carried out with a UAV that navigates from the base to the target using an RGB camera.

Environmental Awareness Dynamic 5G QoS for Retaining Real Time Constraints in Robotic Applications

Feb 09, 2024

Abstract: The fifth generation (5G) cellular network technology is mature and increasingly utilized in many industrial and robotics applications, and one of its important functionalities is its advanced Quality of Service (QoS) features. Despite the prevalence of 5G QoS discussions in the related literature, there is a notable absence of real-life implementations and studies concerning their application in time-critical robotics scenarios. This article considers the operation of time-critical applications for 5G-enabled unmanned aerial vehicles (UAVs) and how their operation can be improved by dynamically switching between QoS data flows with different priorities. To this end, we introduce a robotics-oriented analysis of the impact of the 5G QoS functionality on the performance of 5G-enabled UAVs. Furthermore, we introduce a novel framework for the dynamic selection of distinct 5G QoS data flows that is autonomously managed by the 5G-enabled UAV. This problem is addressed in a feedback-loop fashion utilizing a probabilistic finite state machine (PFSM). Finally, the efficacy of the proposed scheme is experimentally validated with a 5G-enabled UAV in a real-world 5G stand-alone (SA) network.
