Konstantinos Karydis

Visual Alignment of Medical Vision-Language Models for Grounded Radiology Report Generation

Dec 18, 2025

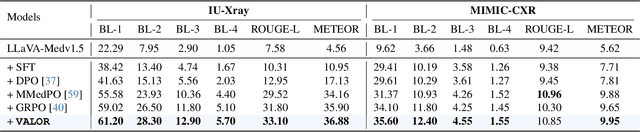

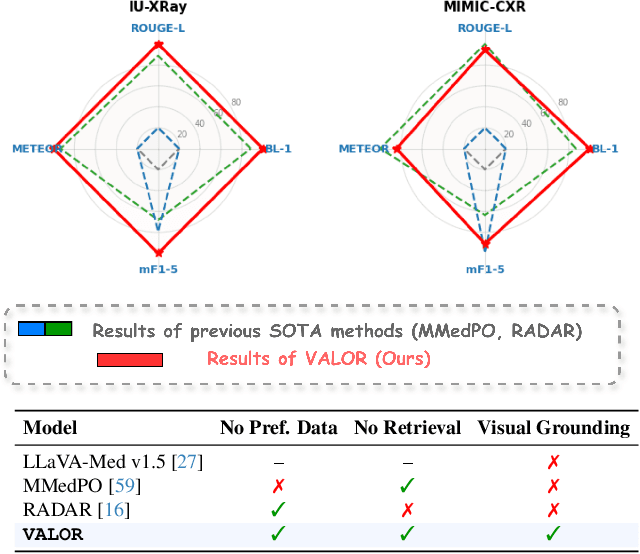

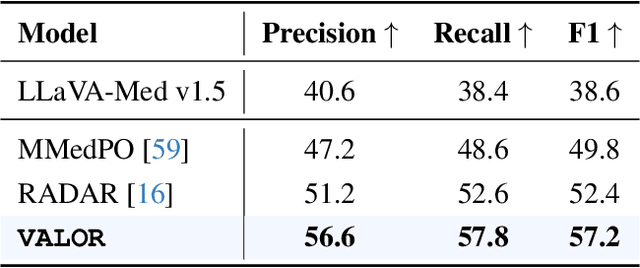

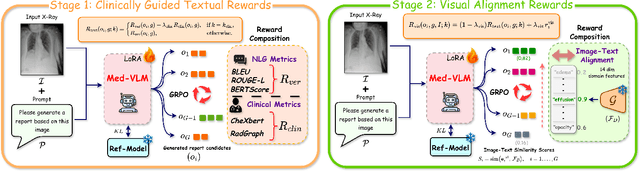

Abstract: Radiology Report Generation (RRG) is a critical step toward automating healthcare workflows, facilitating accurate patient assessments, and reducing the workload of medical professionals. Despite recent progress in Large Medical Vision-Language Models (Med-VLMs), generating radiology reports that are both visually grounded and clinically accurate remains a significant challenge. Existing approaches often rely on large labeled corpora for pre-training, costly task-specific preference data, or retrieval-based methods. However, these strategies do not adequately mitigate hallucinations arising from poor cross-modal alignment between visual and linguistic representations. To address these limitations, we propose VALOR: Visual Alignment of Medical Vision-Language Models for GrOunded Radiology Report Generation. Our method introduces a reinforcement learning-based post-alignment framework utilizing Group-Relative Proximal Optimization (GRPO). The training proceeds in two stages: (1) improving the Med-VLM with textual rewards to encourage clinically precise terminology, and (2) aligning the vision projection module of the textually grounded model with disease findings, thereby guiding attention toward image regions most relevant to the diagnostic task. Extensive experiments on multiple benchmarks demonstrate that VALOR substantially improves factual accuracy and visual grounding, achieving significant performance gains over state-of-the-art report generation methods.

A Koopman Operator-based NMPC Framework for Mobile Robot Navigation under Uncertainty

Apr 29, 2025

Abstract: Mobile robot navigation can be challenged by system uncertainty. For example, ground friction may vary abruptly causing slipping, and noisy sensor data can lead to inaccurate feedback control. Traditional model-based methods may be limited when considering such variations, making them fragile to varying types of uncertainty. One way to address this is by leveraging learned prediction models by means of the Koopman operator into nonlinear model predictive control (NMPC). This paper describes the formulation of, and provides the solution to, an NMPC problem using a lifted bilinear model that can accurately predict affine input systems with stochastic perturbations. System constraints are defined in the Koopman space, while the optimization problem is solved in the state space to reduce computational complexity. Training data to estimate the Koopman operator for the system are given via randomized control inputs. The output of the developed method enables closed-loop navigation control over environments populated with obstacles. The effectiveness of the proposed method has been tested through numerical simulations using a wheeled robot with additive stochastic velocity perturbations, Gazebo simulations with a realistic digital twin robot, and physical hardware experiments without knowledge of the true dynamics.
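As a rough illustration of estimating a lifted bilinear model from data gathered with randomized control inputs, the sketch below fits z_next ≈ A z + u (B z) by least squares. It is not the paper's implementation: the toy scalar system, the observable dictionary `lift`, and the function name `fit_bilinear_koopman` are all assumptions chosen so that the bilinear form is exact and easy to check.

```python
import numpy as np

def lift(x):
    """Hypothetical dictionary of observables: z = [1, x]."""
    return np.array([1.0, x])

def fit_bilinear_koopman(xs, us, xs_next):
    """Least-squares fit of z_next ~ A z + u * (B z) for a scalar input u."""
    Z = np.stack([lift(x) for x in xs], axis=1)            # (n, N) lifted states
    Z_next = np.stack([lift(x) for x in xs_next], axis=1)  # (n, N) lifted successors
    Phi = np.vstack([Z, Z * us])                           # (2n, N) regressors [z; u z]
    # Solve Z_next ~ K Phi for K = [A B] in the least-squares sense.
    K = np.linalg.lstsq(Phi.T, Z_next.T, rcond=None)[0].T  # (n, 2n)
    n = Z.shape[0]
    return K[:, :n], K[:, n:]

# Toy input-affine system (an assumption for illustration): x_next = 0.9 x + 0.1 u,
# excited with randomized control inputs.
rng = np.random.default_rng(0)
xs = rng.uniform(-1.0, 1.0, size=200)
us = rng.uniform(-1.0, 1.0, size=200)
xs_next = 0.9 * xs + 0.1 * us

A, B = fit_bilinear_koopman(xs, us, xs_next)
```

For this noise-free toy system the fit recovers the dynamics exactly in the lifted coordinates; with stochastic perturbations or a richer dictionary, the same regression would return only an approximation.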

Deformable Multibody Modeling for Model Predictive Control in Legged Locomotion with Embodied Compliance

Apr 28, 2025

Abstract: The paper presents a method to stabilize dynamic gait for a legged robot with embodied compliance. Our approach introduces a unified description for rigid and compliant bodies to approximate their deformation and a formulation for deformable multibody systems. We develop the centroidal composite predictive deformed inertia (CCPDI) tensor of a deformable multibody system and show how to integrate it with the standard-of-practice model predictive controller (MPC). Simulation shows that the resultant control framework can stabilize trot stepping on a quadrupedal robot with both rigid and compliant spines under the same MPC configurations. Compared to standard MPC, the developed CCPDI-enabled MPC distributes the ground reactive forces closer to the heuristics for body balance, and it is thus more likely to stabilize the gaits of the compliant robot. A parametric study shows that our method preserves some level of robustness within a suitable envelope of key parameter values.

Leveraging Synthetic Adult Datasets for Unsupervised Infant Pose Estimation

Apr 08, 2025

Abstract: Human pose estimation is a critical tool across a variety of healthcare applications. Despite significant progress in pose estimation algorithms targeting adults, such developments for infants remain limited. Existing algorithms for infant pose estimation, despite achieving commendable performance, depend on fully supervised approaches that require large amounts of labeled data. These algorithms also struggle with poor generalizability under distribution shifts. To address these challenges, we introduce SHIFT: Leveraging SyntHetic Adult Datasets for Unsupervised InFanT Pose Estimation, which leverages the pseudo-labeling-based Mean-Teacher framework to compensate for the lack of labeled data and addresses distribution shifts by enforcing consistency between the student and the teacher pseudo-labels. Additionally, to penalize implausible predictions obtained from the mean-teacher framework, we incorporate an infant manifold pose prior. To enhance SHIFT's self-occlusion perception ability, we propose a novel visibility consistency module for improved alignment of the predicted poses with the original image. Extensive experiments on multiple benchmarks show that SHIFT significantly outperforms existing state-of-the-art unsupervised domain adaptation (UDA) pose estimation methods by 5% and supervised infant pose estimation methods by a margin of 16%. The project page is available at: https://sarosijbose.github.io/SHIFT.
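The two generic ingredients of a mean-teacher setup mentioned above (a teacher that tracks the student, and a consistency term between their predictions) can be sketched as follows. This is a minimal illustration, not SHIFT's code: the dictionary-of-arrays parameter format, the squared-error consistency term, and the names `ema_update` and `consistency_loss` are assumptions.

```python
import numpy as np

def ema_update(teacher, student, alpha=0.99):
    """Move each teacher parameter toward the student by exponential moving average."""
    return {k: alpha * teacher[k] + (1.0 - alpha) * student[k] for k in teacher}

def consistency_loss(student_kpts, teacher_kpts):
    """Mean squared distance between student and teacher keypoints, shape (K, 2)."""
    return float(np.mean(np.sum((student_kpts - teacher_kpts) ** 2, axis=-1)))

# Hypothetical single-parameter "models" and a one-keypoint prediction.
teacher = {"w": np.zeros(2)}
student = {"w": np.ones(2)}
teacher = ema_update(teacher, student, alpha=0.9)

loss = consistency_loss(np.array([[0.0, 0.0]]), np.array([[3.0, 4.0]]))
```

In a typical mean-teacher loop only the student receives gradient updates; the teacher is refreshed with `ema_update` after each step, which tends to make its pseudo-labels more stable than the student's own outputs.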

SeGuE: Semantic Guided Exploration for Mobile Robots

Apr 04, 2025

Abstract: The rise of embodied AI applications has enabled robots to perform complex tasks which require a sophisticated understanding of their environment. To enable successful robot operation in such settings, maps must be constructed so that they include semantic information, in addition to geometric information. In this paper, we address the novel problem of semantic exploration, whereby a mobile robot must autonomously explore an environment to fully map both its structure and the semantic appearance of features. We develop a method based on next-best-view exploration, where potential poses are scored based on the semantic features visible from that pose. We explore two alternative methods for sampling potential views and demonstrate the effectiveness of our framework in both simulation and physical experiments. Automatic creation of high-quality semantic maps can enable robots to better understand and interact with their environments and enable future embodied AI applications to be more easily deployed.
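A simplified picture of scoring candidate poses by the features visible from them is given below. It is only a sketch under strong assumptions: a planar pose `(x, y, yaw)`, point features, a range-and-field-of-view visibility test with no occlusion, and a score equal to the visible-feature count. The names `visible` and `next_best_view` and the parameter values are hypothetical, not taken from SeGuE.

```python
import math

def visible(pose, feature, max_range=5.0, fov=math.radians(90)):
    """True if a 2D point feature lies within range and field of view of the pose."""
    x, y, yaw = pose
    dx, dy = feature[0] - x, feature[1] - y
    if math.hypot(dx, dy) > max_range:
        return False
    bearing = math.atan2(dy, dx) - yaw
    bearing = math.atan2(math.sin(bearing), math.cos(bearing))  # wrap to [-pi, pi]
    return abs(bearing) <= fov / 2

def next_best_view(candidates, features):
    """Score each candidate pose by its visible-feature count and return the best."""
    scores = [sum(visible(p, f) for f in features) for p in candidates]
    best = max(range(len(candidates)), key=scores.__getitem__)
    return candidates[best], scores

# Hypothetical features and two candidate poses at the origin facing +x and -x.
features = [(1.0, 0.0), (2.0, 0.5), (-3.0, 0.0)]
candidates = [(0.0, 0.0, 0.0), (0.0, 0.0, math.pi)]
best_pose, scores = next_best_view(candidates, features)
```

A practical scorer would also account for occlusion, for features that are already mapped, and for the travel cost of reaching each candidate; none of that is modeled here.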

Uncertainty-Aware Diffusion Guided Refinement of 3D Scenes

Mar 19, 2025

Abstract: Reconstructing 3D scenes from a single image is a fundamentally ill-posed task due to the severely under-constrained nature of the problem. Consequently, when the scene is rendered from novel camera views, existing single image to 3D reconstruction methods render incoherent and blurry views. This problem is exacerbated when the unseen regions are far away from the input camera. In this work, we address these inherent limitations in existing single image-to-3D scene feedforward networks. To alleviate the poor performance due to insufficient information beyond the input image's view, we leverage a strong generative prior in the form of a pre-trained latent video diffusion model, for iterative refinement of a coarse scene represented by optimizable Gaussian parameters. To ensure that the style and texture of the generated images align with that of the input image, we incorporate on-the-fly Fourier-style transfer between the generated images and the input image. Additionally, we design a semantic uncertainty quantification module that calculates the per-pixel entropy and yields uncertainty maps used to guide the refinement process from the most confident pixels while discarding the remaining highly uncertain ones. We conduct extensive experiments on real-world scene datasets, including in-domain RealEstate-10K and out-of-domain KITTI-v2, showing that our approach can provide more realistic and high-fidelity novel view synthesis results compared to existing state-of-the-art methods.
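The idea of a per-pixel entropy map that keeps confident pixels and discards uncertain ones can be illustrated with the Shannon entropy of per-pixel class probabilities. This is a generic sketch, not the paper's module: the `(H, W, C)` probability layout, the threshold value, and the names `pixel_entropy` and `confident_mask` are assumptions.

```python
import numpy as np

def pixel_entropy(probs, eps=1e-12):
    """Shannon entropy (in nats) per pixel for class probabilities of shape (H, W, C)."""
    p = np.clip(probs, eps, 1.0)  # avoid log(0)
    return -np.sum(p * np.log(p), axis=-1)

def confident_mask(probs, threshold):
    """Boolean (H, W) mask that keeps pixels whose entropy is below the threshold."""
    return pixel_entropy(probs) < threshold

# Hypothetical 1x2 "image": one fully certain pixel, one uniform over 4 classes.
probs = np.array([[[1.0, 0.0, 0.0, 0.0],
                   [0.25, 0.25, 0.25, 0.25]]])
entropy = pixel_entropy(probs)
mask = confident_mask(probs, threshold=0.5)
```

Entropy is zero for a one-hot distribution and reaches log(C) for a uniform one, so a threshold between those extremes separates the two pixels in this toy case.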

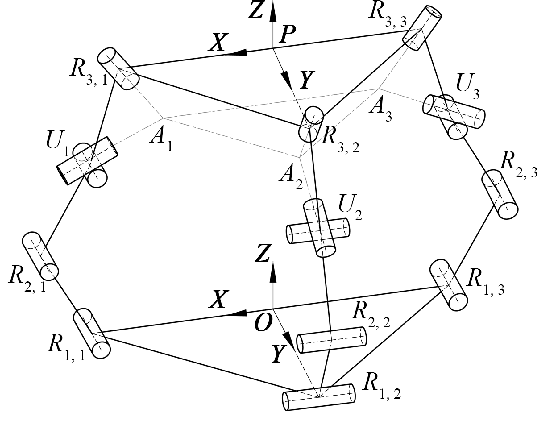

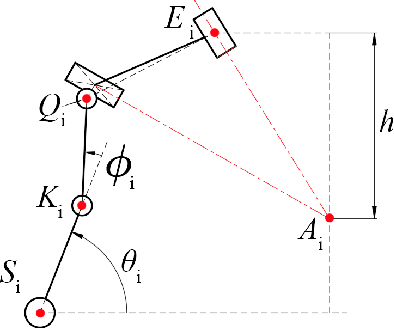

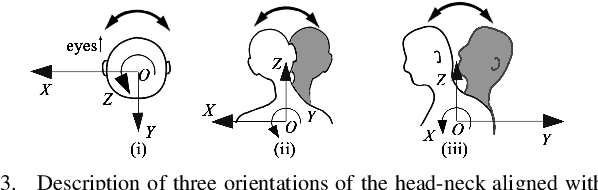

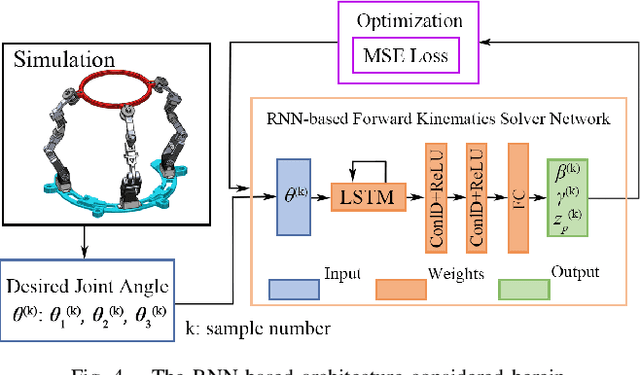

Learning-based Estimation of Forward Kinematics for an Orthotic Parallel Robotic Mechanism

Mar 14, 2025

Abstract: This paper introduces a 3D parallel robot with three identical five-degree-of-freedom chains connected to a circular brace end-effector, aimed to serve as an assistive device for patients with cervical spondylosis. The inverse kinematics of the system is solved analytically, whereas learning-based methods are deployed to solve the forward kinematics. The methods considered herein include a Koopman operator-based approach as well as a neural network-based approach. The task is to predict the position and orientation of end-effector trajectories. The dataset used to train these methods is based on the analytical solutions derived via inverse kinematics. The methods are tested both in simulation and via physical hardware experiments with the developed robot. Results validate the suitability of deploying learning-based methods for studying parallel mechanism forward kinematics that are generally hard to resolve analytically.

Preference VLM: Leveraging VLMs for Scalable Preference-Based Reinforcement Learning

Feb 03, 2025

Abstract: Preference-based reinforcement learning (RL) offers a promising approach for aligning policies with human intent but is often constrained by the high cost of human feedback. In this work, we introduce PrefVLM, a framework that integrates Vision-Language Models (VLMs) with selective human feedback to significantly reduce annotation requirements while maintaining performance. Our method leverages VLMs to generate initial preference labels, which are then filtered to identify uncertain cases for targeted human annotation. Additionally, we adapt VLMs using a self-supervised inverse dynamics loss to improve alignment with evolving policies. Experiments on Meta-World manipulation tasks demonstrate that PrefVLM achieves comparable or superior success rates to state-of-the-art methods while using up to 2x fewer human annotations. Furthermore, we show that adapted VLMs enable efficient knowledge transfer across tasks, further minimizing feedback needs. Our results highlight the potential of combining VLMs with selective human supervision to make preference-based RL more scalable and practical.

Unsupervised Domain Adaptation for Occlusion Resilient Human Pose Estimation

Jan 06, 2025

Abstract: Occlusions are a significant challenge to human pose estimation algorithms, often resulting in inaccurate and anatomically implausible poses. Although current occlusion-robust human pose estimation algorithms exhibit impressive performance on existing datasets, their success is largely attributed to supervised training and the availability of additional information, such as multiple views or temporal continuity. Furthermore, these algorithms typically suffer from performance degradation under distribution shifts. While existing domain adaptive human pose estimation algorithms address this bottleneck, they tend to perform suboptimally when the target domain images are occluded, a common occurrence in real-life scenarios. To address these challenges, we propose OR-POSE: Unsupervised Domain Adaptation for Occlusion Resilient Human POSE Estimation. OR-POSE is an innovative unsupervised domain adaptation algorithm which effectively mitigates domain shifts and overcomes occlusion challenges by employing the mean teacher framework for iterative pseudo-label refinement. Additionally, OR-POSE reinforces realistic pose prediction by leveraging a learned human pose prior which incorporates the anatomical constraints of humans in the adaptation process. Lastly, OR-POSE avoids overfitting to inaccurate pseudo labels generated from heavily occluded images by employing a novel visibility-based curriculum learning approach. This enables the model to gradually transition from training samples with relatively less occlusion to more challenging, heavily occluded samples. Extensive experiments show that OR-POSE outperforms existing analogous state-of-the-art algorithms by ~7% on challenging occluded human pose estimation datasets.

Adaptive LiDAR Odometry and Mapping for Autonomous Agricultural Mobile Robots in Unmanned Farms

Dec 03, 2024

Abstract: Unmanned and intelligent agricultural systems are crucial for enhancing agricultural efficiency and for helping mitigate the effect of labor shortage. However, unlike urban environments, agricultural fields impose distinct and unique challenges on autonomous robotic systems, such as the unstructured and dynamic nature of the environment, the rough and uneven terrain, and the resulting non-smooth robot motion. To address these challenges, this work introduces an adaptive LiDAR odometry and mapping framework tailored for autonomous agricultural mobile robots operating in complex agricultural environments. The proposed framework consists of a robust LiDAR odometry algorithm based on dense Generalized-ICP scan matching, and an adaptive mapping module that considers motion stability and point cloud consistency for selective map updates. The key design principle of this framework is to prioritize the incremental consistency of the map by rejecting motion-distorted points and sparse dynamic objects, which in turn leads to high accuracy in odometry estimated from scan matching against the map. The effectiveness of the proposed method is validated via extensive evaluation against state-of-the-art methods on field datasets collected in real-world agricultural environments featuring various planting types, terrain types, and robot motion profiles. Results demonstrate that our method can achieve accurate odometry estimation and mapping results consistently and robustly across diverse agricultural settings, whereas other methods are sensitive to abrupt robot motion and accumulated drift in unstructured environments. Further, the computational efficiency of our method is competitive compared with other methods. The source code of the developed method and the associated field dataset are publicly available at https://github.com/UCR-Robotics/AG-LOAM.
