Sung-Kyun Kim

An Addendum to NeBula: Towards Extending TEAM CoSTAR's Solution to Larger Scale Environments

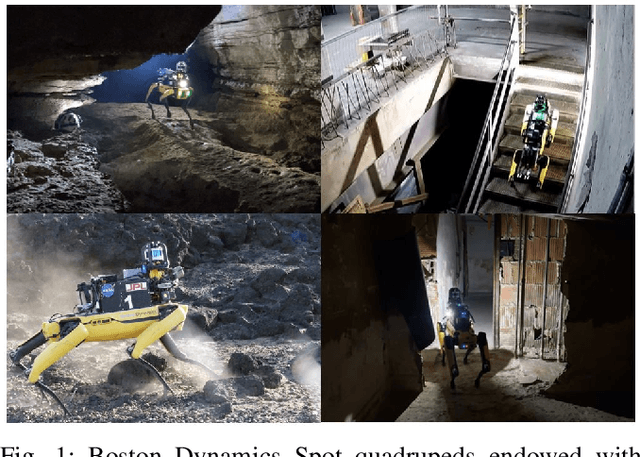

Apr 18, 2025

Abstract:This paper presents an appendix to the original NeBula autonomy solution developed by TEAM CoSTAR (Collaborative SubTerranean Autonomous Robots), a participant in the DARPA Subterranean Challenge. Specifically, this paper presents extensions to NeBula's hardware, software, and algorithmic components that focus on increasing the range and scale of the exploration environment. From the algorithmic perspective, we discuss the following extensions to the original NeBula framework: (i) large-scale geometric and semantic environment mapping; (ii) an adaptive positioning system; (iii) probabilistic traversability analysis and local planning; (iv) large-scale POMDP-based global motion planning and exploration behavior; (v) large-scale networking and decentralized reasoning; (vi) communication-aware mission planning; and (vii) multi-modal ground-aerial exploration solutions. We demonstrate the application and deployment of the presented systems and solutions in various large-scale underground environments, including limestone mine exploration scenarios as well as deployment in the DARPA Subterranean Challenge.

SayComply: Grounding Field Robotic Tasks in Operational Compliance through Retrieval-Based Language Models

Nov 18, 2024

Abstract:This paper addresses the problem of task planning for robots that must comply with operational manuals in real-world settings. Task planning under these constraints is essential for enabling autonomous robot operation in domains that require adherence to domain-specific knowledge. Current methods for generating robot goals and plans rely on common sense knowledge encoded in large language models. However, these models lack grounding of robot plans to domain-specific knowledge and are not easily transferable between multiple sites or customers with different compliance needs. In this work, we present SayComply, which enables grounding robotic task planning with operational compliance using retrieval-based language models. We design a hierarchical database of operational, environment, and robot embodiment manuals and procedures to enable efficient retrieval of the relevant context under the limited context length of the LLMs. We then design a task planner using a tree-based retrieval augmented generation (RAG) technique to generate robot tasks that follow user instructions while simultaneously complying with the domain knowledge in the database. We demonstrate the benefits of our approach through simulations and hardware experiments in real-world scenarios that require precise context retrieval across various types of context, outperforming the standard RAG method. Our approach bridges the gap in deploying robots that consistently adhere to operational protocols, offering a scalable and edge-deployable solution for ensuring compliance across varied and complex real-world environments. Project website: saycomply.github.io.
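
The idea of retrieving context by descending a hierarchical manual database can be illustrated with a toy sketch. Everything here is an assumption made for illustration: the `Node` structure, the example `MANUALS` contents, and the word-overlap score (a stand-in for whatever relevance measure a real retrieval-based language model pipeline would use). It is not the SayComply implementation.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Node:
    """Hypothetical manual-tree node: a summary plus either children or leaf text."""
    summary: str
    text: str = ""
    children: List["Node"] = field(default_factory=list)


def overlap(query: str, text: str) -> int:
    """Toy relevance score: number of lowercase words shared by query and text."""
    return len(set(query.lower().split()) & set(text.lower().split()))


def retrieve(root: Node, query: str) -> str:
    """Descend the tree, following the best-scoring child at each level."""
    node = root
    while node.children:
        node = max(node.children, key=lambda child: overlap(query, child.summary))
    return node.text


# Made-up example database with two manual categories.
MANUALS = Node(
    summary="all manuals",
    children=[
        Node(
            summary="operational procedures gas leak fire alarm",
            children=[
                Node("gas leak response",
                     "Evacuate the area and notify the site supervisor."),
                Node("fire alarm response",
                     "Stop all tasks and return to the dock."),
            ],
        ),
        Node(
            summary="robot embodiment battery payload limits",
            children=[
                Node("battery charging limits",
                     "Return to charge below 20 percent battery."),
                Node("payload limits",
                     "Do not carry more than 5 kg."),
            ],
        ),
    ],
)

print(retrieve(MANUALS, "gas leak response"))
```

Only one leaf reaches the prompt per query, which is the point of the hierarchy when context length is limited; a practical system would likely keep several candidates per level rather than a single greedy branch.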

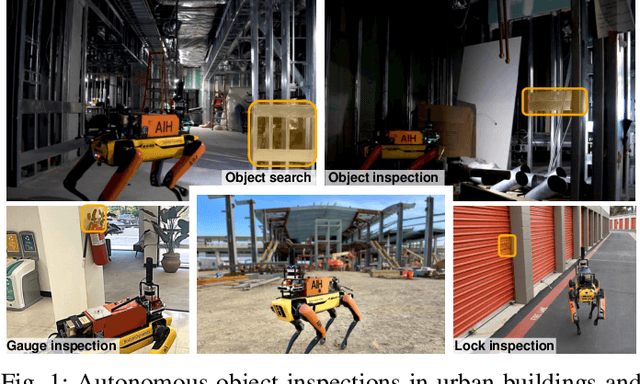

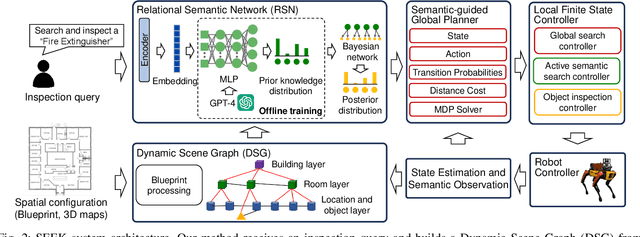

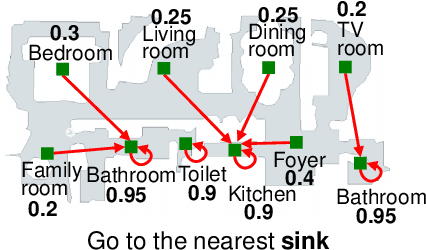

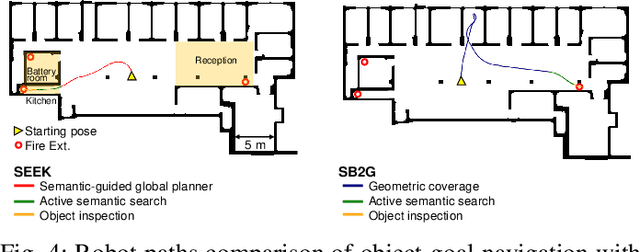

SEEK: Semantic Reasoning for Object Goal Navigation in Real World Inspection Tasks

May 16, 2024

Abstract:This paper addresses the problem of object-goal navigation in autonomous inspections in real-world environments. Object-goal navigation is crucial to enable effective inspections in various settings, often requiring the robot to identify the target object within a large search space. Current object inspection methods fall short of human efficiency because they typically cannot bootstrap prior and common sense knowledge as humans do. In this paper, we introduce a framework that enables robots to use semantic knowledge from prior spatial configurations of the environment and semantic common sense knowledge. We propose SEEK (Semantic Reasoning for Object Inspection Tasks), which combines semantic prior knowledge with the robot's observations to search for and navigate toward target objects more efficiently. SEEK maintains two representations: a Dynamic Scene Graph (DSG) and a Relational Semantic Network (RSN). The RSN is a compact and practical model that estimates the probability of finding the target object across spatial elements in the DSG. We propose a novel probabilistic planning framework to search for the object using relational semantic knowledge. Our simulation analyses demonstrate that SEEK is more efficient on object-goal inspection tasks than the classical planning and Large Language Model (LLM)-based methods examined in this study. We validated our approach on a physical legged robot in urban environments, showcasing its practicality and effectiveness in real-world inspection scenarios.
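
To make the notion of a probability of finding the target across spatial elements concrete, here is a minimal sketch of a Bayesian belief update after an unsuccessful search. The room names, prior values, and detection probability `p_detect` are invented for illustration, and the update rule is a textbook Bayes step, not the RSN or the planner described in the abstract.

```python
from typing import Dict


def update_after_miss(belief: Dict[str, float], searched: str,
                      p_detect: float = 0.9) -> Dict[str, float]:
    """Posterior over target location after searching `searched` and not finding it.

    Likelihood of a miss is (1 - p_detect) if the target is in the searched
    element and 1.0 if it is anywhere else.
    """
    unnormalized = {
        place: prob * ((1.0 - p_detect) if place == searched else 1.0)
        for place, prob in belief.items()
    }
    total = sum(unnormalized.values())
    if total <= 0.0:
        raise ValueError("miss is impossible under this belief and p_detect")
    return {place: prob / total for place, prob in unnormalized.items()}


# Hypothetical prior: a semantic model thinks the target is most likely in the kitchen.
prior = {"kitchen": 0.6, "office": 0.3, "hallway": 0.1}
posterior = update_after_miss(prior, searched="kitchen")
next_place = max(posterior, key=posterior.get)
print(next_place, round(posterior[next_place], 3))
```

After the miss, probability mass shifts from the searched room to the remaining ones, so a search planner driven by this belief would move on to the office next.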

Staircase Localization for Autonomous Exploration in Urban Environments

Mar 26, 2024

Abstract:A staircase localization method is proposed for robots to explore urban environments autonomously. The proposed method employs a modular design in the form of a cascade pipeline consisting of three modules: stair detection, line segment detection, and stair localization. The stair detection module utilizes an object detection algorithm based on deep learning to generate a region of interest (ROI). From the ROI, line segment features are extracted using a deep line segment detection algorithm. The extracted line segments are used to localize a staircase in terms of position, orientation, and stair direction. Stair detection and localization are performed with only a single RGB-D camera. No component of the proposed pipeline needs to be designed specifically for staircases, which makes it easy to maintain the whole pipeline and to replace each component with state-of-the-art deep learning detection techniques. The results of real-world experiments show that the proposed method can perform accurate stair detection and localization during autonomous exploration on various structured and unstructured staircases, both ascending and descending, with shadows, dirt, and occlusions by artificial and natural objects.

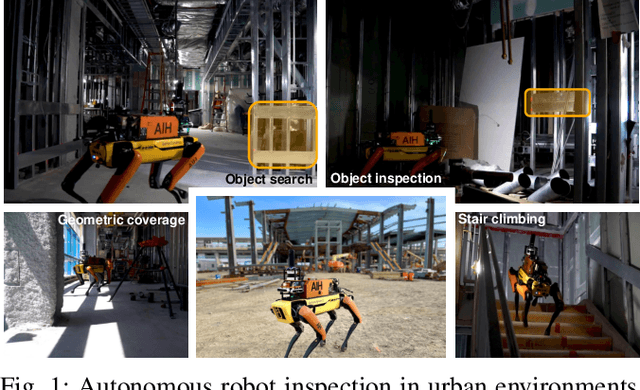

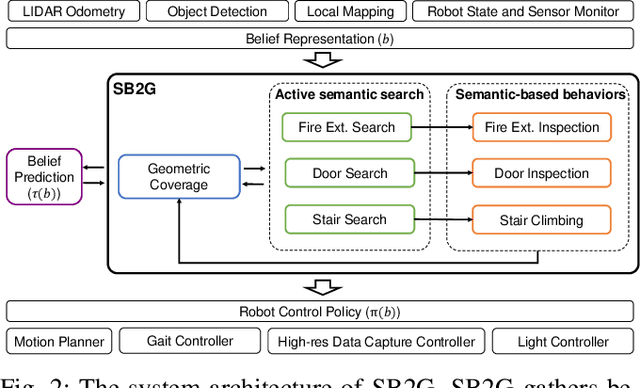

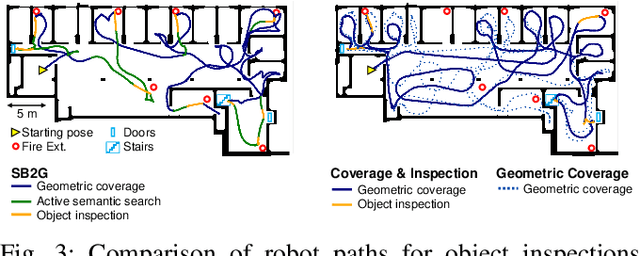

Semantic Belief Behavior Graph: Enabling Autonomous Robot Inspection in Unknown Environments

Jan 30, 2024

Abstract:This paper addresses the problem of autonomous robotic inspection in complex and unknown environments. This capability is crucial for efficient and precise inspections in various real-world scenarios, even when faced with perceptual uncertainty and lack of prior knowledge of the environment. Existing methods for real-world autonomous inspections typically rely on predefined targets and waypoints and often fail to adapt to dynamic or unknown settings. In this work, we introduce the Semantic Belief Behavior Graph (SB2G) framework as a novel approach to semantic-aware autonomous robot inspection. SB2G generates a control policy for the robot, featuring behavior nodes that encapsulate various semantic-based policies designed for inspecting different classes of objects. We design an active semantic search behavior to guide the robot in locating objects for inspection while reducing semantic information uncertainty. The edges in the SB2G encode transitions between these behaviors. We validate our approach through simulation and real-world urban inspections using a legged robotic platform. Our results show that SB2G enables a more efficient inspection policy, exhibiting performance comparable to human-operated inspections.

Safe and Efficient Navigation in Extreme Environments using Semantic Belief Graphs

Apr 02, 2023

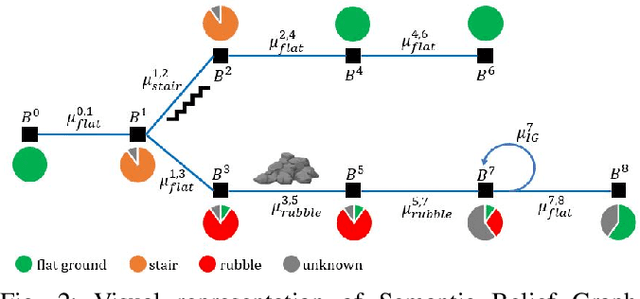

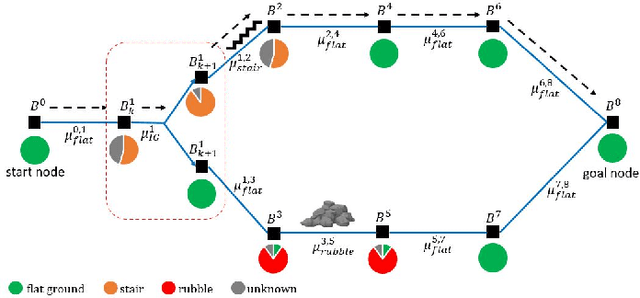

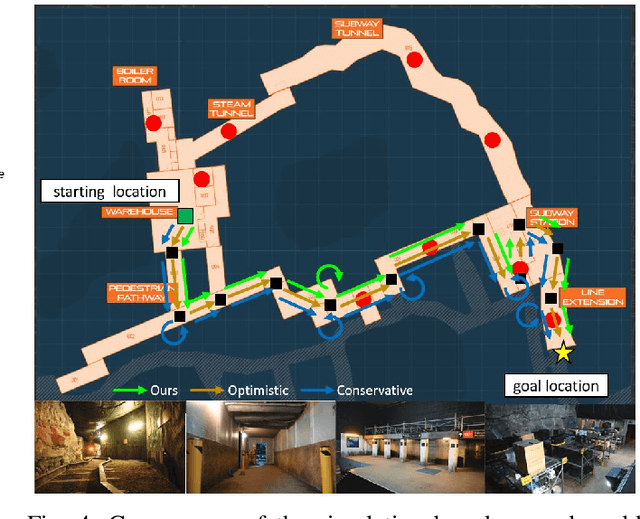

Abstract:To achieve autonomy in unknown and unstructured environments, we propose a method for semantic-based planning under perceptual uncertainty. This capability is crucial for safe and efficient robot navigation in environments with mobility-stressing elements that require terrain-specific locomotion policies. We propose the Semantic Belief Graph (SBG), a geometric- and semantic-based representation of a robot's probabilistic roadmap in the environment. The SBG nodes comprise the robot's geometric state and the semantic knowledge of the terrains in the environment. The SBG edges represent local semantic-based controllers that drive the robot between the nodes or invoke an information-gathering action to reduce semantic belief uncertainty. We formulate a semantic-based planning problem on the SBG that produces a policy for the robot to safely navigate to the target location with minimal traversal time. We analyze our method in simulation and present real-world results with a legged robotic platform navigating multi-level outdoor environments.

Adaptive Coverage Path Planning for Efficient Exploration of Unknown Environments

Feb 06, 2023

Abstract:We present a method for solving the coverage problem with the objective of autonomously exploring an unknown environment under mission time constraints. Here, the robot is tasked with planning a path over a horizon such that the accumulated area swept out by its sensor footprint is maximized. Because this problem exhibits a diminishing returns property known as submodularity, we choose to formulate it as a tree-based sequential decision making process. This formulation allows us to evaluate the effects of the robot's actions on future world coverage states, while simultaneously accounting for traversability risk and the dynamic constraints of the robot. To quickly find near-optimal solutions, we propose an effective approximation to the coverage sensor model which adapts to the local environment. Our method was extensively tested across various complex environments and served as the local exploration algorithm for a competing entry in the DARPA Subterranean Challenge.
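
The diminishing-returns (submodularity) property mentioned above can be seen in a small grid example. This sketch uses a plain greedy selection over candidate poses, which is a swapped-in illustration and not the tree-based sequential decision process from the abstract; the square `footprint` sensor model and the candidate poses are likewise assumptions.

```python
from typing import List, Set, Tuple

Cell = Tuple[int, int]


def footprint(pose: Cell, radius: int = 1) -> Set[Cell]:
    """Cells seen from `pose` by a hypothetical square sensor footprint."""
    x, y = pose
    return {(x + dx, y + dy)
            for dx in range(-radius, radius + 1)
            for dy in range(-radius, radius + 1)}


def marginal_gain(covered: Set[Cell], pose: Cell) -> int:
    """Number of newly covered cells if the robot also senses from `pose`."""
    return len(footprint(pose) - covered)


def greedy_coverage(candidates: List[Cell], budget: int) -> Tuple[List[Cell], List[int]]:
    """Pick up to `budget` poses, each time taking the largest marginal gain."""
    covered: Set[Cell] = set()
    remaining = list(candidates)
    chosen: List[Cell] = []
    gains: List[int] = []
    for _ in range(min(budget, len(remaining))):
        best = max(remaining, key=lambda pose: marginal_gain(covered, pose))
        gain = marginal_gain(covered, best)
        if gain == 0:
            break  # nothing new left to see
        chosen.append(best)
        gains.append(gain)
        covered |= footprint(best)
        remaining.remove(best)
    return chosen, gains


chosen, gains = greedy_coverage([(0, 0), (1, 0), (4, 0)], budget=3)
print(chosen, gains)
```

The pose `(1, 0)` is worth nine new cells on an empty map but only three once `(0, 0)` has been visited. That dependence of an action's value on what has already been covered is what a planner has to track along each candidate path.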

Fast and Scalable Signal Inference for Active Robotic Source Seeking

Jan 06, 2023

Abstract:In active source seeking, a robot takes repeated measurements in order to locate a signal source in a cluttered and unknown environment. A key component of an active source seeking robot planner is a model that can produce estimates of the signal at unknown locations with uncertainty quantification. This model allows the robot to plan for future measurements in the environment. Traditionally, this model has been in the form of a Gaussian process, which has difficulty scaling and cannot represent obstacles. We propose a global and local factor graph model for active source seeking, which allows the model to scale to a large number of measurements and represent unknown obstacles in the environment. We combine this model with extensions to a highly scalable planner to form a system for large-scale active source seeking. We demonstrate that our approach outperforms baseline methods in both simulated and real robot experiments.

Risk-aware Meta-level Decision Making for Exploration Under Uncertainty

Sep 12, 2022

Abstract:Robotic exploration of unknown environments is fundamentally a problem of decision making under uncertainty where the robot must account for uncertainty in sensor measurements, localization, action execution, as well as many other factors. For large-scale exploration applications, autonomous systems must overcome the challenges of sequentially deciding which areas of the environment are valuable to explore while safely evaluating the risks associated with obstacles and hazardous terrain. In this work, we propose a risk-aware meta-level decision making framework to balance the tradeoffs associated with local and global exploration. Meta-level decision making builds upon classical hierarchical coverage planners by switching between local and global policies with the overall objective of selecting the policy that is most likely to maximize reward in a stochastic environment. To decide when to switch, we use information about the environment history, traversability risk, and kinodynamic constraints to reason about the probability of successful policy execution. We have validated our solution both in simulation and in a variety of large-scale real-world hardware tests. Our results show that by balancing local and global exploration we are able to explore large-scale environments significantly more efficiently.
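
Switching between local and global policies based on the probability of successful execution can be sketched as a simple selection rule. The `PolicyEstimate` fields, the numbers, and the scoring by success probability times reward are all assumptions chosen for illustration; the abstract does not specify the actual decision rule.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class PolicyEstimate:
    """Hypothetical summary of one candidate exploration policy."""
    name: str
    expected_reward: float       # e.g. area expected to be covered if it succeeds
    success_probability: float   # e.g. derived from traversability risk


def select_policy(estimates: Iterable[PolicyEstimate]) -> PolicyEstimate:
    """Choose the policy with the highest risk-weighted reward."""
    return max(estimates, key=lambda e: e.success_probability * e.expected_reward)


local = PolicyEstimate("local", expected_reward=10.0, success_probability=0.9)
risky_global = PolicyEstimate("global", expected_reward=40.0, success_probability=0.2)
safer_global = PolicyEstimate("global", expected_reward=40.0, success_probability=0.3)

print(select_policy([local, risky_global]).name)
print(select_policy([local, safer_global]).name)
```

With these made-up numbers, the robot stays local while the route to the distant frontier is too risky and switches to the global policy once the estimated success probability rises.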

ACHORD: Communication-Aware Multi-Robot Coordination with Intermittent Connectivity

Jun 05, 2022

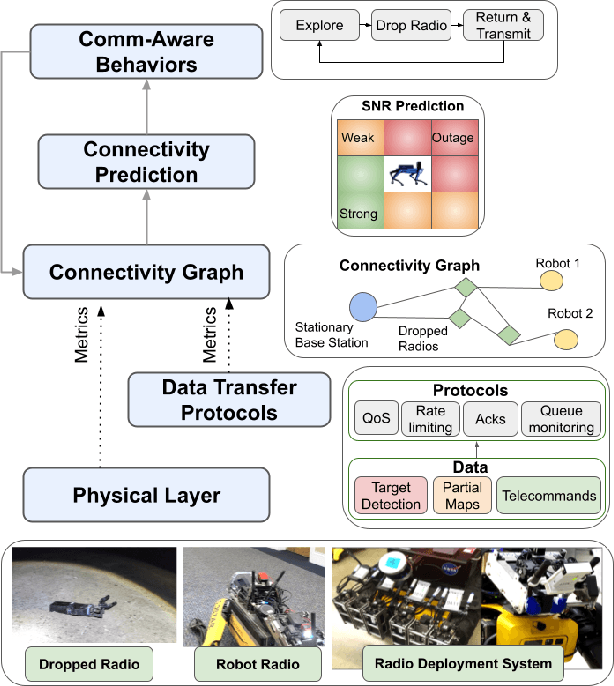

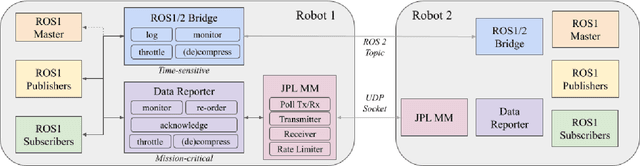

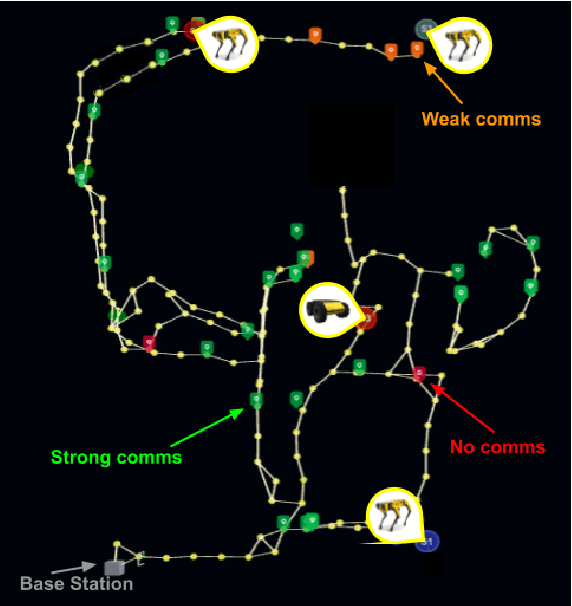

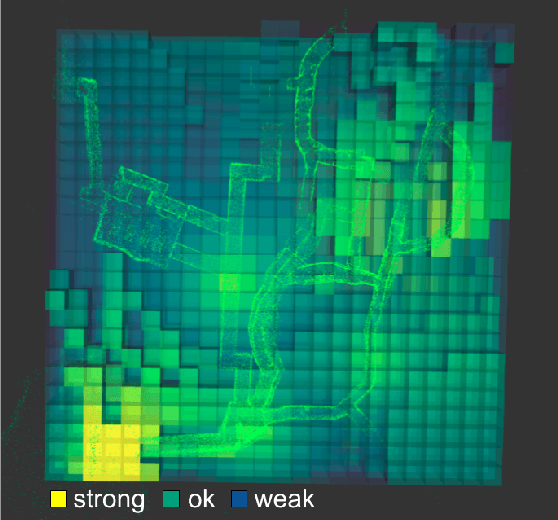

Abstract:Communication is an important capability for multi-robot exploration because (1) inter-robot communication (comms) improves coverage efficiency and (2) robot-to-base comms improves situational awareness. Exploring comms-restricted (e.g., subterranean) environments requires a multi-robot system to tolerate and anticipate intermittent connectivity and to carefully consider comms requirements; otherwise, mission-critical data may be lost. In this paper, we describe and analyze ACHORD (Autonomous & Collaborative High-Bandwidth Operations with Radio Droppables), a multi-layer networking solution which tightly co-designs the network architecture and high-level decision-making for improved comms. ACHORD provides bandwidth prioritization and timely and reliable data transfer despite intermittent connectivity. Furthermore, it exposes low-layer networking metrics to the application layer to enable robots to autonomously monitor, map, and extend the network via droppable radios, as well as restore connectivity to improve collaborative exploration. We evaluate our solution with respect to the comms performance in several challenging underground environments, including the DARPA SubT Finals competition environment. Our findings support the use of data stratification and flow control to improve bandwidth usage.
