Thomas Touma

AI Space Cortex: An Experimental System for Future Era Space Exploration

Jul 09, 2025

Abstract:Our Robust, Explainable Autonomy for Scientific Icy Moon Operations (REASIMO) effort contributes to NASA's Concepts for Ocean worlds Life Detection Technology (COLDTech) program, which explores science platform technologies for ocean worlds such as Europa and Enceladus. Ocean world missions pose significant operational challenges. These include long communication lags, limited power, and lifetime limitations caused by radiation damage and hostile conditions. Given these operational limitations, onboard autonomy will be vital for future ocean world missions. Besides managing nominal lander operations, onboard autonomy must react appropriately in the event of anomalies. Traditional spacecraft rely on a transition into 'safe mode', in which non-essential components and subsystems are powered off to preserve safety and maintain communication with Earth. For a severely time-limited ocean world mission, resolutions to these anomalies that can be executed without Earth-in-the-loop communication and its associated delays are paramount for completing the mission objectives and science goals. To address these challenges, the REASIMO effort aims to demonstrate a robust level of AI-assisted autonomy for such missions, including the ability to detect and recover from anomalies and to perform missions based on pre-trained behaviors rather than the hard-coded, predetermined logic used by all prior space missions. We developed an AI-assisted, personality-driven, intelligent framework for control of an ocean world mission by combining a mix of advanced technologies. To demonstrate the capabilities of the framework, we test autonomous sampling operations on a lander-manipulator testbed at the NASA Jet Propulsion Laboratory, approximating possible surface conditions such a mission might encounter.

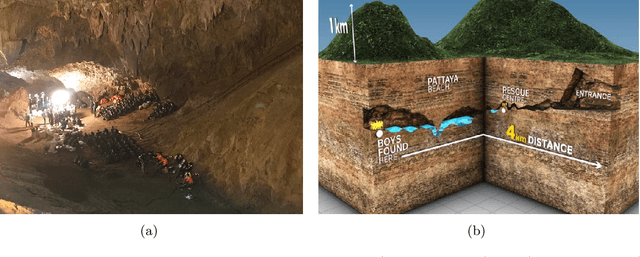

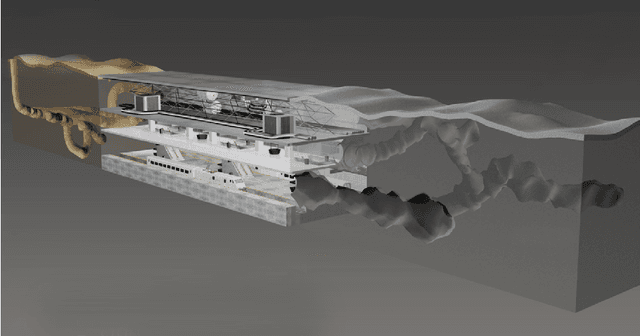

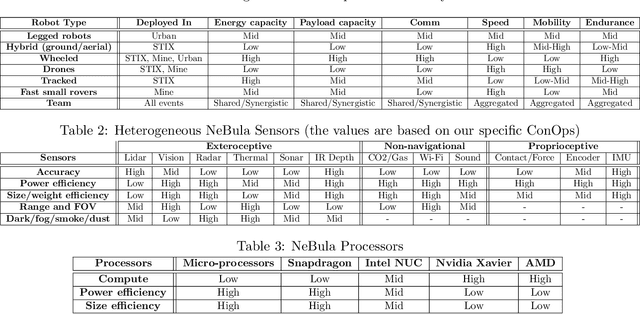

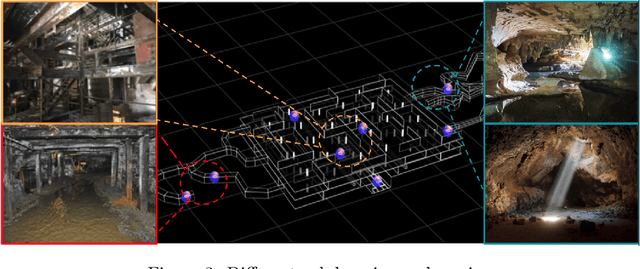

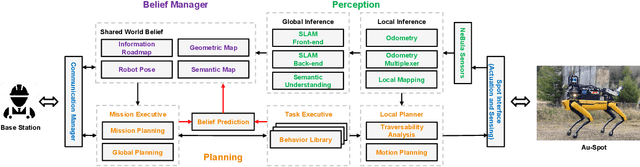

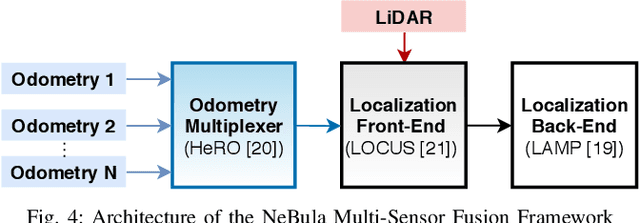

An Addendum to NeBula: Towards Extending TEAM CoSTAR's Solution to Larger Scale Environments

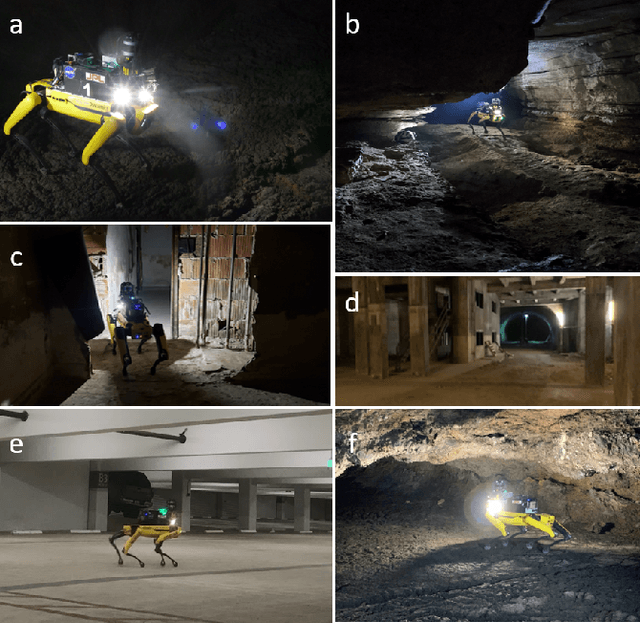

Apr 18, 2025

Abstract:This paper presents an appendix to the original NeBula autonomy solution developed by TEAM CoSTAR (Collaborative SubTerranean Autonomous Robots), a participant in the DARPA Subterranean Challenge. Specifically, this paper presents extensions to NeBula's hardware, software, and algorithmic components that focus on increasing the range and scale of the exploration environment. From the algorithmic perspective, we discuss the following extensions to the original NeBula framework: (i) large-scale geometric and semantic environment mapping; (ii) an adaptive positioning system; (iii) probabilistic traversability analysis and local planning; (iv) large-scale POMDP-based global motion planning and exploration behavior; (v) large-scale networking and decentralized reasoning; (vi) communication-aware mission planning; and (vii) multi-modal ground-aerial exploration solutions. We demonstrate the application and deployment of the presented systems and solutions in various large-scale underground environments, including limestone mine exploration scenarios as well as deployment in the DARPA Subterranean Challenge.

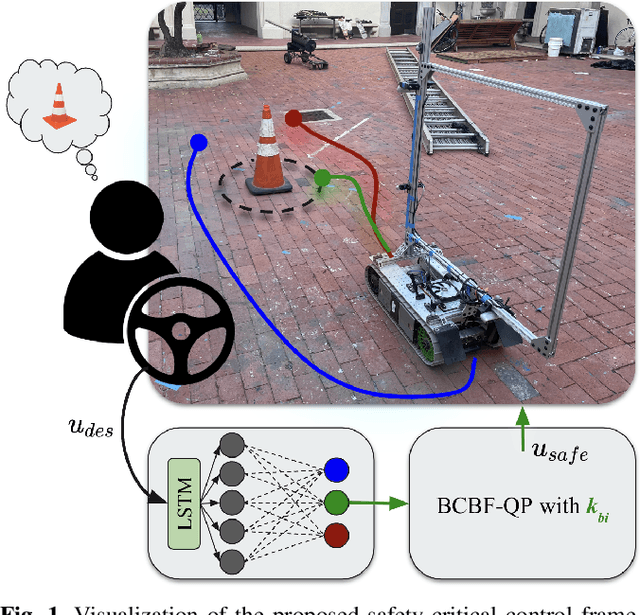

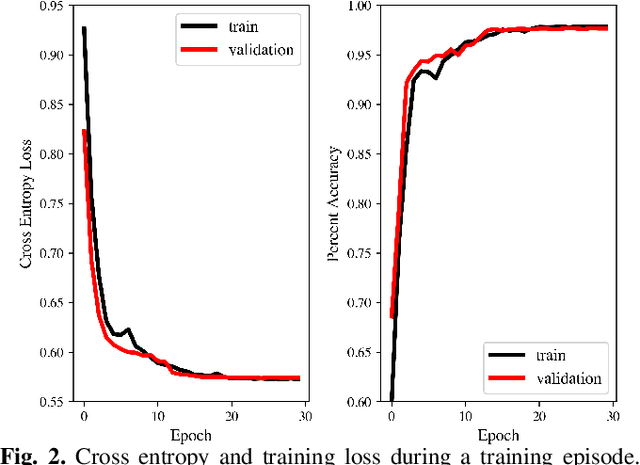

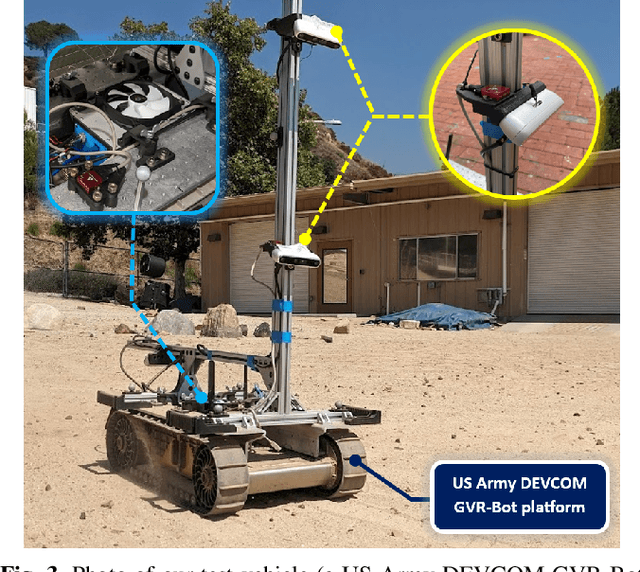

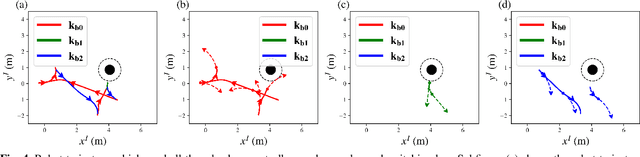

A Learning-Based Framework for Safe Human-Robot Collaboration with Multiple Backup Control Barrier Functions

Oct 09, 2023

Abstract:Ensuring robot safety in complex environments is a difficult task due to actuation limits, such as torque bounds. This paper presents a safety-critical control framework that leverages learning-based switching between multiple backup controllers to formally guarantee safety under bounded control inputs while satisfying driver intention. By leveraging backup controllers designed to uphold safety and input constraints, backup control barrier functions (BCBFs) construct implicitly defined control invariant sets via a feasible quadratic program (QP). However, BCBF performance largely depends on the design and conservativeness of the chosen backup controller, especially in our setting of human-driven vehicles in complex (e.g., off-road) conditions. While conservativeness can be reduced by using multiple backup controllers, determining when to switch is an open problem. Consequently, we develop a broadcast scheme that estimates driver intention and integrates BCBFs with multiple backup strategies for human-robot interaction. An LSTM classifier uses data inputs from the robot, human, and safety algorithms to continually choose a backup controller in real time. We demonstrate our method's efficacy on a dual-track robot in obstacle avoidance scenarios. Our framework guarantees robot safety while adhering to driver intention.
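To make the idea of a barrier-function safety filter under bounded inputs concrete, here is a minimal sketch. It is not the paper's method: it uses a plain control barrier function rather than a backup CBF, on an assumed one-dimensional system `x_dot = u` with an assumed safe set `x <= x_max`. The names `cbf_filter`, `alpha`, `x_max`, and `u_max` are hypothetical. In one dimension the QP `min (u - u_des)^2` subject to the barrier condition and input bounds reduces to clipping, so no solver is needed.

```python
def cbf_filter(x, u_des, x_max=1.0, alpha=2.0, u_max=1.0):
    """Return the input closest to u_des that keeps h(x) = x_max - x >= 0.

    Assumed toy dynamics: x_dot = u, with input bound |u| <= u_max.
    """
    # Barrier condition h_dot + alpha * h >= 0 becomes u <= alpha * (x_max - x).
    u_cbf = alpha * (x_max - x)
    upper = min(u_max, u_cbf)
    # A 1-D QP with interval constraints is solved by clipping u_des.
    # Inside the safe set (x <= x_max) the interval [-u_max, upper] is non-empty.
    return max(-u_max, min(u_des, upper))


def simulate(x0=0.0, u_des=1.0, dt=0.01, steps=500):
    """Forward-Euler rollout where the 'driver' always pushes toward the limit."""
    x = x0
    for _ in range(steps):
        x += dt * cbf_filter(x, u_des)
    return x
```

Calling `simulate()` drives the state toward `x_max` without crossing it, while the filtered input never exceeds `u_max`. A backup CBF replaces the hand-written `h` with a set defined implicitly by rolling out a backup controller, which is where the choice among several backup controllers matters.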

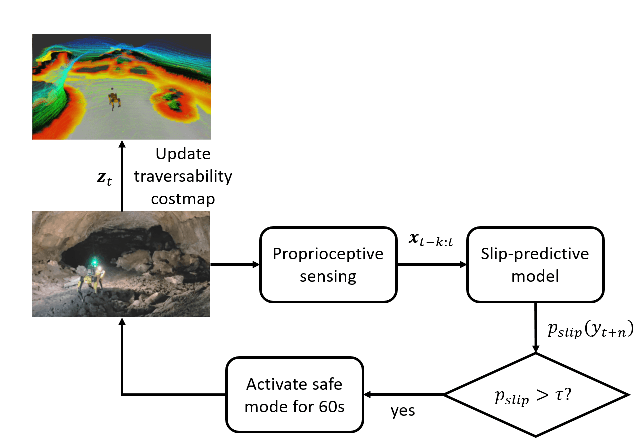

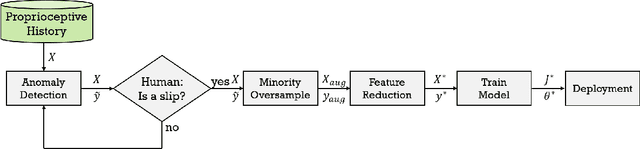

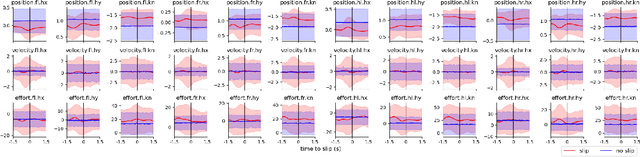

PrePARE: Predictive Proprioception for Agile Failure Event Detection in Robotic Exploration of Extreme Terrains

Jul 30, 2022

Abstract:Legged robots can traverse a wide variety of terrains, some of which may be challenging for wheeled robots, such as stairs or highly uneven surfaces. However, quadruped robots face stability challenges on slippery surfaces. To prevent potential falls, the robot can switch to more conservative and stable locomotion modes, such as crawl mode (where three feet are always in contact with the ground) or amble mode (where one foot touches down at a time). To tackle these challenges, we propose an approach to learn a model from past robot experience for predictive detection of potential failures. Accordingly, we trigger gait switching based solely on proprioceptive sensory information. To learn this predictive model, we propose a semi-supervised process for detecting and annotating ground truth slip events in two stages: we first detect abnormal occurrences in the time series sequences of the gait data using an unsupervised anomaly detector, and then the anomalies are verified with expert human knowledge in a replay simulation to assert the event of a slip. These annotated slip events are then used as ground truth examples to train an ensemble decision learner for predicting slip probabilities across terrains for traversability. We analyze our model on data recorded by a legged robot on multiple sites with slippery terrain. We demonstrate that a potential slip event can be predicted up to 720 ms ahead of a potential fall with an average precision greater than 0.95 and an average F-score of 0.82. Finally, we validate our approach in real time by deploying it on a legged robot and switching its gait mode based on slip event detection.

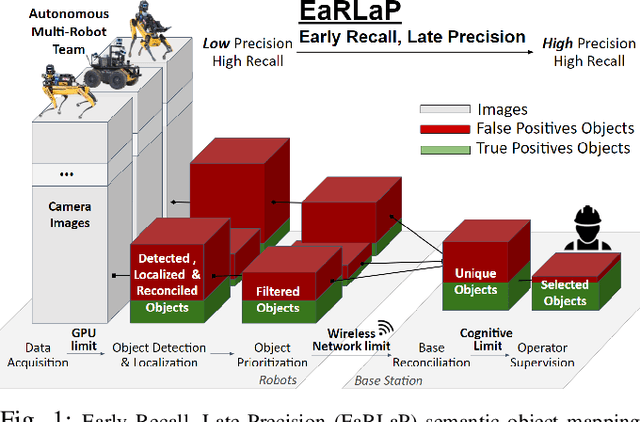

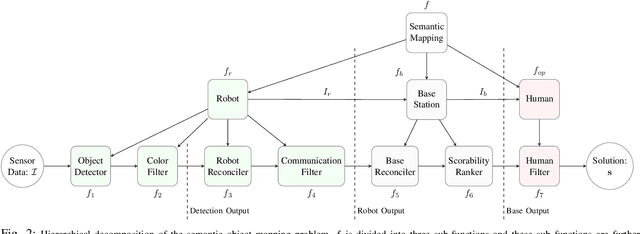

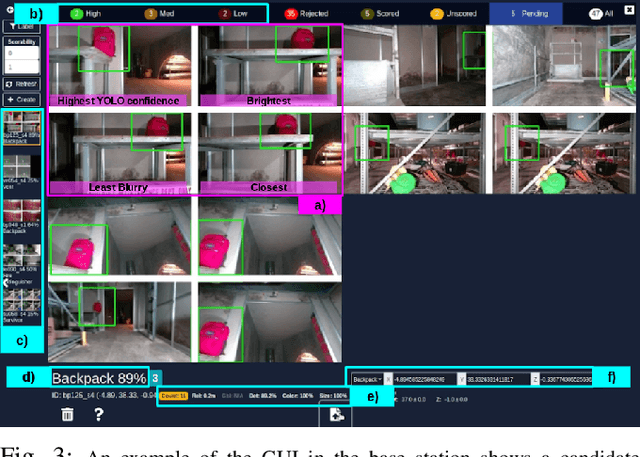

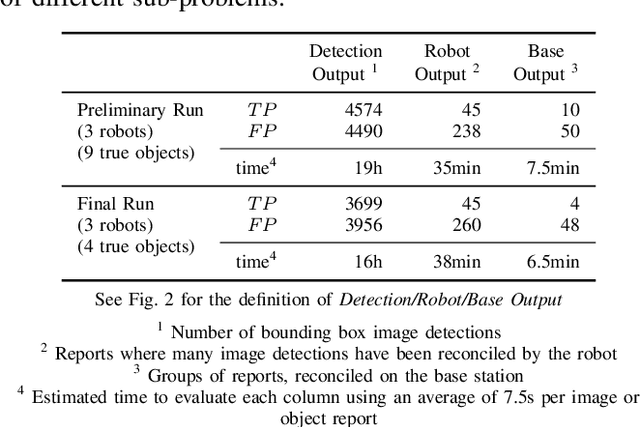

Early Recall, Late Precision: Multi-Robot Semantic Object Mapping under Operational Constraints in Perceptually-Degraded Environments

Jun 21, 2022

Abstract:Semantic object mapping in uncertain, perceptually degraded environments during long-range multi-robot autonomous exploration tasks such as search-and-rescue is important and challenging. During such missions, high recall is desirable to avoid missing true target objects, and high precision is also critical to avoid wasting valuable operational time on false positives. Given recent advancements in visual perception algorithms, the former is largely solvable autonomously, but the latter is difficult to address without the supervision of a human operator. However, operational constraints such as mission time, computational requirements, and mesh network bandwidth can make the operator's task infeasible unless properly managed. We propose the Early Recall, Late Precision (EaRLaP) semantic object mapping pipeline to solve this problem. EaRLaP was used by Team CoSTAR in the DARPA Subterranean Challenge, where it successfully detected all the artifacts encountered by the team of robots. We discuss these results and the performance of EaRLaP on various datasets.

ACHORD: Communication-Aware Multi-Robot Coordination with Intermittent Connectivity

Jun 05, 2022

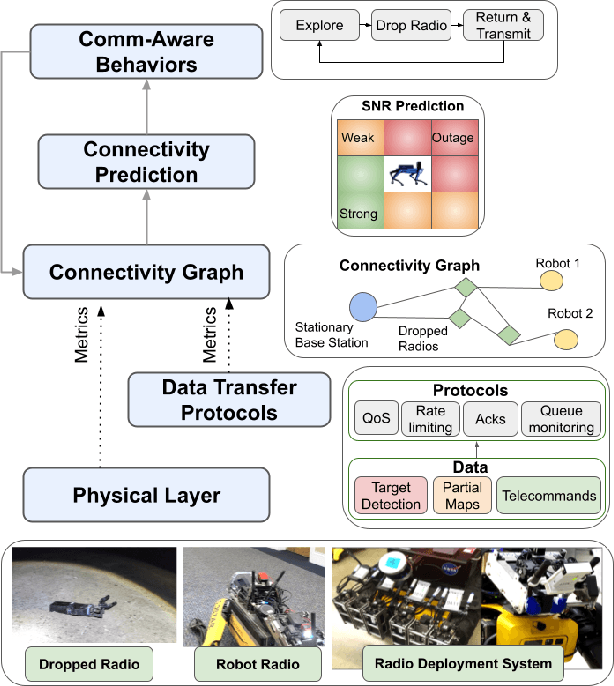

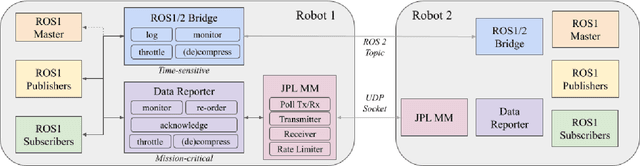

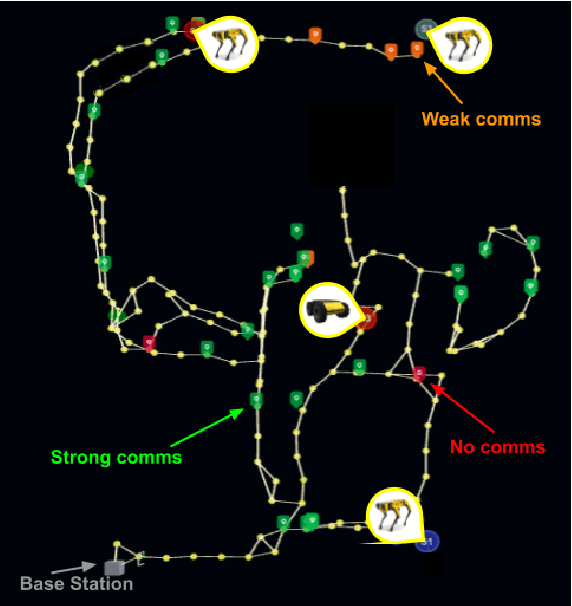

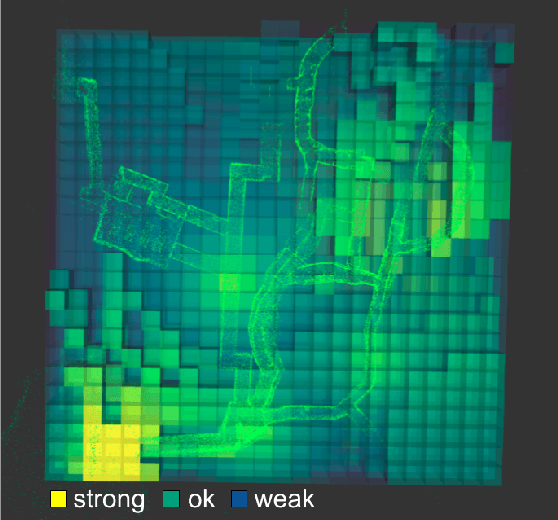

Abstract:Communication is an important capability for multi-robot exploration because (1) inter-robot communication (comms) improves coverage efficiency and (2) robot-to-base comms improves situational awareness. Exploring comms-restricted (e.g., subterranean) environments requires a multi-robot system to tolerate and anticipate intermittent connectivity and to carefully consider comms requirements; otherwise, mission-critical data may be lost. In this paper, we describe and analyze ACHORD (Autonomous & Collaborative High-Bandwidth Operations with Radio Droppables), a multi-layer networking solution that tightly co-designs the network architecture and high-level decision-making for improved comms. ACHORD provides bandwidth prioritization and timely, reliable data transfer despite intermittent connectivity. Furthermore, it exposes low-layer networking metrics to the application layer to enable robots to autonomously monitor, map, and extend the network via droppable radios, as well as restore connectivity to improve collaborative exploration. We evaluate our solution with respect to comms performance in several challenging underground environments, including the DARPA SubT Finals competition environment. Our findings support the use of data stratification and flow control to improve bandwidth usage.

NeBula: Quest for Robotic Autonomy in Challenging Environments; TEAM CoSTAR at the DARPA Subterranean Challenge

Mar 28, 2021

Abstract:This paper presents and discusses algorithms, hardware, and software architecture developed by TEAM CoSTAR (Collaborative SubTerranean Autonomous Robots), competing in the DARPA Subterranean Challenge. Specifically, it presents the techniques utilized within the Tunnel (2019) and Urban (2020) competitions, where CoSTAR achieved 2nd and 1st place, respectively. We also discuss CoSTAR's demonstrations in Martian-analog surface and subsurface (lava tubes) exploration. The paper introduces our autonomy solution, referred to as NeBula (Networked Belief-aware Perceptual Autonomy). NeBula is an uncertainty-aware framework that aims at enabling resilient and modular autonomy solutions by performing reasoning and decision making in the belief space (space of probability distributions over the robot and world states). We discuss various components of the NeBula framework, including: (i) geometric and semantic environment mapping; (ii) a multi-modal positioning system; (iii) traversability analysis and local planning; (iv) global motion planning and exploration behavior; (v) risk-aware mission planning; (vi) networking and decentralized reasoning; and (vii) learning-enabled adaptation. We discuss the performance of NeBula on several robot types (e.g., wheeled, legged, flying) in various environments. We discuss the specific results and lessons learned from fielding this solution in the challenging courses of the DARPA Subterranean Challenge competition.
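As a rough illustration of what reasoning over a belief (a probability distribution over states) means, the sketch below performs one discrete Bayesian measurement update. It is a generic textbook step, not NeBula's implementation; the function name `update_belief`, the two-state example, and the likelihood values are assumptions made for illustration.

```python
def update_belief(belief, likelihood):
    """One Bayesian measurement update over a finite set of states.

    belief[i]     : prior probability of state i (sums to 1)
    likelihood[i] : probability of the observation given state i
    """
    # Unnormalized posterior: prior times observation likelihood.
    posterior = [b * l for b, l in zip(belief, likelihood)]
    total = sum(posterior)
    if total == 0.0:
        raise ValueError("observation has zero probability under the belief")
    # Normalize so the posterior is again a probability distribution.
    return [p / total for p in posterior]


# Hypothetical example: is the corridor ahead "traversable" or "blocked"?
prior = [0.5, 0.5]
# Assumed sensor model: a "clear" reading is likelier if the corridor is traversable.
clear_reading = [0.9, 0.2]
posterior = update_belief(prior, clear_reading)
```

A planner that works in belief space makes decisions from `posterior` as a whole, for example by weighing the remaining probability of "blocked", instead of committing to a single most likely state.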

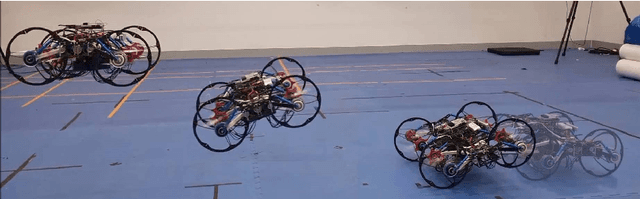

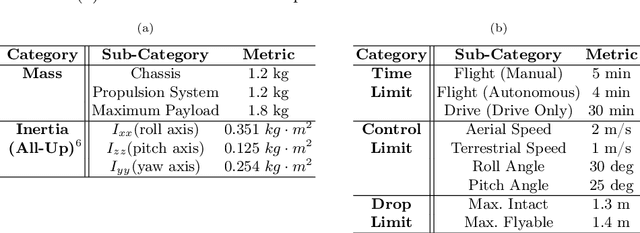

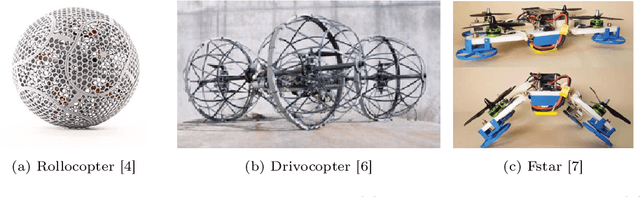

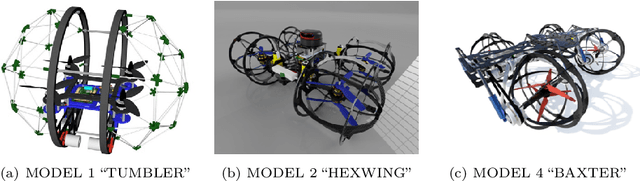

BAXTER: Bi-modal Aerial-Terrestrial Hybrid Vehicle for Long-endurance Versatile Mobility: Preprint Version

Feb 05, 2021

Abstract:Unmanned aerial vehicles are rapidly evolving within the field of robotics. However, their performance is often limited by payload capacity, operational time, and robustness to impact and collision. These limitations of aerial vehicles become more acute for missions in challenging environments such as subterranean structures, which may require extended autonomous operation in confined spaces. While software solutions for aerial robots are developing rapidly, improvements to hardware are critical to applying advanced planners and algorithms in large and dangerous environments, where the short range and high susceptibility to collisions of most modern aerial robots make applications in realistic subterranean missions infeasible. To provide such hardware capabilities, one needs to design and implement a hardware solution that takes into account the Size, Weight, and Power (SWaP) constraints. This work focuses on providing a robust and versatile hybrid platform that improves payload capacity, operation time, endurance, and versatility. The Bi-modal Aerial and Terrestrial hybrid vehicle (BAXTER) is a solution that provides two modes of operation, aerial and terrestrial. BAXTER employs two novel hardware mechanisms, the M-Suspension and the Decoupled Transmission, which together provide resilience during landing and crashes and efficient terrestrial operation. Extensive flight tests were conducted to characterize the vehicle's capabilities, including robustness and endurance. Additionally, we propose Agile Mode Transfer (AMT), a quick and simple transition from aerial to terrestrial operation that exploits BAXTER's resilience to impact and seeks to minimize impulses during impact with the ground.

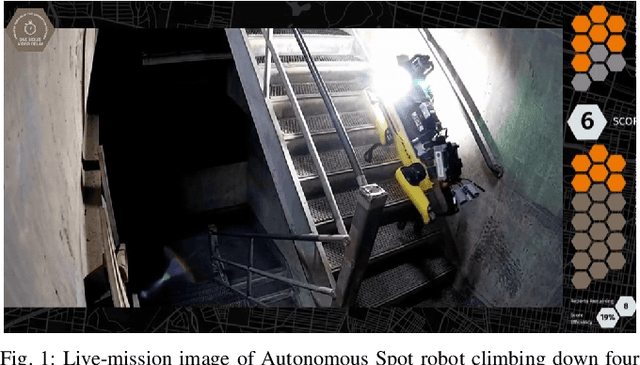

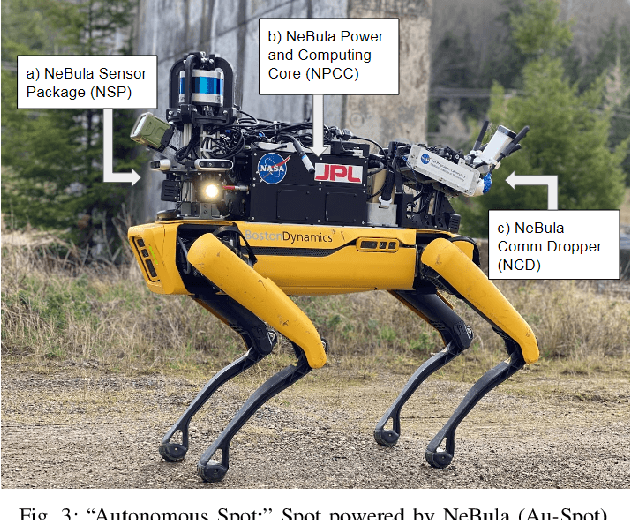

Autonomous Spot: Long-Range Autonomous Exploration of Extreme Environments with Legged Locomotion

Nov 01, 2020

Abstract:This paper serves as one of the first efforts to enable large-scale and long-duration autonomy using the Boston Dynamics Spot robot. Motivated by exploring extreme environments, particularly those involved in the DARPA Subterranean Challenge, this paper pushes the boundaries of the state-of-practice in enabling legged robotic systems to accomplish real-world complex missions in relevant scenarios. In particular, we discuss the behaviors and capabilities which emerge from the integration of the autonomy architecture NeBula (Networked Belief-aware Perceptual Autonomy) with next-generation mobility systems. We will discuss the hardware and software challenges, and solutions in mobility, perception, autonomy, and very briefly, wireless networking, as well as lessons learned and future directions. We demonstrate the performance of the proposed solutions on physical systems in real-world scenarios.
