Arndt F. Schilling

Continual Imitation Learning for Prosthetic Limbs

May 02, 2024

Abstract: Lower limb amputations and neuromuscular impairments severely restrict mobility, necessitating advancements beyond conventional prosthetics. Motorized bionic limbs offer promise, but their utility depends on mimicking the evolving synergy of human movement in various settings. In this context, we present a novel model for bionic prostheses that leverages camera-based motion capture and wearable sensor data to learn the synergistic coupling of the lower limbs during human locomotion, enabling it to infer the kinematic behavior of a missing lower limb across varied tasks, such as climbing inclines and stairs. We propose a model that can multitask, adapt continually, anticipate movements, and refine its predictions. The core of our method is an approach we call multitask prospective rehearsal, which anticipates and synthesizes future movements based on the previous prediction and employs a corrective mechanism for subsequent predictions. We design an evolving architecture that merges lightweight, task-specific modules on a shared backbone, ensuring both specificity and scalability. We empirically validate our model against various baselines using real-world human gait datasets, including experiments with transtibial amputees, which encompass a broad spectrum of locomotion tasks. The results show that our approach consistently outperforms baseline models, particularly under scenarios affected by distributional shifts, adversarial perturbations, and noise.
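The "lightweight, task-specific modules on a shared backbone" idea can be illustrated with a minimal sketch. This is not the authors' implementation: the class name `SharedBackboneModel`, the layer sizes, the random untrained weights, and the task names are all assumptions chosen for illustration, and the rehearsal and correction steps described in the abstract are not modeled here.

```python
import math
import random


class SharedBackboneModel:
    """Shared feature extractor plus one small output head per locomotion task."""

    def __init__(self, n_inputs, n_hidden, seed=0):
        self._rng = random.Random(seed)
        self.n_hidden = n_hidden
        # Shared backbone: n_hidden rows of n_inputs weights (untrained, illustrative).
        self.backbone = [
            [self._rng.uniform(-0.5, 0.5) for _ in range(n_inputs)]
            for _ in range(n_hidden)
        ]
        self.heads = {}  # task name -> head weights (hypothetical task names)

    def add_task(self, task):
        """Attach a new lightweight head; existing heads are left untouched."""
        self.heads[task] = [
            self._rng.uniform(-0.5, 0.5) for _ in range(self.n_hidden)
        ]

    def features(self, x):
        """Backbone features shared by every task."""
        return [
            math.tanh(sum(w * v for w, v in zip(row, x))) for row in self.backbone
        ]

    def predict(self, task, x):
        """Route the shared features through the head for the given task."""
        h = self.features(x)
        return sum(w * v for w, v in zip(self.heads[task], h))


model = SharedBackboneModel(n_inputs=3, n_hidden=4)
model.add_task("level_walking")
sample = [0.1, -0.2, 0.3]
before = model.predict("level_walking", sample)

model.add_task("stair_ascent")  # a new task arrives later
after = model.predict("level_walking", sample)
```

In this sketch, adding the `stair_ascent` head leaves the `level_walking` prediction unchanged because only a new head is created. A real continual-learning system would also update the shared backbone, which is where forgetting can occur and where a rehearsal mechanism becomes relevant.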

Embracing Large Language and Multimodal Models for Prosthetic Technologies

Mar 11, 2024

Abstract: This article presents a vision for the future of prosthetic devices, leveraging the advancements in large language models (LLMs) and large multimodal models (LMMs) to revolutionize the interaction between humans and assistive technologies. Unlike traditional prostheses, which rely on limited and predefined commands, this approach aims to develop intelligent prostheses that understand and respond to users' needs through natural language and multimodal inputs. The realization of this vision involves developing a control system capable of understanding and translating a wide array of natural language and multimodal inputs into actionable commands for prosthetic devices. This includes the creation of models that can extract and interpret features from both textual and multimodal data, ensuring devices not only follow user commands but also respond intelligently to the environment and user intent, thus marking a significant leap forward in prosthetic technology.

PrePARE: Predictive Proprioception for Agile Failure Event Detection in Robotic Exploration of Extreme Terrains

Jul 30, 2022

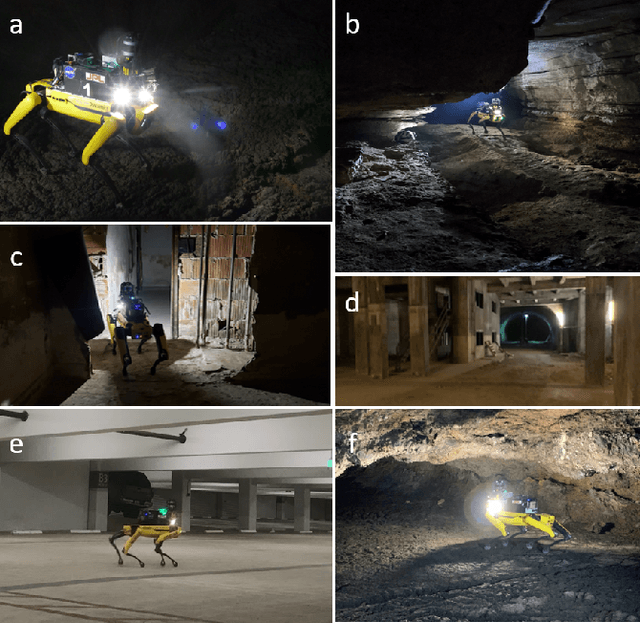

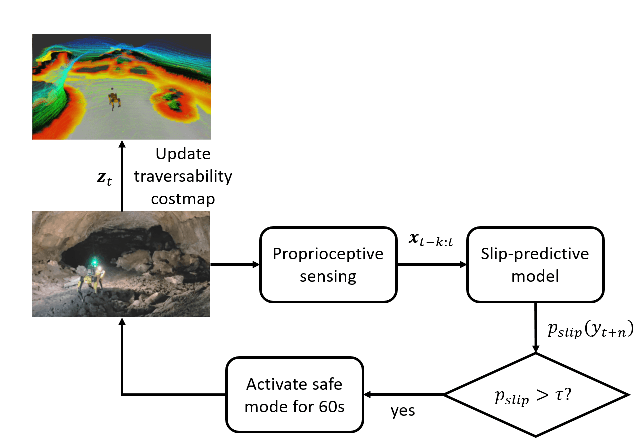

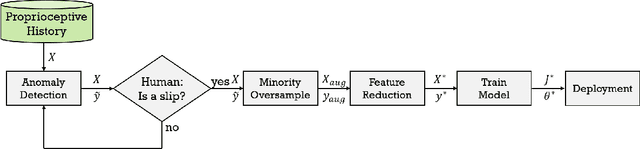

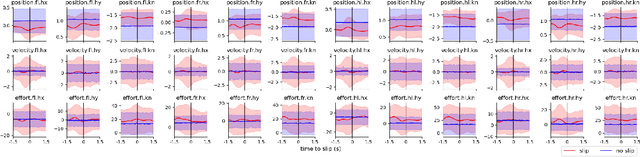

Abstract: Legged robots can traverse a wide variety of terrains, some of which may be challenging for wheeled robots, such as stairs or highly uneven surfaces. However, quadruped robots face stability challenges on slippery surfaces. This can be resolved by switching the robot to more conservative and stable locomotion modes, such as crawl mode (where three feet are in contact with the ground at all times) or amble mode (where one foot touches down at a time), to prevent potential falls. To tackle these challenges, we propose an approach to learn a model from past robot experience for predictive detection of potential failures. Accordingly, we trigger gait switching based only on proprioceptive sensory information. To learn this predictive model, we propose a semi-supervised process for detecting and annotating ground truth slip events in two stages: we first detect abnormal occurrences in the time series sequences of the gait data using an unsupervised anomaly detector, and then the anomalies are verified with expert human knowledge in a replay simulation to assert the event of a slip. These annotated slip events are then used as ground truth examples to train an ensemble decision learner for predicting slip probabilities across terrains for traversability. We analyze our model on data recorded by a legged robot on multiple sites with slippery terrain. We demonstrate that a potential slip event can be predicted up to 720 ms ahead of a potential fall with an average precision greater than 0.95 and an average F-score of 0.82. Finally, we validate our approach in real time by deploying it on a legged robot and switching its gait mode based on slip event detection.
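The two-stage annotation process can be sketched roughly as follows. The abstract does not name the anomaly detector, so a simple z-score threshold stands in for it here. The function names, the threshold, the `verify` callback (standing in for the human expert reviewing a replay), and the sample signal are all assumptions made for illustration.

```python
import statistics


def detect_candidates(signal, threshold=3.0):
    """Stage 1: flag indices more than `threshold` standard deviations from the mean."""
    mean = statistics.fmean(signal)
    std = statistics.pstdev(signal)
    if std == 0:
        return []  # a constant signal has no outliers
    return [i for i, v in enumerate(signal) if abs(v - mean) / std > threshold]


def annotate_slips(signal, verify, threshold=3.0):
    """Stage 2: keep only the candidates that the reviewer confirms as slips."""
    return [i for i in detect_candidates(signal, threshold) if verify(i)]


# Hypothetical proprioceptive channel with two abrupt deviations.
foot_velocity = [0.0] * 40
foot_velocity[5] = 5.0
foot_velocity[12] = -5.0

candidates = detect_candidates(foot_velocity)

# Stand-in for expert review: pretend only the event at index 5 was a real slip.
confirmed = annotate_slips(foot_velocity, verify=lambda i: i == 5)
```

Only the confirmed indices would be used as ground truth labels for the downstream learner. Splitting detection from verification keeps the expensive human step limited to a short list of candidates.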

Learning a Shared Model for Motorized Prosthetic Joints to Predict Ankle-Joint Motion

Nov 14, 2021

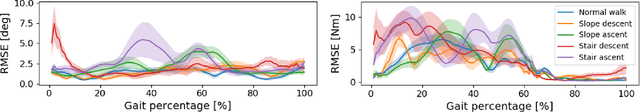

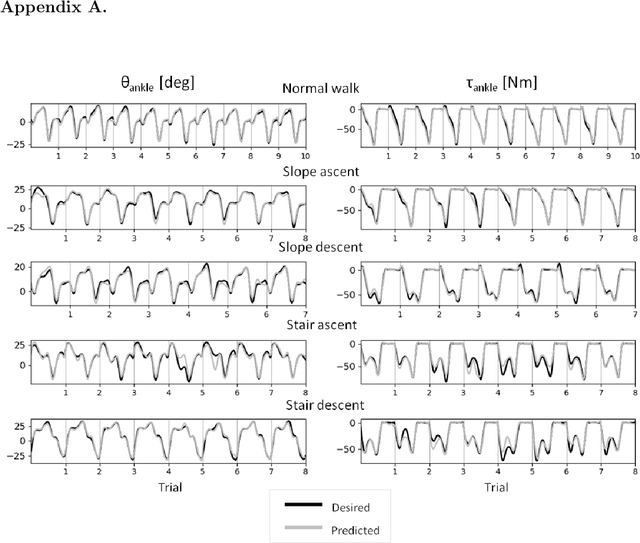

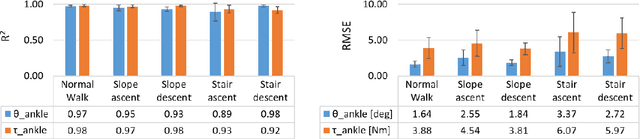

Abstract: Control strategies for active prostheses or orthoses use sensor inputs to recognize the user's locomotive intention and generate corresponding control commands for producing the desired locomotion. In this paper, we propose a learning-based shared model for predicting ankle-joint motion for different locomotion modes like level-ground walking, stair ascent, stair descent, slope ascent, and slope descent without the need to classify between them. Features extracted from hip and knee joint angular motion are used to continuously predict the ankle angles and moments using a feed-forward neural network-based shared model. We show that the shared model is adequate for predicting the ankle angles and moments for different locomotion modes without explicitly classifying between the modes. The proposed strategy shows the potential for devising a high-level controller for an intelligent prosthetic ankle that can adapt to different locomotion modes.
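A minimal sketch of the data flow described above: features from hip and knee angles pass through one feed-forward network that outputs an ankle angle and moment, with no locomotion-mode input. The feature choice (mean and range per joint), the layer sizes, the random untrained weights, and the sample values are assumptions for illustration and are not taken from the paper.

```python
import math
import random


def window_features(hip, knee):
    """Summarize a window of hip and knee angles as mean and range per joint."""
    feats = []
    for series in (hip, knee):
        feats.append(sum(series) / len(series))
        feats.append(max(series) - min(series))
    return feats


def make_layer(n_in, n_out, rng):
    """Random weight matrix with n_out rows of n_in weights (untrained)."""
    return [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_out)]


def forward(layers, x):
    """Feed-forward pass: tanh on hidden layers, linear output layer."""
    for i, layer in enumerate(layers):
        x = [sum(w * v for w, v in zip(row, x)) for row in layer]
        if i < len(layers) - 1:
            x = [math.tanh(v) for v in x]
    return x


rng = random.Random(0)
# 4 input features -> 8 hidden units -> 2 outputs (ankle angle, ankle moment).
network = [make_layer(4, 8, rng), make_layer(8, 2, rng)]

hip_window = [10.0, 12.0, 15.0, 13.0]   # hypothetical hip angles in degrees
knee_window = [5.0, 20.0, 35.0, 25.0]   # hypothetical knee angles in degrees
features = window_features(hip_window, knee_window)
ankle_angle, ankle_moment = forward(network, features)
```

The same `network` is applied whatever the locomotion mode, which is the sense in which the model is shared: no classifier selects a mode-specific predictor before the forward pass.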
