Angel Santamaria-Navarro

An Addendum to NeBula: Towards Extending TEAM CoSTAR's Solution to Larger Scale Environments

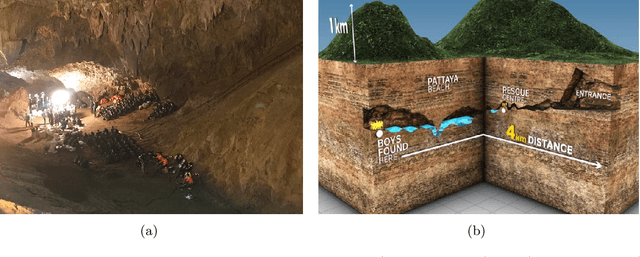

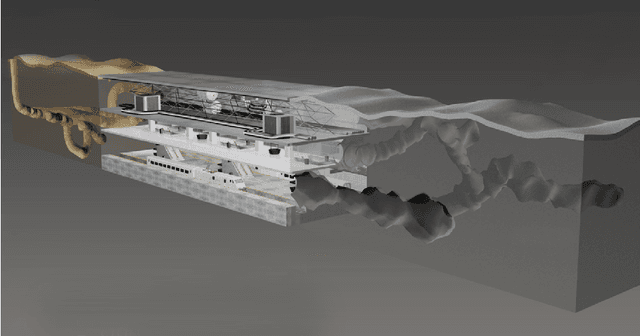

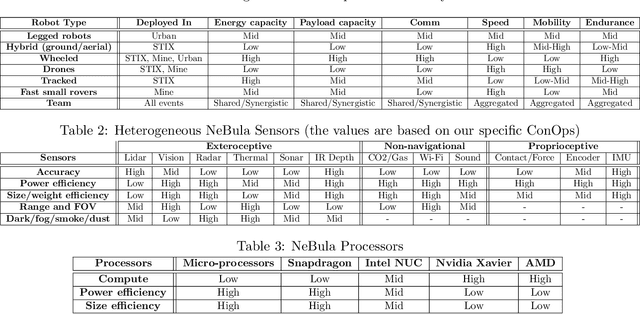

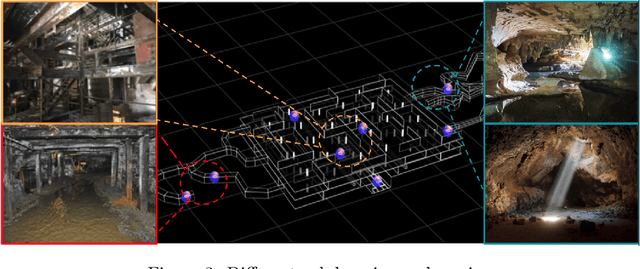

Apr 18, 2025

Abstract:This paper presents an appendix to the original NeBula autonomy solution developed by the TEAM CoSTAR (Collaborative SubTerranean Autonomous Robots), participating in the DARPA Subterranean Challenge. Specifically, this paper presents extensions to NeBula's hardware, software, and algorithmic components that focus on increasing the range and scale of the exploration environment. From the algorithmic perspective, we discuss the following extensions to the original NeBula framework: (i) large-scale geometric and semantic environment mapping; (ii) an adaptive positioning system; (iii) probabilistic traversability analysis and local planning; (iv) large-scale POMDP-based global motion planning and exploration behavior; (v) large-scale networking and decentralized reasoning; (vi) communication-aware mission planning; and (vii) multi-modal ground-aerial exploration solutions. We demonstrate the application and deployment of the presented systems and solutions in various large-scale underground environments, including limestone mine exploration scenarios as well as deployment in the DARPA Subterranean Challenge.

TRIFFID: Autonomous Robotic Aid For Increasing First Responders Efficiency

Feb 13, 2025

Abstract:The increasing complexity of natural disaster incidents demands innovative technological solutions to support first responders in their efforts. This paper introduces the TRIFFID system, a comprehensive technical framework that integrates unmanned ground and aerial vehicles with advanced artificial intelligence functionalities to enhance disaster response capabilities across wildfires, urban floods, and post-earthquake search and rescue missions. By leveraging state-of-the-art autonomous navigation, semantic perception, and human-robot interaction technologies, TRIFFID provides a sophisticated system composed of the following key components: hybrid robotic platform, centralized ground station, custom communication infrastructure, and smartphone application. The defined research and development activities demonstrate how deep neural networks, knowledge graphs, and multimodal information fusion can enable robots to autonomously navigate and analyze disaster environments, reducing personnel risks and accelerating response times. The proposed system enhances emergency response teams by providing advanced mission planning, safety monitoring, and adaptive task execution capabilities. Moreover, it ensures real-time situational awareness and operational support in complex and risky situations, facilitating rapid and precise information collection and coordinated actions.

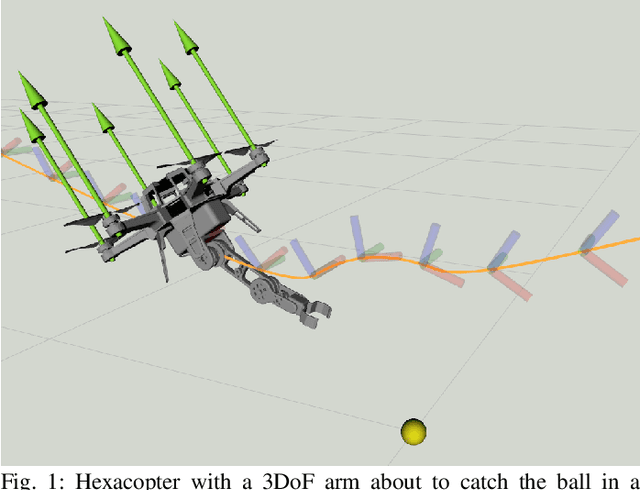

Borinot: an open thrust-torque-controlled robot for research on agile aerial-contact motion

Jul 27, 2023

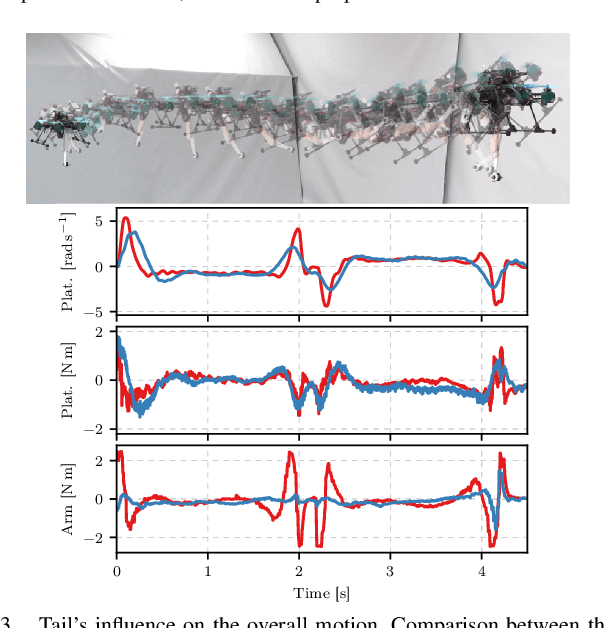

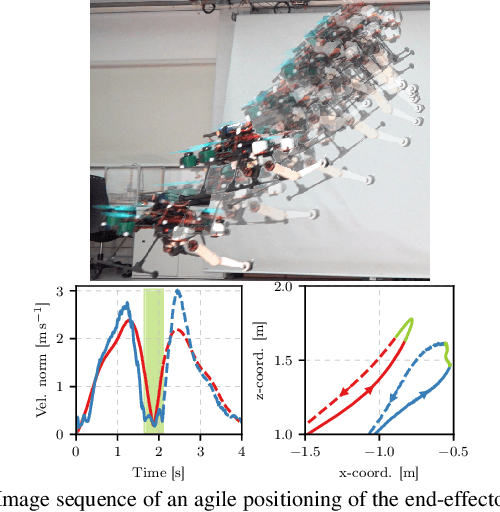

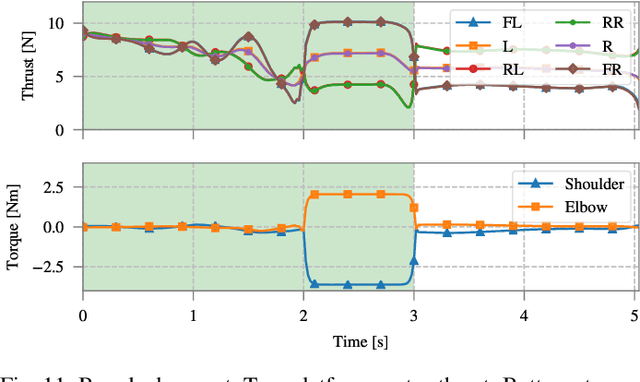

Abstract:This paper introduces Borinot, an open-source aerial robotic platform designed to conduct research on hybrid agile locomotion and manipulation using flight and contacts. This platform features an agile and powerful hexarotor that can be outfitted with torque-actuated limbs of diverse architecture, allowing for whole-body dynamic control. As a result, Borinot can perform agile tasks such as aggressive or acrobatic maneuvers with the participation of the whole-body dynamics. The limbs attached to Borinot can be utilized in various ways; during contact, they can be used as legs to create contact-based locomotion, or as arms to manipulate objects. In free flight, they can be used as tails to contribute to dynamics, mimicking the movements of many animals. This allows for any hybridization of these dynamic modes, making Borinot an ideal open-source platform for research on hybrid aerial-contact agile motion. To demonstrate the key capabilities of Borinot in terms of agility with hybrid motion modes, we have fitted a planar 2DoF limb and implemented a whole-body torque-level model-predictive-control. The result is a capable and adaptable platform that, we believe, opens up new avenues of research in the field of agile robotics. Interesting links: documentation at www.iri.upc.edu/borinot; video at https://youtu.be/Ob7IIVB6P_A.

Borinot: an agile torque-controlled robot for hybrid flying and contact loco-manipulation

May 02, 2023

Abstract:This paper introduces Borinot, an open-source flying robotic platform designed to perform hybrid agile locomotion and manipulation. This platform features a compact and powerful hexarotor that can be outfitted with torque-actuated extremities of diverse architecture, allowing for whole-body dynamic control. As a result, Borinot can perform agile tasks such as aggressive or acrobatic maneuvers with the participation of the whole-body dynamics. The extremities attached to Borinot can be utilized in various ways; during contact, they can be used as legs to create contact-based locomotion, or as arms to manipulate objects. In free flight, they can be used as tails to contribute to dynamics, mimicking the movements of many animals. This allows for any hybridization of these dynamic modes, like the jump-flight of chickens and locusts, making Borinot an ideal open-source platform for research on hybrid aerial-contact agile motion. To demonstrate the key capabilities of Borinot, we have fitted a planar 2DoF arm and implemented whole-body torque-level model-predictive-control. The result is a capable and adaptable platform that, we believe, opens up new avenues of research in the field of agile robotics.

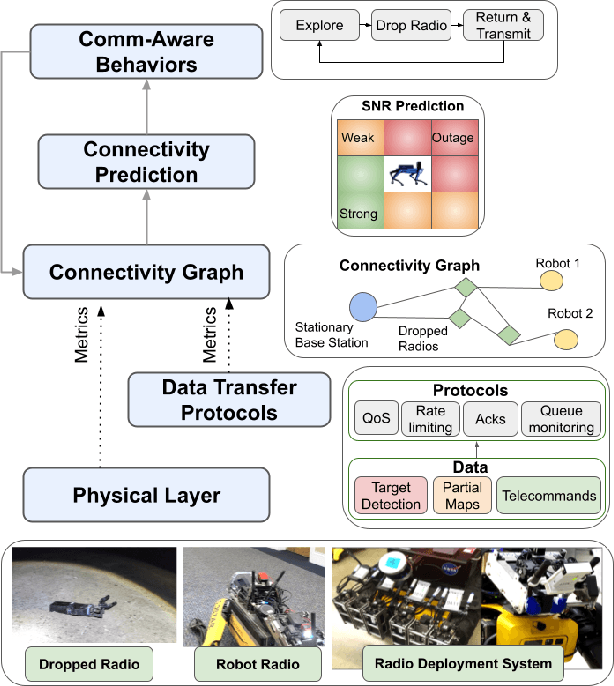

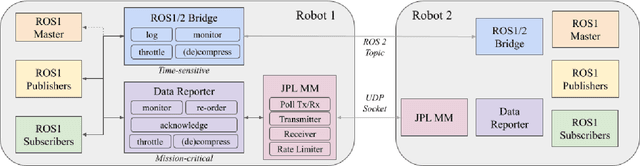

ACHORD: Communication-Aware Multi-Robot Coordination with Intermittent Connectivity

Jun 05, 2022

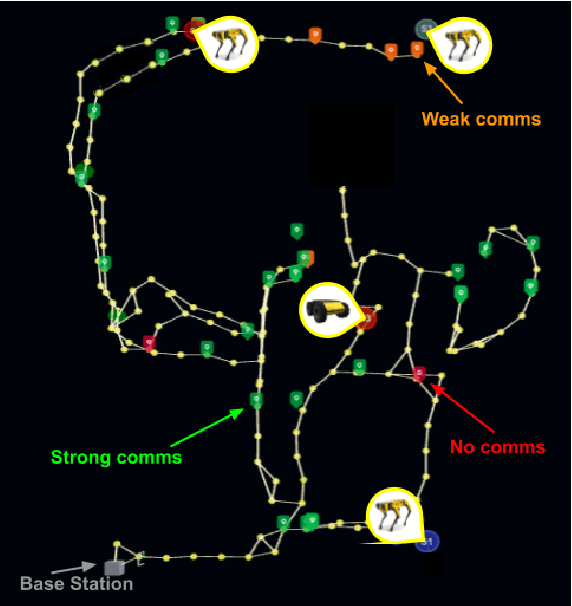

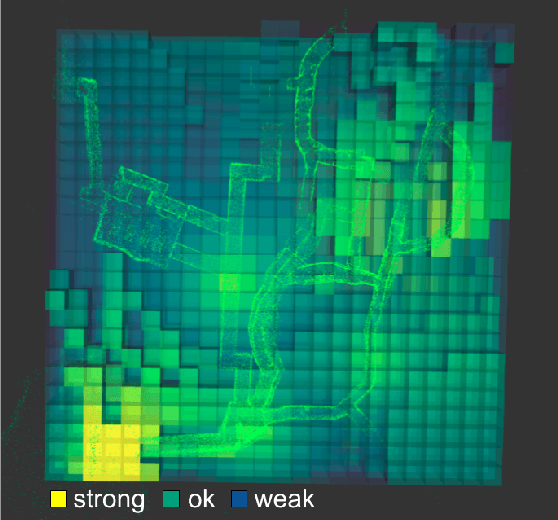

Abstract:Communication is an important capability for multi-robot exploration because (1) inter-robot communication (comms) improves coverage efficiency and (2) robot-to-base comms improves situational awareness. Exploring comms-restricted (e.g., subterranean) environments requires a multi-robot system to tolerate and anticipate intermittent connectivity, and to carefully consider comms requirements, otherwise mission-critical data may be lost. In this paper, we describe and analyze ACHORD (Autonomous & Collaborative High-Bandwidth Operations with Radio Droppables), a multi-layer networking solution which tightly co-designs the network architecture and high-level decision-making for improved comms. ACHORD provides bandwidth prioritization and timely and reliable data transfer despite intermittent connectivity. Furthermore, it exposes low-layer networking metrics to the application layer to enable robots to autonomously monitor, map, and extend the network via droppable radios, as well as restore connectivity to improve collaborative exploration. We evaluate our solution with respect to the comms performance in several challenging underground environments including the DARPA SubT Finals competition environment. Our findings support the use of data stratification and flow control to improve bandwidth-usage.

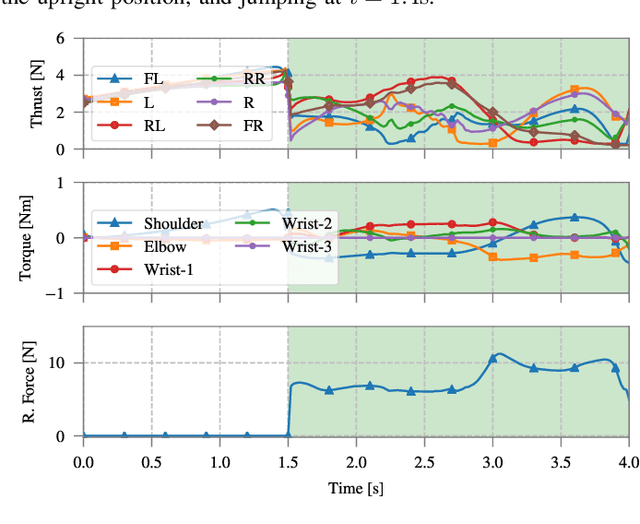

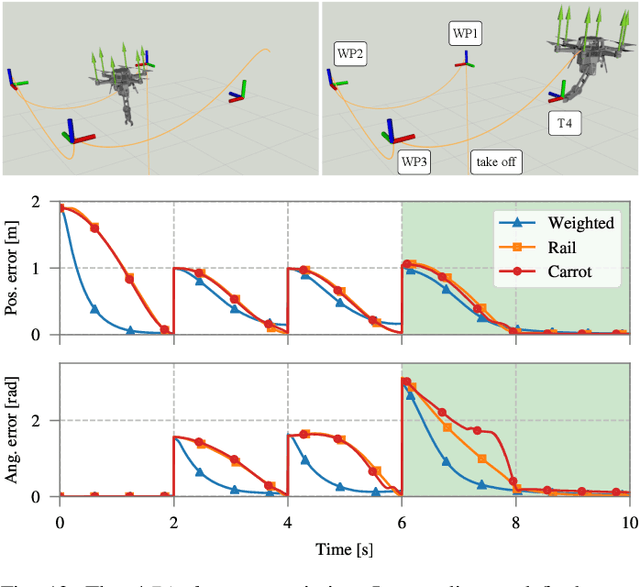

Full-Body Torque-Level Non-linear Model Predictive Control for Aerial Manipulation

Jul 08, 2021

Abstract:Non-linear model predictive control (nMPC) is a powerful approach to control complex robots (such as humanoids, quadrupeds, or unmanned aerial manipulators (UAMs)) as it brings important advantages over other existing techniques. The full-body dynamics, along with the prediction capability of the optimal control problem (OCP) solved at the core of the controller, allow the robot to be actuated in line with its dynamics. This enhances the robot's capabilities and allows it, e.g., to perform intricate maneuvers at high dynamics while optimizing the amount of energy used. Despite the many similarities between humanoids or quadrupeds and UAMs, full-body torque-level nMPC has rarely been applied to UAMs. This paper provides a thorough description of how to use such techniques in the field of aerial manipulation. We give a detailed explanation of the different parts involved in the OCP, from the UAM dynamical model to the residuals in the cost function. We develop and compare three different nMPC controllers: Weighted MPC, Rail MPC, and Carrot MPC, which differ in the structure of their OCPs and in how these are updated at every time step. To validate the proposed framework, we present a wide variety of simulated case studies. First, we evaluate the trajectory generation problem, i.e., optimal control problems solved offline, involving different kinds of motions (e.g., aggressive maneuvers or contact locomotion) for different types of UAMs. Then, we assess the performance of the three nMPC controllers, i.e., closed-loop controllers solved online, through a variety of realistic simulations. For the benefit of the community, we have made available the source code related to this work.

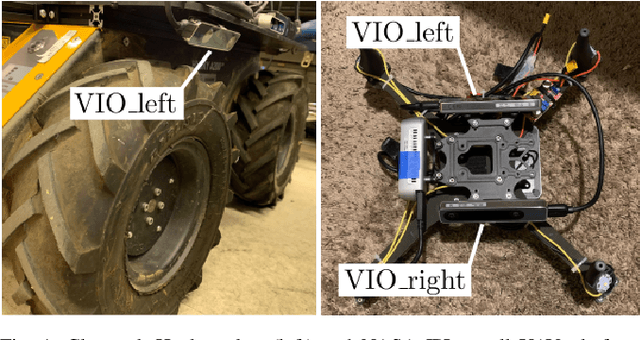

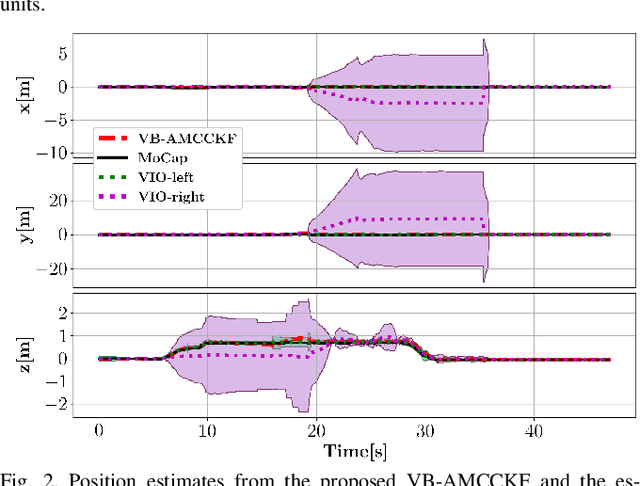

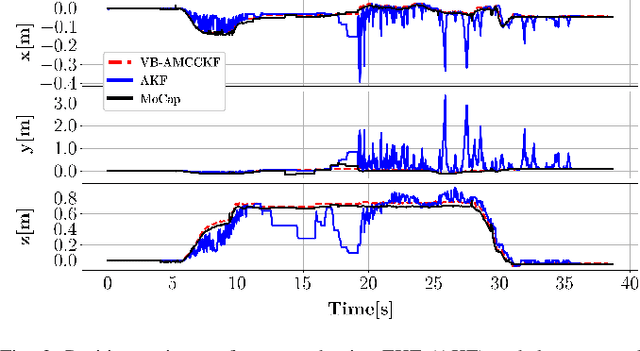

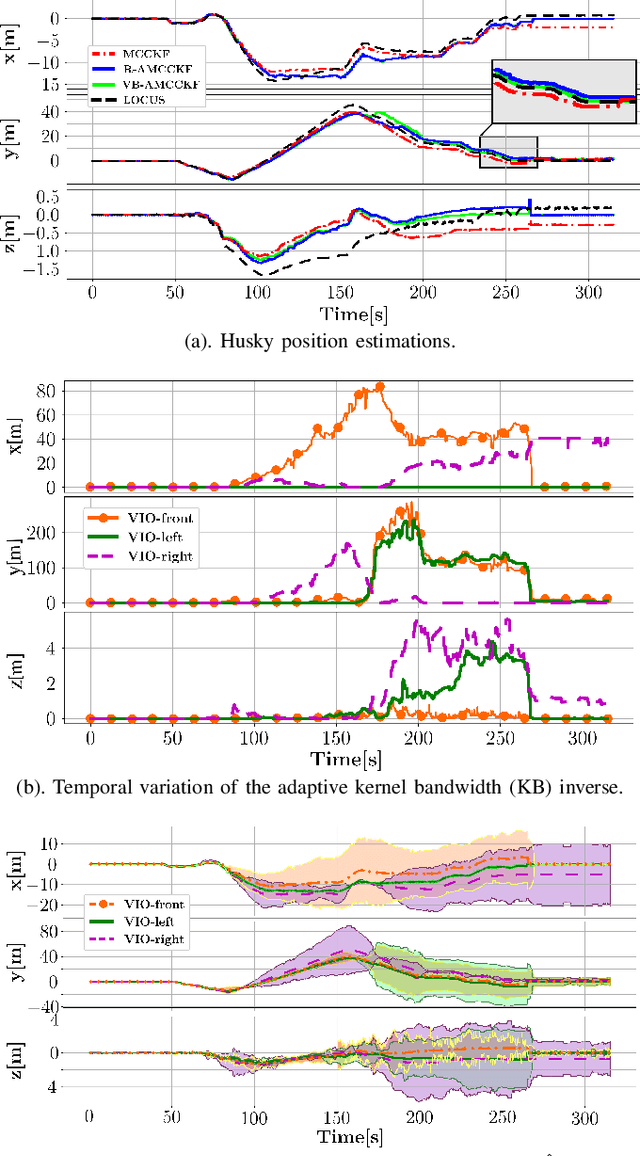

Towards Robust State Estimation by Boosting the Maximum Correntropy Criterion Kalman Filter with Adaptive Behaviors

Mar 29, 2021

Abstract:This work proposes a resilient and adaptive state estimation framework for robots operating in perceptually-degraded environments. The approach, called Adaptive Maximum Correntropy Criterion Kalman Filtering (AMCCKF), is inherently robust to corrupted measurements, such as those containing jumps or general non-Gaussian noise, and is able to modify filter parameters online to improve performance. Two separate methods are developed -- the Variational Bayesian AMCCKF (VB-AMCCKF) and Residual AMCCKF (R-AMCCKF) -- that modify the process and measurement noise models in addition to the bandwidth of the kernel function used in MCCKF based on the quality of measurements received. The two approaches differ in computational complexity and overall performance, both of which are analyzed experimentally. The method is demonstrated in real experiments on both aerial and ground robots and is part of the solution used by the COSTAR team participating at the DARPA Subterranean Challenge.

NeBula: Quest for Robotic Autonomy in Challenging Environments; TEAM CoSTAR at the DARPA Subterranean Challenge

Mar 28, 2021

Abstract:This paper presents and discusses algorithms, hardware, and software architecture developed by the TEAM CoSTAR (Collaborative SubTerranean Autonomous Robots), competing in the DARPA Subterranean Challenge. Specifically, it presents the techniques utilized within the Tunnel (2019) and Urban (2020) competitions, where CoSTAR achieved 2nd and 1st place, respectively. We also discuss CoSTAR's demonstrations in Martian-analog surface and subsurface (lava tubes) exploration. The paper introduces our autonomy solution, referred to as NeBula (Networked Belief-aware Perceptual Autonomy). NeBula is an uncertainty-aware framework that aims at enabling resilient and modular autonomy solutions by performing reasoning and decision making in the belief space (space of probability distributions over the robot and world states). We discuss various components of the NeBula framework, including: (i) geometric and semantic environment mapping; (ii) a multi-modal positioning system; (iii) traversability analysis and local planning; (iv) global motion planning and exploration behavior; (v) risk-aware mission planning; (vi) networking and decentralized reasoning; and (vii) learning-enabled adaptation. We discuss the performance of NeBula on several robot types (e.g. wheeled, legged, flying), in various environments. We discuss the specific results and lessons learned from fielding this solution in the challenging courses of the DARPA Subterranean Challenge competition.

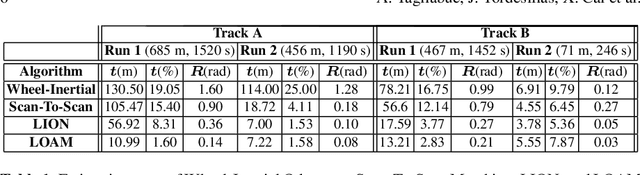

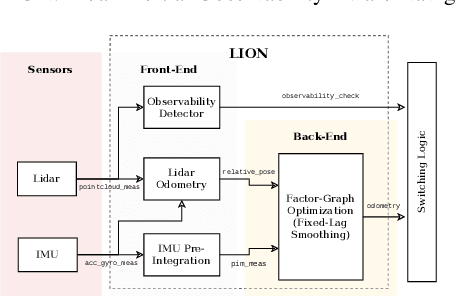

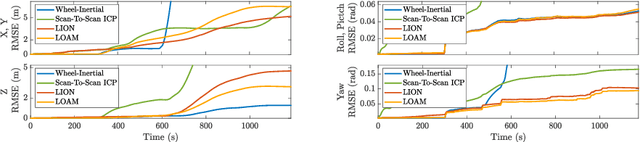

LION: Lidar-Inertial Observability-Aware Navigator for Vision-Denied Environments

Feb 05, 2021

Abstract:State estimation for robots navigating in GPS-denied and perceptually-degraded environments, such as underground tunnels, mines and planetary subsurface voids, remains challenging in robotics. Towards this goal, we present LION (Lidar-Inertial Observability-Aware Navigator), which is part of the state estimation framework developed by the team CoSTAR for the DARPA Subterranean Challenge, where the team achieved second and first places in the Tunnel and Urban circuits in August 2019 and February 2020, respectively. LION provides high-rate odometry estimates by fusing high-frequency inertial data from an IMU and low-rate relative pose estimates from a lidar via a fixed-lag sliding window smoother. LION does not require knowledge of relative positioning between lidar and IMU, as the extrinsic calibration is estimated online. In addition, LION is able to self-assess its performance using an observability metric that evaluates whether the pose estimate is geometrically ill-constrained. Odometry and confidence estimates are used by HeRO, a supervisory algorithm that provides robust estimates by switching between different odometry sources. In this paper we benchmark the performance of LION in perceptually-degraded subterranean environments, demonstrating its high technology readiness level for deployment in the field.

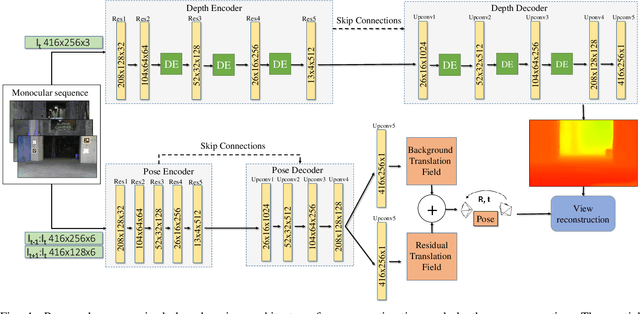

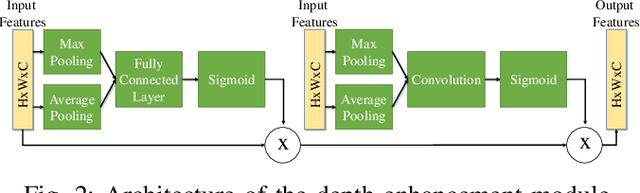

Unsupervised Deep Persistent Monocular Visual Odometry and Depth Estimation in Extreme Environments

Oct 31, 2020

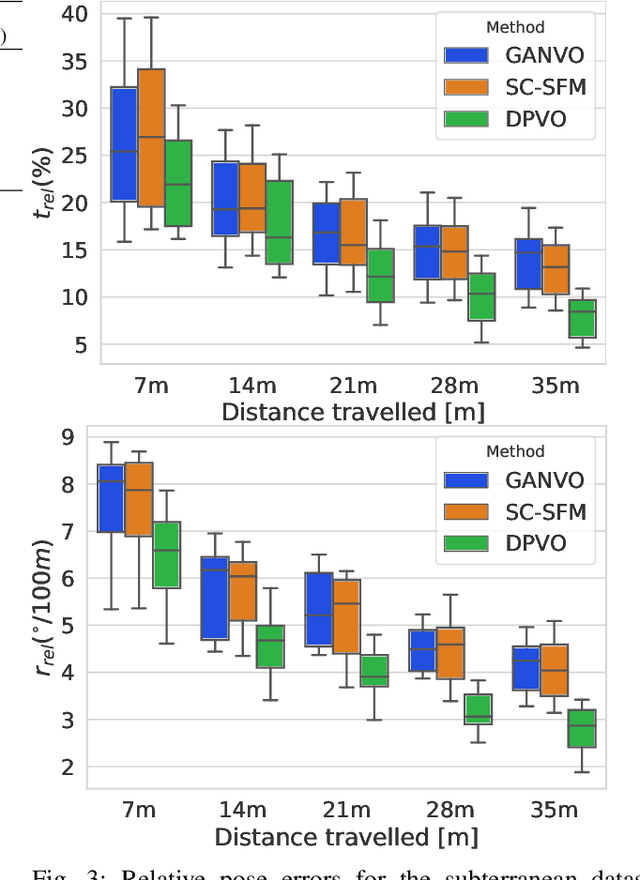

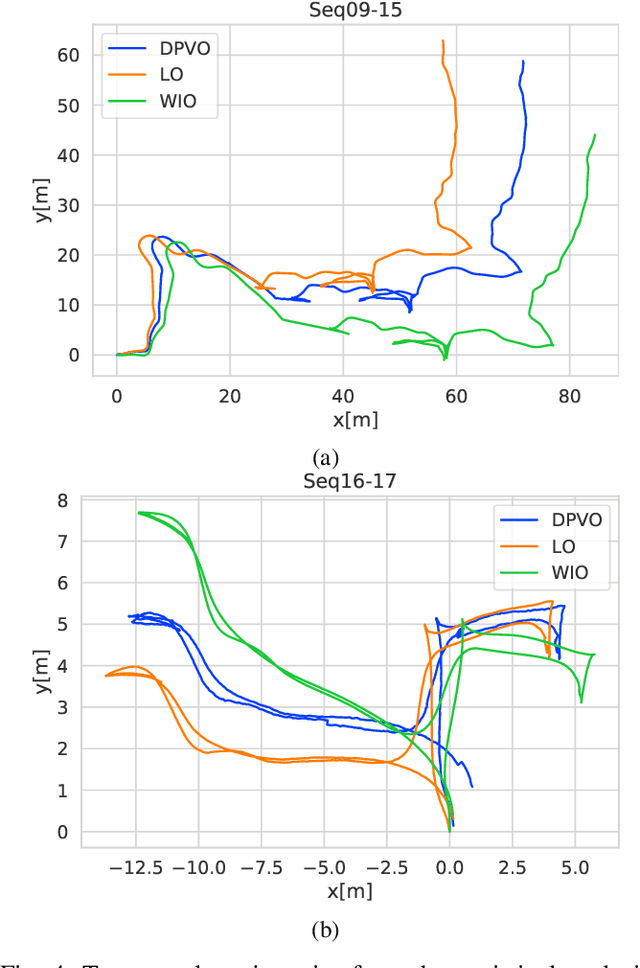

Abstract:In recent years, unsupervised deep learning approaches have received significant attention for estimating depth and visual odometry (VO) from unlabelled monocular image sequences. However, their performance is limited in challenging environments due to perceptual degradation, occlusions and rapid motions. Moreover, the existing unsupervised methods suffer from the lack of scale-consistency constraints across frames, which causes VO estimators to fail to provide persistent trajectories over long sequences. In this study, we propose an unsupervised monocular deep VO framework that predicts the six-degrees-of-freedom camera motion and the depth map of the scene from unlabelled RGB image sequences. We provide detailed quantitative and qualitative evaluations of the proposed framework on a) a challenging dataset collected during the DARPA Subterranean Challenge; and b) the benchmark KITTI and Cityscapes datasets. The proposed approach outperforms both traditional and state-of-the-art unsupervised deep VO methods, providing better results for both pose estimation and depth recovery. The presented approach is part of the solution used by the COSTAR team participating at the DARPA Subterranean Challenge.
