Jeffrey A. Edlund

Robust High-Speed State Estimation for Off-road Navigation using Radar Velocity Factors

Sep 17, 2024

Abstract: Enabling robot autonomy in complex environments for mission-critical applications requires robust state estimation, particularly under conditions where the exteroceptive sensors that navigation depends on can be degraded by environmental challenges, leading to mission failure. It is precisely under such challenges that the potential of FMCW radar sensors is highlighted: as a complementary exteroceptive sensing modality with direct velocity-measuring capabilities. In this work we integrate radial speed measurements from an FMCW radar sensor, using a radial speed factor, to provide linear velocity updates to a sliding-window state estimator for fusion with LiDAR pose and IMU measurements. We demonstrate that this augmentation increases the robustness of the state estimator to challenging environmental conditions and to the negative effects they can have on vulnerable exteroceptive modalities. The proposed method is extensively evaluated in robotic field experiments using an autonomous, full-scale, off-road vehicle operating at high speeds (~12 m/s) in complex desert environments. Furthermore, the robustness of the approach is demonstrated for cases of both simulated and real-world degradation of the LiDAR odometry performance, along with a comparison against state-of-the-art methods for radar-inertial odometry on public datasets.
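The abstract does not spell out the form of the radial speed factor, so the following is only a minimal sketch of the general idea, not the authors' implementation. It assumes a static target, a velocity expressed in the radar frame, and a sign convention in which approaching targets have negative radial speed. The function names and the noise value `sigma` are hypothetical.

```python
import math


def predicted_radial_speed(direction, velocity):
    """Radial speed a static point would show for a sensor moving with `velocity`.

    direction: vector from the sensor to the detected point (any non-zero length).
    velocity:  sensor linear velocity in the sensor frame, in m/s.
    Assumed sign convention: approaching targets have negative radial speed.
    """
    norm = math.sqrt(sum(c * c for c in direction))
    if norm == 0.0:
        raise ValueError("direction must be non-zero")
    unit = [c / norm for c in direction]
    # Project the sensor velocity onto the line of sight and flip the sign.
    return -sum(u * v for u, v in zip(unit, velocity))


def radial_speed_residual(measured, direction, velocity, sigma=0.1):
    """Whitened residual between a measured and a predicted radial speed.

    sigma is an assumed measurement standard deviation in m/s.
    """
    return (measured - predicted_radial_speed(direction, velocity)) / sigma


# A vehicle driving straight at a static point at 12 m/s.
residual = radial_speed_residual(-12.0, [1.0, 0.0, 0.0], [12.0, 0.0, 0.0])
```

In a graph-based or sliding-window estimator, a residual of this kind would be minimized over the velocity state jointly with the LiDAR and IMU terms; each radar detection contributes one scalar constraint along its line of sight.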

ROAMER: Robust Offroad Autonomy using Multimodal State Estimation with Radar Velocity Integration

Jan 30, 2024

Abstract: Reliable offroad autonomy requires low-latency, high-accuracy estimates of pose and velocity that remain viable in environments where operating conditions are sub-optimal for the perception modalities in use. Because state estimation remains a single point of failure in the majority of aspiring autonomous systems, failing to address the environmental degradation that the perception sensors may experience under such operating conditions can be a mission-critical shortcoming. In this work, a method for integrating radar velocity information into a LiDAR-inertial odometry solution is proposed, enabling consistent estimation performance even when LiDAR-inertial odometry is degraded. The proposed method utilizes the direct velocity-measuring capabilities of a Frequency Modulated Continuous Wave (FMCW) radar sensor to enhance the LiDAR-inertial smoother solution onboard the vehicle by integrating the forward velocity measurement into the graph-based smoother. This leads to increased robustness in the overall estimation solution, even in the absence of LiDAR data. The method was validated in hardware experiments conducted onboard an all-terrain vehicle traveling at high speed (~12 m/s) in demanding offroad environments.

ACHORD: Communication-Aware Multi-Robot Coordination with Intermittent Connectivity

Jun 05, 2022

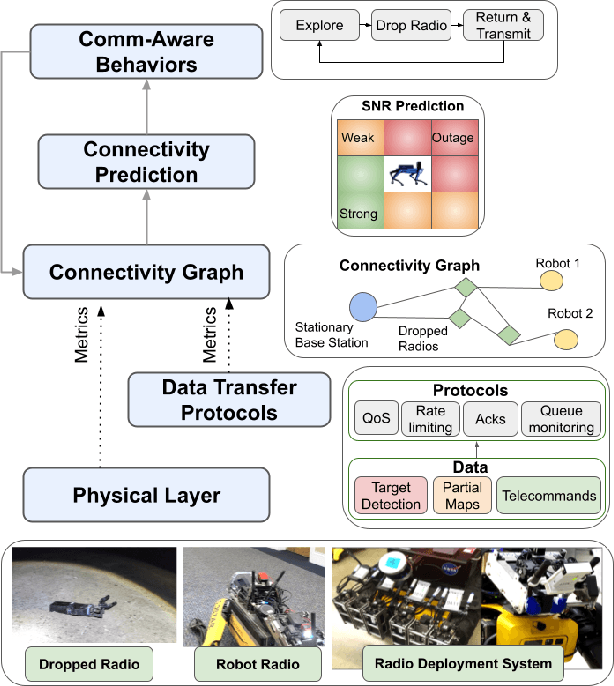

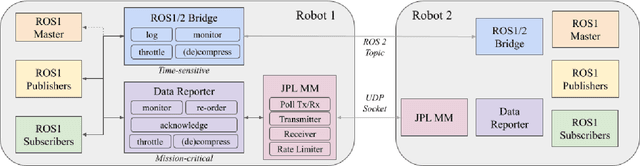

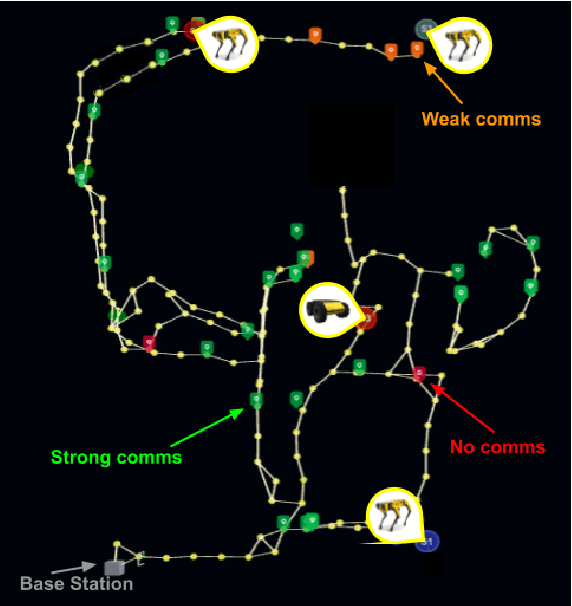

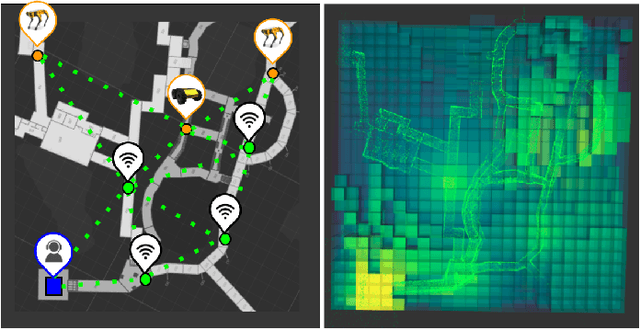

Abstract: Communication is an important capability for multi-robot exploration because (1) inter-robot communication (comms) improves coverage efficiency and (2) robot-to-base comms improves situational awareness. Exploring comms-restricted (e.g., subterranean) environments requires a multi-robot system to tolerate and anticipate intermittent connectivity and to carefully consider comms requirements; otherwise, mission-critical data may be lost. In this paper, we describe and analyze ACHORD (Autonomous & Collaborative High-Bandwidth Operations with Radio Droppables), a multi-layer networking solution that tightly co-designs the network architecture and high-level decision-making for improved comms. ACHORD provides bandwidth prioritization and timely, reliable data transfer despite intermittent connectivity. Furthermore, it exposes low-layer networking metrics to the application layer to enable robots to autonomously monitor, map, and extend the network via droppable radios, as well as restore connectivity to improve collaborative exploration. We evaluate our solution with respect to comms performance in several challenging underground environments, including the DARPA SubT Finals competition environment. Our findings support the use of data stratification and flow control to improve bandwidth usage.
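The abstract does not describe ACHORD's mechanisms in detail. As a loose illustration of what bandwidth prioritization under intermittent connectivity can look like, the sketch below buffers messages while a link is down and, when a limited byte budget becomes available, sends the most important ones first. The class name, the priority scheme (lower number is more urgent), and the byte-budget model are all assumptions made for this example, not part of ACHORD.

```python
import heapq
import itertools


class PrioritySendQueue:
    """Hypothetical outbound buffer: lower priority number means more urgent."""

    def __init__(self):
        self._heap = []
        # Monotonic counter keeps first-in, first-out order within one priority.
        self._counter = itertools.count()

    def push(self, priority, payload):
        """Buffer a bytes payload, for example while the link is down."""
        heapq.heappush(self._heap, (priority, next(self._counter), payload))

    def drain(self, budget_bytes):
        """Pop messages in priority order until the next one exceeds the budget."""
        sent = []
        while self._heap and len(self._heap[0][2]) <= budget_bytes:
            _, _, payload = heapq.heappop(self._heap)
            budget_bytes -= len(payload)
            sent.append(payload)
        return sent


queue = PrioritySendQueue()
queue.push(2, b"map-chunk")
queue.push(0, b"estop")
queue.push(1, b"pose")
first_window = queue.drain(10)   # short connectivity window
second_window = queue.drain(9)   # a later window
```

This sketch uses strict priority, so a large urgent message blocks smaller, less urgent ones until it fits; a real system would need to weigh that against starvation and fragmentation.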

PropEM-L: Radio Propagation Environment Modeling and Learning for Communication-Aware Multi-Robot Exploration

May 03, 2022

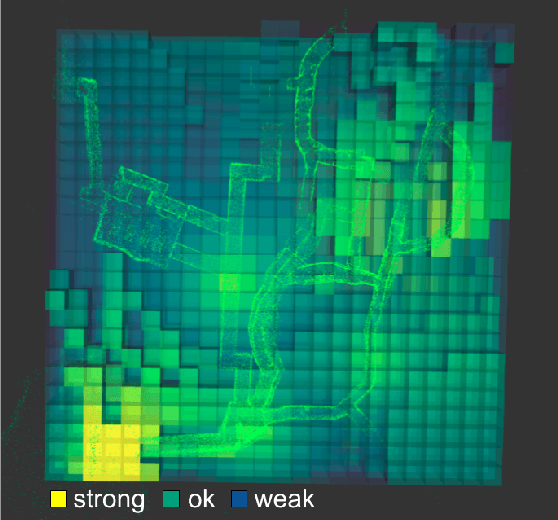

Abstract: Multi-robot exploration of complex, unknown environments benefits from the collaboration and cooperation offered by inter-robot communication. Accurate radio signal strength prediction enables communication-aware exploration. Models which ignore the effect of the environment on signal propagation or rely on a priori maps suffer in unknown, communication-restricted (e.g. subterranean) environments. In this work, we present Propagation Environment Modeling and Learning (PropEM-L), a framework which leverages real-time sensor-derived 3D geometric representations of an environment to extract information about line of sight between radios and attenuating walls/obstacles in order to accurately predict received signal strength (RSS). Our data-driven approach combines the strengths of well-known models of signal propagation phenomena (e.g. shadowing, reflection, diffraction) and machine learning, and can adapt online to new environments. We demonstrate the performance of PropEM-L on a six-robot team in a communication-restricted environment with subway-like, mine-like, and cave-like characteristics, constructed for the 2021 DARPA Subterranean Challenge. Our findings indicate that PropEM-L can improve signal strength prediction accuracy by up to 44% over a log-distance path loss model.
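For context, the log-distance path loss baseline mentioned in the abstract predicts received signal strength from distance alone. The sketch below shows the standard textbook form of that model; the function name and the default reference power, reference distance, and path loss exponent are illustrative assumptions, not values from the paper.

```python
import math


def log_distance_rss(distance_m, rss_ref_dbm=-40.0, ref_distance_m=1.0,
                     path_loss_exponent=2.0):
    """Predicted RSS in dBm under the log-distance path loss model.

    RSS(d) = RSS(d0) - 10 * n * log10(d / d0)
    The defaults are placeholder values chosen for illustration only.
    """
    if distance_m <= 0.0 or ref_distance_m <= 0.0:
        raise ValueError("distances must be positive")
    ratio = distance_m / ref_distance_m
    return rss_ref_dbm - 10.0 * path_loss_exponent * math.log10(ratio)


# Free-space-like exponent (n = 2) at 10 m from the transmitter.
rss_open = log_distance_rss(10.0)
# A more lossy environment, modeled here with n = 3 at 100 m.
rss_lossy = log_distance_rss(100.0, path_loss_exponent=3.0)
```

Because the model depends only on distance, it cannot distinguish a clear corridor from a path blocked by walls, which is the kind of environmental information the abstract says PropEM-L extracts from 3D geometry.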

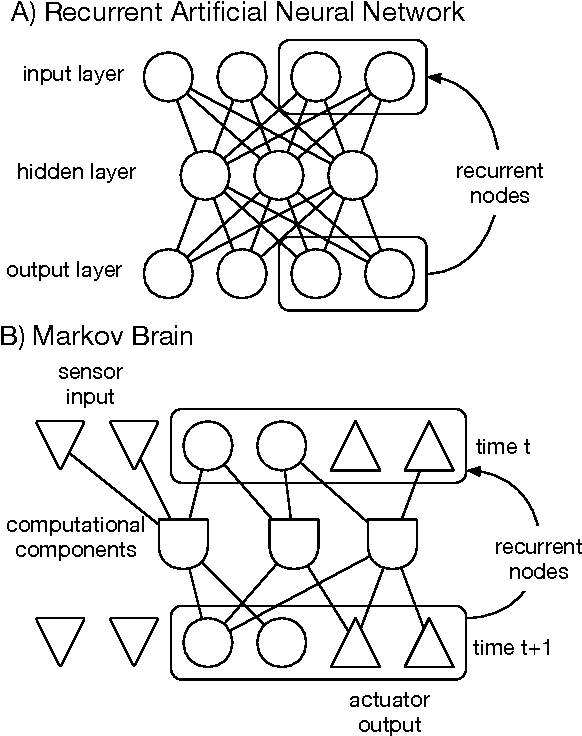

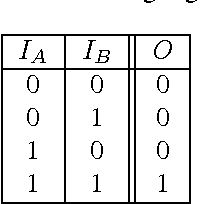

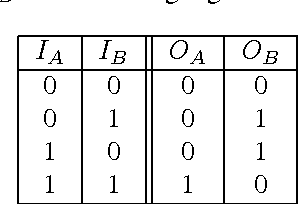

Markov Brains: A Technical Introduction

Sep 17, 2017

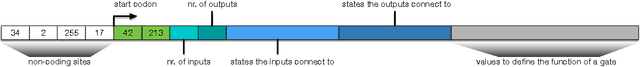

Abstract: Markov Brains are a class of evolvable artificial neural networks (ANN). They differ from conventional ANNs in many aspects, but the key difference is that instead of a layered architecture, with each node performing the same function, Markov Brains are networks built from individual computational components. These computational components interact with each other, receive inputs from sensors, and control motor outputs. The function of the computational components, their connections to each other, as well as connections to sensors and motors are all subject to evolutionary optimization. Here we describe in detail how a Markov Brain works, what techniques can be used to study them, and how they can be evolved.
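To make the idea of a network built from individual computational components concrete, here is a minimal sketch of one brain update. It assumes binary nodes, deterministic gates, and OR-combination when several gates write to the same node; these are simplifying assumptions for illustration, and the names `Gate` and `step` are hypothetical rather than taken from the paper.

```python
from typing import Callable, List, Sequence, Tuple

# A gate reads some nodes, applies its own logic, and writes to some nodes.
Gate = Tuple[Sequence[int], Sequence[int],
             Callable[[Tuple[int, ...]], Tuple[int, ...]]]


def step(state: List[int], gates: Sequence[Gate]) -> List[int]:
    """Compute the next node states from the current ones.

    All gates read the current state and write into a fresh next state.
    Outputs that land on the same node are combined with OR (an assumption).
    """
    next_state = [0] * len(state)
    for inputs, outputs, logic in gates:
        result = logic(tuple(state[i] for i in inputs))
        for node, bit in zip(outputs, result):
            next_state[node] |= bit
    return next_state


# Nodes 0 and 1 are sensors, node 2 is hidden, node 3 is a motor.
gates: List[Gate] = [
    ([0, 1], [2], lambda bits: (bits[0] & bits[1],)),  # AND of both sensors
    ([2], [3], lambda bits: (bits[0],)),               # copy hidden to motor
]
after_one = step([1, 1, 0, 0], gates)
after_two = step(after_one, gates)
```

In an evolutionary setting, the wiring (the input and output node lists) and each gate's logic would be encoded in a genome and mutated, so both structure and function are subject to selection.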
