Curtis Padgett

COARSE: Collaborative Pseudo-Labeling with Coarse Real Labels for Off-Road Semantic Segmentation

Mar 05, 2025

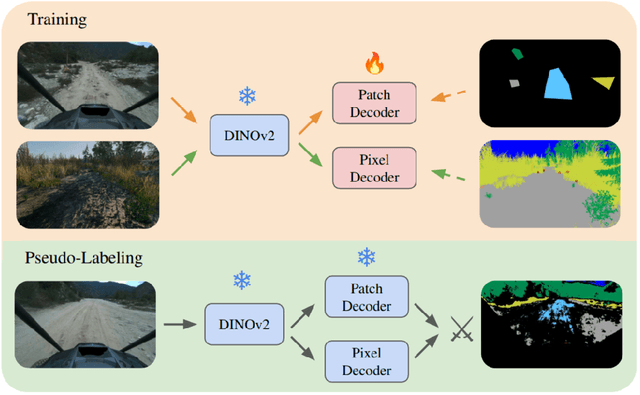

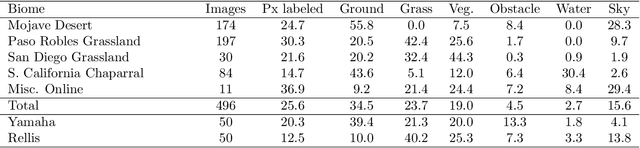

Abstract:Autonomous off-road navigation faces challenges due to diverse, unstructured environments, requiring robust perception with both geometric and semantic understanding. However, scarce densely labeled semantic data limits generalization across domains. Simulated data helps, but introduces domain adaptation issues. We propose COARSE, a semi-supervised domain adaptation framework for off-road semantic segmentation, leveraging sparse, coarse in-domain labels and densely labeled out-of-domain data. Using pretrained vision transformers, we bridge domain gaps with complementary pixel-level and patch-level decoders, enhanced by a collaborative pseudo-labeling strategy on unlabeled data. Evaluations on RUGD and Rellis-3D datasets show significant improvements of 9.7\% and 8.4\% respectively, versus only using coarse data. Tests on real-world off-road vehicle data in a multi-biome setting further demonstrate COARSE's applicability.

Few-shot Semantic Learning for Robust Multi-Biome 3D Semantic Mapping in Off-Road Environments

Nov 10, 2024

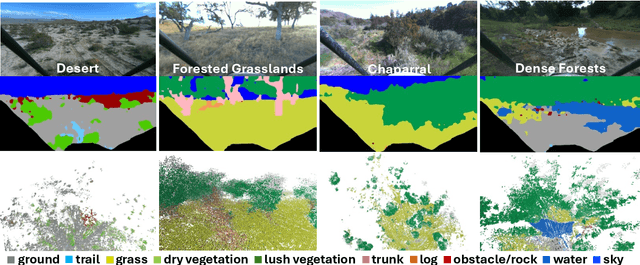

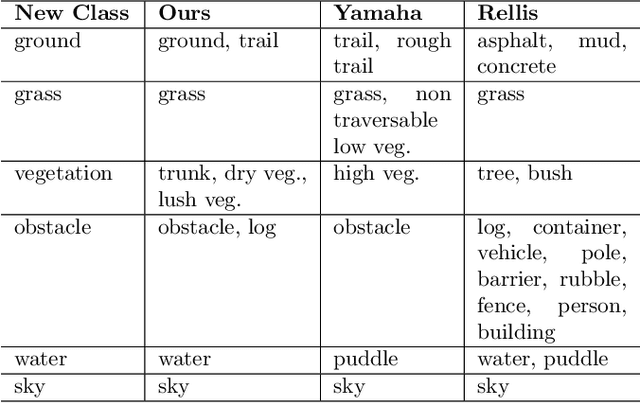

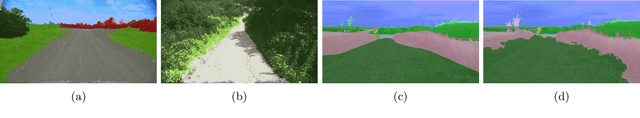

Abstract:Off-road environments pose significant perception challenges for high-speed autonomous navigation due to unstructured terrain, degraded sensing conditions, and domain-shifts among biomes. Learning semantic information across these conditions and biomes can be challenging when a large amount of ground truth data is required. In this work, we propose an approach that leverages a pre-trained Vision Transformer (ViT) with fine-tuning on a small (<500 images), sparse and coarsely labeled (<30% pixels) multi-biome dataset to predict 2D semantic segmentation classes. These classes are fused over time via a novel range-based metric and aggregated into a 3D semantic voxel map. We demonstrate zero-shot out-of-biome 2D semantic segmentation on the Yamaha (52.9 mIoU) and Rellis (55.5 mIoU) datasets along with few-shot coarse sparse labeling with existing data for improved segmentation performance on Yamaha (66.6 mIoU) and Rellis (67.2 mIoU). We further illustrate the feasibility of using a voxel map with a range-based semantic fusion approach to handle common off-road hazards like pop-up hazards, overhangs, and water features.

Robust High-Speed State Estimation for Off-road Navigation using Radar Velocity Factors

Sep 17, 2024Abstract:Enabling robot autonomy in complex environments for mission critical application requires robust state estimation. Particularly under conditions where the exteroceptive sensors, which the navigation depends on, can be degraded by environmental challenges thus, leading to mission failure. It is precisely in such challenges where the potential for FMCW radar sensors is highlighted: as a complementary exteroceptive sensing modality with direct velocity measuring capabilities. In this work we integrate radial speed measurements from a FMCW radar sensor, using a radial speed factor, to provide linear velocity updates into a sliding-window state estimator for fusion with LiDAR pose and IMU measurements. We demonstrate that this augmentation increases the robustness of the state estimator to challenging conditions present in the environment and the negative effects they can pose to vulnerable exteroceptive modalities. The proposed method is extensively evaluated using robotic field experiments conducted using an autonomous, full-scale, off-road vehicle operating at high-speeds (~12 m/s) in complex desert environments. Furthermore, the robustness of the approach is demonstrated for cases of both simulated and real-world degradation of the LiDAR odometry performance along with comparison against state-of-the-art methods for radar-inertial odometry on public datasets.

RoadRunner - Learning Traversability Estimation for Autonomous Off-road Driving

Mar 03, 2024

Abstract:Autonomous navigation at high speeds in off-road environments necessitates robots to comprehensively understand their surroundings using onboard sensing only. The extreme conditions posed by the off-road setting can cause degraded camera image quality due to poor lighting and motion blur, as well as limited sparse geometric information available from LiDAR sensing when driving at high speeds. In this work, we present RoadRunner, a novel framework capable of predicting terrain traversability and an elevation map directly from camera and LiDAR sensor inputs. RoadRunner enables reliable autonomous navigation, by fusing sensory information, handling of uncertainty, and generation of contextually informed predictions about the geometry and traversability of the terrain while operating at low latency. In contrast to existing methods relying on classifying handcrafted semantic classes and using heuristics to predict traversability costs, our method is trained end-to-end in a self-supervised fashion. The RoadRunner network architecture builds upon popular sensor fusion network architectures from the autonomous driving domain, which embed LiDAR and camera information into a common Bird's Eye View perspective. Training is enabled by utilizing an existing traversability estimation stack to generate training data in hindsight in a scalable manner from real-world off-road driving datasets. Furthermore, RoadRunner improves the system latency by a factor of roughly 4, from 500 ms to 140 ms, while improving the accuracy for traversability costs and elevation map predictions. We demonstrate the effectiveness of RoadRunner in enabling safe and reliable off-road navigation at high speeds in multiple real-world driving scenarios through unstructured desert environments.

Pixel to Elevation: Learning to Predict Elevation Maps at Long Range using Images for Autonomous Offroad Navigation

Jan 30, 2024Abstract:Understanding terrain topology at long-range is crucial for the success of off-road robotic missions, especially when navigating at high-speeds. LiDAR sensors, which are currently heavily relied upon for geometric mapping, provide sparse measurements when mapping at greater distances. To address this challenge, we present a novel learning-based approach capable of predicting terrain elevation maps at long-range using only onboard egocentric images in real-time. Our proposed method is comprised of three main elements. First, a transformer-based encoder is introduced that learns cross-view associations between the egocentric views and prior bird-eye-view elevation map predictions. Second, an orientation-aware positional encoding is proposed to incorporate the 3D vehicle pose information over complex unstructured terrain with multi-view visual image features. Lastly, a history-augmented learn-able map embedding is proposed to achieve better temporal consistency between elevation map predictions to facilitate the downstream navigational tasks. We experimentally validate the applicability of our proposed approach for autonomous offroad robotic navigation in complex and unstructured terrain using real-world offroad driving data. Furthermore, the method is qualitatively and quantitatively compared against the current state-of-the-art methods. Extensive field experiments demonstrate that our method surpasses baseline models in accurately predicting terrain elevation while effectively capturing the overall terrain topology at long-ranges. Finally, ablation studies are conducted to highlight and understand the effect of key components of the proposed approach and validate their suitability to improve offroad robotic navigation capabilities.

ROAMER: Robust Offroad Autonomy using Multimodal State Estimation with Radar Velocity Integration

Jan 30, 2024Abstract:Reliable offroad autonomy requires low-latency, high-accuracy state estimates of pose as well as velocity, which remain viable throughout environments with sub-optimal operating conditions for the utilized perception modalities. As state estimation remains a single point of failure system in the majority of aspiring autonomous systems, failing to address the environmental degradation the perception sensors could potentially experience given the operating conditions, can be a mission-critical shortcoming. In this work, a method for integration of radar velocity information in a LiDAR-inertial odometry solution is proposed, enabling consistent estimation performance even with degraded LiDAR-inertial odometry. The proposed method utilizes the direct velocity-measuring capabilities of an Frequency Modulated Continuous Wave (FMCW) radar sensor to enhance the LiDAR-inertial smoother solution onboard the vehicle through integration of the forward velocity measurement into the graph-based smoother. This leads to increased robustness in the overall estimation solution, even in the absence of LiDAR data. This method was validated by hardware experiments conducted onboard an all-terrain vehicle traveling at high speed, ~12 m/s, in demanding offroad environments.

Rover Relocalization for Mars Sample Return by Virtual Template Synthesis and Matching

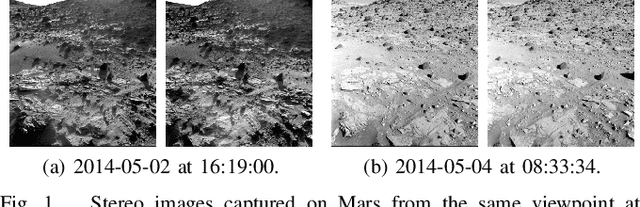

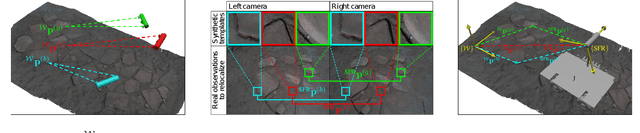

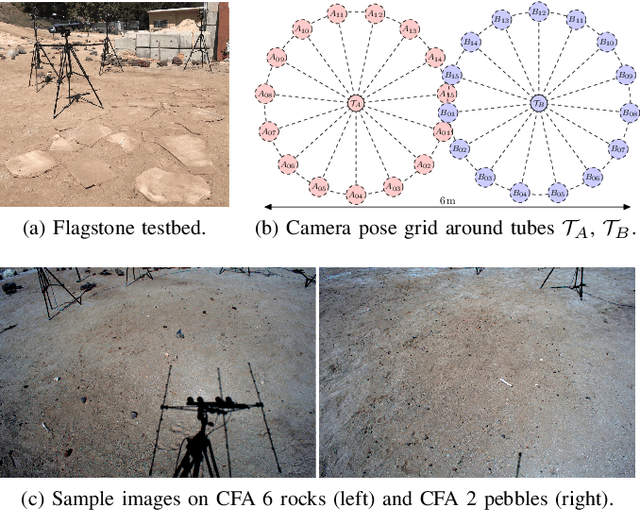

Mar 05, 2021

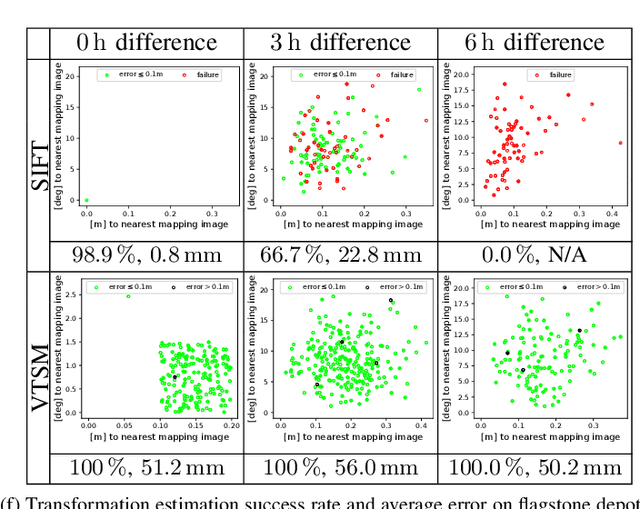

Abstract:We consider the problem of rover relocalization in the context of the notional Mars Sample Return campaign. In this campaign, a rover (R1) needs to be capable of autonomously navigating and localizing itself within an area of approximately 50 x 50 m using reference images collected years earlier by another rover (R0). We propose a visual localizer that exhibits robustness to the relatively barren terrain that we expect to find in relevant areas, and to large lighting and viewpoint differences between R0 and R1. The localizer synthesizes partial renderings of a mesh built from reference R0 images and matches those to R1 images. We evaluate our method on a dataset totaling 2160 images covering the range of expected environmental conditions (terrain, lighting, approach angle). Experimental results show the effectiveness of our approach. This work informs the Mars Sample Return campaign on the choice of a site where Perseverance (R0) will place a set of sample tubes for future retrieval by another rover (R1).

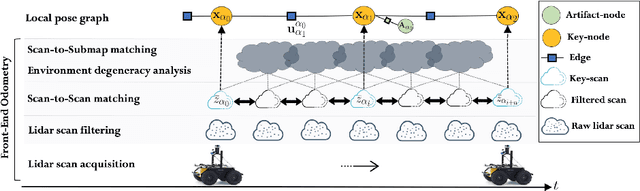

DARE-SLAM: Degeneracy-Aware and Resilient Loop Closing in Perceptually-Degraded Environments

Feb 09, 2021

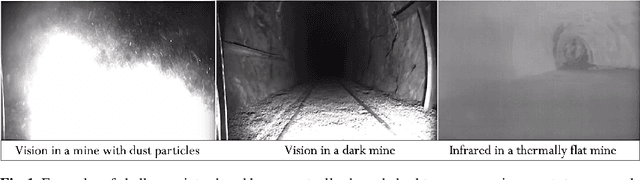

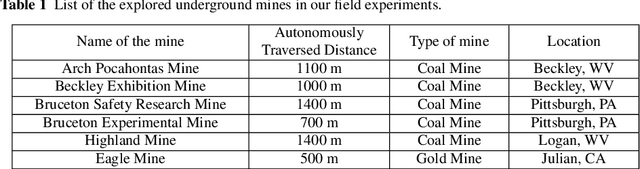

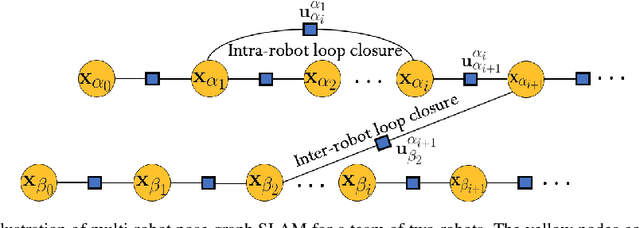

Abstract:Enabling fully autonomous robots capable of navigating and exploring large-scale, unknown and complex environments has been at the core of robotics research for several decades. A key requirement in autonomous exploration is building accurate and consistent maps of the unknown environment that can be used for reliable navigation. Loop closure detection, the ability to assert that a robot has returned to a previously visited location, is crucial for consistent mapping as it reduces the drift caused by error accumulation in the estimated robot trajectory. Moreover, in multi-robot systems, loop closures enable merging local maps obtained by a team of robots into a consistent global map of the environment. In this paper, we present a degeneracy-aware and drift-resilient loop closing method to improve place recognition and resolve 3D location ambiguities for simultaneous localization and mapping (SLAM) in GPS-denied, large-scale and perceptually-degraded environments. More specifically, we focus on SLAM in subterranean environments (e.g., lava tubes, caves, and mines) that represent examples of complex and ambiguous environments where current methods have inadequate performance.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge