Larissa Albantakis

Dissociating Artificial Intelligence from Artificial Consciousness

Dec 05, 2024Abstract:Developments in machine learning and computing power suggest that artificial general intelligence is within reach. This raises the question of artificial consciousness: if a computer were to be functionally equivalent to a human, being able to do all we do, would it experience sights, sounds, and thoughts, as we do when we are conscious? Answering this question in a principled manner can only be done on the basis of a theory of consciousness that is grounded in phenomenology and that states the necessary and sufficient conditions for any system, evolved or engineered, to support subjective experience. Here we employ Integrated Information Theory (IIT), which provides principled tools to determine whether a system is conscious, to what degree, and the content of its experience. We consider pairs of systems constituted of simple Boolean units, one of which -- a basic stored-program computer -- simulates the other with full functional equivalence. By applying the principles of IIT, we demonstrate that (i) two systems can be functionally equivalent without being phenomenally equivalent, and (ii) that this conclusion is not dependent on the simulated system's function. We further demonstrate that, according to IIT, it is possible for a digital computer to simulate our behavior, possibly even by simulating the neurons in our brain, without replicating our experience. This contrasts sharply with computational functionalism, the thesis that performing computations of the right kind is necessary and sufficient for consciousness.

What we are is more than what we do

Jan 21, 2021Abstract:If we take the subjective character of consciousness seriously, consciousness becomes a matter of "being" rather than "doing". Because "doing" can be dissociated from "being", functional criteria alone are insufficient to decide whether a system possesses the necessary requirements for being a physical substrate of consciousness. The dissociation between "being" and "doing" is most salient in artificial general intelligence, which may soon replicate any human capacity: computers can perform complex functions (in the limit resembling human behavior) in the absence of consciousness. Complex behavior becomes meaningless if it is not performed by a conscious being.

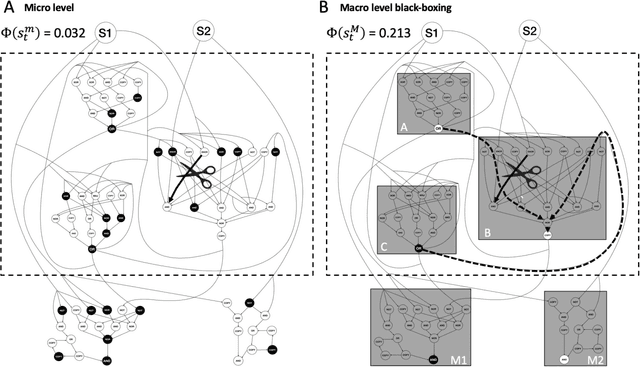

A macro agent and its actions

Mar 31, 2020

Abstract:In science, macro level descriptions of the causal interactions within complex, dynamical systems are typically deemed convenient, but ultimately reducible to a complete causal account of the underlying micro constituents. Yet, such a reductionist perspective is hard to square with several issues related to autonomy and agency: (1) agents require (causal) borders that separate them from the environment, (2) at least in a biological context, agents are associated with macroscopic systems, and (3) agents are supposed to act upon their environment. Integrated information theory (IIT) (Oizumi et al., 2014) offers a quantitative account of causation based on a set of causal principles, including notions such as causal specificity, composition, and irreducibility, that challenges the reductionist perspective in multiple ways. First, the IIT formalism provides a complete account of a system's causal structure, including irreducible higher-order mechanisms constituted of multiple system elements. Second, a system's amount of integrated information ($\Phi$) measures the causal constraints a system exerts onto itself and can peak at a macro level of description (Hoel et al., 2016; Marshall et al., 2018). Finally, the causal principles of IIT can also be employed to identify and quantify the actual causes of events ("what caused what"), such as an agent's actions (Albantakis et al., 2019). Here, we demonstrate this framework by example of a simulated agent, equipped with a small neural network, that forms a maximum of $\Phi$ at a macro scale.

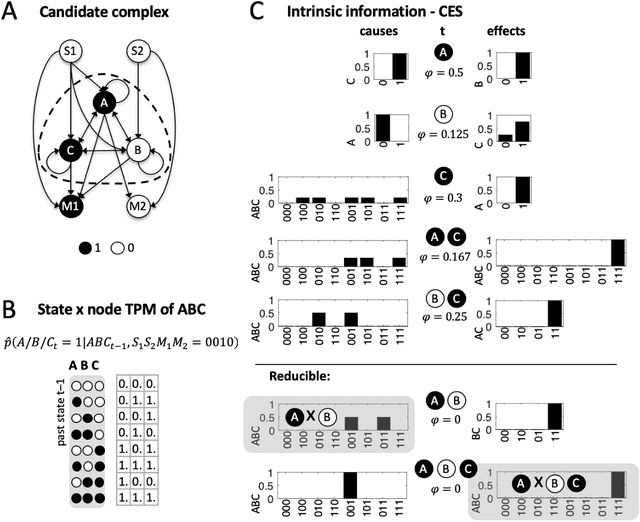

PyPhi: A toolbox for integrated information theory

Jun 27, 2018

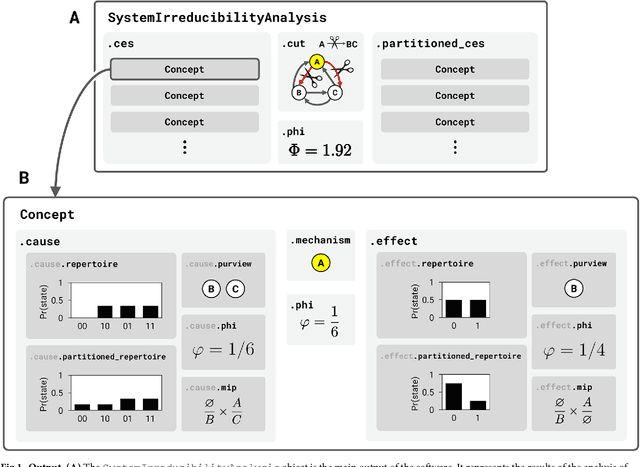

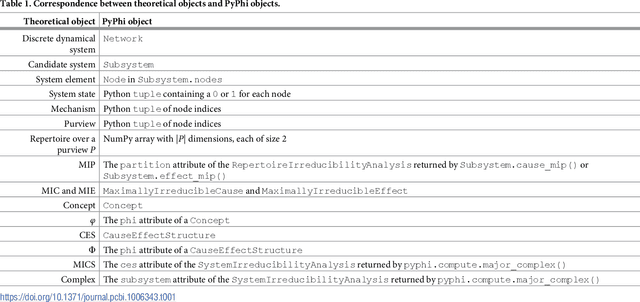

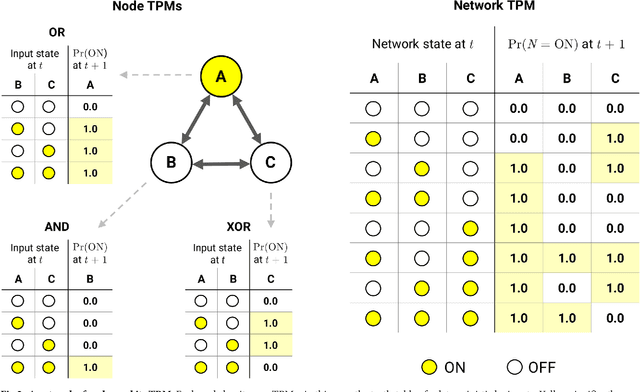

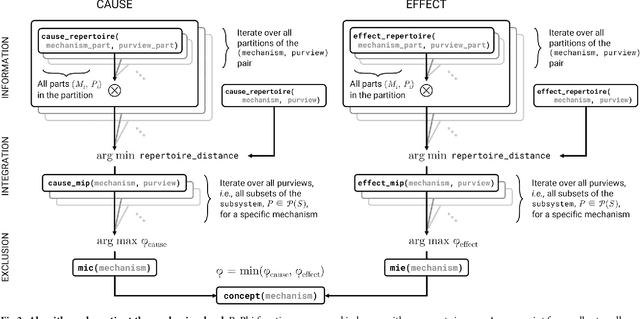

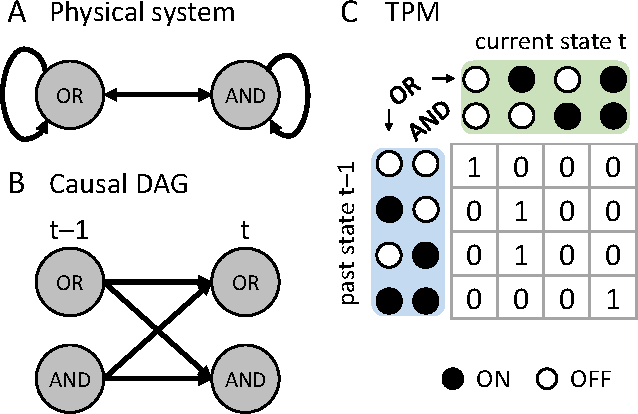

Abstract:Integrated information theory provides a mathematical framework to fully characterize the cause-effect structure of a physical system. Here, we introduce PyPhi, a Python software package that implements this framework for causal analysis and unfolds the full cause-effect structure of discrete dynamical systems of binary elements. The software allows users to easily study these structures, serves as an up-to-date reference implementation of the formalisms of integrated information theory, and has been applied in research on complexity, emergence, and certain biological questions. We first provide an overview of the main algorithm and demonstrate PyPhi's functionality in the course of analyzing an example system, and then describe details of the algorithm's design and implementation. PyPhi can be installed with Python's package manager via the command 'pip install pyphi' on Linux and macOS systems equipped with Python 3.4 or higher. PyPhi is open-source and licensed under the GPLv3; the source code is hosted on GitHub at https://github.com/wmayner/pyphi . Comprehensive and continually-updated documentation is available at https://pyphi.readthedocs.io/ . The pyphi-users mailing list can be joined at https://groups.google.com/forum/#!forum/pyphi-users . A web-based graphical interface to the software is available at http://integratedinformationtheory.org/calculate.html .

* 22 pages, 4 figures, 6 pages of appendices. Supporting information "S1 Calculating Phi" can be found in the ancillary files

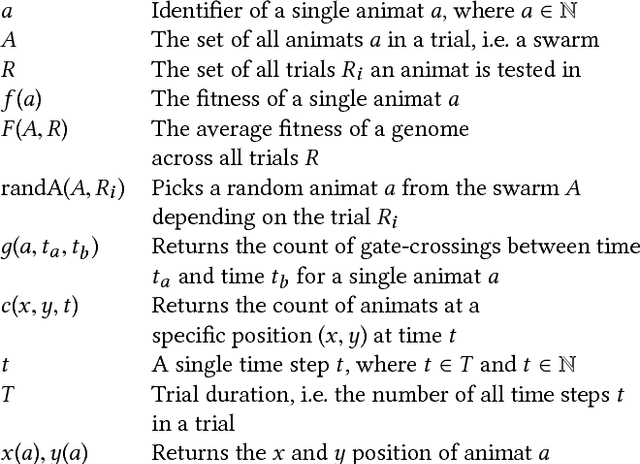

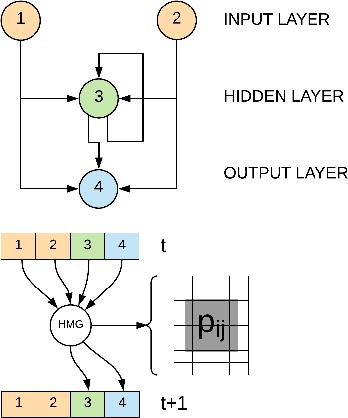

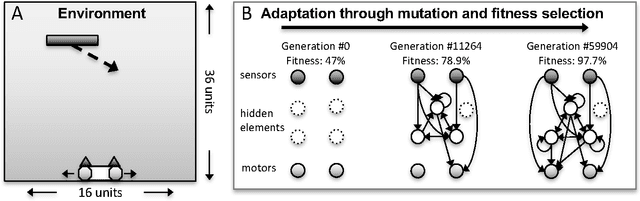

How swarm size during evolution impacts the behavior, generalizability, and brain complexity of animats performing a spatial navigation task

Apr 24, 2018

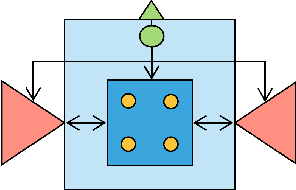

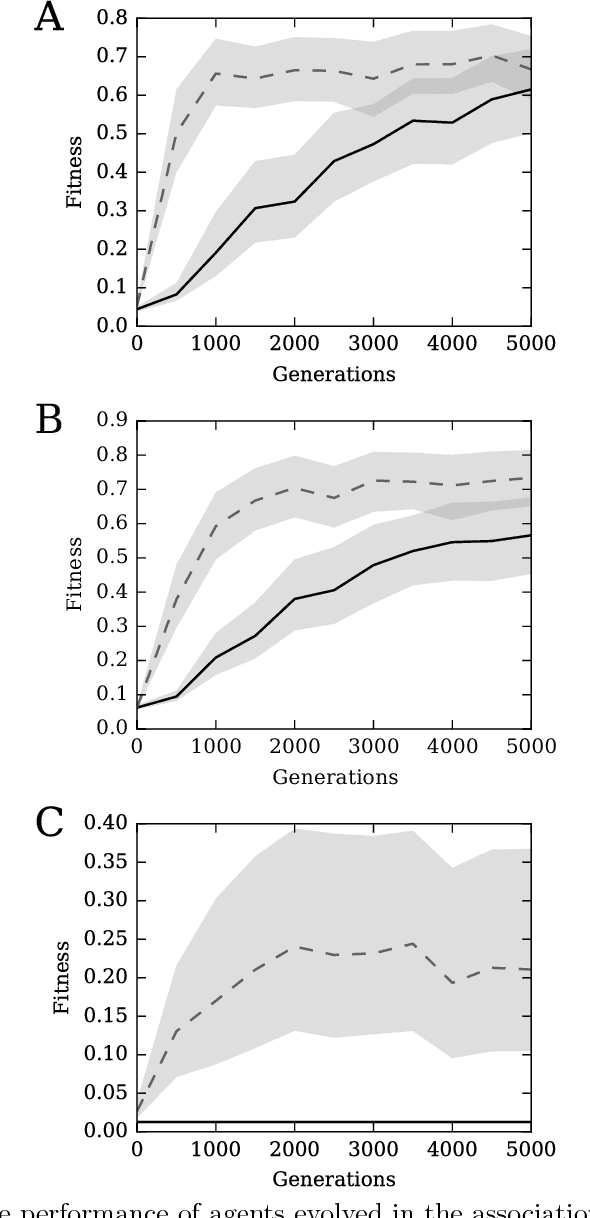

Abstract:While it is relatively easy to imitate and evolve natural swarm behavior in simulations, less is known about the social characteristics of simulated, evolved swarms, such as the optimal (evolutionary) group size, why individuals in a swarm perform certain actions, and how behavior would change in swarms of different sizes. To address these questions, we used a genetic algorithm to evolve animats equipped with Markov Brains in a spatial navigation task that facilitates swarm behavior. The animats' goal was to frequently cross between two rooms without colliding with other animats. Animats were evolved in swarms of various sizes. We then evaluated the task performance and social behavior of the final generation from each evolution when placed with swarms of different sizes in order to evaluate their generalizability across conditions. According to our experiments, we find that swarm size during evolution matters: animats evolved in a balanced swarm developed more flexible behavior, higher fitness across conditions, and, in addition, higher brain complexity.

The Role of Conditional Independence in the Evolution of Intelligent Systems

Jan 16, 2018

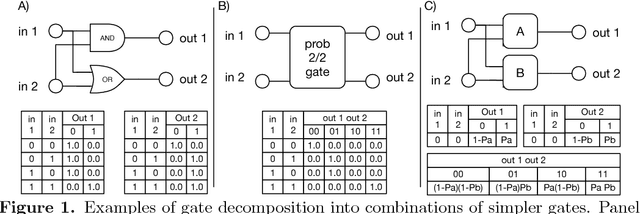

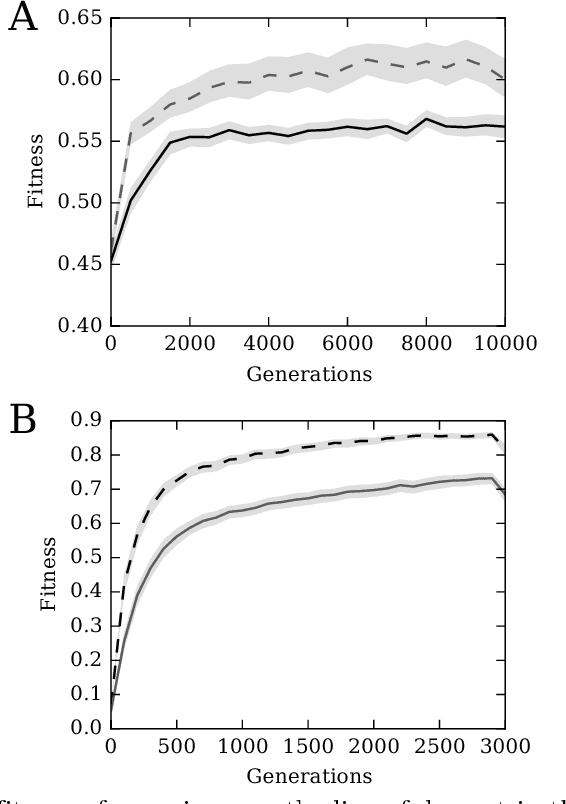

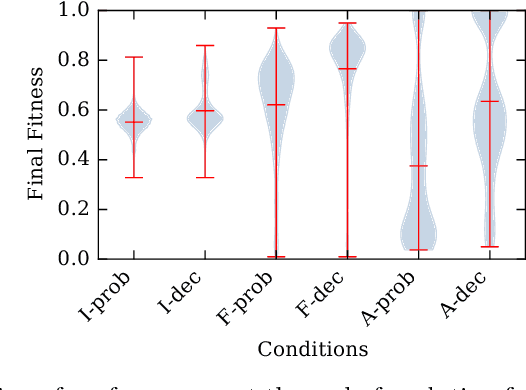

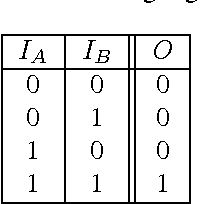

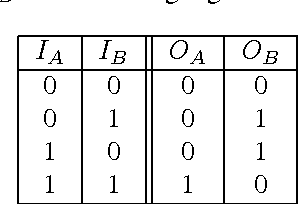

Abstract:Systems are typically made from simple components regardless of their complexity. While the function of each part is easily understood, higher order functions are emergent properties and are notoriously difficult to explain. In networked systems, both digital and biological, each component receives inputs, performs a simple computation, and creates an output. When these components have multiple outputs, we intuitively assume that the outputs are causally dependent on the inputs but are themselves independent of each other given the state of their shared input. However, this intuition can be violated for components with probabilistic logic, as these typically cannot be decomposed into separate logic gates with one output each. This violation of conditional independence on the past system state is equivalent to instantaneous interaction --- the idea is that some information between the outputs is not coming from the inputs and thus must have been created instantaneously. Here we compare evolved artificial neural systems with and without instantaneous interaction across several task environments. We show that systems without instantaneous interactions evolve faster, to higher final levels of performance, and require fewer logic components to create a densely connected cognitive machinery.

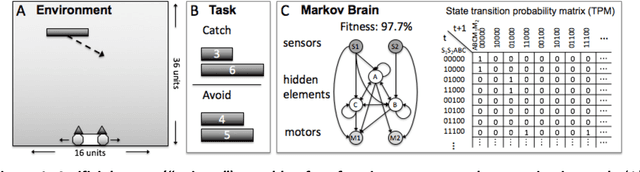

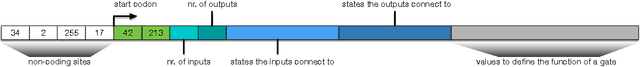

Markov Brains: A Technical Introduction

Sep 17, 2017

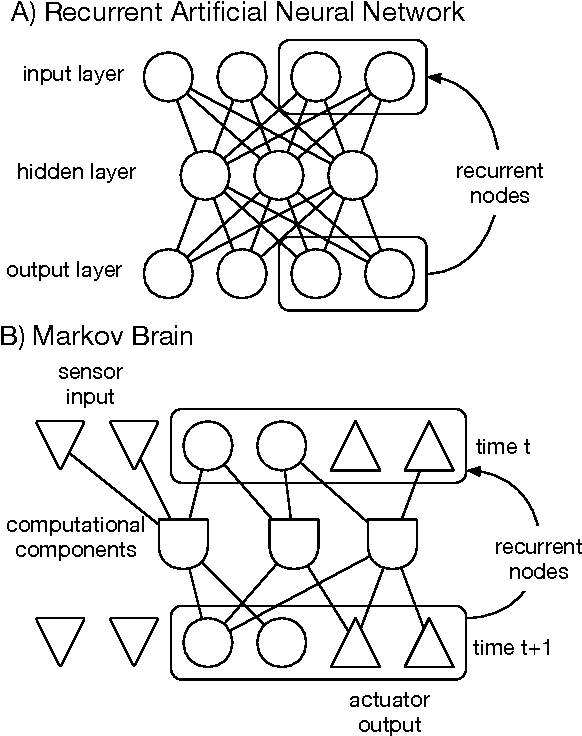

Abstract:Markov Brains are a class of evolvable artificial neural networks (ANN). They differ from conventional ANNs in many aspects, but the key difference is that instead of a layered architecture, with each node performing the same function, Markov Brains are networks built from individual computational components. These computational components interact with each other, receive inputs from sensors, and control motor outputs. The function of the computational components, their connections to each other, as well as connections to sensors and motors are all subject to evolutionary optimization. Here we describe in detail how a Markov Brain works, what techniques can be used to study them, and how they can be evolved.

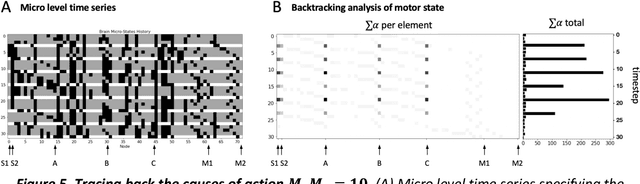

What caused what? An irreducible account of actual causation

Aug 22, 2017

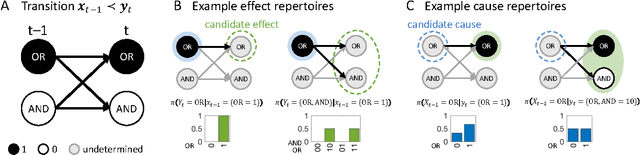

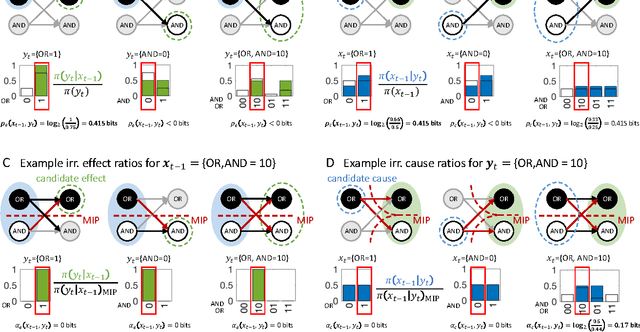

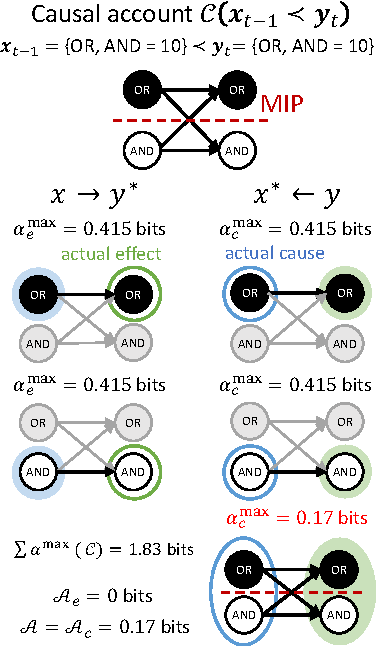

Abstract:Actual causation is concerned with the question "what caused what?". Consider a transition between two subsequent observations within a system of elements. Even under perfect knowledge of the system, a straightforward answer to this question may not be available. Counterfactual accounts of actual causation based on graphical models, paired with system interventions, have demonstrated initial success in addressing specific problem cases. We present a formal account of actual causation, applicable to discrete dynamical systems of interacting elements, that considers all counterfactual states of a state transition from t-1 to t. Within such a transition, causal links are considered from two complementary points of view: we can ask if any occurrence at time t has an actual cause at t-1, but also if any occurrence at time t-1 has an actual effect at t. We address the problem of identifying such actual causes and actual effects in a principled manner by starting from a set of basic requirements for causation (existence, composition, information, integration, and exclusion). We present a formal framework to implement these requirements based on system manipulations and partitions. This framework is used to provide a complete causal account of the transition by identifying and quantifying the strength of all actual causes and effects linking two occurrences. Finally, we examine several exemplary cases and paradoxes of causation and show that they can be illuminated by the proposed framework for quantifying actual causation.

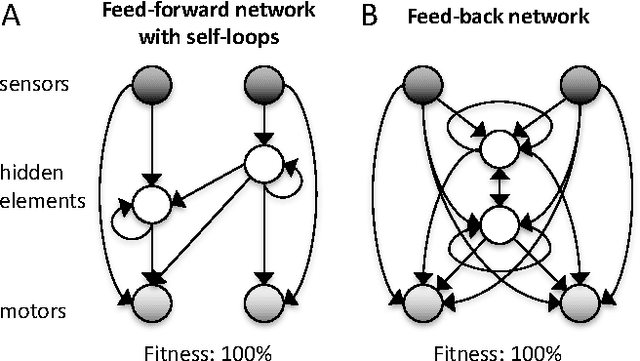

A Tale of Two Animats: What does it take to have goals?

May 30, 2017

Abstract:What does it take for a system, biological or not, to have goals? Here, this question is approached in the context of in silico artificial evolution. By examining the informational and causal properties of artificial organisms ('animats') controlled by small, adaptive neural networks (Markov Brains), this essay discusses necessary requirements for intrinsic information, autonomy, and meaning. The focus lies on comparing two types of Markov Brains that evolved in the same simple environment: one with purely feedforward connections between its elements, the other with an integrated set of elements that causally constrain each other. While both types of brains 'process' information about their environment and are equally fit, only the integrated one forms a causally autonomous entity above a background of external influences. This suggests that to assess whether goals are meaningful for a system itself, it is important to understand what the system is, rather than what it does.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge