A macro agent and its actions

Mar 31, 2020

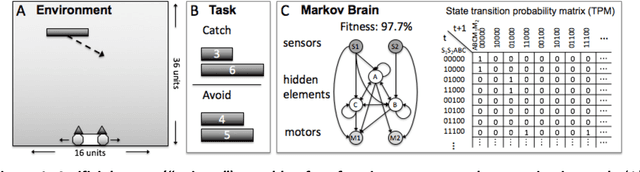

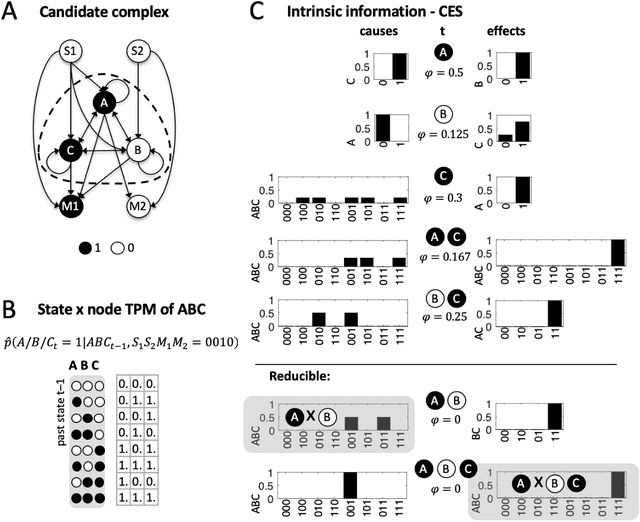

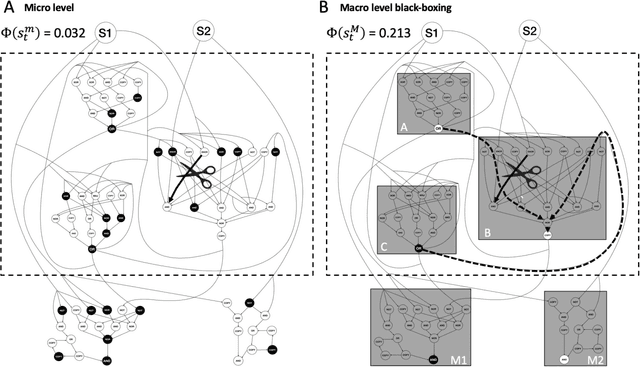

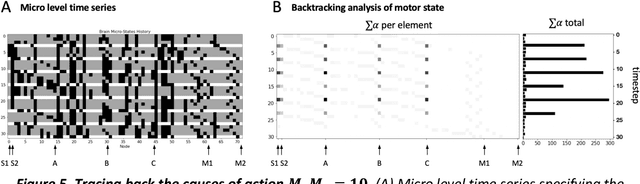

In science, macro-level descriptions of the causal interactions within complex dynamical systems are typically deemed convenient but ultimately reducible to a complete causal account of the underlying micro constituents. Yet such a reductionist perspective is hard to square with several issues related to autonomy and agency: (1) agents require (causal) borders that separate them from the environment, (2) at least in a biological context, agents are associated with macroscopic systems, and (3) agents are supposed to act upon their environment. Integrated information theory (IIT) (Oizumi et al., 2014) offers a quantitative account of causation based on a set of causal principles, including causal specificity, composition, and irreducibility. This account challenges the reductionist perspective in multiple ways. First, the IIT formalism provides a complete account of a system's causal structure, including irreducible higher-order mechanisms composed of multiple system elements. Second, a system's amount of integrated information ($\Phi$) measures the causal constraints a system exerts on itself and can peak at a macro level of description (Hoel et al., 2016; Marshall et al., 2018). Finally, the causal principles of IIT can also be employed to identify and quantify the actual causes of events ("what caused what"), such as an agent's actions (Albantakis et al., 2019). Here, we demonstrate this framework using the example of a simulated agent, equipped with a small neural network, that forms a maximum of $\Phi$ at a macro scale.
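Computing $\Phi$ itself is far too involved for a short snippet. The general idea that a causal measure can be larger at a macro scale than at the micro scale can still be illustrated with a simpler, different quantity: *effective information* (EI) from the causal-emergence literature. This is a swapped-in measure, not IIT's $\Phi$ and not the authors' method. The sketch below makes several assumptions. EI is taken as the mutual information between a uniform intervention over current states and the resulting next-state distribution. The 4-state transition probability matrix (TPM) is a hypothetical toy. The helper names `effective_information` and `coarse_grain` are illustrative only.

```python
import math

def effective_information(tpm):
    """EI in bits (assumed definition): the average KL divergence of each
    row of the TPM from the mean row, i.e. under a uniform intervention."""
    n = len(tpm)
    avg = [sum(row[j] for row in tpm) / n for j in range(len(tpm[0]))]
    total = 0.0
    for row in tpm:
        # Skip zero-probability entries (0 * log 0 is taken as 0).
        total += sum(p * math.log2(p / avg[j]) for j, p in enumerate(row) if p > 0)
    return total / n

def coarse_grain(tpm, groups):
    """Hypothetical macro TPM: for each source group, the probability of
    landing in each target group, averaged over the micro states in the source."""
    macro = []
    for src in groups:
        macro_row = []
        for dst in groups:
            mass = sum(sum(tpm[i][j] for j in dst) for i in src)
            macro_row.append(mass / len(src))
        macro.append(macro_row)
    return macro

# Toy micro system: states 0-2 hop uniformly among themselves; state 3 is fixed.
third = 1 / 3
micro = [
    [third, third, third, 0.0],
    [third, third, third, 0.0],
    [third, third, third, 0.0],
    [0.0, 0.0, 0.0, 1.0],
]
macro = coarse_grain(micro, [[0, 1, 2], [3]])

print(f"micro EI: {effective_information(micro):.3f} bits")
print(f"macro EI: {effective_information(macro):.3f} bits")
```

In this toy case the micro description is noisy within the group {0, 1, 2}, giving roughly 0.811 bits. The macro description maps each macro state deterministically onto itself, giving 1 bit. So the coarse-grained description carries more causal information under this particular measure.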
