Jory Schossau

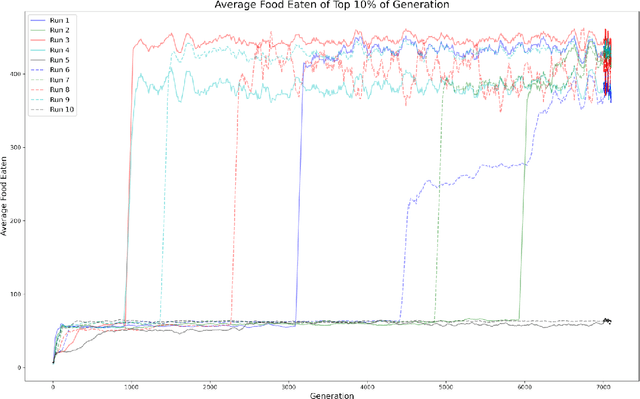

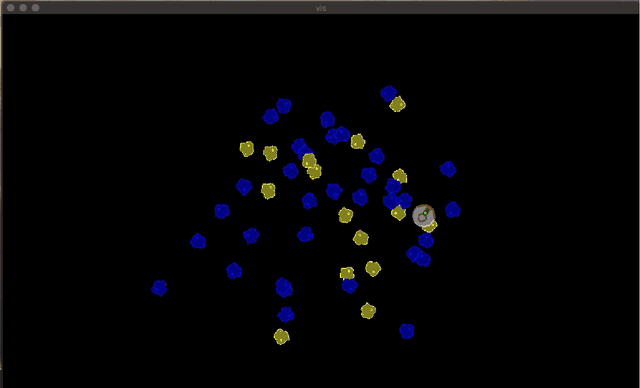

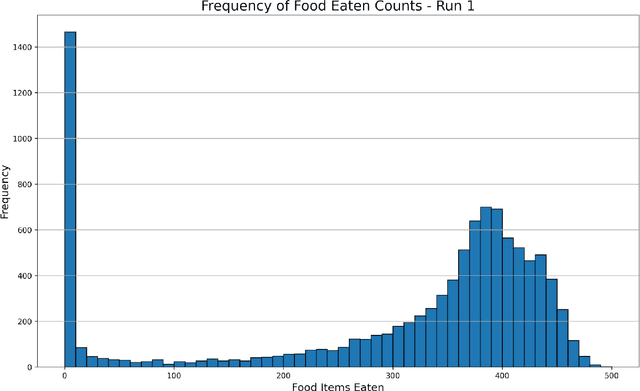

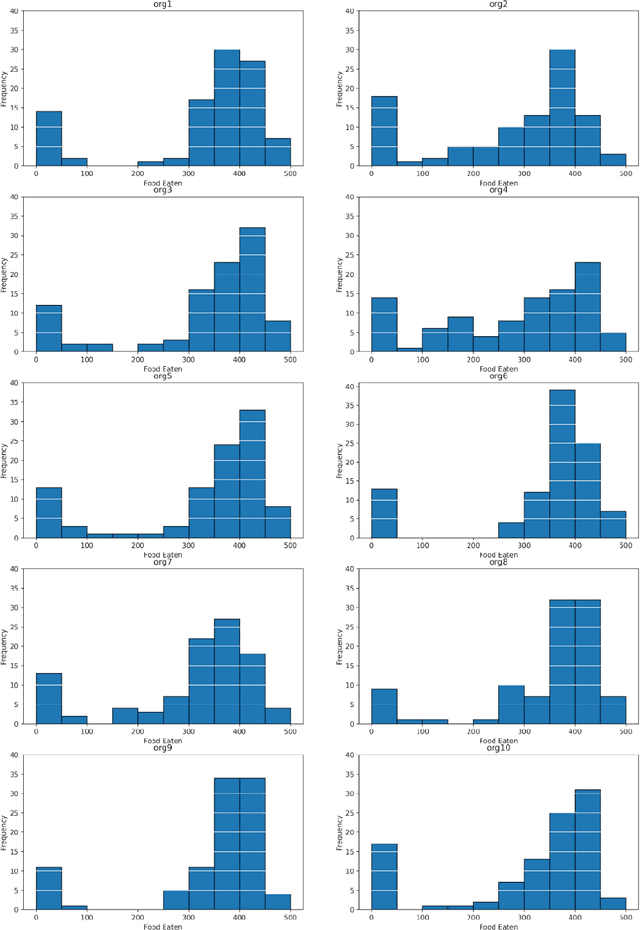

A neural net architecture based on principles of neural plasticity and development evolves to effectively catch prey in a simulated environment

Jan 31, 2022

Abstract: A profound challenge for A-Life is to construct agents whose behavior is 'life-like' in a deep way. We propose an architecture and an approach for constructing the networks that drive artificial agents, using processes analogous to those that construct and sculpt the brains of animals. Furthermore, the instantiation of action is dynamic: the whole network responds in real time to sensory inputs to activate effectors, rather than computing a representation of the optimal behavior and sending an encoded representation to effector controllers. There are many parameters, and we use an evolutionary algorithm to select them in the context of a specific prey-capture task. We think this architecture may be useful for controlling small autonomous robots or drones, because it allows for a rapid response to changes in sensor inputs.

The Role of Conditional Independence in the Evolution of Intelligent Systems

Jan 16, 2018

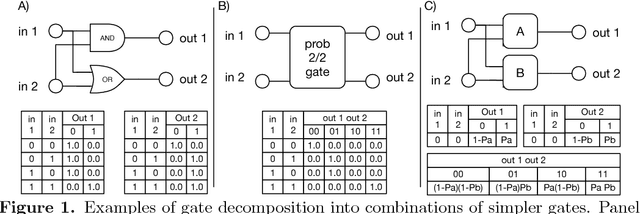

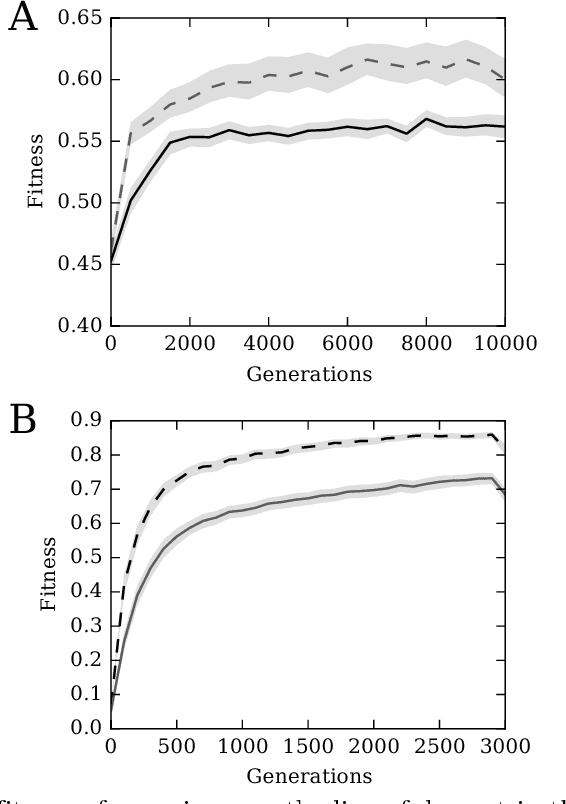

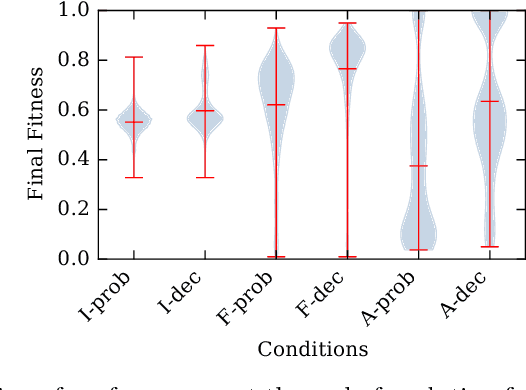

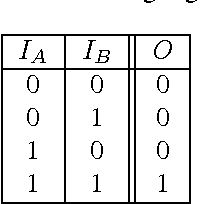

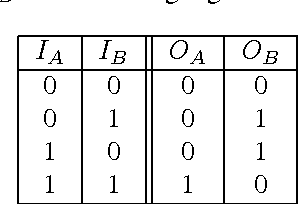

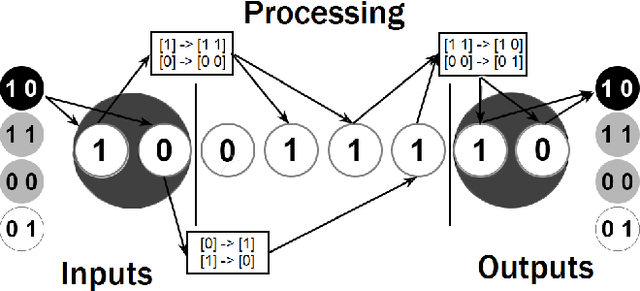

Abstract: Systems are typically made from simple components regardless of their complexity. While the function of each part is easily understood, higher-order functions are emergent properties and are notoriously difficult to explain. In networked systems, both digital and biological, each component receives inputs, performs a simple computation, and creates an output. When these components have multiple outputs, we intuitively assume that the outputs are causally dependent on the inputs but are themselves independent of each other given the state of their shared input. However, this intuition can be violated for components with probabilistic logic, as these typically cannot be decomposed into separate logic gates with one output each. This violation of conditional independence on the past system state is equivalent to instantaneous interaction: the idea is that some information shared between the outputs does not come from the inputs and thus must have been created instantaneously. Here we compare evolved artificial neural systems with and without instantaneous interaction across several task environments. We show that systems without instantaneous interactions evolve faster, reach higher final levels of performance, and require fewer logic components to create densely connected cognitive machinery.
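
The conditional-independence condition in this abstract can be made concrete with a small calculation. The sketch below is a hypothetical illustration, not the paper's code. It describes a one-input, two-output probabilistic gate by its table P(o1, o2 | input) and computes the conditional mutual information I(O1; O2 | I). This quantity is zero exactly when the outputs are conditionally independent given the input, meaning the gate could be rebuilt from two single-output gates. A positive value signals the kind of output correlation the abstract calls instantaneous interaction. The gate tables, the uniform input distribution, and all function names are assumptions chosen for illustration.

```python
import math

# Hypothetical probabilistic gate: one input bit, two output bits.
# Each row gives P(o1, o2 | input) over outputs ordered 00, 01, 10, 11.
# These probabilities are illustrative only, not taken from the paper.
COUPLED_GATE = {
    0: [0.5, 0.0, 0.0, 0.5],  # outputs always equal, each a fair coin
    1: [0.0, 0.5, 0.5, 0.0],  # outputs always differ
}

def factorized_gate(p1, p2):
    """Build a two-output gate from two independent single-output gates.
    p1[i] and p2[i] are P(output = 1 | input = i) for each output."""
    table = {}
    for i in (0, 1):
        a, b = p1[i], p2[i]
        table[i] = [(1 - a) * (1 - b), (1 - a) * b, a * (1 - b), a * b]
    return table

def conditional_mutual_information(table, p_input=(0.5, 0.5)):
    """I(O1; O2 | I) in bits; zero iff the outputs are conditionally
    independent given the input."""
    cmi = 0.0
    for i, p_i in zip((0, 1), p_input):
        joint = table[i]
        m1 = [joint[0] + joint[1], joint[2] + joint[3]]  # P(o1 | i)
        m2 = [joint[0] + joint[2], joint[1] + joint[3]]  # P(o2 | i)
        for idx, p in enumerate(joint):
            if p > 0:
                o1, o2 = idx >> 1, idx & 1
                cmi += p_i * p * math.log2(p / (m1[o1] * m2[o2]))
    return cmi

print(conditional_mutual_information(COUPLED_GATE))  # 1.0
print(conditional_mutual_information(factorized_gate([0.2, 0.9], [0.7, 0.4])))  # close to 0
```

The coupled gate carries one bit of shared information between its outputs that the input does not explain. The factorized gate carries none, apart from floating-point rounding.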

Markov Brains: A Technical Introduction

Sep 17, 2017

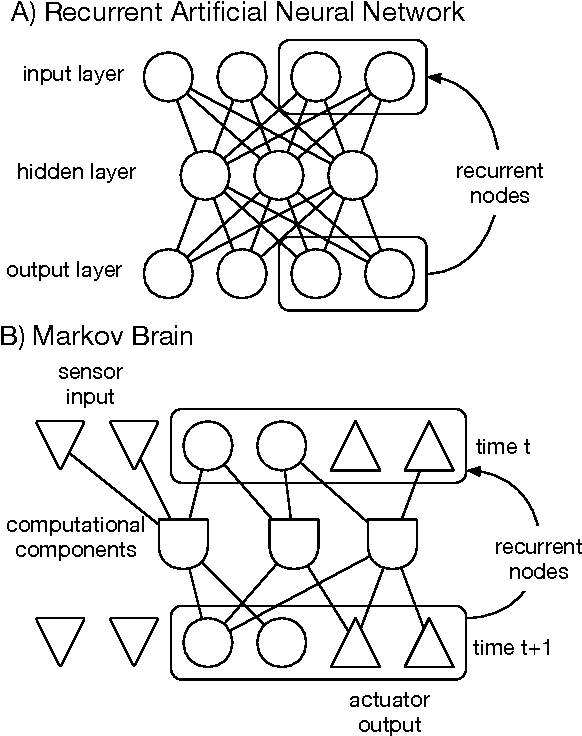

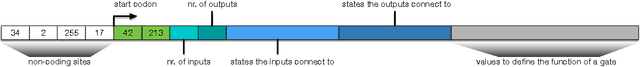

Abstract: Markov Brains are a class of evolvable artificial neural networks (ANNs). They differ from conventional ANNs in many aspects, but the key difference is that instead of a layered architecture, with each node performing the same function, Markov Brains are networks built from individual computational components. These computational components interact with each other, receive inputs from sensors, and control motor outputs. The function of the computational components, their connections to each other, and their connections to sensors and motors are all subject to evolutionary optimization. Here we describe in detail how a Markov Brain works, what techniques can be used to study them, and how they can be evolved.
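
The abstract describes Markov Brains as networks of individual computational components (gates) that read from sensors and other nodes and write to other nodes and motors. Below is a minimal sketch of one update step. It assumes binary node states, gates that read the state at time t and write to time t + 1, and OR-combination when several gates write to the same node. These conventions and all class and function names are assumptions for illustration. The evolutionary part (encoding gates in a genome and mutating them) is left out.

```python
import random
from dataclasses import dataclass

def output_patterns(n_out):
    """All 2**n_out binary output tuples, ordered 00..0 through 11..1."""
    return [tuple((k >> (n_out - 1 - b)) & 1 for b in range(n_out))
            for k in range(2 ** n_out)]

@dataclass
class DeterministicGate:
    inputs: list   # node indices read at time t
    outputs: list  # node indices written at time t + 1
    table: dict    # input tuple -> output tuple (a logic table)

    def fire(self, state, rng):
        return self.table[tuple(state[i] for i in self.inputs)]

@dataclass
class ProbabilisticGate:
    inputs: list
    outputs: list
    probs: dict    # input tuple -> probabilities over output_patterns(len(outputs))

    def fire(self, state, rng):
        weights = self.probs[tuple(state[i] for i in self.inputs)]
        return rng.choices(output_patterns(len(self.outputs)), weights=weights)[0]

def update(state, gates, rng):
    """One time step: every gate reads the state at time t, and the values
    written to each node are OR-combined into the state at time t + 1."""
    new_state = [0] * len(state)
    for gate in gates:
        for node, value in zip(gate.outputs, gate.fire(state, rng)):
            new_state[node] |= value
    return new_state

# Hypothetical 4-node brain: nodes 0 and 1 are sensors, node 2 is hidden,
# node 3 is a motor. An XOR gate maps the sensors onto the motor, and a
# probabilistic gate copies sensor 0 into the hidden node 90% of the time.
xor_gate = DeterministicGate(
    inputs=[0, 1], outputs=[3],
    table={(0, 0): (0,), (0, 1): (1,), (1, 0): (1,), (1, 1): (0,)})
noisy_copy = ProbabilisticGate(
    inputs=[0], outputs=[2],
    probs={(0,): [0.9, 0.1], (1,): [0.1, 0.9]})

rng = random.Random(42)
state = [1, 0, 0, 0]           # the environment sets the sensor nodes
state = update(state, [xor_gate, noisy_copy], rng)
print(state[3])                # 1: the XOR of the two sensor values
```

Sensor nodes are not written by any gate here, so the caller (the environment) would overwrite them before each step. A probabilistic gate with two outputs is exactly the kind of component that may violate conditional independence between its outputs.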

Computational evolution of decision-making strategies

Sep 18, 2015

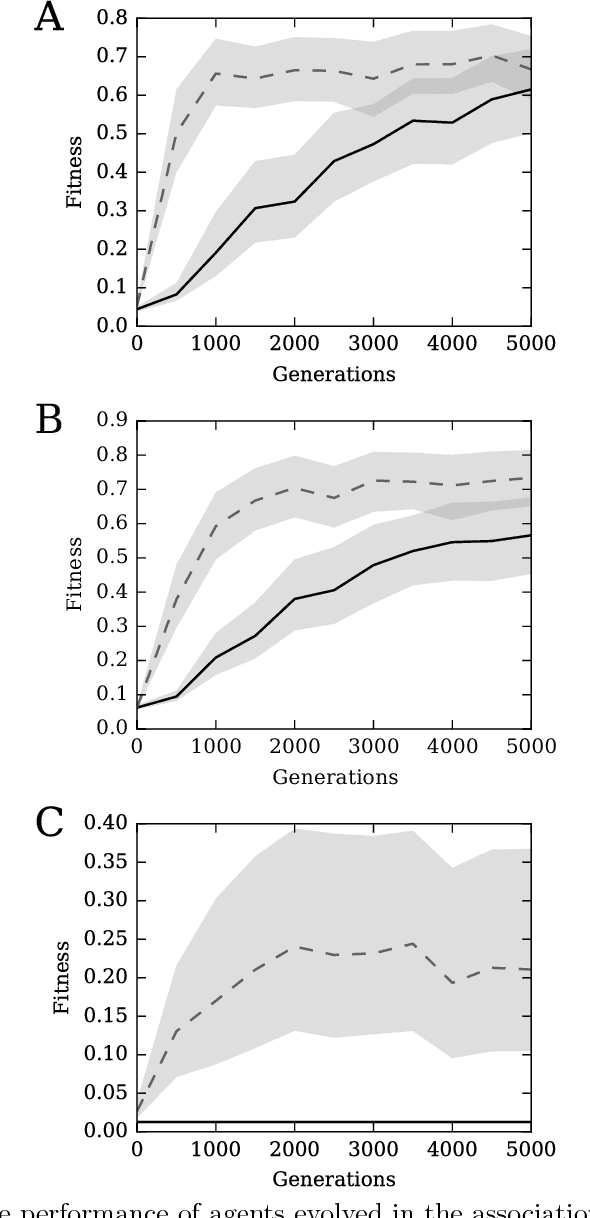

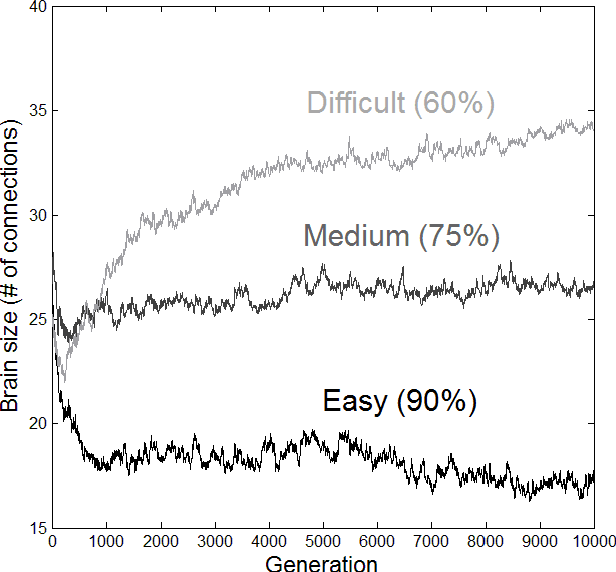

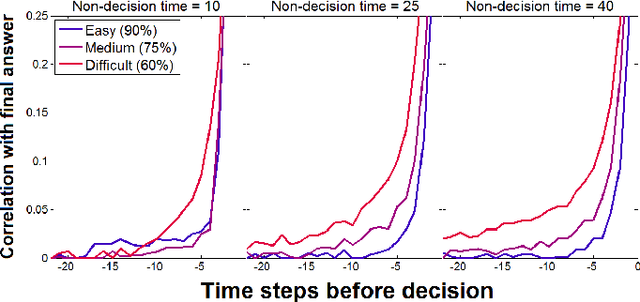

Abstract: Most research on adaptive decision-making takes a strategy-first approach, proposing a method of solving a problem and then examining whether it can be implemented in the brain and in what environments it succeeds. We present a method for studying strategy development based on computational evolution that takes the opposite approach, allowing strategies to develop in response to the decision-making environment via Darwinian evolution. We apply this approach to a dynamic decision-making problem where artificial agents make decisions about the source of incoming information. In doing so, we show that the complexity of the brains and strategies of evolved agents is a function of the environment in which they develop. More difficult environments lead to larger brains and more information use, resulting in strategies resembling a sequential sampling approach. Less difficult environments drive evolution toward smaller brains and less information use, resulting in simpler heuristic-like strategies.

* Conference paper, 6 pages / 3 figures
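
The sequential sampling strategy mentioned in the last abstract can be illustrated with a classic accumulator. It keeps a running log-likelihood ratio over incoming samples and commits to a source once the ratio crosses a fixed bound. This is a textbook sequential probability ratio test, offered only as a reference point. It is not the evolved agents' strategy. The two-source setup, the function name `sequential_sample`, and the parameters `p_a`, `threshold`, and `max_samples` are all assumptions.

```python
import math

def sequential_sample(stream, p_a=0.6, threshold=3.0, max_samples=1000):
    """Decide whether bits come from source A (P(bit = 1) = p_a) or source B
    (P(bit = 1) = 1 - p_a) by accumulating a log-likelihood ratio until it
    crosses +threshold (choose A) or -threshold (choose B).
    Returns (decision, samples_used)."""
    step = math.log(p_a / (1 - p_a))  # evidence carried by one sample
    llr = 0.0
    n = 0
    for bit in stream:
        n += 1
        llr += step if bit == 1 else -step
        if llr >= threshold:
            return "A", n
        if llr <= -threshold:
            return "B", n
        if n >= max_samples:
            break
    # Stream ended or sample budget exhausted: fall back to the sign of the evidence.
    return ("A" if llr >= 0 else "B"), n

print(sequential_sample([1] * 10))            # ('A', 8)
print(sequential_sample([1] * 20, p_a=0.55))  # ('A', 15)
```

Moving `p_a` toward 0.5 makes the two sources harder to tell apart. Each sample then carries less evidence, so more samples are needed before a decision. This loosely mirrors the abstract's link between environmental difficulty and information use.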