A neural net architecture based on principles of neural plasticity and development evolves to effectively catch prey in a simulated environment


Jan 31, 2022

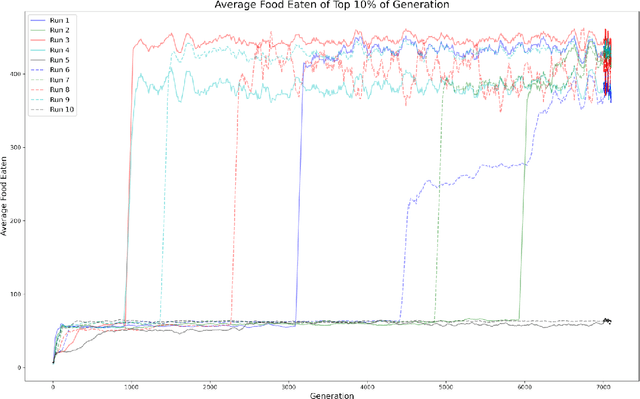

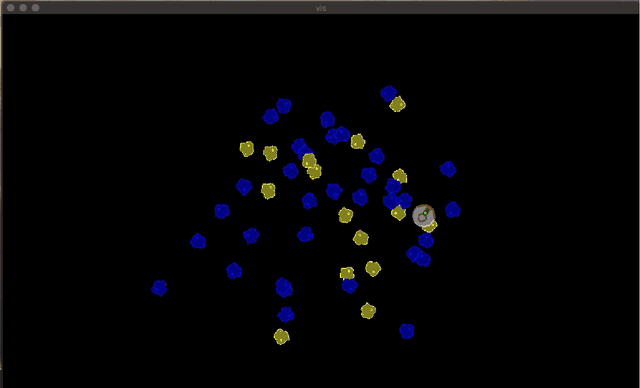

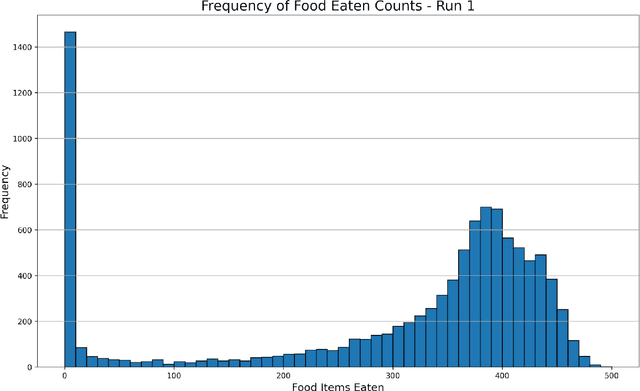

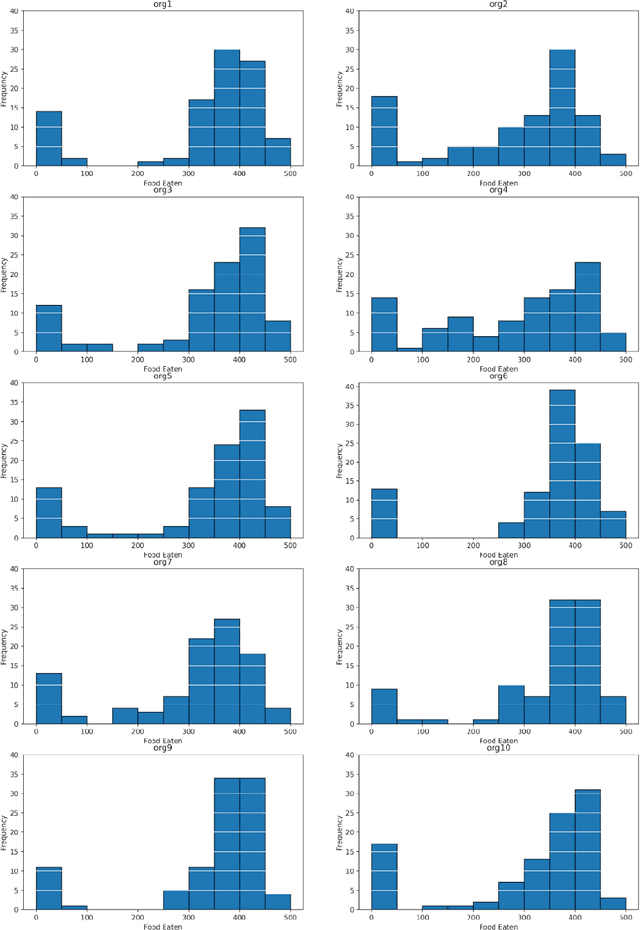

A profound challenge for A-Life is to construct agents whose behavior is 'life-like' in a deep way. We propose an architecture and approach for constructing the networks that drive artificial agents, using processes analogous to those that construct and sculpt the brains of animals. Furthermore, the instantiation of action is dynamic: the whole network responds in real time to sensory inputs to activate effectors, rather than computing a representation of the optimal behavior and sending an encoded version of it to effector controllers. The architecture has many parameters, which we select with an evolutionary algorithm in the context of a specific prey-capture task. We think this architecture may be useful for controlling small autonomous robots or drones, because it allows a rapid response to changes in sensor inputs.
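To make the idea of selecting parameters with an evolutionary algorithm concrete, here is a minimal sketch of one common variant: elitist selection with Gaussian mutation. It is not the paper's method. The names `evolve` and `toy_fitness`, the population settings, and the fitness function itself are all assumptions for illustration; in the paper's setting, fitness would presumably come from running an agent in the prey-capture simulation.

```python
import random


def toy_fitness(params):
    # Hypothetical stand-in for a prey-capture score: higher is better,
    # with a maximum of 0.0 when every parameter equals 0.5.
    return -sum((p - 0.5) ** 2 for p in params)


def evolve(fitness, n_params=8, pop_size=20, n_parents=5,
           generations=50, sigma=0.1, seed=0):
    """Elitist evolutionary search over a real-valued parameter vector.

    All settings are illustrative assumptions, not values from the paper.
    """
    rng = random.Random(seed)
    # Start from uniformly random parameter vectors in [0, 1).
    population = [[rng.random() for _ in range(n_params)]
                  for _ in range(pop_size)]
    best_per_generation = []
    for _ in range(generations):
        # Rank by fitness and keep the top individuals unchanged (elitism).
        population.sort(key=fitness, reverse=True)
        parents = population[:n_parents]
        best_per_generation.append(fitness(parents[0]))
        # Refill the population with mutated copies of random parents.
        children = []
        while len(parents) + len(children) < pop_size:
            parent = rng.choice(parents)
            children.append([p + rng.gauss(0.0, sigma) for p in parent])
        population = parents + children
    best = max(population, key=fitness)
    return best, best_per_generation


if __name__ == "__main__":
    best, history = evolve(toy_fitness)
    print("first-generation best fitness:", history[0])
    print("final best fitness:", toy_fitness(best))
```

Because the parents are carried over unchanged, the best fitness never decreases from one generation to the next. Replacing `toy_fitness` with a function that scores a simulated agent would be the main change needed to apply this loop to a task like prey capture.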
