Heather Eisthen

Computational evolution of decision-making strategies

Sep 18, 2015

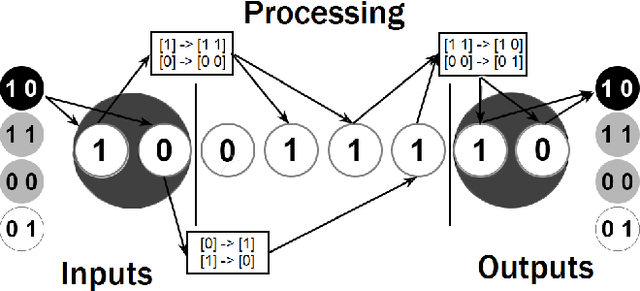

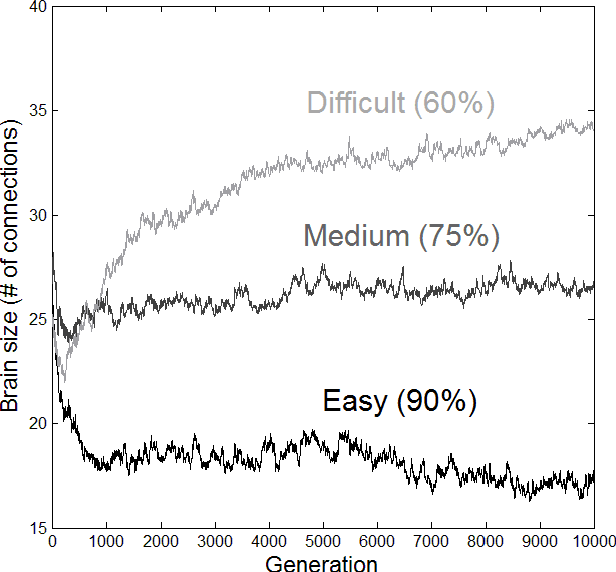

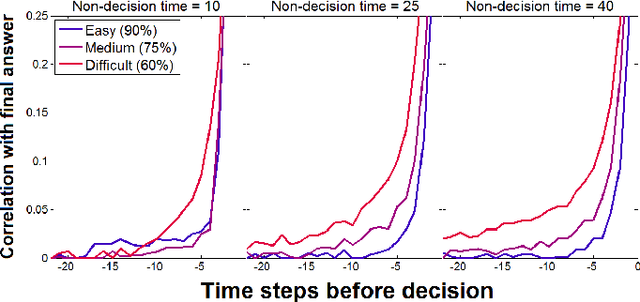

Abstract: Most research on adaptive decision-making takes a strategy-first approach, proposing a method of solving a problem and then examining whether it can be implemented in the brain and in what environments it succeeds. We present a method for studying strategy development based on computational evolution that takes the opposite approach, allowing strategies to develop in response to the decision-making environment via Darwinian evolution. We apply this approach to a dynamic decision-making problem in which artificial agents make decisions about the source of incoming information. In doing so, we show that the complexity of the brains and strategies of evolved agents is a function of the environment in which they develop. More difficult environments lead to larger brains and more information use, resulting in strategies resembling a sequential sampling approach. Less difficult environments drive evolution toward smaller brains and less information use, resulting in simpler heuristic-like strategies.

* Conference paper, 6 pages / 3 figures
