Micah Corah

Nightmare Dreamer: Dreaming About Unsafe States And Planning Ahead

Jan 08, 2026

Abstract: Reinforcement Learning (RL) has shown remarkable success in real-world applications, particularly in robotics control. However, RL adoption remains limited due to insufficient safety guarantees. We introduce Nightmare Dreamer, a model-based Safe RL algorithm that addresses safety concerns by leveraging a learned world model to predict potential safety violations and plan actions accordingly. Nightmare Dreamer achieves nearly zero safety violations while maximizing rewards. Nightmare Dreamer outperforms model-free baselines on Safety Gymnasium tasks using only image observations, achieving nearly a 20x improvement in efficiency.

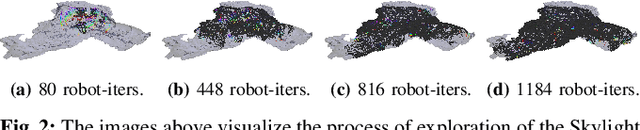

An Addendum to NeBula: Towards Extending TEAM CoSTAR's Solution to Larger Scale Environments

Apr 18, 2025

Abstract: This paper presents an addendum to the original NeBula autonomy solution developed by TEAM CoSTAR (Collaborative SubTerranean Autonomous Robots), which competed in the DARPA Subterranean Challenge. Specifically, this paper presents extensions to NeBula's hardware, software, and algorithmic components that focus on increasing the range and scale of the exploration environment. From the algorithmic perspective, we discuss the following extensions to the original NeBula framework: (i) large-scale geometric and semantic environment mapping; (ii) an adaptive positioning system; (iii) probabilistic traversability analysis and local planning; (iv) large-scale POMDP-based global motion planning and exploration behavior; (v) large-scale networking and decentralized reasoning; (vi) communication-aware mission planning; and (vii) multi-modal ground-aerial exploration solutions. We demonstrate the application and deployment of the presented systems and solutions in various large-scale underground environments, including limestone mine exploration scenarios as well as deployment in the DARPA Subterranean Challenge.

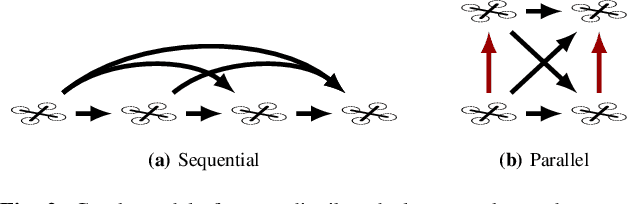

CoCap: Coordinated motion Capture for multi-actor scenes in outdoor environments

Dec 30, 2024

Abstract: Motion capture has become increasingly important, not only in computer animation but also in emerging fields such as virtual reality, bioinformatics, and humanoid training. Capturing motion outdoors enables scenes with extended horizons but introduces challenges from occlusions and obstacles. Recent approaches that use multi-drone systems to capture scenes with multiple actors often fail to account for multi-view consistency and reasoning across cameras in cluttered environments. Coordinated motion Capture (CoCap), inspired by Conflict-Based Search (CBS), addresses this issue by coordinating view planning to ensure multi-view reasoning during conflicts. In scenarios with many occlusions and obstacles, where the likelihood of inter-robot collisions increases, CoCap achieves performance approaching that of ideal unconstrained planning and outperforms existing sequential planning methods. Additionally, CoCap offers a single-robot view search approach for real-time applications in dense environments.
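Conflict-Based Search (CBS) pairs a low-level search, in which each agent plans independently under its current constraints, with a high-level best-first search that, whenever two agents conflict, branches into two children that each forbid one agent's conflicting choice. The sketch below applies that generic pattern to a hypothetical one-step toy: each robot picks a single camera position from its own candidate list, and two positions closer than an assumed `MIN_SEP` count as a conflict (a stand-in for an inter-robot collision). It is not CoCap's formulation, which reasons about multi-view capture; `cbs`, `best_allowed`, and `MIN_SEP` are illustrative names, and reward is maximized by pushing its negation onto a min-heap.

```python
import heapq
import itertools
import math

# Hypothetical toy: candidates_per_robot[r] is a list of ((x, y), reward) pairs.
MIN_SEP = 1.0  # assumed minimum separation; closer pairs count as a conflict


def best_allowed(candidates, forbidden):
    """Low-level search: highest-reward candidate index not in `forbidden`."""
    allowed = [i for i in range(len(candidates)) if i not in forbidden]
    if not allowed:
        return None
    return max(allowed, key=lambda i: candidates[i][1])


def total_reward(candidates_per_robot, plan):
    return sum(candidates_per_robot[r][i][1] for r, i in enumerate(plan))


def first_conflict(candidates_per_robot, plan):
    """Return a pair of robots whose chosen positions are too close, or None."""
    for a, b in itertools.combinations(range(len(plan)), 2):
        pa = candidates_per_robot[a][plan[a]][0]
        pb = candidates_per_robot[b][plan[b]][0]
        if math.dist(pa, pb) < MIN_SEP:
            return a, b
    return None


def cbs(candidates_per_robot):
    """High-level best-first search over constraint sets.

    Returns (total_reward, plan) for the best conflict-free plan, or None.
    """
    tie = itertools.count()  # tie-breaker so heapq never compares plans
    n = len(candidates_per_robot)
    root_constraints = tuple(frozenset() for _ in range(n))
    root_plan = [best_allowed(c, frozenset()) for c in candidates_per_robot]
    if None in root_plan:
        return None
    frontier = [(-total_reward(candidates_per_robot, root_plan), next(tie),
                 root_constraints, root_plan)]
    while frontier:
        neg_reward, _, constraints, plan = heapq.heappop(frontier)
        conflict = first_conflict(candidates_per_robot, plan)
        if conflict is None:
            return -neg_reward, plan
        # Branch: each child forbids one of the two robots' current choices.
        for robot in conflict:
            child_constraints = list(constraints)
            child_constraints[robot] = constraints[robot] | {plan[robot]}
            choice = best_allowed(candidates_per_robot[robot],
                                  child_constraints[robot])
            if choice is None:
                continue  # this robot has no allowed candidate left
            child_plan = list(plan)
            child_plan[robot] = choice
            heapq.heappush(frontier, (-total_reward(candidates_per_robot, child_plan),
                                      next(tie), tuple(child_constraints), child_plan))
    return None


# Usage: the two robots' best spots are only 0.5 apart, so one must yield.
robots = [
    [((0.0, 0.0), 5.0), ((3.0, 0.0), 4.0)],
    [((0.5, 0.0), 6.0), ((5.0, 0.0), 2.0)],
]
print(cbs(robots))  # (10.0, [1, 0])
```

Because each constraint only removes options, a child's reward never exceeds its parent's, so the first conflict-free plan popped is the best one in this toy. Robot 0 gives up its best spot (reward 5.0 to 4.0) rather than robot 1 dropping from 6.0 to 2.0.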

Multi-Robot Planning for Filming Groups of Moving Actors Leveraging Submodularity and Pixel Density

Apr 03, 2024

Abstract: Observing and filming a group of moving actors with a team of aerial robots is a challenging problem that combines elements of multi-robot coordination, coverage, and view planning. A single camera may observe multiple actors at once, and the robot team may observe individual actors from multiple views. As actors move about, groups may split, merge, and reform, and robots filming these actors should be able to adapt smoothly to such changes in actor formations. Rather than adopt an approach based on explicit formations or assignments, we propose an approach based on optimizing views directly. We model actors as moving polyhedra and compute approximate pixel densities for each face and camera view. Then, we propose an objective that exhibits diminishing returns as pixel densities increase from repeated observation. This gives rise to a multi-robot perception planning problem, which we solve via a combination of value iteration and greedy submodular maximization. We evaluate our approach on challenging scenarios modeled after various kinds of social behaviors and featuring different numbers of robots and actors, and we observe that robot assignments and formations arise implicitly based on the movements of groups of actors. Simulation results demonstrate that our approach consistently outperforms baselines; in addition to performing well under the planner's approximation of pixel densities, our approach also performs comparably when evaluated on rendered views. Overall, the multi-round variant of the sequential planner we propose meets (within 1%) or exceeds the formation and assignment baselines in all scenarios we consider.
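A diminishing-returns objective combined with sequential greedy selection can be sketched in a few lines. Assumptions that are ours, not the paper's: each candidate view is given as a dict from a hypothetical face id to an already-computed pixel density, the concave function applied to each face's total density is `sqrt`, and each robot picks a single view (the paper additionally uses value iteration to plan each robot's views over time). `view_objective` and `sequential_greedy` are illustrative names.

```python
import math


def view_objective(selected_views):
    """Sum over faces of sqrt(total pixel density on that face).

    sqrt is an assumed concave choice: observing a face again adds less
    than observing it the first time, which gives diminishing returns.
    """
    totals = {}
    for view in selected_views:
        for face, density in view.items():
            totals[face] = totals.get(face, 0.0) + density
    return sum(math.sqrt(total) for total in totals.values())


def sequential_greedy(candidates_per_robot):
    """Robots choose one at a time; each maximizes its marginal gain
    given the views already chosen by earlier robots."""
    selected, choices = [], []
    for candidates in candidates_per_robot:
        base = view_objective(selected)
        best = max(range(len(candidates)),
                   key=lambda i: view_objective(selected + [candidates[i]]) - base)
        choices.append(best)
        selected.append(candidates[best])
    return choices, view_objective(selected)


# The second robot could film face "A" or face "B" at equal density, but
# face "A" is already covered, so diminishing returns favor face "B".
robots = [
    [{"A": 16.0}, {"B": 9.0}],
    [{"A": 9.0}, {"B": 9.0}],
]
print(sequential_greedy(robots))  # ([0, 1], 7.0)
```

Here the second robot's marginal gain is 1.0 for face "A" (from sqrt(16) to sqrt(25)) but 3.0 for face "B", so an implicit assignment emerges without any explicit formation.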

Greedy Perspectives: Multi-Drone View Planning for Collaborative Coverage in Cluttered Environments

Oct 16, 2023

Abstract: Deployment of teams of aerial robots could enable large-scale filming of dynamic groups of people (actors) in complex environments for novel applications in areas such as team sports and cinematography. Toward this end, methods for submodular maximization via sequential greedy planning can be used for scalable optimization of camera views across teams of robots, but they face challenges with efficient coordination in cluttered environments. Obstacles can produce occlusions and increase the chance of inter-robot collisions, which can violate the requirements for near-optimality guarantees. To coordinate teams of aerial robots in filming groups of people in dense environments, a more general view-planning approach is required. We explore how collision and occlusion impact performance in filming applications through the development of a multi-robot multi-actor view planner with an occlusion-aware objective for filming groups of people, and we compare it with a greedy formation planner. To evaluate performance, we plan in five test environments with complex multiple-actor behaviors. Compared with the formation planner, our sequential planner generates 14% greater view reward over the actors in three scenarios and performs comparably to formation planning in the other two. We also observe nearly identical performance of sequential planning with and without inter-robot collision constraints. Overall, we demonstrate effective coordination of teams of aerial robots for filming groups that may split, merge, or spread apart, in environments cluttered with obstacles that may cause collisions or occlusions.
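The two ingredients named above, an occlusion-aware objective and inter-robot collision constraints inside sequential planning, can be illustrated with a small 2D sketch. The assumptions are ours: obstacles are circles, an actor counts as seen when the straight line of sight from the camera misses every obstacle, the reward is the number of newly seen actors, and all robots choose from one shared list of candidate camera positions. The paper's objective and environments are richer; `plan_sequentially` and the other names are hypothetical.

```python
import math

# Hypothetical 2D toy: actors and cameras are (x, y) points,
# obstacles are circles given as ((cx, cy), radius).


def segment_hits_circle(p, q, center, radius):
    """True if the segment p-q passes strictly within `radius` of `center`."""
    (px, py), (qx, qy), (cx, cy) = p, q, center
    dx, dy = qx - px, qy - py
    length_sq = dx * dx + dy * dy
    if length_sq == 0.0:
        return math.dist(p, center) < radius
    # Project the circle center onto the segment, clamped to its endpoints.
    t = max(0.0, min(1.0, ((cx - px) * dx + (cy - py) * dy) / length_sq))
    return math.dist((px + t * dx, py + t * dy), center) < radius


def visible_actors(camera, actors, obstacles):
    """Indices of actors whose line of sight from `camera` is unobstructed."""
    return {i for i, actor in enumerate(actors)
            if not any(segment_hits_circle(camera, actor, c, r)
                       for c, r in obstacles)}


def plan_sequentially(candidates, num_robots, actors, obstacles, min_sep):
    """Greedy, one robot at a time: maximize newly seen actors while
    staying at least `min_sep` away from cameras already chosen."""
    chosen, covered = [], set()
    for _ in range(num_robots):
        best, best_gain = None, -1
        for cam in candidates:
            if any(math.dist(cam, other) < min_sep for other in chosen):
                continue  # would violate the inter-robot collision constraint
            gain = len(visible_actors(cam, actors, obstacles) - covered)
            if gain > best_gain:
                best, best_gain = cam, gain
        if best is None:
            break  # no collision-free candidate is left
        chosen.append(best)
        covered |= visible_actors(best, actors, obstacles)
    return chosen, covered


actors = [(0.0, 0.0), (10.0, 0.0)]
obstacles = [((2.5, 5.0), 1.0)]
print(visible_actors((5.0, 10.0), actors, obstacles))  # {1}: actor 0 is occluded
```

In this toy, the camera at (5, 10) loses actor 0 behind the obstacle, and a second robot cannot take a spot within `min_sep` of the first, so it falls back to a farther candidate even when that candidate adds nothing new.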

Multi-Robot Multi-Room Exploration with Geometric Cue Extraction and Spherical Decomposition

Jul 27, 2023

Abstract: This work proposes an autonomous multi-robot exploration pipeline that coordinates the behaviors of robots in an indoor environment composed of multiple rooms. In contrast to simple frontier-based exploration approaches, we aim to enable robots to methodically explore and observe an unknown set of rooms in a structured building, keeping track of which rooms have already been explored and sharing this information among robots to coordinate their behaviors in a distributed manner. To this end, we propose (1) a geometric cue extraction method that processes 3D map point cloud data and detects the locations of potential cues such as doors and rooms, and (2) a spherical decomposition of open spaces used for target assignment. Using these two components, our pipeline effectively assigns tasks among robots and enables methodical exploration of rooms. We evaluate the performance of our pipeline using a team of up to 3 aerial robots and show that our method outperforms the baseline by 36.6% in simulation and 26.4% in real-world experiments.

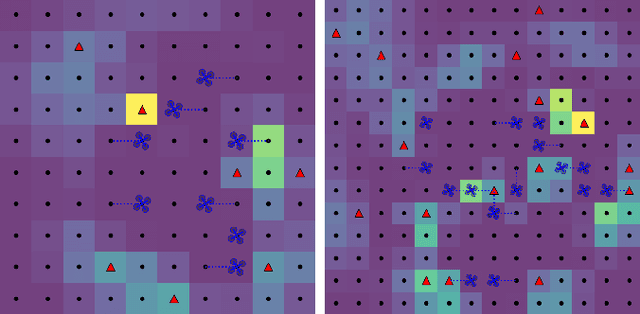

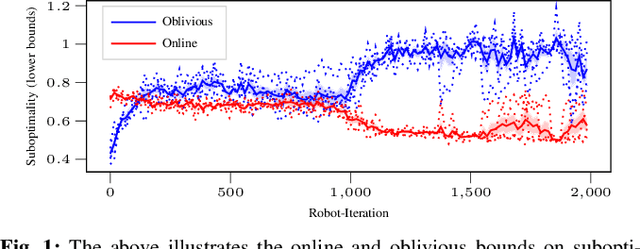

Scalable Distributed Planning for Multi-Robot, Multi-Target Tracking

Jul 18, 2021

Abstract: In multi-robot multi-target tracking, robots coordinate to monitor groups of targets moving about an environment. We approach planning for such scenarios by formulating a receding-horizon, multi-robot sensing problem with a mutual information objective. Such problems are NP-hard in general. Yet our objective is submodular, which enables certain greedy planners to guarantee constant-factor suboptimality. However, these greedy planners require robots to plan their actions in sequence, one robot at a time, so planning time is at least proportional to the number of robots. Solving these problems becomes intractable for large teams, even with distributed implementations. Our prior work proposed a distributed planner (RSP) that reduces this number of sequential steps to a constant, even for large numbers of robots, by allowing robots to plan in parallel while ignoring some of each other's decisions. Although that analysis is not applicable to target tracking, we prove a similar guarantee, that RSP planning approaches the performance guarantees of fully sequential planners, by employing a novel bound that takes advantage of the independence of target motions to quantify effective redundancy between robots' observations and actions. Further, we present analysis that explicitly accounts for features of practical implementations, including approximations to the objective and anytime planning. Simulation results for target tracking with ranging sensors (available via an open-source release) demonstrate that our planners consistently approach the performance of sequential planning (in terms of position uncertainty) given only 2 to 8 planning steps, for as many as 96 robots, with a 24x reduction in the number of sequential steps in planning. Thus, this work makes planning for multi-robot target tracking tractable at much larger scales than before, for practical planners and general tracking problems.
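The RSP idea described above (a fixed number of sequential steps in which robots plan in parallel while ignoring some of each other's decisions) can be sketched as follows. Assumptions: each robot is assigned uniformly at random to one of `num_rounds` rounds, as we understand the authors' prior RSP work; robots in a round condition on all decisions from earlier rounds but not on each other; and a simple set-coverage objective stands in for the mutual information objective used for target tracking. `plan_in_rounds` and `rsp_plan` are illustrative names.

```python
import random

# Hypothetical toy: options_per_robot[r] lists robot r's candidate actions,
# each represented by the set of cells (or targets) that action would observe.


def coverage(observed_sets):
    """Number of distinct cells observed (a monotone submodular objective)."""
    return len(set().union(*observed_sets))


def plan_in_rounds(options_per_robot, rounds, num_rounds):
    """Robots in round k plan in parallel: each conditions only on the
    decisions from rounds 0..k-1 and ignores robots in the same round."""
    decisions = {}
    for k in range(num_rounds):
        prior = [options_per_robot[r][i] for r, i in decisions.items()]
        base = coverage(prior)
        for r, options in enumerate(options_per_robot):
            if rounds[r] != k:
                continue
            decisions[r] = max(range(len(options)),
                               key=lambda i: coverage(prior + [options[i]]) - base)
    return [decisions[r] for r in range(len(options_per_robot))]


def rsp_plan(options_per_robot, num_rounds, rng=random):
    """Assign each robot to one of `num_rounds` rounds uniformly at random."""
    rounds = [rng.randrange(num_rounds) for _ in options_per_robot]
    return plan_in_rounds(options_per_robot, rounds, num_rounds)


options = [[{1, 2, 3}, {4}], [{1, 2, 3}, {4}]]
print(plan_in_rounds(options, [0, 0], 1))  # [0, 0]: fully parallel, redundant
print(plan_in_rounds(options, [0, 1], 2))  # [0, 1]: second robot sees the first
```

With a single round, both robots pick the same high-value option and their observations are fully redundant; with each robot in its own round, the result matches fully sequential greedy planning. Fixing `num_rounds` independently of the team size bounds the number of sequential steps, which is the trade-off the abstract analyzes.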

Volumetric Objectives for Multi-Robot Exploration of Three-Dimensional Environments

Mar 26, 2021

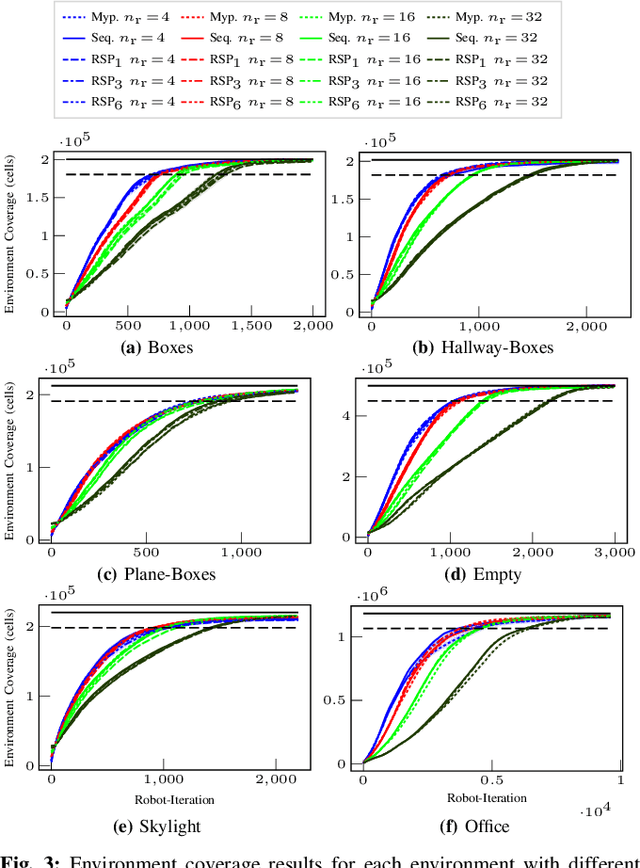

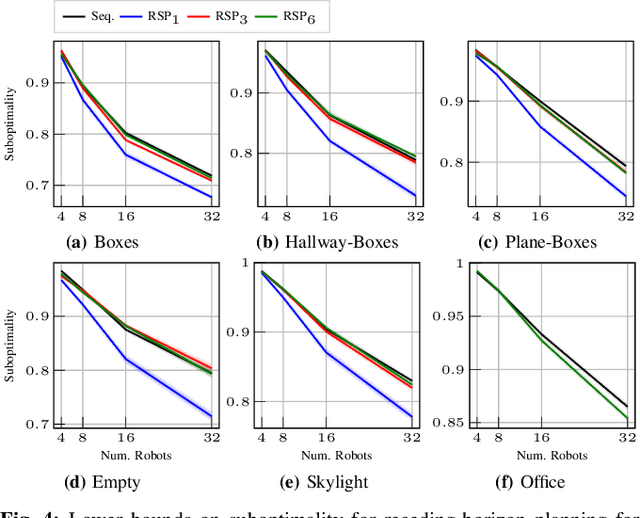

Abstract: Volumetric objectives for exploration and perception tasks seek to capture a sense of value (or reward) for hypothetical observations at one or more camera views for robots operating in unknown environments. For example, a volumetric objective may reward robots in proportion to the expected volume of unknown space to be observed. We identify connections between existing information-theoretic and coverage objectives in terms of expected coverage; in particular, mutual information without noise is a special case of expected coverage. We also provide what is, to our knowledge, the first comparison between information-based approximations and coverage objectives for exploration, and we find, perhaps surprisingly, that coverage objectives can significantly outperform information-based objectives in practice. Additionally, the analysis for information and coverage objectives demonstrates that Randomized Sequential Partitions (RSP), a method for efficient distributed sensor planning, applies to both classes of objectives, and we provide simulation results in a variety of environments for as many as 32 robots.
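The statement that mutual information without noise is a special case of expected coverage can be illustrated under two extra assumptions of ours: map cells are independent binary occupancy variables, and a view reveals the exact state of the cells it observes. Then I(X; Y) = H(Y) - H(Y | X) = H(X_S) - 0, the joint entropy of the observed cells S, which for independent cells is a coverage objective that weights each observed cell by its entropy. When every unknown cell has occupancy probability 0.5, each contributes exactly one bit and the two objectives coincide. The cell model and function names are hypothetical, not the paper's formulation.

```python
import math

# Hypothetical occupancy map: cell id -> probability that the cell is occupied.
# Cells are assumed independent; 0.0 or 1.0 means the cell is already known.


def binary_entropy(p):
    """Entropy in bits of a Bernoulli(p) cell."""
    if p in (0.0, 1.0):
        return 0.0
    return -(p * math.log2(p) + (1.0 - p) * math.log2(1.0 - p))


def noiseless_mutual_information(occupancy, observed_cells):
    """Noiseless sensing reveals the observed cells exactly, so
    I(map; observation) = H(observed cells) = sum of per-cell entropies."""
    return sum(binary_entropy(occupancy[c]) for c in set(observed_cells))


def unknown_cell_coverage(occupancy, observed_cells):
    """Count of observed cells whose state is not yet known."""
    return sum(1 for c in set(observed_cells) if occupancy[c] not in (0.0, 1.0))


occupancy = {0: 0.5, 1: 0.5, 2: 0.0, 3: 1.0, 4: 0.2}
view = [0, 1, 2, 3]
print(noiseless_mutual_information(occupancy, view))  # 2.0
print(unknown_cell_coverage(occupancy, view))  # 2
```

For a cell with probability 0.2, the information-based objective assigns less than one bit, whereas the unweighted coverage count would still assign it a full unit; that weighting is the only difference between the two objectives in this toy.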

Sensor Planning for Large Numbers of Robots

Feb 08, 2021

Abstract: *The following abbreviates the abstract. Please refer to the thesis for the full abstract.* After a disaster, locating and extracting victims quickly is critical because mortality rises rapidly after the first two days. To assist search and rescue teams and improve response times, teams of camera-equipped aerial robots can engage in tasks such as mapping buildings and locating victims. These sensing tasks involve difficult (NP-hard) problems. One way to simplify planning for these tasks is to focus on maximizing sensing performance over a short time horizon. Specifically, consider the problem of how to select motions for a team of robots to maximize a notion of sensing quality (the sensing objective) over the near future, for example by maximizing the amount of unknown space in a map that robots will observe over the next several seconds. By repeating this process regularly, the team can react quickly to new observations as they work to complete the sensing task. In technical terms, this planning and control process is an example of receding-horizon control. Fortunately, common sensing objectives benefit from well-known monotonicity properties (e.g., submodularity), and greedy algorithms can exploit these properties to solve, near-optimally, the receding-horizon optimization problems that we study. However, greedy algorithms typically force robots to make decisions sequentially, so planning time grows with the number of robots. Further, recent works that investigate sequential greedy planning have demonstrated that reducing the number of sequential steps while retaining suboptimality guarantees can be hard or impossible. We demonstrate that halting growth in planning time is sometimes possible. To do so, we introduce novel greedy algorithms involving fixed numbers of sequential steps.

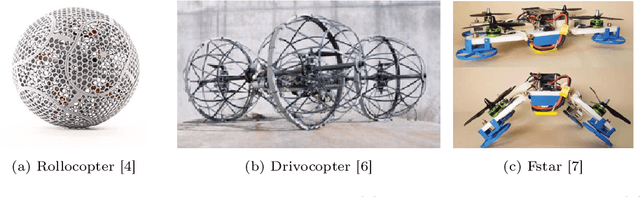

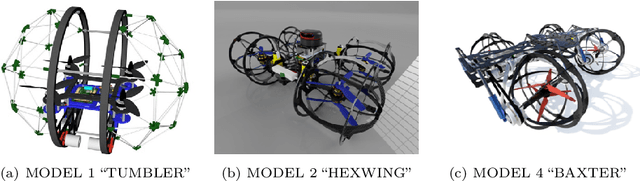

BAXTER: Bi-modal Aerial-Terrestrial Hybrid Vehicle for Long-endurance Versatile Mobility: Preprint Version

Feb 05, 2021

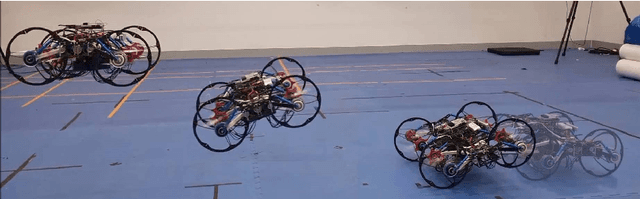

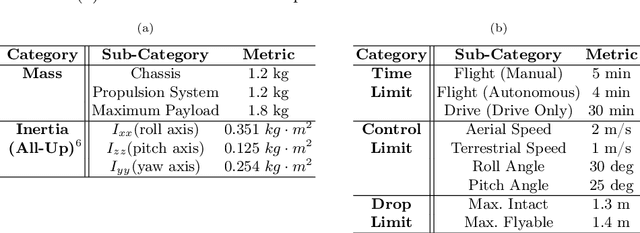

Abstract: Unmanned aerial vehicles are rapidly evolving within the field of robotics. However, their performance is often limited by payload capacity, operational time, and robustness to impact and collision. These limitations become more acute for missions in challenging environments such as subterranean structures, which may require extended autonomous operation in confined spaces. While software solutions for aerial robots are developing rapidly, hardware improvements are critical for applying advanced planners and algorithms in large and dangerous environments, where the short range and high susceptibility to collisions of most modern aerial robots make realistic subterranean missions infeasible. Providing such hardware capabilities requires a design that takes into account Size, Weight, and Power (SWaP) constraints. This work focuses on providing a robust and versatile hybrid platform that improves payload capacity, operation time, endurance, and versatility. The Bi-modal Aerial and Terrestrial hybrid vehicle (BAXTER) is a solution that provides two modes of operation, aerial and terrestrial. BAXTER employs two novel hardware mechanisms, the M-Suspension and the Decoupled Transmission, which together provide resilience during landing and crashes and efficient terrestrial operation. Extensive flight tests were conducted to characterize the vehicle's capabilities, including robustness and endurance. Additionally, we propose Agile Mode Transfer (AMT), a quick and simple transition from aerial to terrestrial operation that exploits BAXTER's resilience to impact and seeks to minimize impulses when the vehicle strikes the ground.
