Nikhilesh Alatur

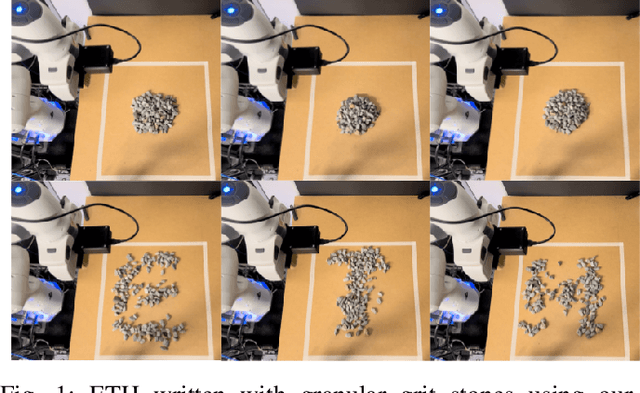

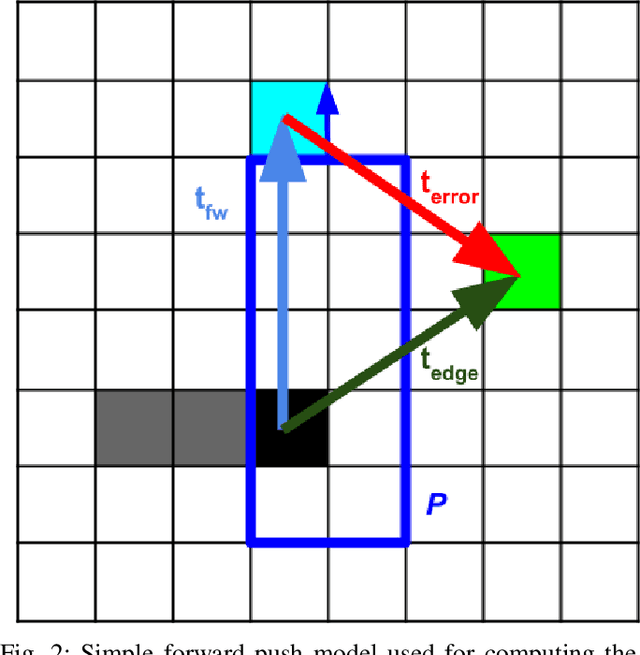

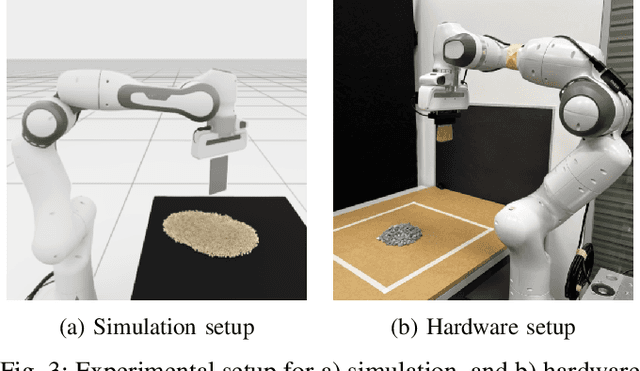

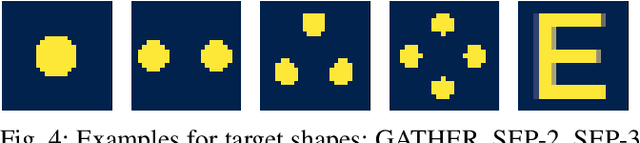

Material-agnostic Shaping of Granular Materials with Optimal Transport

Mar 29, 2023

Abstract: From construction materials, such as sand or asphalt, to kitchen ingredients, like rice, sugar, or salt, the world is full of granular materials. Despite impressive progress in robotic manipulation, manipulating and interacting with granular material remains a challenge due to difficulties in perceiving, representing, modelling, and planning for these variable materials that have complex internal dynamics. While some prior work has looked into estimating or learning accurate dynamics models for granular materials, the literature is still missing a more abstract planning method that can be used for planning manipulation actions for granular materials with unknown material properties. In this work, we leverage tools from optimal transport and connect them to robot motion planning. We propose a heuristics-based sweep planner that does not require knowledge of the material's properties and directly uses a height map representation to generate promising sweeps. These sweeps transform granular material from arbitrary start shapes into arbitrary target shapes. We apply the sweep planner in a fast and reactive feedback loop and avoid the need for model-based planning over multiple time steps. We validate our approach with a large set of simulation and hardware experiments where we show that our method is capable of efficiently solving several complex tasks, including gathering, separating, and shaping of several types of granular materials into different target shapes.

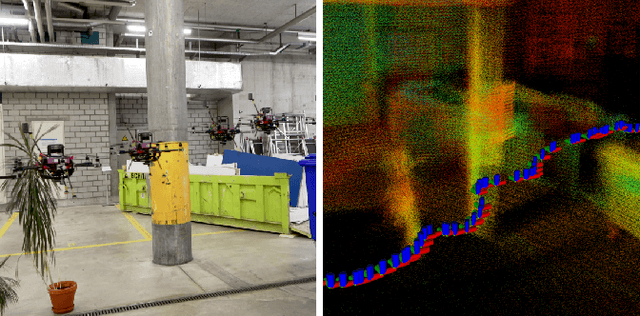

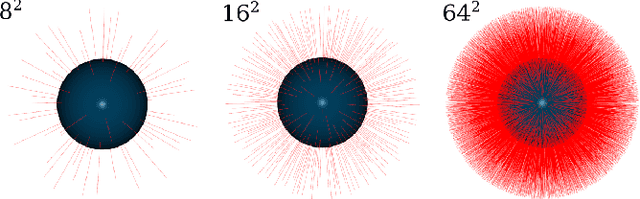

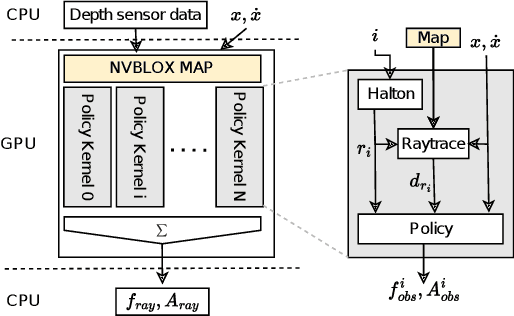

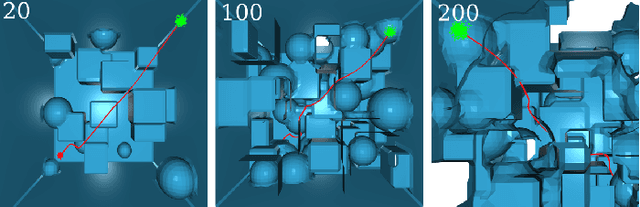

Obstacle avoidance using raycasting and Riemannian Motion Policies at kHz rates for MAVs

Jan 19, 2023

Abstract: In this paper, we present a novel method for using Riemannian Motion Policies on volumetric maps, demonstrated on the example of obstacle avoidance for Micro Aerial Vehicles (MAVs). While sampling or optimization-based planners are widely used for obstacle avoidance with volumetric maps, they are computationally expensive and often have inflexible monolithic architectures. Riemannian Motion Policies are a modular, parallelizable, and efficient navigation paradigm but are challenging to use with the widely used voxel-based environment representations. We propose using GPU raycasting and a large number of concurrent policies to provide direct obstacle avoidance using Riemannian Motion Policies in voxelized maps without the need for smoothing or pre-processing of the map. Additionally, we present how the same method can directly plan on LiDAR scans without the need for an intermediate map. We show how this reactive approach compares favorably to traditional planning methods and is able to plan using thousands of rays at kilohertz rates. We demonstrate the planner successfully on a real MAV for static and dynamic obstacles. The presented planner is made available as an open-source software package.

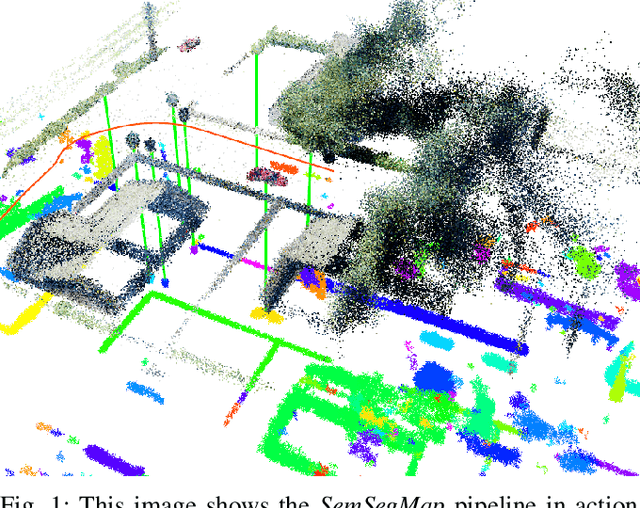

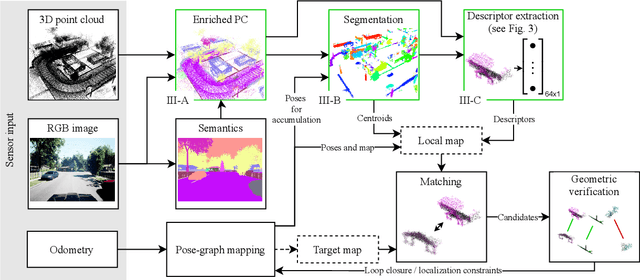

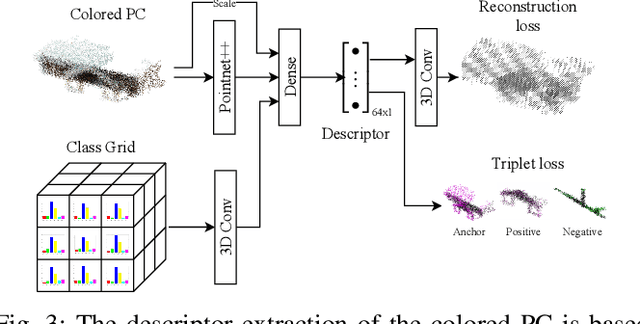

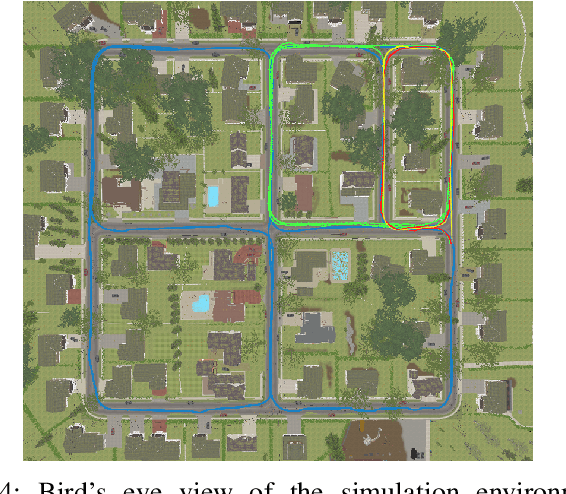

SemSegMap - 3D Segment-Based Semantic Localization

Jul 30, 2021

Abstract: Localization is an essential task for mobile autonomous robotic systems that want to use pre-existing maps or create new ones in the context of SLAM. Today, many robotic platforms are equipped with high-accuracy 3D LiDAR sensors, which allow geometric mapping, and cameras able to provide semantic cues of the environment. Segment-based mapping and localization have been applied with great success to 3D point-cloud data, while semantic understanding has been shown to improve localization performance in vision-based systems. In this paper, we combine both modalities in SemSegMap, extending SegMap into a segment-based mapping framework able to also leverage color and semantic data from the environment to improve localization accuracy and robustness. In particular, we present new segmentation and descriptor extraction processes. The segmentation process benefits from additional distance information from color and semantic class consistency, resulting in more repeatable segments and more overlap after re-visiting a place. For the descriptor, a tight fusion approach in a deep-learned descriptor extraction network is performed, leading to a higher descriptiveness for landmark matching. We demonstrate the advantages of this fusion on multiple simulated and real-world datasets and compare its performance to various baselines. We show that we are able to find 50.9% more high-accuracy prior-less global localizations compared to SegMap on challenging datasets using very compact maps while also providing accurate full 6 DoF pose estimates in real-time.

NeBula: Quest for Robotic Autonomy in Challenging Environments; TEAM CoSTAR at the DARPA Subterranean Challenge

Mar 28, 2021

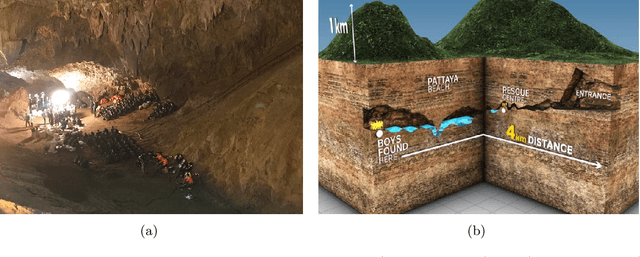

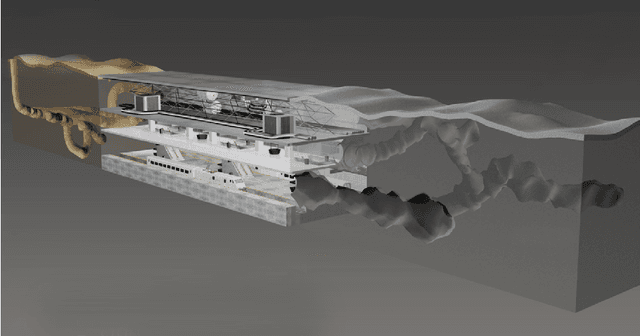

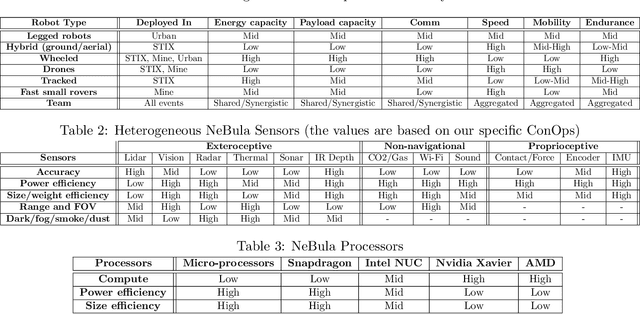

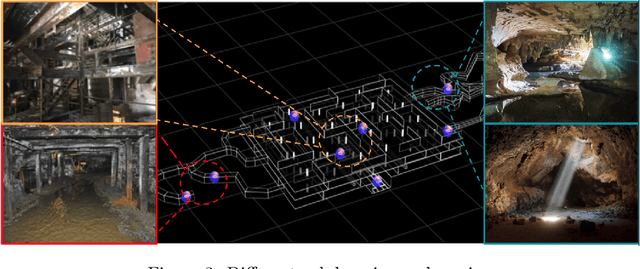

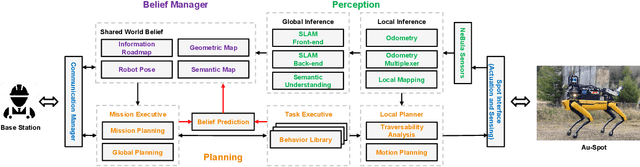

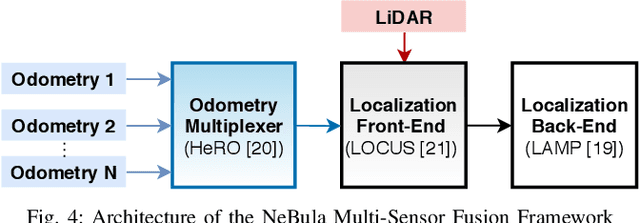

Abstract: This paper presents and discusses algorithms, hardware, and software architecture developed by TEAM CoSTAR (Collaborative SubTerranean Autonomous Robots), competing in the DARPA Subterranean Challenge. Specifically, it presents the techniques utilized within the Tunnel (2019) and Urban (2020) competitions, where CoSTAR achieved 2nd and 1st place, respectively. We also discuss CoSTAR's demonstrations in Martian-analog surface and subsurface (lava tubes) exploration. The paper introduces our autonomy solution, referred to as NeBula (Networked Belief-aware Perceptual Autonomy). NeBula is an uncertainty-aware framework that aims at enabling resilient and modular autonomy solutions by performing reasoning and decision making in the belief space (space of probability distributions over the robot and world states). We discuss various components of the NeBula framework, including: (i) geometric and semantic environment mapping; (ii) a multi-modal positioning system; (iii) traversability analysis and local planning; (iv) global motion planning and exploration behavior; (v) risk-aware mission planning; (vi) networking and decentralized reasoning; and (vii) learning-enabled adaptation. We discuss the performance of NeBula on several robot types (e.g. wheeled, legged, flying), in various environments. We discuss the specific results and lessons learned from fielding this solution in the challenging courses of the DARPA Subterranean Challenge competition.

Autonomous Off-road Navigation over Extreme Terrains with Perceptually-challenging Conditions

Jan 26, 2021

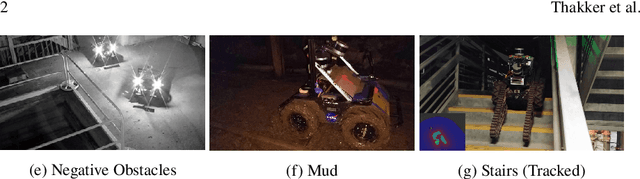

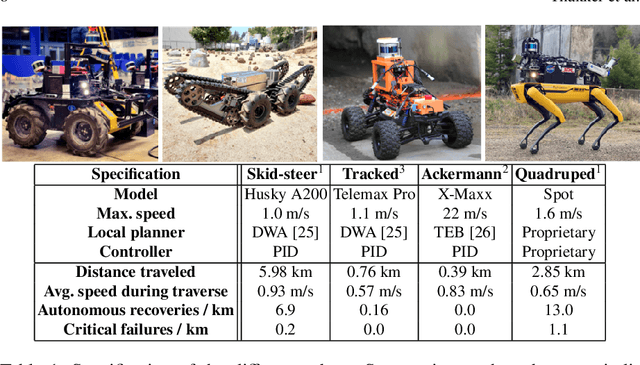

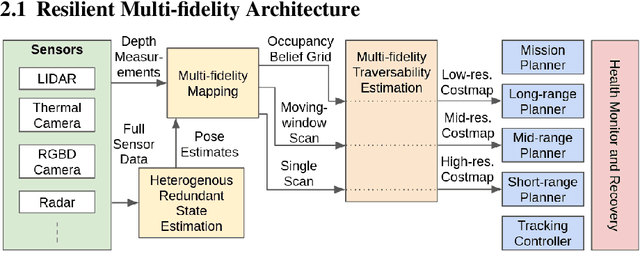

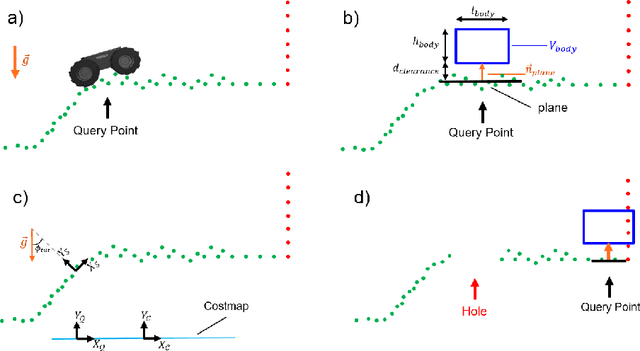

Abstract: We propose a framework for resilient autonomous navigation in perceptually challenging unknown environments with mobility-stressing elements such as uneven surfaces with rocks and boulders, steep slopes, negative obstacles like cliffs and holes, and narrow passages. Environments are GPS-denied and perceptually degraded, with variable lighting from dark to lit and obscurants (dust, fog, smoke). Lack of prior maps and degraded communication eliminate the possibility of prior or off-board computation or operator intervention. This necessitates real-time on-board computation using noisy sensor data. To address these challenges, we propose a resilient architecture that exploits redundancy and heterogeneity in sensing modalities. Further resilience is achieved by triggering recovery behaviors upon failure. We propose a fast settling algorithm to generate robust multi-fidelity traversability estimates in real-time. The proposed approach was deployed on multiple physical systems including skid-steer and tracked robots, a high-speed RC car and legged robots, as a part of Team CoSTAR's effort in the DARPA Subterranean Challenge, where the team won 2nd and 1st place in the Tunnel and Urban Circuits, respectively.

Autonomous Spot: Long-Range Autonomous Exploration of Extreme Environments with Legged Locomotion

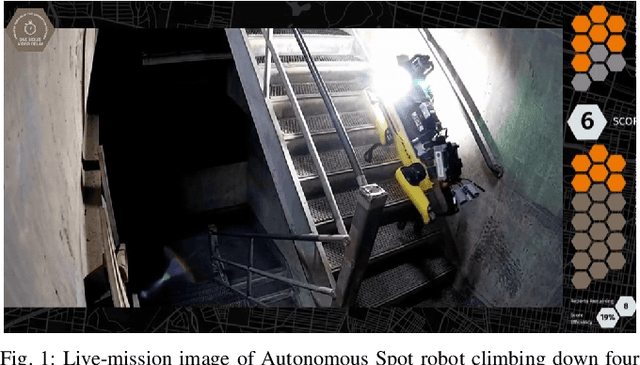

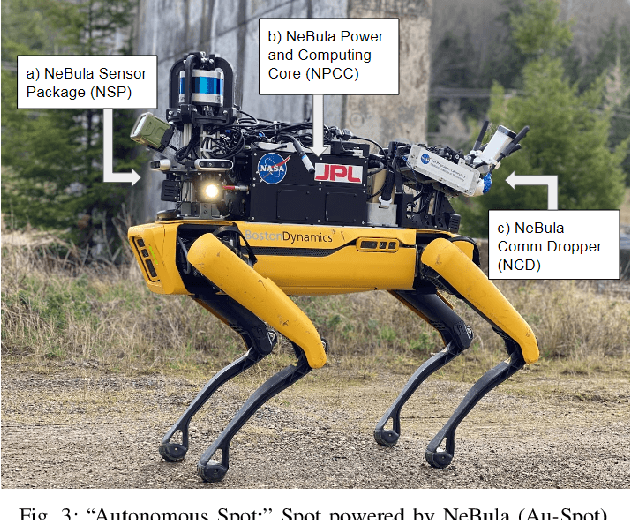

Nov 01, 2020

Abstract: This paper serves as one of the first efforts to enable large-scale and long-duration autonomy using the Boston Dynamics Spot robot. Motivated by exploring extreme environments, particularly those involved in the DARPA Subterranean Challenge, this paper pushes the boundaries of the state-of-practice in enabling legged robotic systems to accomplish real-world complex missions in relevant scenarios. In particular, we discuss the behaviors and capabilities which emerge from the integration of the autonomy architecture NeBula (Networked Belief-aware Perceptual Autonomy) with next-generation mobility systems. We discuss the hardware and software challenges and solutions in mobility, perception, autonomy, and, very briefly, wireless networking, as well as lessons learned and future directions. We demonstrate the performance of the proposed solutions on physical systems in real-world scenarios.

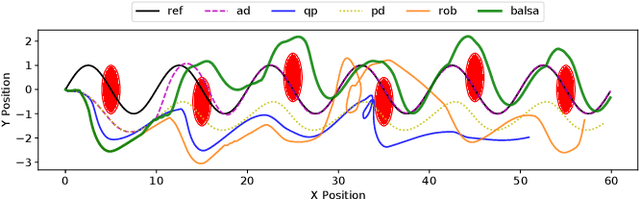

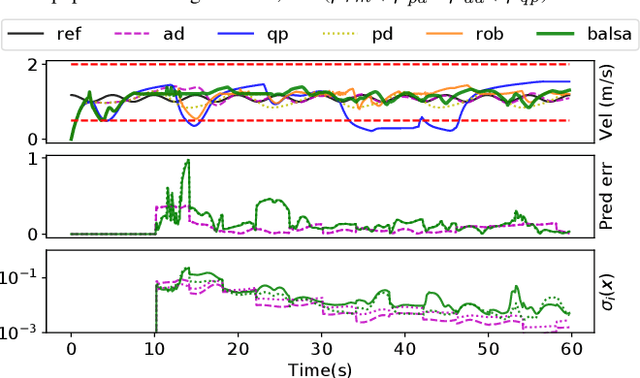

Bayesian Learning-Based Adaptive Control for Safety Critical Systems

Oct 05, 2019

Abstract: Deep learning has enjoyed much recent success, and applying state-of-the-art model learning methods to controls is an exciting prospect. However, there is a strong reluctance to use these methods on safety-critical systems, which have constraints on safety, stability, and real-time performance. We propose a framework which satisfies these constraints while allowing the use of deep neural networks for learning model uncertainties. Central to our method is the use of Bayesian model learning, which provides an avenue for maintaining appropriate degrees of caution in the face of the unknown. In the proposed approach, we develop an adaptive control framework leveraging the theory of stochastic CLFs (Control Lyapunov Functions) and stochastic CBFs (Control Barrier Functions) along with tractable Bayesian model learning via Gaussian Processes or Bayesian neural networks. Under reasonable assumptions, we guarantee stability and safety while adapting to unknown dynamics with probability 1. We demonstrate this architecture for high-speed terrestrial mobility targeting potential applications in safety-critical high-speed Mars rover missions.

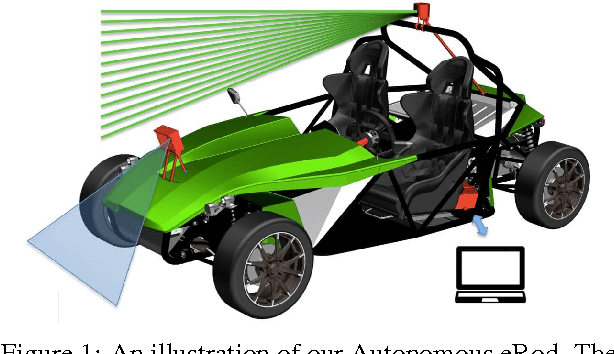

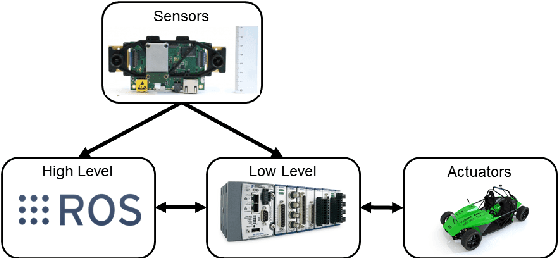

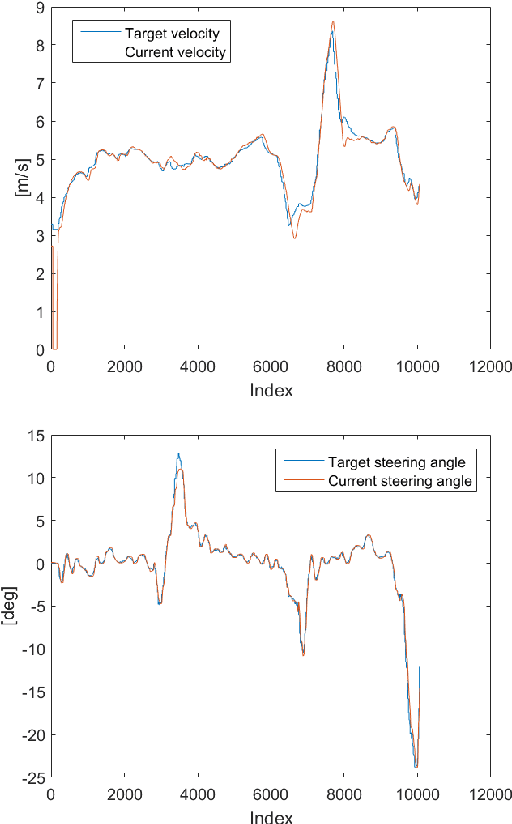

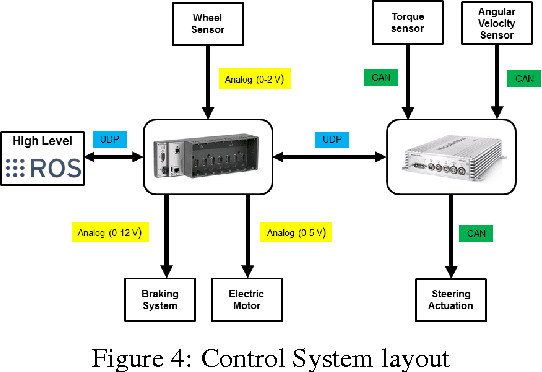

Autonomous Electric Race Car Design

Nov 01, 2017

Abstract: Autonomous driving and electric vehicles are currently very active research and development areas. In this paper, we present the conversion of a standard Kyburz eRod into an autonomous vehicle that can be operated in challenging environments such as Swiss mountain passes. The overall hardware and software architectures are described in detail with a special emphasis on the sensor requirements for autonomous vehicles operating in partially structured environments. Furthermore, the design process itself and the finalized system architecture are presented. The work shows state-of-the-art results in localization and controls for self-driving high-performance electric vehicles. Test results of the overall system are presented, which show the importance of generalizable state estimation algorithms to handle a plethora of conditions.
