Kenny Chen

An Addendum to NeBula: Towards Extending TEAM CoSTAR's Solution to Larger Scale Environments

Apr 18, 2025

Abstract:This paper presents an appendix to the original NeBula autonomy solution developed by TEAM CoSTAR (Collaborative SubTerranean Autonomous Robots), a participant in the DARPA Subterranean Challenge. Specifically, this paper presents extensions to NeBula's hardware, software, and algorithmic components that focus on increasing the range and scale of the exploration environment. From the algorithmic perspective, we discuss the following extensions to the original NeBula framework: (i) large-scale geometric and semantic environment mapping; (ii) an adaptive positioning system; (iii) probabilistic traversability analysis and local planning; (iv) large-scale POMDP-based global motion planning and exploration behavior; (v) large-scale networking and decentralized reasoning; (vi) communication-aware mission planning; and (vii) multi-modal ground-aerial exploration solutions. We demonstrate the application and deployment of the presented systems and solutions in various large-scale underground environments, including limestone mine exploration scenarios as well as deployment in the DARPA Subterranean Challenge.

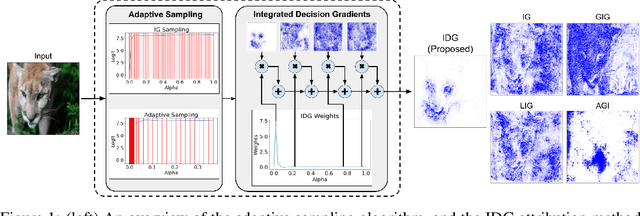

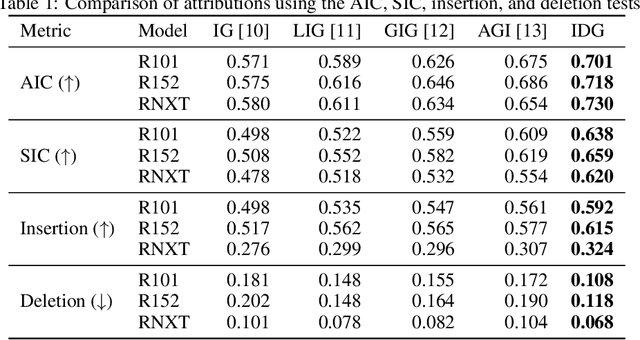

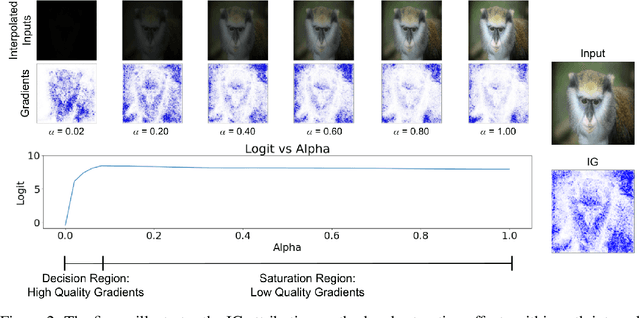

Integrated Decision Gradients: Compute Your Attributions Where the Model Makes Its Decision

May 31, 2023

Abstract:Attribution algorithms are frequently employed to explain the decisions of neural network models. Integrated Gradients (IG) is an influential attribution method due to its strong axiomatic foundation. The algorithm is based on integrating the gradients along a path from a reference image to the input image. Unfortunately, it can be observed that gradients computed from regions where the output logit changes minimally along the path provide poor explanations for the model decision, which is called the saturation effect problem. In this paper, we propose an attribution algorithm called integrated decision gradients (IDG). The algorithm focuses on integrating gradients from the region of the path where the model makes its decision, i.e., the portion of the path where the output logit rapidly transitions from zero to its final value. This is practically realized by scaling each gradient by the derivative of the output logit with respect to the path. The algorithm thereby provides a principled solution to the saturation problem. Additionally, we minimize the errors within the Riemann sum approximation of the path integral by utilizing non-uniform subdivisions determined by adaptive sampling. In the evaluation on ImageNet, it is demonstrated that IDG outperforms IG, left-IG, guided IG, and adversarial gradient integration both qualitatively and quantitatively using standard insertion and deletion metrics across three common models.
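
The path integral described in this abstract can be illustrated on a toy model. The sketch below is only an assumption-laden illustration, not the authors' implementation: `model` is a hypothetical sigmoid stand-in for an output logit, the path is a straight line from the baseline to the input, the subdivisions are uniform (the adaptive sampling mentioned in the abstract is not reproduced), and `decision_weighted_gradients` is one plausible reading of "scaling each gradient by the derivative of the output logit with respect to the path".

```python
import math

def model(x, w):
    """Hypothetical toy 'logit': a sigmoid of a weighted sum."""
    z = sum(wi * xi for wi, xi in zip(w, x))
    return 1.0 / (1.0 + math.exp(-z))

def grad(x, w):
    """Analytic gradient of the toy model with respect to the input x."""
    s = model(x, w)
    return [s * (1.0 - s) * wi for wi in w]

def path_point(x, baseline, alpha):
    """Point on the straight-line path from baseline (alpha=0) to x (alpha=1)."""
    return [b + alpha * (xi - b) for xi, b in zip(x, baseline)]

def integrated_gradients(x, baseline, w, steps=200):
    """Midpoint Riemann-sum approximation of Integrated Gradients."""
    attr = [0.0] * len(x)
    for k in range(steps):
        g = grad(path_point(x, baseline, (k + 0.5) / steps), w)
        for i, gi in enumerate(g):
            attr[i] += gi * (x[i] - baseline[i]) / steps
    return attr

def decision_weighted_gradients(x, baseline, w, steps=200):
    """IDG-style variant (assumed form): weight each step by d(output)/d(alpha)."""
    attr = [0.0] * len(x)
    h = 1.0 / steps
    for k in range(steps):
        # Finite-difference slope of the output along this segment of the path.
        f_lo = model(path_point(x, baseline, k * h), w)
        f_hi = model(path_point(x, baseline, (k + 1) * h), w)
        slope = (f_hi - f_lo) / h
        g = grad(path_point(x, baseline, (k + 0.5) * h), w)
        for i, gi in enumerate(g):
            attr[i] += gi * (x[i] - baseline[i]) * slope * h
    return attr
```

With plain IG, the attributions sum to the change in model output between the baseline and the input (the completeness axiom), which gives a quick sanity check for the Riemann sum. The weighted variant gives up that exact sum in exchange for emphasizing the segments of the path where the output is changing.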

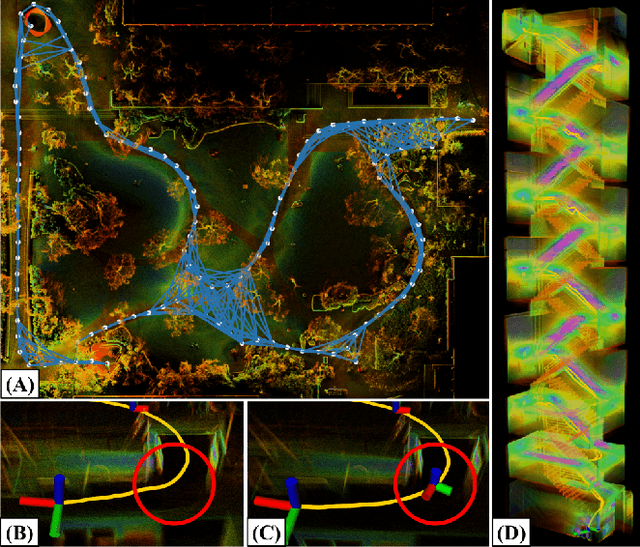

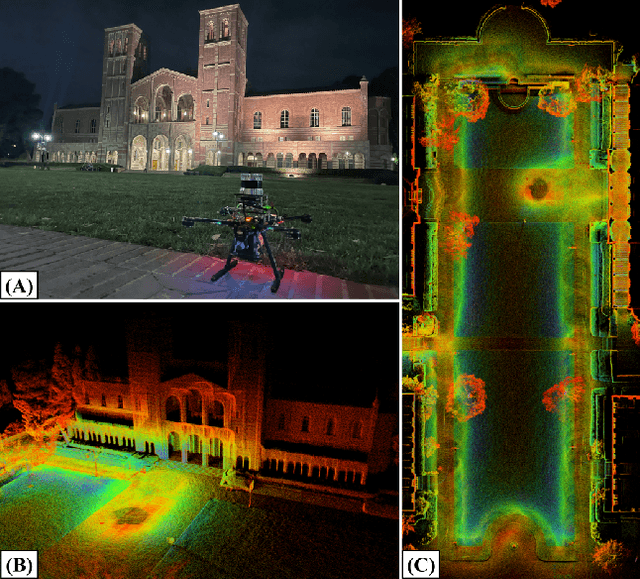

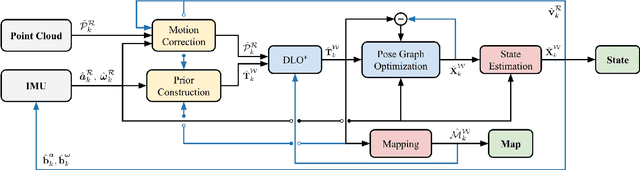

Direct LiDAR-Inertial Odometry and Mapping: Perceptive and Connective SLAM

May 03, 2023

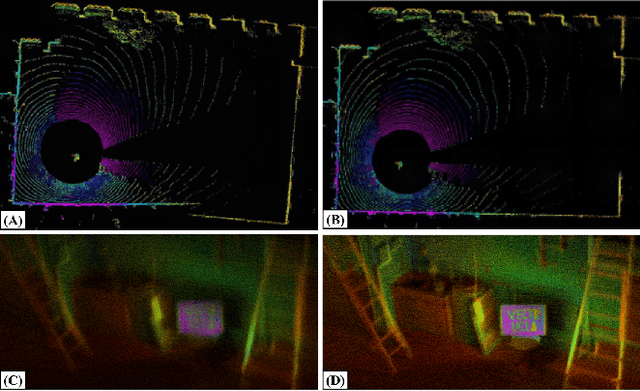

Abstract:This paper presents Direct LiDAR-Inertial Odometry and Mapping (DLIOM), a robust SLAM algorithm with an explicit focus on computational efficiency, operational reliability, and real-world efficacy. DLIOM contains several key algorithmic innovations in both the front-end and back-end subsystems to design a resilient LiDAR-inertial architecture that is perceptive to the environment and produces accurate localization and high-fidelity 3D mapping for autonomous robotic platforms. Our ideas emerged from a deep investigation into modern LiDAR SLAM systems and their inability to generalize across different operating environments; we address several common algorithmic failure points by means of proactive safeguards to provide long-term operational reliability in the unstructured real world. We detail several important innovations to localization accuracy and mapping resiliency distributed throughout a typical LiDAR SLAM pipeline to comprehensively increase algorithmic speed, accuracy, and robustness. In addition, we discuss insights gained from our ground-up approach while implementing such a complex system for real-time state estimation on resource-constrained systems, and we experimentally show the increased performance of our method as compared to the current state-of-the-art on both public benchmark and self-collected datasets.

Joint On-Manifold Gravity and Accelerometer Intrinsics Estimation

Mar 06, 2023

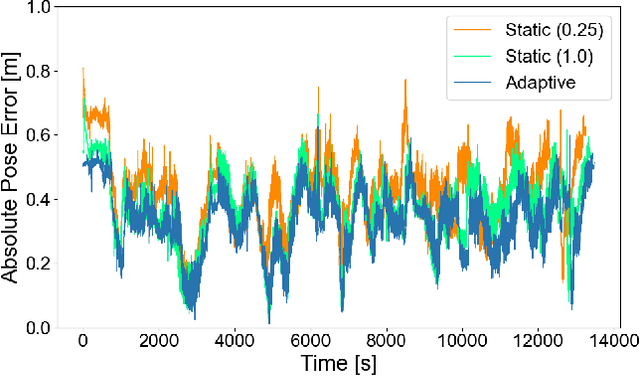

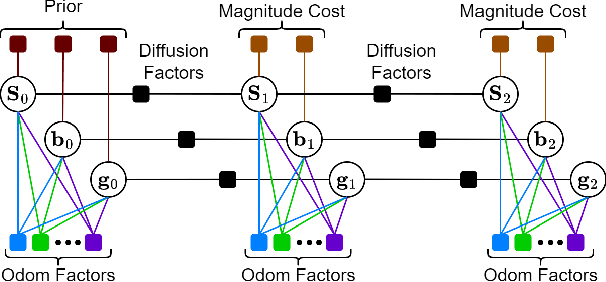

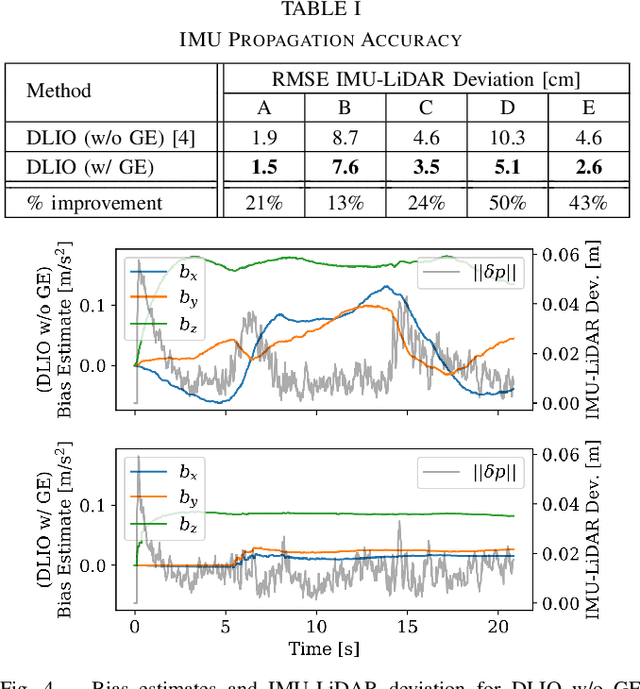

Abstract:Aligning a robot's trajectory or map to the inertial frame is a critical capability that is often difficult to do accurately even though inertial measurement units (IMUs) can observe absolute roll and pitch with respect to gravity. Accelerometer biases and scale factor errors from the IMU's initial calibration are often the major source of inaccuracies when aligning the robot's odometry frame with the inertial frame, especially for low-grade IMUs. Practically, one would simultaneously estimate the true gravity vector, accelerometer biases, and scale factor to improve measurement quality, but these quantities are not observable unless the IMU is sufficiently excited. While several methods estimate accelerometer bias and gravity, they do not explicitly address the observability issue nor do they estimate scale factor. We present a fixed-lag factor-graph-based estimator to address both of these issues. In addition to estimating accelerometer scale factor, our method mitigates limited observability by optimizing over a time window an order of magnitude larger than existing methods with significantly lower computational burden. The proposed method, which estimates accelerometer intrinsics and gravity separately from the other states, is enabled by a novel, velocity-agnostic measurement model for intrinsics and gravity, as well as a new method for gravity vector optimization on S². Accurate IMU state prediction, gravity-alignment, and roll/pitch drift correction are experimentally demonstrated on public and self-collected datasets in diverse environments.
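
To make the idea of optimizing a direction on the unit sphere concrete, the sketch below shows the standard exponential-map update, which moves a unit vector along a tangent step while keeping it on the sphere. This is a generic textbook construction and is not claimed to be the gravity optimization method proposed in the paper; the function name `sphere_exp` and the example values are hypothetical.

```python
import math

def sphere_exp(p, v):
    """Exponential map on the unit sphere: step from unit vector p along v.

    v is first projected onto the tangent plane at p, so any radial
    component of the requested step is discarded.
    """
    dot = sum(pi * vi for pi, vi in zip(p, v))
    t = [vi - dot * pi for pi, vi in zip(p, v)]
    norm_t = math.sqrt(sum(ti * ti for ti in t))
    if norm_t < 1e-12:
        return list(p)  # zero tangent step: stay where we are
    c, s = math.cos(norm_t), math.sin(norm_t)
    return [c * pi + s * ti / norm_t for pi, ti in zip(p, t)]

# Example: nudge a nominal "down" direction by a small tangent correction.
g_dir = sphere_exp([0.0, 0.0, -1.0], [0.02, -0.01, 0.3])
```

Because the result always has unit norm, an optimizer can work with a two-degree-of-freedom tangent correction instead of an unconstrained 3-vector that would need renormalizing after every step.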

Adaptive Coverage Path Planning for Efficient Exploration of Unknown Environments

Feb 06, 2023

Abstract:We present a method for solving the coverage problem with the objective of autonomously exploring an unknown environment under mission time constraints. Here, the robot is tasked with planning a path over a horizon such that the accumulated area swept out by its sensor footprint is maximized. Because this problem exhibits a diminishing returns property known as submodularity, we choose to formulate it as a tree-based sequential decision making process. This formulation allows us to evaluate the effects of the robot's actions on future world coverage states, while simultaneously accounting for traversability risk and the dynamic constraints of the robot. To quickly find near-optimal solutions, we propose an effective approximation to the coverage sensor model which adapts to the local environment. Our method was extensively tested across various complex environments and served as the local exploration algorithm for a competing entry in the DARPA Subterranean Challenge.
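
The diminishing-returns (submodular) property mentioned in this abstract can be seen in a small set-based example. The sketch below is a simplification under stated assumptions: sensor footprints are hypothetical sets of grid-cell IDs, and a plain greedy selection is swapped in for the tree-based sequential decision process the paper describes, with traversability risk and robot dynamics left out.

```python
def marginal_gain(covered, footprint):
    """Number of new cells a footprint adds to an already covered set."""
    return len(footprint - covered)

def greedy_coverage(footprints, budget):
    """Pick up to `budget` footprints, always taking the largest marginal gain."""
    covered, chosen = set(), []
    for _ in range(budget):
        best = max(
            (name for name in footprints if name not in chosen),
            key=lambda n: marginal_gain(covered, footprints[n]),
            default=None,
        )
        # Stop early once no remaining footprint adds any new cell.
        if best is None or marginal_gain(covered, footprints[best]) == 0:
            break
        chosen.append(best)
        covered |= footprints[best]
    return chosen, covered

# Hypothetical footprints: candidate viewpoint name -> cells its sensor would sweep.
footprints = {"a": {1, 2, 3, 4}, "b": {3, 4, 5}, "c": {5, 6}, "d": {1, 2}}
chosen, covered = greedy_coverage(footprints, budget=2)
```

Here footprint `b` is worth three cells on an empty map but only one cell after `a` has been visited, which is the diminishing-returns behavior that motivates evaluating actions against future coverage states.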

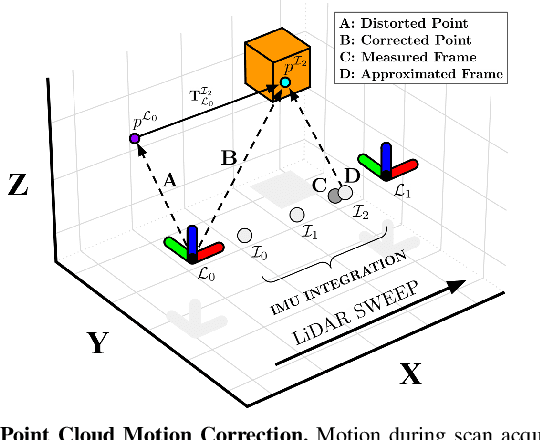

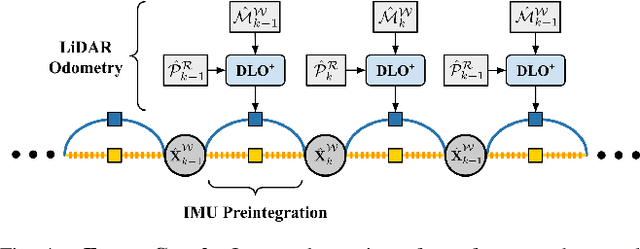

Direct LiDAR-Inertial Odometry

Mar 18, 2022

Abstract:This paper proposes a new LiDAR-inertial odometry framework that generates accurate state estimates and detailed maps in real-time on resource-constrained mobile robots. Our Direct LiDAR-Inertial Odometry (DLIO) algorithm utilizes a hybrid architecture that combines the benefits of loosely-coupled and tightly-coupled IMU integration to enhance reliability and real-time performance while improving accuracy. The proposed architecture has two key elements. The first is a fast keyframe-based LiDAR scan-matcher that builds an internal map by registering dense point clouds to a local submap with a translational and rotational prior generated by a nonlinear motion model. The second is a factor graph and high-rate propagator that fuses the output of the scan-matcher with preintegrated IMU measurements for up-to-date pose, velocity, and bias estimates. These estimates enable us to accurately deskew the next point cloud using a nonlinear kinematic model for precise motion correction, in addition to initializing the next scan-to-map optimization prior. We demonstrate DLIO's superior localization accuracy, map quality, and lower computational overhead by comparing it to the state-of-the-art using multiple benchmark, public, and self-collected datasets on both consumer and hobby-grade hardware.

Direct LiDAR Odometry: Fast Localization with Dense Point Clouds

Oct 01, 2021

Abstract:This paper presents a light-weight frontend LiDAR odometry solution with consistent and accurate localization for computationally-limited robotic platforms. Our Direct LiDAR Odometry (DLO) method includes several key algorithmic innovations which prioritize computational efficiency and enable the use of full, minimally-preprocessed point clouds to provide accurate pose estimates in real-time. This work also presents several important algorithmic insights and design choices from developing on platforms with shared or otherwise limited computational resources, including a custom iterative closest point solver for fast point cloud registration with data structure recycling. Our method is more accurate with lower computational overhead than the current state-of-the-art and has been extensively evaluated in several perceptually-challenging environments on aerial and legged robots as part of NASA JPL Team CoSTAR's research and development efforts for the DARPA Subterranean Challenge.

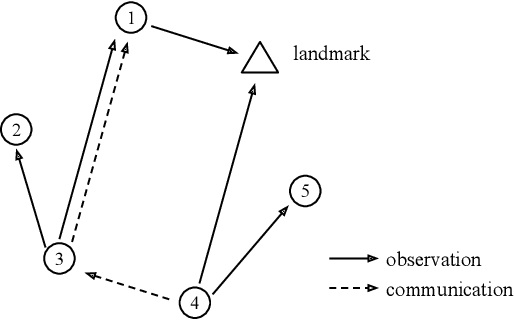

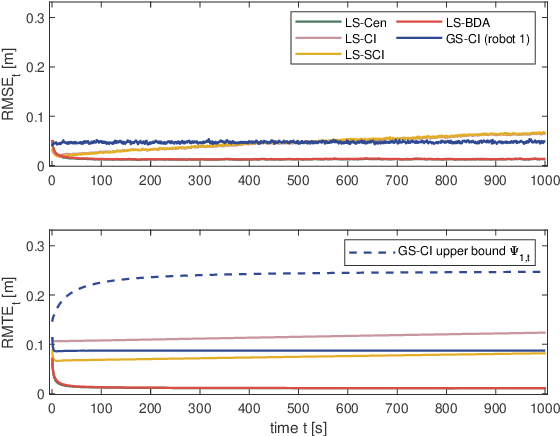

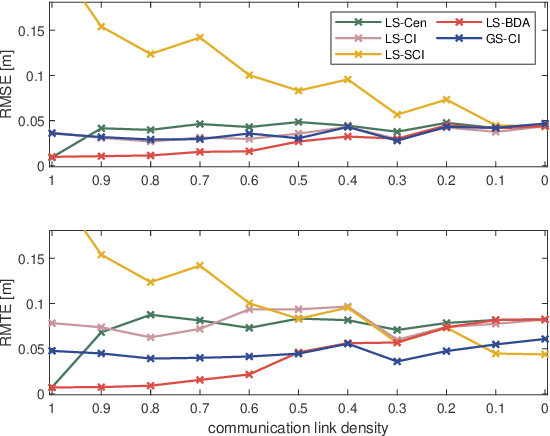

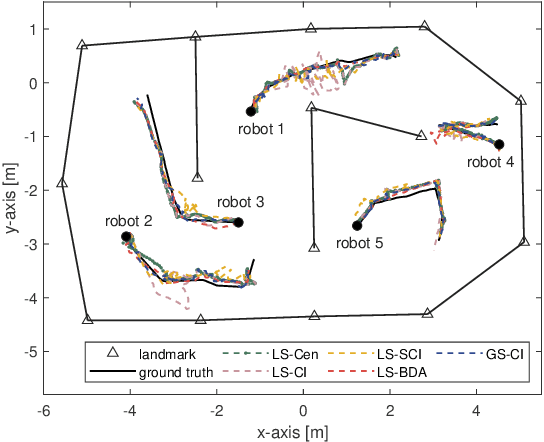

Resilient and consistent multirobot cooperative localization with covariance intersection

Aug 19, 2021

Abstract:Cooperative localization is fundamental to autonomous multirobot systems, but most algorithms couple inter-robot communication with observation, making these algorithms susceptible to failures in both communication and observation steps. To enhance the resilience of multirobot cooperative localization algorithms in a distributed system, we use covariance intersection to formalize a localization algorithm with an explicit communication update and ensure estimation consistency at the same time. We investigate the covariance boundedness criterion of our algorithm with respect to communication and observation graphs, demonstrating provable localization performance under even sparse communications topologies. We substantiate the resilience of our algorithm as well as the boundedness analysis through experiments on simulated and benchmark physical data against varying communications connectivity and failure metrics. Especially when inter-robot communication is entirely blocked or partially unavailable, we demonstrate that our method is less affected and maintains desired performance compared to existing cooperative localization algorithms.
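
Covariance intersection itself has a compact standard form: the fused information matrix is a convex combination of the two input information matrices, which stays consistent even when the cross-correlation between the estimates is unknown. The sketch below shows only that fusion rule for 2D estimates; it is not the paper's full localization algorithm, the weight `omega` is supplied by hand rather than optimized, and the helper names are hypothetical.

```python
def inv2(m):
    """Inverse of a 2x2 matrix given as nested lists."""
    (a, b), (c, d) = m
    det = a * d - b * c
    return [[d / det, -b / det], [-c / det, a / det]]

def covariance_intersection(xa, Pa, xb, Pb, omega):
    """Fuse two 2D estimates (mean, covariance) with weight omega in [0, 1]."""
    Ia, Ib = inv2(Pa), inv2(Pb)
    # Fused information matrix: convex combination of the two inputs.
    info = [[omega * Ia[i][j] + (1 - omega) * Ib[i][j] for j in range(2)]
            for i in range(2)]
    P = inv2(info)
    # Information-weighted sum of the means, mapped back through P.
    y = [sum(omega * Ia[i][j] * xa[j] + (1 - omega) * Ib[i][j] * xb[j]
             for j in range(2)) for i in range(2)]
    x = [sum(P[i][j] * y[j] for j in range(2)) for i in range(2)]
    return x, P

# Two estimates that are each confident along a different axis.
x, P = covariance_intersection(
    [0.0, 0.0], [[1.0, 0.0], [0.0, 4.0]],
    [2.0, 2.0], [[4.0, 0.0], [0.0, 1.0]],
    omega=0.5,
)
```

In practice `omega` is usually chosen to minimize the trace or determinant of the fused covariance; fixing it at 0.5 here just keeps the example short.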

Unsupervised Monocular Depth Learning with Integrated Intrinsics and Spatio-Temporal Constraints

Nov 02, 2020

Abstract:Monocular depth inference has gained tremendous attention from researchers in recent years and remains a promising replacement for expensive time-of-flight sensors, but issues with scale acquisition and implementation overhead still plague these systems. To this end, this work presents an unsupervised learning framework that is able to predict at-scale depth maps and egomotion, in addition to camera intrinsics, from a sequence of monocular images via a single network. Our method incorporates both spatial and temporal geometric constraints to resolve depth and pose scale factors, which are enforced within the supervisory reconstruction loss functions at training time. Only unlabeled stereo sequences are required for training the weights of our single-network architecture, which reduces overall implementation overhead as compared to previous methods. Our results demonstrate strong performance when compared to the current state-of-the-art on multiple sequences of the KITTI driving dataset.

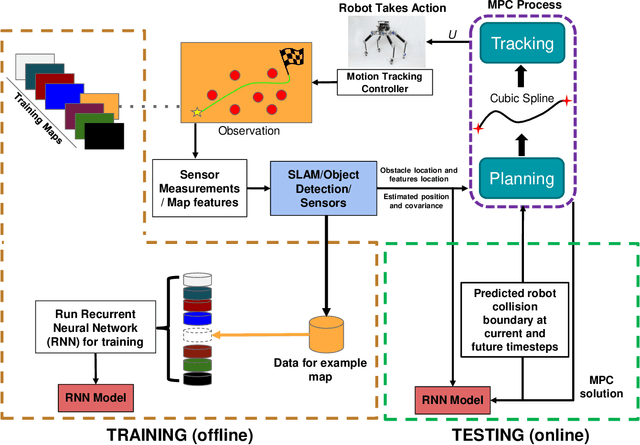

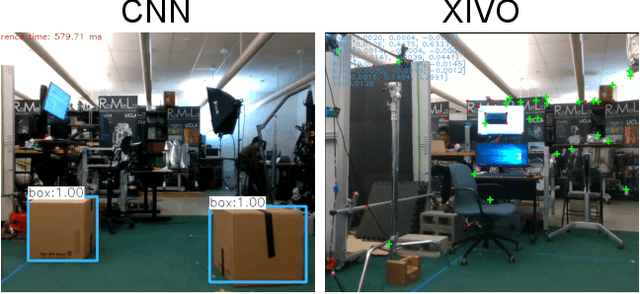

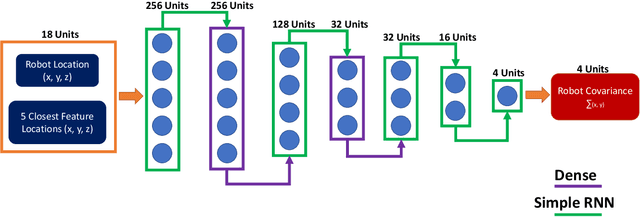

Risk-Averse MPC via Visual-Inertial Input and Recurrent Networks for Online Collision Avoidance

Jul 28, 2020

Abstract:In this paper, we propose an online path planning architecture that extends the model predictive control (MPC) formulation to consider future location uncertainties for safer navigation through cluttered environments. Our algorithm combines an object detection pipeline with a recurrent neural network (RNN) which infers the covariance of state estimates through each step of our MPC's finite time horizon. The RNN model is trained on a dataset that consists of robot and landmark poses generated from camera images and inertial measurement unit (IMU) readings via a state-of-the-art visual-inertial odometry framework. To detect and extract object locations for avoidance, we use a custom-trained convolutional neural network model in conjunction with a feature extractor to retrieve 3D centroid and radii boundaries of nearby obstacles. The robustness of our methods is validated on complex quadruped robot dynamics and can be generally applied to most robotic platforms, demonstrating autonomous behaviors that can plan fast and collision-free paths towards a goal point.
