Stephanie Tsuei

Quantifying VIO Uncertainty

Mar 29, 2023

Abstract: We compute the uncertainty of XIVO, a monocular visual-inertial odometry system based on the Extended Kalman Filter, in the presence of Gaussian noise, drift, and attribution errors in the feature tracks in addition to Gaussian noise and drift in the IMU. Uncertainty is computed using Monte-Carlo simulations of a sufficiently exciting trajectory in the midst of a point cloud that bypass the typical image processing and feature tracking steps. We find that attribution errors have the largest detrimental effect on performance. Even with just small amounts of Gaussian noise and/or drift, however, the probability that XIVO's performance resembles the mean performance when noise and/or drift is artificially high is greater than 1 in 100.

Feature Tracks are not Zero-Mean Gaussian

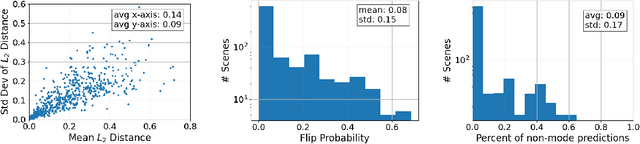

Mar 25, 2023

Abstract: In state estimation algorithms that use feature tracks as input, it is customary to assume that the errors in feature track positions are zero-mean Gaussian. Using a combination of calibrated camera intrinsics, ground-truth camera pose, and depth images, it is possible to compute ground-truth positions for feature tracks extracted using an image processing algorithm. We find that feature track errors are not zero-mean Gaussian and that the distribution of errors is conditional on the type of motion, the speed of motion, and the image processing algorithm used to extract the tracks.
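The ground-truth computation mentioned in this abstract can be illustrated with a standard pinhole camera model. The sketch below is not taken from the paper: the function names, the `(fx, fy, cx, cy)` intrinsics tuple, and the pose convention (points map from the first camera frame to the second as `p2 = R p1 + t`) are assumptions made for illustration.

```python
def reproject_pixel(u, v, depth, intrinsics, rotation, translation):
    """Predict where a pixel seen in frame 1 should appear in frame 2.

    intrinsics: (fx, fy, cx, cy) of an ideal pinhole camera (assumed).
    rotation, translation: relative pose such that p2 = R @ p1 + t (assumed).
    """
    fx, fy, cx, cy = intrinsics
    # Back-project the pixel to a 3D point in the frame-1 camera coordinates.
    p1 = ((u - cx) * depth / fx, (v - cy) * depth / fy, depth)
    # Move the point into the frame-2 camera coordinates.
    p2 = [
        sum(rotation[i][j] * p1[j] for j in range(3)) + translation[i]
        for i in range(3)
    ]
    # Project back onto the image plane of frame 2.
    return (fx * p2[0] / p2[2] + cx, fy * p2[1] / p2[2] + cy)


def track_error(tracked, predicted):
    """Pixel error of a tracker output relative to the predicted position."""
    return (tracked[0] - predicted[0], tracked[1] - predicted[1])
```

Collecting `track_error` over many features and frames would give an empirical error distribution that can be compared against the zero-mean Gaussian assumption.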

Learned Uncertainty Calibration for Visual Inertial Localization

Oct 05, 2021

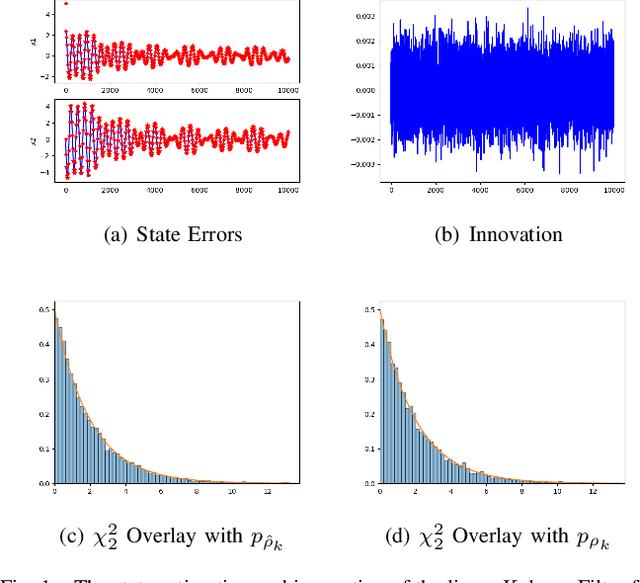

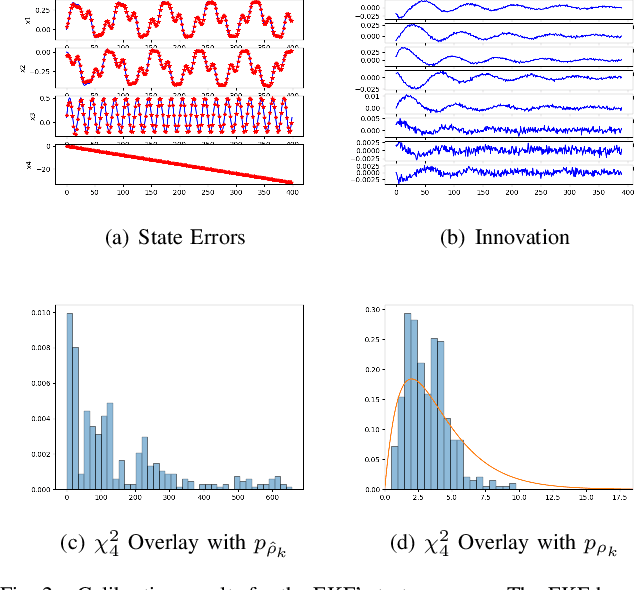

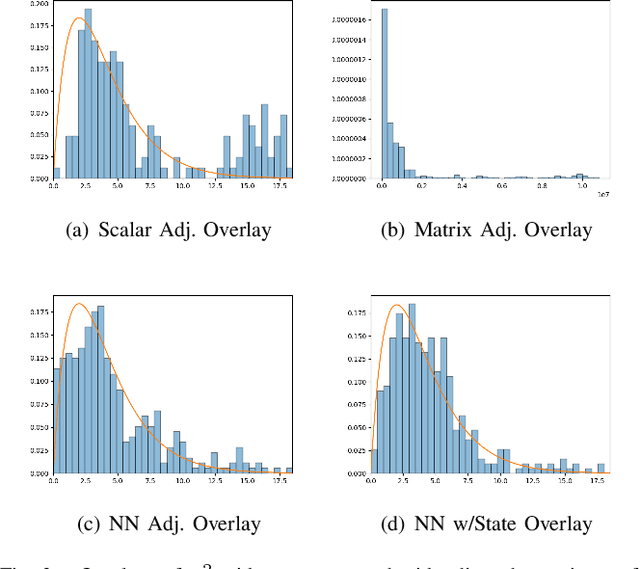

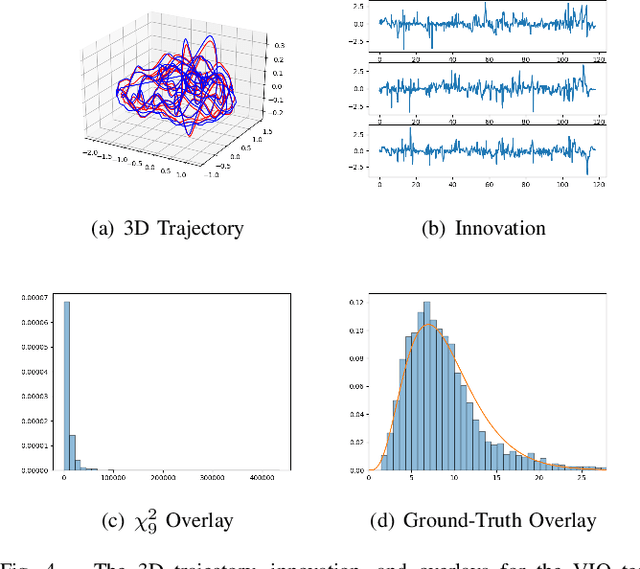

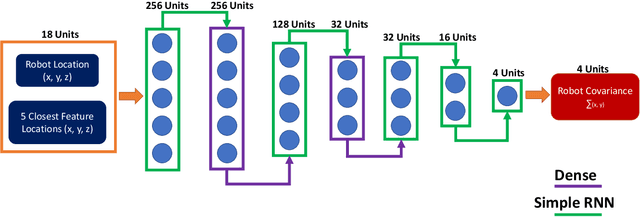

Abstract: The widely used Extended Kalman Filter (EKF) provides a straightforward recipe to estimate the mean and covariance of the state given all past measurements in a causal and recursive fashion. For a wide variety of applications, the EKF is known to produce accurate estimates of the mean and typically inaccurate estimates of the covariance. For applications in visual inertial localization, we show that inaccuracies in the covariance estimates are *systematic*, i.e., it is possible to learn a nonlinear map from the empirical ground truth to the estimated one. This is demonstrated on both a standard EKF in simulation and a Visual Inertial Odometry system on real-world data.
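One common way to see a filter report an inaccurate covariance is to compare its reported variance with the empirical squared error over many simulated runs. The sketch below is an illustrative assumption, not the paper's experiment: it uses a linear scalar Kalman filter on a random walk (chosen for brevity instead of an EKF) and the normalized estimation error squared (NEES), which averages to about 1 when the reported variance matches the true error.

```python
import random


def mean_nees(q_true, q_filter, r=1.0, steps=50, trials=2000, seed=0):
    """Average NEES of a scalar Kalman filter tracking a random walk.

    q_true: process noise variance used to simulate the state.
    q_filter: process noise variance the filter believes (may be wrong).
    r: measurement noise variance, known to the filter.
    """
    rng = random.Random(seed)
    total = 0.0
    for _ in range(trials):
        p = 1.0                        # filter's initial variance
        x = rng.gauss(0.0, p ** 0.5)   # true state drawn from that prior
        x_hat = 0.0                    # filter's initial mean
        for _ in range(steps):
            # Simulate the true state and a noisy measurement of it.
            x += rng.gauss(0.0, q_true ** 0.5)
            z = x + rng.gauss(0.0, r ** 0.5)
            # Predict (the random walk leaves the mean unchanged), then update.
            p_pred = p + q_filter
            k = p_pred / (p_pred + r)
            x_hat += k * (z - x_hat)
            p = (1.0 - k) * p_pred
        # Squared error normalized by the variance the filter reports.
        total += (x - x_hat) ** 2 / p
    return total / trials
```

Calling `mean_nees(1.0, 1.0)` uses a correctly tuned filter, while `mean_nees(1.0, 0.1)` underestimates the process noise and yields a mean NEES well above 1, meaning the reported variance is too small.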

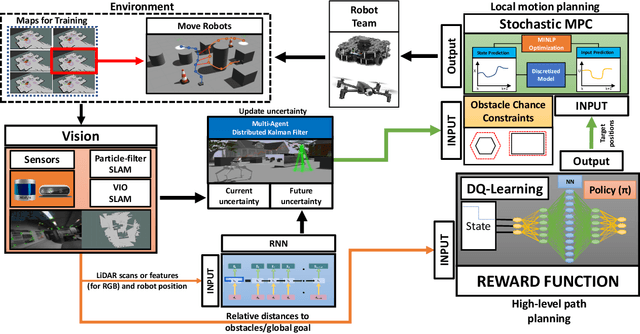

SABER: Data-Driven Motion Planner for Autonomously Navigating Heterogeneous Robots

Aug 03, 2021

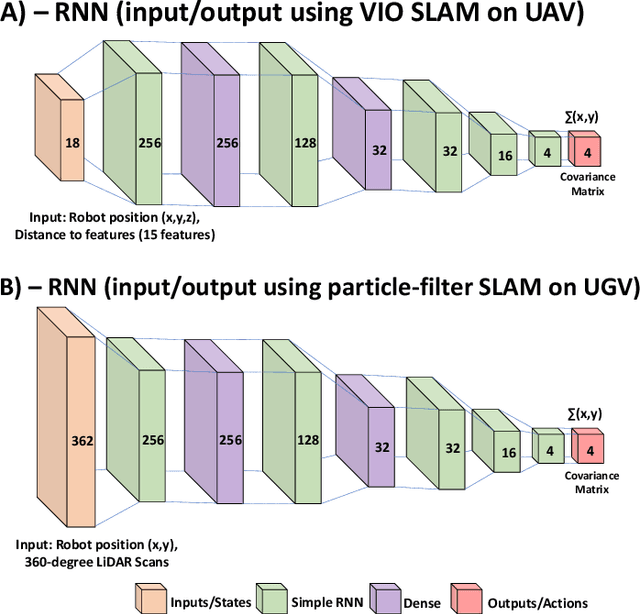

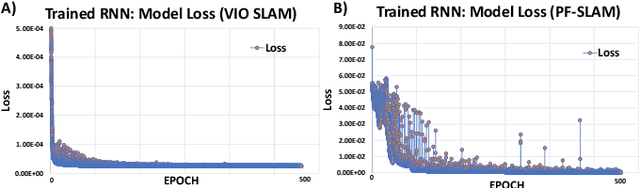

Abstract: We present an end-to-end online motion planning framework that uses a data-driven approach to navigate a heterogeneous robot team towards a global goal while avoiding obstacles in uncertain environments. First, we use stochastic model predictive control (SMPC) to calculate control inputs that satisfy robot dynamics, and consider uncertainty during obstacle avoidance with chance constraints. Second, recurrent neural networks, trained on the uncertainty outputs of various simultaneous localization and mapping algorithms, provide a quick estimate of the future state uncertainty considered in the SMPC finite-time horizon solution. When two or more robots are in communication range, these uncertainties are then updated using a distributed Kalman filtering approach. Lastly, a Deep Q-learning agent is employed to serve as a high-level path planner, providing the SMPC with target positions that move the robots towards a desired global goal. Our complete methods are demonstrated on a ground and aerial robot simultaneously (code available at: https://github.com/AlexS28/SABER).
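The abstract does not give the exact form of SABER's chance constraints, so the following is only a generic textbook illustration. For a scalar Gaussian quantity with mean `mean` and standard deviation `std`, requiring it to stay below `bound` with probability at least `1 - eps` is equivalent to the deterministic check `mean + z * std <= bound`, where `z` is the standard normal quantile at `1 - eps`. The function name and arguments are hypothetical.

```python
from statistics import NormalDist


def satisfies_chance_constraint(mean, std, bound, eps):
    """Check P(x <= bound) >= 1 - eps for x ~ N(mean, std**2)."""
    # Quantile of the standard normal at the required confidence level.
    z = NormalDist().inv_cdf(1.0 - eps)
    # Tighten the constraint by z standard deviations.
    return mean + z * std <= bound
```

A larger predicted standard deviation tightens the constraint, which is how an uncertainty estimate can make a planner more conservative.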

Scene Uncertainty and the Wellington Posterior of Deterministic Image Classifiers

Jun 25, 2021

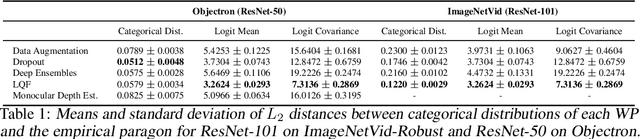

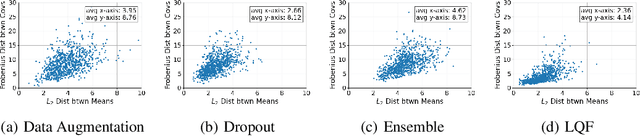

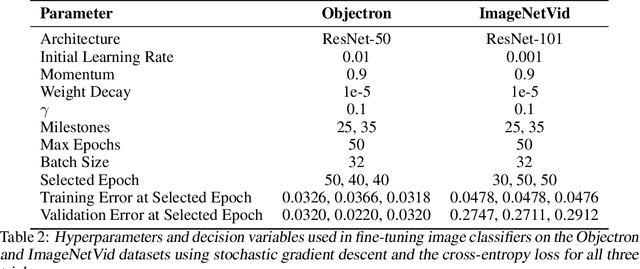

Abstract: We propose a method to estimate the uncertainty of the outcome of an image classifier on a given input datum. Deep neural networks commonly used for image classification are deterministic maps from an input image to an output class. As such, their outcome on a given datum involves no uncertainty, so we must specify what variability we are referring to when defining, measuring and interpreting "confidence." To this end, we introduce the Wellington Posterior, which is the distribution of outcomes that would have been obtained in response to data that could have been generated by the same scene that produced the given image. Since there are infinitely many scenes that could have generated the given image, the Wellington Posterior requires induction from scenes other than the one portrayed. We explore alternate methods using data augmentation, ensembling, and model linearization. Additional alternatives include generative adversarial networks, conditional prior networks, and supervised single-view reconstruction. We test these alternatives against the empirical posterior obtained by inferring the class of temporally adjacent frames in a video. These developments are only a small step towards assessing the reliability of deep network classifiers in a manner that is compatible with safety-critical applications.
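As a toy illustration of the data-augmentation idea, one can run a deterministic classifier on several perturbed copies of an image and tabulate the resulting class frequencies. Everything in this sketch is an assumption made for illustration: the threshold classifier, the brightness-shift augmentation, and the function names are not from the paper.

```python
from collections import Counter


def classify(image):
    """Toy deterministic classifier: label by mean pixel intensity."""
    return "bright" if sum(image) / len(image) > 0.5 else "dark"


def shift_brightness(image, offset):
    """Toy augmentation: add an offset to each pixel, clamped to [0, 1]."""
    return [min(1.0, max(0.0, pixel + offset)) for pixel in image]


def empirical_class_distribution(image, offsets):
    """Fraction of augmented copies of the image assigned to each class."""
    counts = Counter(classify(shift_brightness(image, o)) for o in offsets)
    return {label: n / len(offsets) for label, n in counts.items()}
```

For the image `[0.4, 0.5, 0.4, 0.5]` and offsets `[-0.2, -0.1, 0.0, 0.1, 0.2]`, three of the five copies are labeled "dark" and two "bright", so the classifier's single deterministic answer hides a split outcome under small perturbations.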

Risk-Averse MPC via Visual-Inertial Input and Recurrent Networks for Online Collision Avoidance

Jul 28, 2020

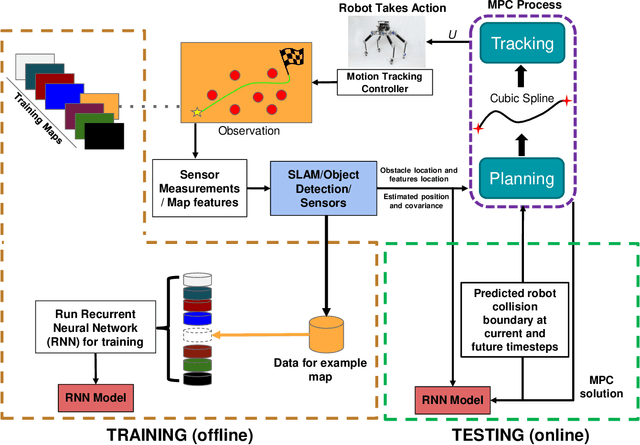

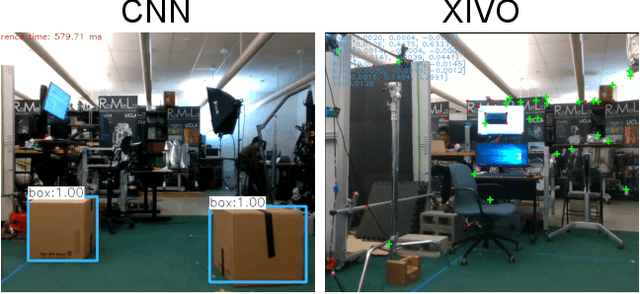

Abstract: In this paper, we propose an online path planning architecture that extends the model predictive control (MPC) formulation to consider future location uncertainties for safer navigation through cluttered environments. Our algorithm combines an object detection pipeline with a recurrent neural network (RNN) which infers the covariance of state estimates through each step of our MPC's finite time horizon. The RNN model is trained on a dataset that consists of robot and landmark poses generated from camera images and inertial measurement unit (IMU) readings via a state-of-the-art visual-inertial odometry framework. To detect and extract object locations for avoidance, we use a custom-trained convolutional neural network model in conjunction with a feature extractor to retrieve 3D centroid and radii boundaries of nearby obstacles. The robustness of our methods is validated on complex quadruped robot dynamics and can be generally applied to most robotic platforms, demonstrating autonomous behaviors that can plan fast and collision-free paths towards a goal point.
