SEEK: Semantic Reasoning for Object Goal Navigation in Real World Inspection Tasks


May 16, 2024

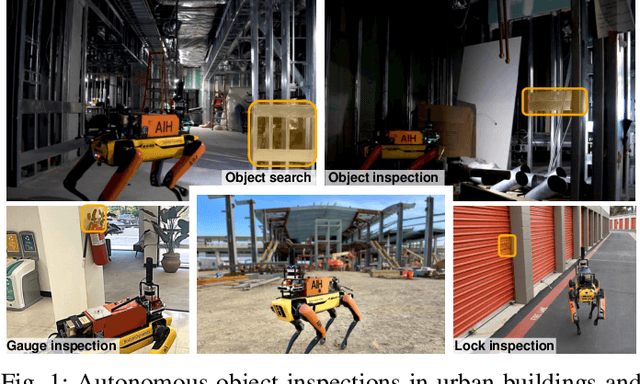

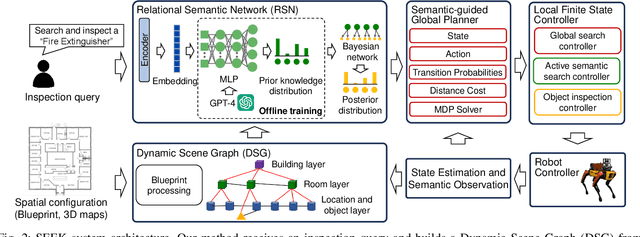

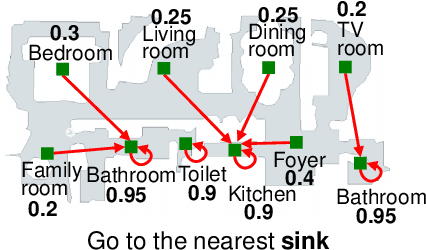

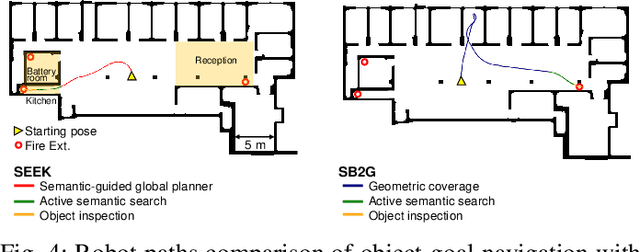

This paper addresses the problem of object-goal navigation for autonomous inspection in real-world environments. Object-goal navigation is crucial for effective inspection in many settings and often requires the robot to identify the target object within a large search space. Current object inspection methods fall short of human efficiency because they typically cannot draw on prior and common-sense knowledge as humans do. In this paper, we introduce a framework that enables robots to use semantic knowledge from prior spatial configurations of the environment together with semantic common-sense knowledge. We propose SEEK (Semantic Reasoning for Object Inspection Tasks), which combines semantic prior knowledge with the robot's observations to search for and navigate toward target objects more efficiently. SEEK maintains two representations: a Dynamic Scene Graph (DSG) and a Relational Semantic Network (RSN). The RSN is a compact and practical model that estimates the probability of finding the target object across spatial elements in the DSG. We propose a novel probabilistic planning framework that searches for the object using relational semantic knowledge. Our simulation analyses demonstrate that SEEK outperforms the classical planning and Large Language Model (LLM)-based methods examined in this study in terms of efficiency on object-goal inspection tasks. We validated our approach on a physical legged robot in urban environments, showcasing its practicality and effectiveness in real-world inspection scenarios.
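To make the idea of searching a scene graph with per-location probabilities more concrete, here is a minimal Python sketch. It is not the SEEK planner and does not reproduce the RSN: the room graph, the hand-written `belief` values (standing in for RSN-style estimates), the greedy "probability per unit travel cost" rule, and the perfect-detector update are all assumptions chosen for illustration.

```python
import heapq


def travel_costs(graph, start):
    """Dijkstra: cheapest travel cost from `start` to every reachable room."""
    costs = {start: 0.0}
    queue = [(0.0, start)]
    while queue:
        cost, node = heapq.heappop(queue)
        if cost > costs.get(node, float("inf")):
            continue  # stale queue entry
        for nxt, weight in graph[node].items():
            new_cost = cost + weight
            if new_cost < costs.get(nxt, float("inf")):
                costs[nxt] = new_cost
                heapq.heappush(queue, (new_cost, nxt))
    return costs


def update_after_miss(belief, searched):
    """Assumed perfect detector: a searched room without the target drops to 0,
    and the remaining probability mass is renormalized."""
    remaining = {r: p for r, p in belief.items() if r != searched}
    total = sum(remaining.values())
    updated = {r: (p / total if total > 0 else 0.0) for r, p in remaining.items()}
    updated[searched] = 0.0
    return updated


def greedy_search(graph, belief, start, target_room):
    """Visit rooms in order of probability per unit travel cost (hypothetical rule)."""
    position, visited = start, []
    belief = dict(belief)
    while True:
        costs = travel_costs(graph, position)
        candidates = [r for r, p in belief.items() if p > 0 and r in costs]
        if not candidates:
            return visited  # nothing left worth searching
        # +1.0 avoids division by zero when the robot is already in the room.
        best = max(candidates, key=lambda r: belief[r] / (costs[r] + 1.0))
        visited.append(best)
        position = best
        if best == target_room:
            return visited
        belief = update_after_miss(belief, best)


# Hypothetical four-room layout; edge weights are travel costs.
graph = {
    "hall": {"office": 2.0, "kitchen": 5.0, "storage": 3.0},
    "office": {"hall": 2.0},
    "kitchen": {"hall": 5.0},
    "storage": {"hall": 3.0},
}
# Hand-written stand-in for per-room target probabilities.
belief = {"hall": 0.0, "office": 0.2, "kitchen": 0.6, "storage": 0.2}

path = greedy_search(graph, belief, start="hall", target_room="storage")
print(path)
```

In this toy setup the robot first checks the most promising room relative to its distance, then shifts the remaining probability onto the unsearched rooms after each miss. A real system would need a noisy detection model and a planner that reasons beyond one greedy step.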
