Nicholas Ward

Automatic Parameter Optimization Using Genetic Algorithm in Deep Reinforcement Learning for Robotic Manipulation Tasks

Apr 07, 2022

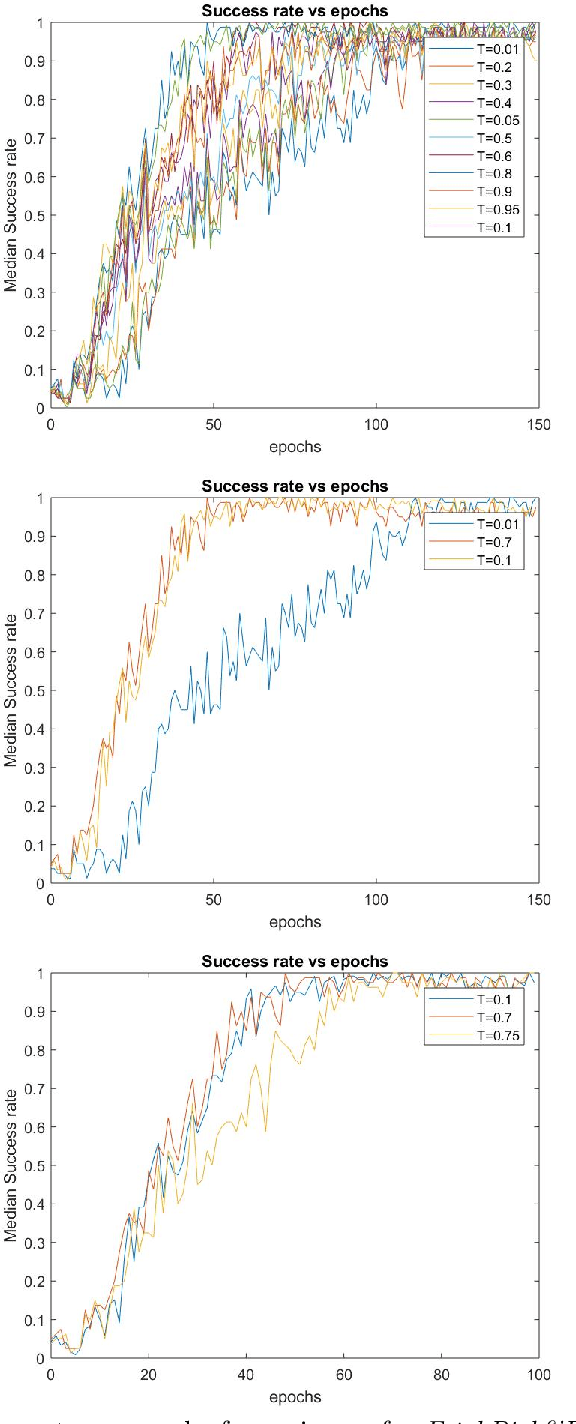

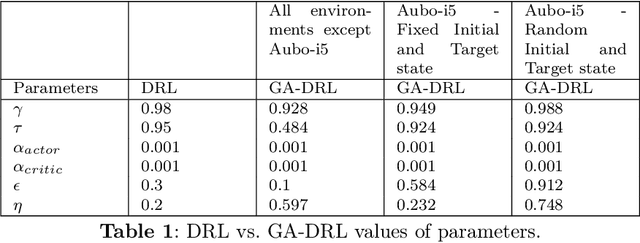

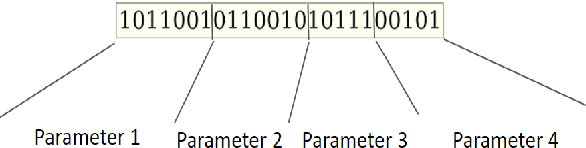

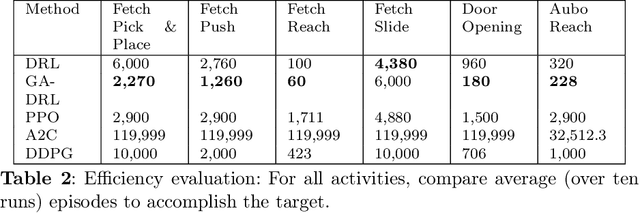

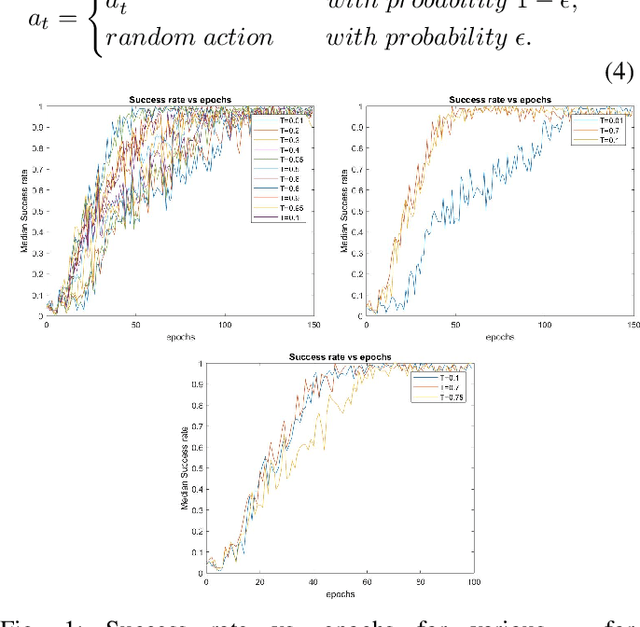

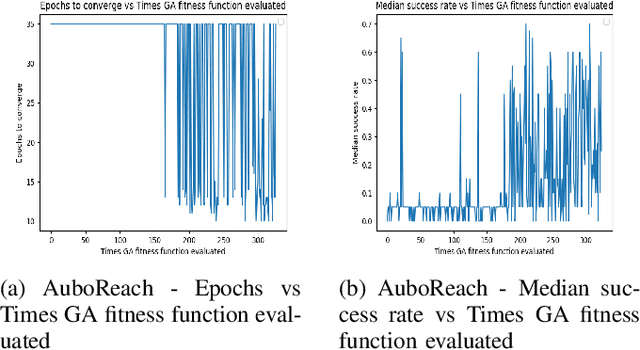

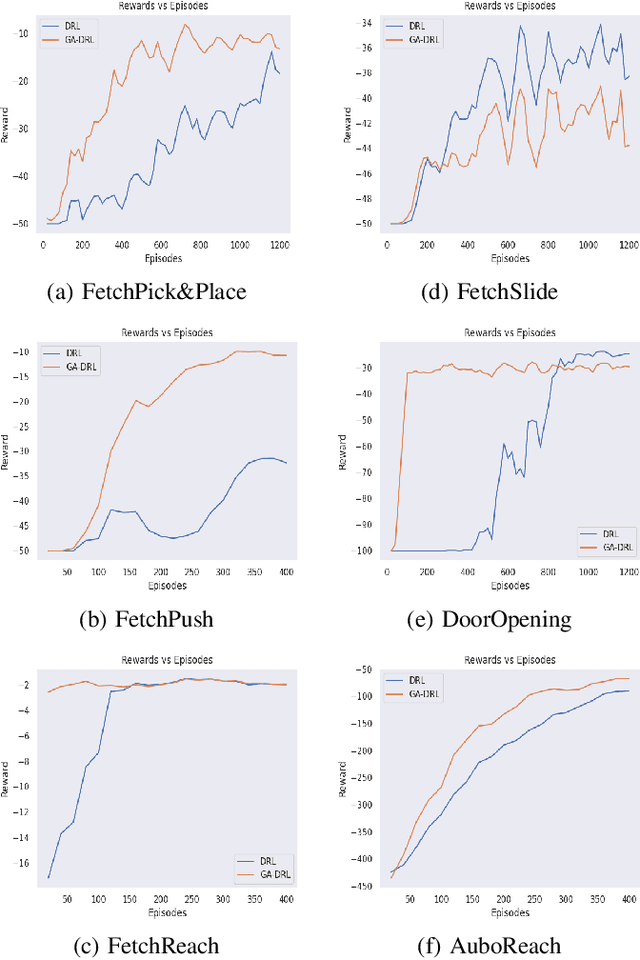

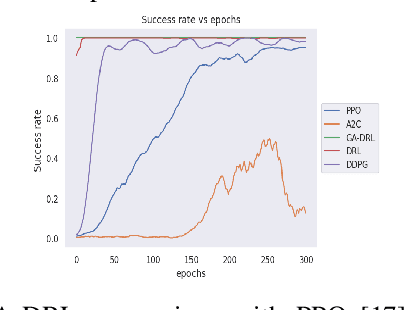

Abstract: Learning agents can use Reinforcement Learning (RL) to decide their actions based on a reward function. However, the learning process is greatly influenced by the choice of values for the parameters used in the learning algorithm. This work proposes a method based on Deep Deterministic Policy Gradient (DDPG) and Hindsight Experience Replay (HER) that uses a Genetic Algorithm (GA) to fine-tune the parameters' values. This method (GA-DRL) was evaluated on six robotic manipulation tasks: fetch-reach, fetch-slide, fetch-push, fetch-pick and place, door-opening, and aubo-reach. Analysis of the results demonstrated a significant increase in performance and a decrease in learning time. We also compare GA-DRL with existing methods and provide evidence that it performs better.

GA-DRL: Genetic Algorithm-Based Function Optimizer in Deep Reinforcement Learning for Robotic Manipulation Tasks

Feb 28, 2022

Abstract: Reinforcement learning (RL) enables agents to make decisions based on a reward function. However, during learning, the choice of values for the learning algorithm's parameters can significantly affect the overall learning process. In this paper, we propose a Genetic Algorithm-based Deep Deterministic Policy Gradient and Hindsight Experience Replay method (called GA-DRL) to find near-optimal values of the learning parameters. We applied the proposed GA-DRL method to the fetch-reach, slide, push, pick and place, and door opening robotic manipulation tasks. With some modifications, the method was also applied to the auboreach environment. Our experimental evaluation shows that our method achieves significantly better performance, and does so faster, than the original algorithm. We also provide evidence that GA-DRL performs better than existing methods.
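
The abstracts describe using a genetic algorithm to search for near-optimal learning parameters. The following is a minimal sketch of that general idea, not the authors' implementation. The parameter names and bounds in `BOUNDS`, the GA operators (tournament selection, uniform crossover, Gaussian mutation, elitism), and `surrogate_fitness` are all illustrative assumptions. In particular, `surrogate_fitness` is a cheap stand-in for what would really be a full DDPG + HER training run scored by task performance.

```python
import random

# Hypothetical search space: names and bounds are assumptions for illustration,
# not values taken from the papers.
BOUNDS = {
    "tau": (0.0, 1.0),
    "gamma": (0.0, 1.0),
    "actor_lr": (1e-4, 1e-1),
    "critic_lr": (1e-4, 1e-1),
}

def surrogate_fitness(params):
    """Stand-in for an expensive DDPG + HER training run (assumption).

    Higher is better; the peak sits at an arbitrary target point.
    """
    target = {"tau": 0.5, "gamma": 0.9, "actor_lr": 0.01, "critic_lr": 0.01}
    return -sum((params[k] - target[k]) ** 2 for k in BOUNDS)

def random_individual(rng):
    # Sample each parameter uniformly inside its bounds.
    return {k: rng.uniform(lo, hi) for k, (lo, hi) in BOUNDS.items()}

def crossover(a, b, rng):
    # Uniform crossover: each parameter is inherited from one parent at random.
    return {k: (a[k] if rng.random() < 0.5 else b[k]) for k in BOUNDS}

def mutate(ind, rng, rate=0.2, scale=0.1):
    # Gaussian mutation, clipped so values stay inside the bounds.
    out = {}
    for k, (lo, hi) in BOUNDS.items():
        v = ind[k]
        if rng.random() < rate:
            v += rng.gauss(0.0, scale * (hi - lo))
        out[k] = min(hi, max(lo, v))
    return out

def tournament(pop, fitness, rng, k=3):
    # Pick the fittest of k randomly chosen individuals.
    return max(rng.sample(pop, k), key=fitness)

def run_ga(fitness, pop_size=20, generations=30, seed=0):
    rng = random.Random(seed)
    pop = [random_individual(rng) for _ in range(pop_size)]
    history = []  # best fitness per generation
    for _ in range(generations):
        elite = max(pop, key=fitness)
        history.append(fitness(elite))
        children = [elite]  # elitism: the best individual always survives
        while len(children) < pop_size:
            p1 = tournament(pop, fitness, rng)
            p2 = tournament(pop, fitness, rng)
            children.append(mutate(crossover(p1, p2, rng), rng))
        pop = children
    best = max(pop, key=fitness)
    history.append(fitness(best))
    return best, history

if __name__ == "__main__":
    best, history = run_ga(surrogate_fitness)
    print("best parameters:", best)
    print("best fitness:", history[-1])
```

Because the elite individual is copied unchanged into each new generation, the best fitness recorded in `history` never decreases. In a real setting, each fitness call would train an agent, so the population size and generation count would be limited by the available compute.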
