Yangge Li

Coordinated Science Laboratory at the University of Illinois at Urbana-Champaign

Verification and Validation of a Vision-Based Landing System for Autonomous VTOL Air Taxis

Dec 11, 2024

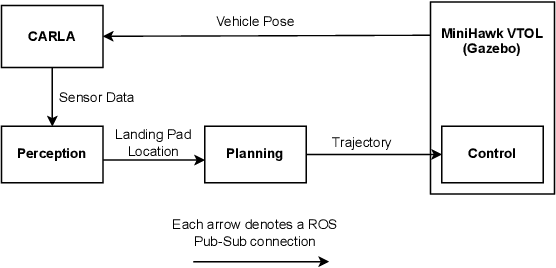

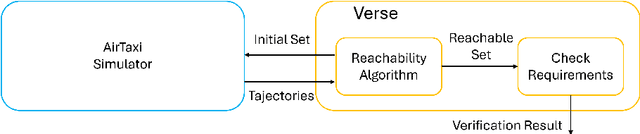

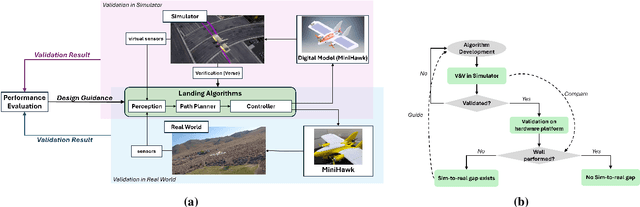

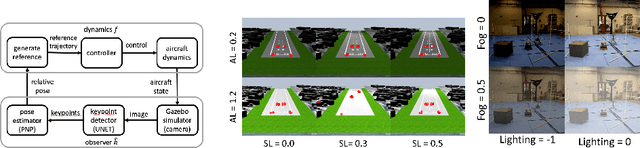

Abstract:Autonomous air taxis are poised to revolutionize urban mass transportation, however, ensuring their safety and reliability remains an open challenge. Validating autonomy solutions on air taxis in the real world presents complexities, risks, and costs that further convolute this challenge. Verification and Validation (V&V) frameworks play a crucial role in the design and development of highly reliable systems by formally verifying safety properties and validating algorithm behavior across diverse operational scenarios. Advancements in high-fidelity simulators have significantly enhanced their capability to emulate real-world conditions, encouraging their use for validating autonomous air taxi solutions, especially during early development stages. This evolution underscores the growing importance of simulation environments, not only as complementary tools to real-world testing but as essential platforms for evaluating algorithms in a controlled, reproducible, and scalable manner. This work presents a V&V framework for a vision-based landing system for air taxis with vertical take-off and landing (VTOL) capabilities. Specifically, we use Verse, a tool for formal verification, to model and verify the safety of the system by obtaining and analyzing the reachable sets. To conduct this analysis, we utilize a photorealistic simulation environment. The simulation environment, built on Unreal Engine, provides realistic terrain, weather, and sensor characteristics to emulate real-world conditions with high fidelity. To validate the safety analysis results, we conduct extensive scenario-based testing to assess the reachability set and robustness of the landing algorithm in various conditions. This approach showcases the representativeness of high-fidelity simulators, offering an effective means to analyze and refine algorithms before real-world deployment.

Visual Tracking with Intermittent Visibility: Switched Control Design and Implementation

Nov 12, 2024

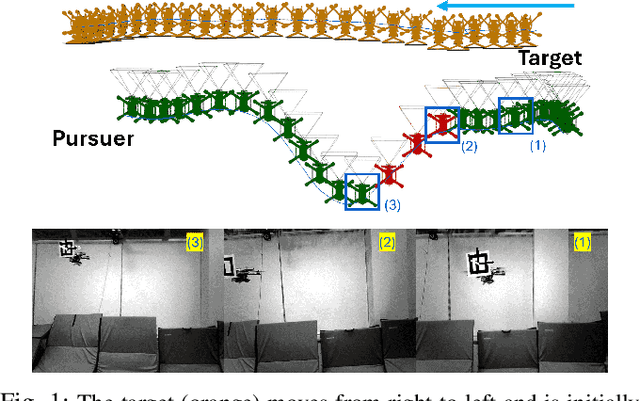

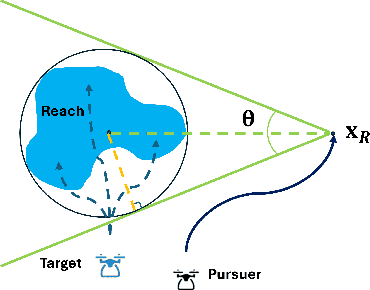

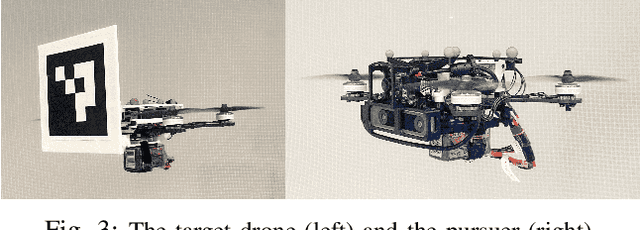

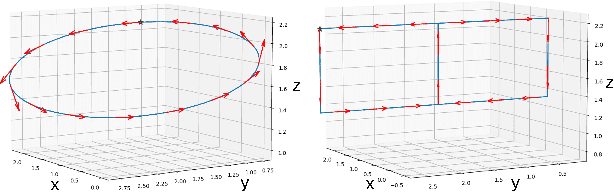

Abstract:This paper addresses the problem of visual target tracking in scenarios where a pursuer may experience intermittent loss of visibility of the target. The design of a Switched Visual Tracker (SVT) is presented which aims to meet the competing requirements of maintaining both proximity and visibility. SVT alternates between a visual tracking mode for following the target, and a recovery mode for regaining visual contact when the target falls out of sight. We establish the stability of SVT by extending the average dwell time theorem from switched systems theory, which may be of independent interest. Our implementation of SVT on an Agilicious drone [1] illustrates its effectiveness on tracking various target trajectories: it reduces the average tracking error by up to 45% and significantly improves visibility duration compared to a baseline algorithm. The results show that our approach effectively handles intermittent vision loss, offering enhanced robustness and adaptability for real-world autonomous missions. Additionally, we demonstrate how the stability analysis provides valuable guidance for selecting parameters, such as tracking speed and recovery distance, to optimize the SVT's performance.

Refining Perception Contracts: Case Studies in Vision-based Safe Auto-landing

Nov 15, 2023

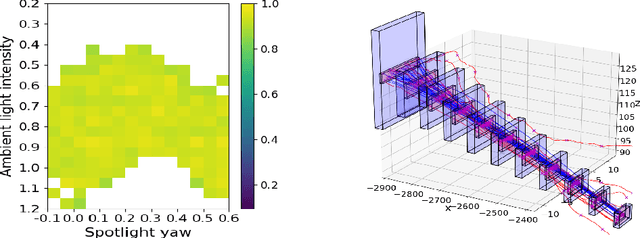

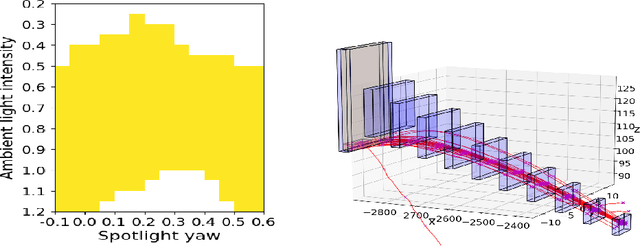

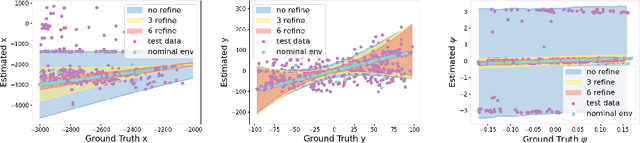

Abstract:Perception contracts provide a method for evaluating safety of control systems that use machine learning for perception. A perception contract is a specification for testing the ML components, and it gives a method for proving end-to-end system-level safety requirements. The feasibility of contract-based testing and assurance was established earlier in the context of straight lane keeping: a 3-dimensional system with relatively simple dynamics. This paper presents the analysis of two 6 and 12-dimensional flight control systems that use multi-stage, heterogeneous, ML-enabled perception. The paper advances methodology by introducing an algorithm for constructing data and requirement guided refinement of perception contracts (DaRePC). The resulting analysis provides testable contracts which establish the state and environment conditions under which an aircraft can safety touchdown on the runway and a drone can safely pass through a sequence of gates. It can also discover conditions (e.g., low-horizon sun) that can possibly violate the safety of the vision-based control system.

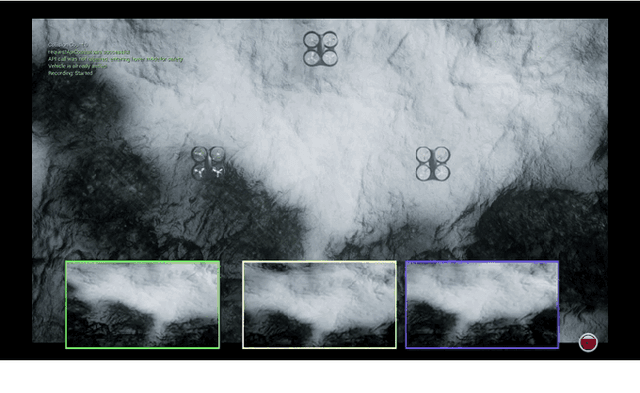

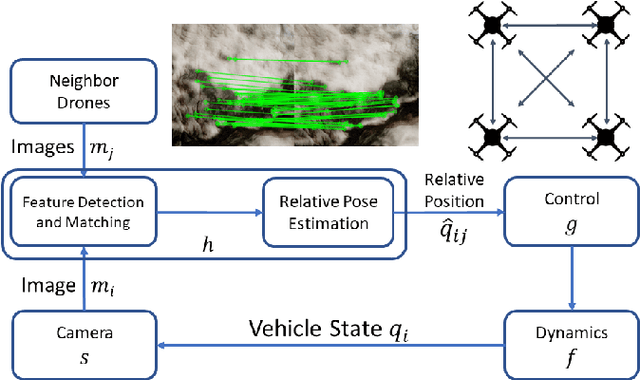

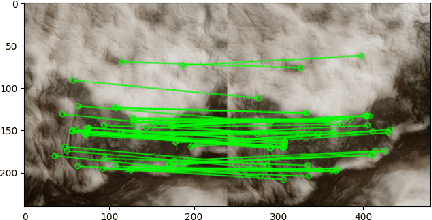

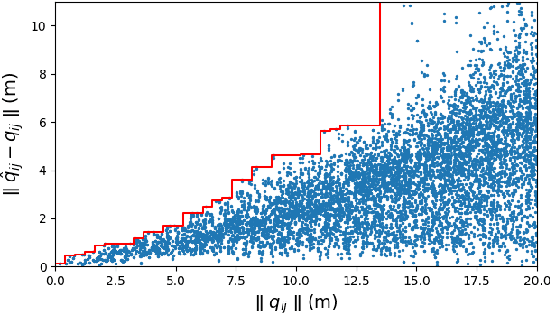

Assuring safety of vision-based swarm formation control

Oct 03, 2022

Abstract:Vision-based formation control systems recently have attracted attentions from both the research community and the industry for its applicability in GPS-denied environments. The safety assurance for such systems is challenging due to the lack of formal specifications for computer vision systems and the complex impact of imprecise estimations on distributed control. We propose a technique for safety assurance of vision-based formation control. Our technique combines (1) the construction of a piecewise approximation of the worst-case error of perception and (2) a classical Lyapunov-based safety analysis of the consensus control algorithm. The analysis provides the ultimate bound on the relative distance between drones. This ultimate bound can then be used to guarantee safe separation of all drones. We implement an instance of the vision-based control system on top of the photo-realistic AirSim simulator. We construct the piecewise approximation for varying perception error under different environments and weather conditions, and we are able to validate the safe separation provided by our analysis across the different weather conditions with AirSim simulation.

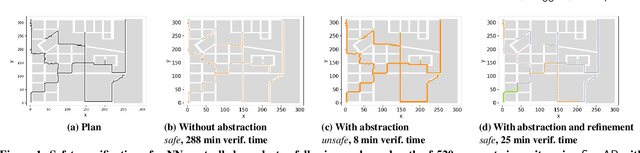

SymAR: Symmetry Abstractions and Refinement for Accelerating Scenarios with Neural Network Controllers Verification

Nov 21, 2020

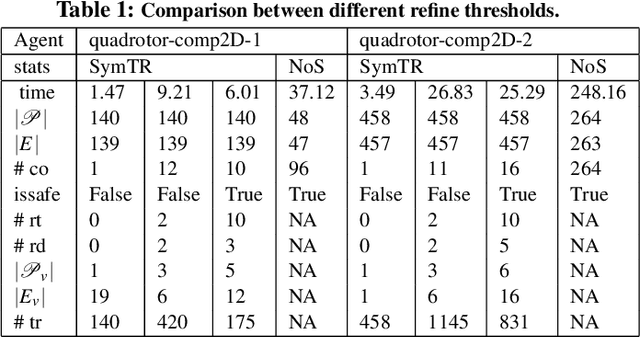

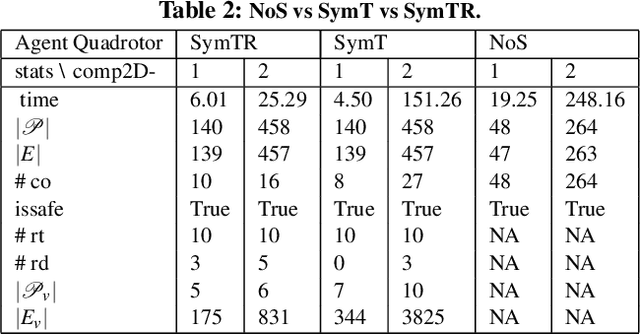

Abstract:We present a Symmetry-based abstraction refinement algorithm SymAR that is directed towards safety verification of large-scale scenarios with complex dynamical systems. The abstraction maps modes with symmetric dynamics to a single abstract mode and refinements recursively split the modes when safety checks fail. We show how symmetry abstractions can be applied effectively to closed-loop control systems, including non-symmetric deep neural network (DNN) controllers. For such controllers, we transform their inputs and outputs to enforce symmetry and make the closed loop system amenable for abstraction. We implemented SymAR in Python and used it to verify paths with 100s of segments in 2D and 3D scenarios followed by a six dimensional DNN-controlled quadrotor, and also a ground vehicle. Our experiments show significant savings, up to 10x in some cases, in verification time over existing methods.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge