Hyung-Jin Yoon

Expert Switching for Robust AAV Landing: A Dual-Detector Framework in Simulation

Dec 17, 2025

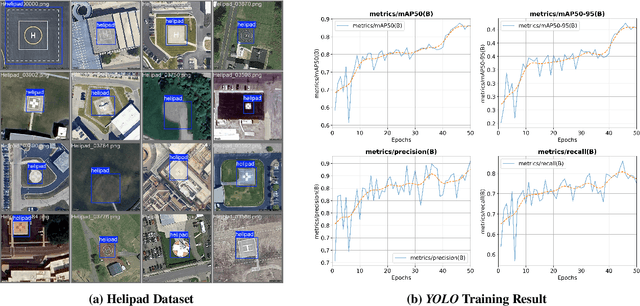

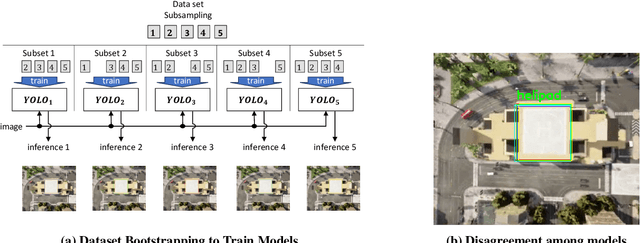

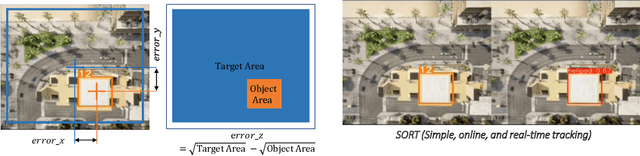

Abstract:Reliable helipad detection is essential for Autonomous Aerial Vehicle (AAV) landing, especially under GPS-denied or visually degraded conditions. While modern detectors such as YOLOv8 offer strong baseline performance, single-model pipelines struggle to remain robust across the extreme scale transitions that occur during descent, where helipads appear small at high altitude and large near touchdown. To address this limitation, we propose a scale-adaptive dual-expert perception framework that decomposes the detection task into far-range and close-range regimes. Two YOLOv8 experts are trained on scale-specialized versions of the HelipadCat dataset, enabling one model to excel at detecting small, low-resolution helipads and the other to provide high-precision localization when the target dominates the field of view. During inference, both experts operate in parallel, and a geometric gating mechanism selects the expert whose prediction is most consistent with the AAV's viewpoint. This adaptive routing prevents the degradation commonly observed in single-detector systems when operating across wide altitude ranges. The dual-expert perception module is evaluated in a closed-loop landing environment that integrates CARLA's photorealistic rendering with NASA's GUAM flight-dynamics engine. Results show substantial improvements in alignment stability, landing accuracy, and overall robustness compared to single-detector baselines. By introducing a scale-aware expert routing strategy tailored to the landing problem, this work advances resilient vision-based perception for autonomous descent and provides a foundation for future multi-expert AAV frameworks.
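
The abstract does not specify how the geometric gate decides which expert is "most consistent with the AAV's viewpoint". One way to picture it, as a minimal sketch under stated assumptions: a pinhole camera model predicts the apparent helipad width from altitude, and the gate keeps the detection whose box width is closest to that prediction. The names `Detection`, `expected_width_px`, and `select_expert`, and the default pad diameter and focal length, are hypothetical and not taken from the paper.

```python
import math
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Detection:
    expert: str        # "far" or "close" (hypothetical labels)
    width_px: float    # bounding-box width in pixels
    confidence: float  # detector score in [0, 1]


def expected_width_px(altitude_m: float, pad_diameter_m: float, focal_px: float) -> float:
    """Apparent helipad width for a nadir-pointing pinhole camera (assumed model)."""
    return focal_px * pad_diameter_m / altitude_m


def select_expert(
    detections: List[Detection],
    altitude_m: float,
    pad_diameter_m: float = 10.0,  # assumed helipad size
    focal_px: float = 800.0,       # assumed focal length in pixels
) -> Optional[Detection]:
    """Return the detection whose box size best matches the geometric prediction."""
    if not detections:
        return None
    expected = expected_width_px(altitude_m, pad_diameter_m, focal_px)

    def mismatch(d: Detection) -> float:
        # Log-ratio treats "twice too large" and "twice too small" symmetrically.
        return abs(math.log(d.width_px / expected))

    return min(detections, key=mismatch)
```

At 100 m with these defaults the predicted width is 80 px, so a far-range box of 75 px would win over a close-range box of 300 px; near touchdown the preference flips.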

Development and Testing for Perception Based Autonomous Landing of a Long-Range QuadPlane

Dec 11, 2025

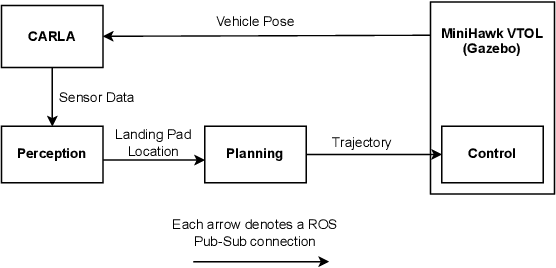

Abstract:QuadPlanes combine the range efficiency of fixed-wing aircraft with the maneuverability of multi-rotor platforms for long-range autonomous missions. In GPS-denied or cluttered urban environments, perception-based landing is vital for reliable operation. Unlike structured landing zones, real-world sites are unstructured and highly variable, requiring strong generalization capabilities from the perception system. Deep neural networks (DNNs) provide a scalable solution for learning landing site features across diverse visual and environmental conditions. While perception-driven landing has been shown in simulation, real-world deployment introduces significant challenges. Payload and volume constraints limit high-performance edge AI devices like the NVIDIA Jetson Orin Nano, which are crucial for real-time detection and control. Accurate pose estimation during descent is necessary, especially in the absence of GPS, and relies on dependable visual-inertial odometry. Achieving this with limited edge AI resources requires careful optimization of the entire deployment framework. The flight characteristics of large QuadPlanes further complicate the problem: these aircraft exhibit high inertia, reduced thrust vectoring, and slow response times, all of which hinder stable landing maneuvers. This work presents a lightweight QuadPlane system for efficient vision-based autonomous landing and visual-inertial odometry, specifically developed for long-range QuadPlane operations such as aerial monitoring. It describes the hardware platform, sensor configuration, and embedded computing architecture designed to meet demanding real-time and physical constraints. This establishes a foundation for deploying autonomous landing in dynamic, unstructured, GPS-denied environments.

Verification and Validation of a Vision-Based Landing System for Autonomous VTOL Air Taxis

Dec 11, 2024

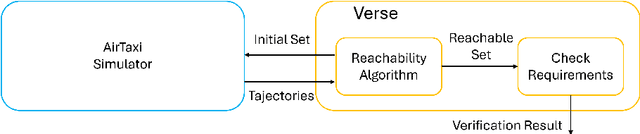

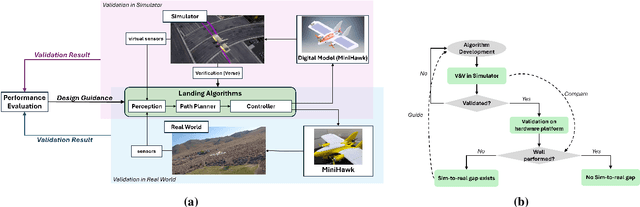

Abstract:Autonomous air taxis are poised to revolutionize urban mass transportation; however, ensuring their safety and reliability remains an open challenge. Validating autonomy solutions on air taxis in the real world presents complexities, risks, and costs that further complicate this challenge. Verification and Validation (V&V) frameworks play a crucial role in the design and development of highly reliable systems by formally verifying safety properties and validating algorithm behavior across diverse operational scenarios. Advancements in high-fidelity simulators have significantly enhanced their capability to emulate real-world conditions, encouraging their use for validating autonomous air taxi solutions, especially during early development stages. This evolution underscores the growing importance of simulation environments, not only as complementary tools to real-world testing but as essential platforms for evaluating algorithms in a controlled, reproducible, and scalable manner. This work presents a V&V framework for a vision-based landing system for air taxis with vertical take-off and landing (VTOL) capabilities. Specifically, we use Verse, a tool for formal verification, to model and verify the safety of the system by obtaining and analyzing the reachable sets. To conduct this analysis, we utilize a photorealistic simulation environment. The simulation environment, built on Unreal Engine, provides realistic terrain, weather, and sensor characteristics to emulate real-world conditions with high fidelity. To validate the safety analysis results, we conduct extensive scenario-based testing to assess the reachable set and robustness of the landing algorithm in various conditions. This approach showcases the representativeness of high-fidelity simulators, offering an effective means to analyze and refine algorithms before real-world deployment.

Bayesian Data Augmentation and Training for Perception DNN in Autonomous Aerial Vehicles

Dec 10, 2024

Abstract:Learning-based solutions have enabled incredible capabilities for autonomous systems. Autonomous vehicles, both aerial and ground, rely on DNNs for various integral tasks, including perception. The efficacy of supervised learning solutions hinges on the quality of the training data. Discrepancies between training data and operating conditions result in faults that can lead to catastrophic incidents. However, collecting vast amounts of context-sensitive data, with broad coverage of possible operating environments, is prohibitively difficult. Synthetic data generation techniques for DNNs allow for the easy exploration of diverse scenarios. However, synthetic data generation solutions for aerial vehicles are still lacking. This work presents a data augmentation framework for the perception training of aerial vehicles, leveraging photorealistic simulation integrated with high-fidelity vehicle dynamics. Safe landing is a crucial challenge in the development of autonomous air taxis; therefore, the landing maneuver is chosen as the focus of this work. With repeated simulations of landing in varying scenarios, we assess the landing performance of the VTOL-type UAV and gather valuable data. The landing performance is used as the objective function to optimize the DNN through retraining. Given the high computational cost of DNN retraining, we incorporate Bayesian Optimization into our framework to systematically explore the data augmentation parameter space and retrain the best-performing models. The framework allowed us to identify high-performing data augmentation parameters that are consistently effective across different landing scenarios. Utilizing the capabilities of this data augmentation framework, we obtained a robust perception model. The model consistently improved the perception-based landing success rate by at least 20% under different lighting and weather conditions.

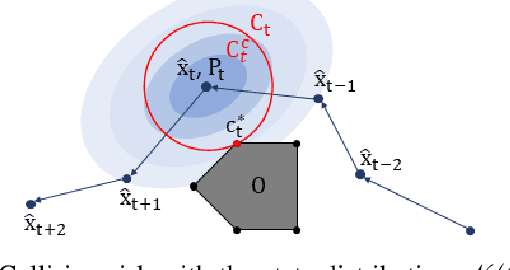

Synergistic Perception and Control Simplex for Verifiable Safe Vertical Landing

Dec 05, 2023

Abstract:Perception, Planning, and Control form the essential components of autonomy in advanced air mobility. This work advances the holistic integration of these components to enhance the performance and robustness of the complete cyber-physical system. We adapt Perception Simplex, a system for verifiable collision avoidance amidst obstacle detection faults, to the vertical landing maneuver for autonomous air mobility vehicles. We improve upon this system by replacing static assumptions of control capabilities with dynamic confirmation, i.e., real-time confirmation of control limitations of the system, ensuring reliable fulfillment of safety maneuvers and overrides, without dependence on overly pessimistic assumptions. Parameters defining control system capabilities and limitations, e.g., maximum deceleration, are continuously tracked within the system and used to make safety-critical decisions. We apply these techniques to propose a verifiable collision avoidance solution for autonomous aerial mobility vehicles operating in cluttered and potentially unsafe environments.
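
The idea of replacing a static control assumption with a tracked one can be illustrated with a simple stopping-distance check. This is only a sketch under stated assumptions: constant deceleration, a fixed reaction latency, and a fixed safety margin. The function names and default values are hypothetical and are not the paper's formulation.

```python
import math


def stopping_distance(speed_mps: float, max_decel_mps2: float, latency_s: float) -> float:
    """Distance covered during the reaction latency plus constant-deceleration braking."""
    if max_decel_mps2 <= 0.0:
        return math.inf  # no braking authority confirmed, so the vehicle cannot stop
    return speed_mps * latency_s + speed_mps ** 2 / (2.0 * max_decel_mps2)


def needs_override(
    distance_to_obstacle_m: float,
    speed_mps: float,
    tracked_max_decel_mps2: float,  # value confirmed at run time, not a design constant
    latency_s: float = 0.2,         # assumed reaction latency
    margin_m: float = 1.0,          # assumed safety margin
) -> bool:
    """True when the safety layer should take over to guarantee a stop before the obstacle."""
    required = stopping_distance(speed_mps, tracked_max_decel_mps2, latency_s) + margin_m
    return required >= distance_to_obstacle_m
```

With a descent speed of 5 m/s and an obstacle 10 m away, a confirmed deceleration of 2.5 m/s² leaves enough room (7 m required), while a degraded value of 1.0 m/s² does not (14.5 m required), so the same geometry triggers the override only when the tracked capability drops.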

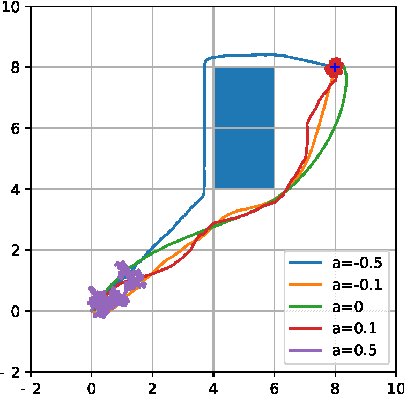

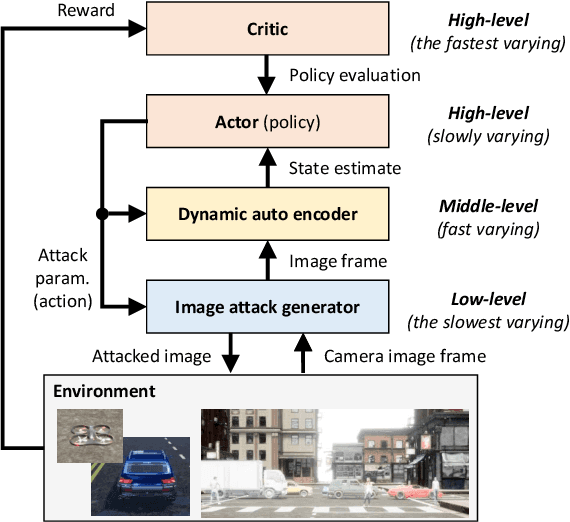

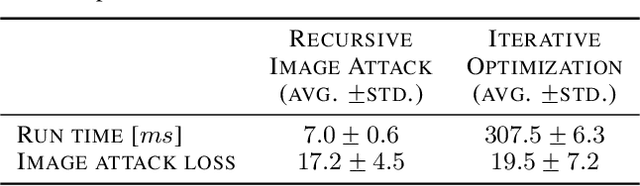

Learning When to Use Adaptive Adversarial Image Perturbations against Autonomous Vehicles

Dec 28, 2022

Abstract:The deep neural network (DNN) models for object detection using camera images are widely adopted in autonomous vehicles. However, DNN models are shown to be susceptible to adversarial image perturbations. In the existing methods of generating the adversarial image perturbations, optimizations take each incoming image frame as the decision variable to generate an image perturbation. Therefore, given a new image, the typically computationally-expensive optimization needs to start over as there is no learning between the independent optimizations. Very few approaches have been developed for attacking online image streams while considering the underlying physical dynamics of autonomous vehicles, their mission, and the environment. We propose a multi-level stochastic optimization framework that monitors an attacker's capability of generating the adversarial perturbations. Based on this capability level, a binary decision attack/not attack is introduced to enhance the effectiveness of the attacker. We evaluate our proposed multi-level image attack framework using simulations for vision-guided autonomous vehicles and actual tests with a small indoor drone in an office environment. The results show our method's capability to generate the image attack in real-time while monitoring when the attacker is proficient given state estimates.

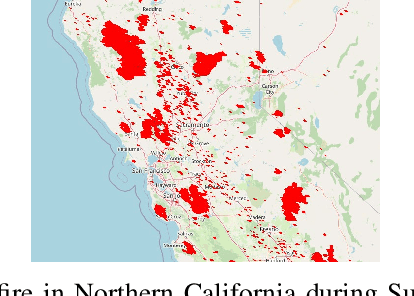

Multi-time Predictions of Wildfire Grid Map using Remote Sensing Local Data

Sep 15, 2022

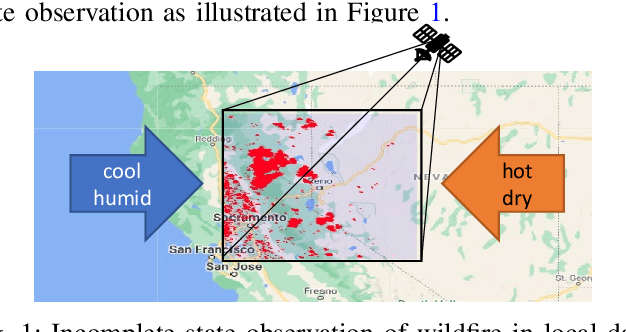

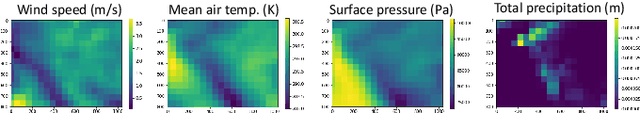

Abstract:Due to recent climate changes, we have seen more frequent and severe wildfires in the United States. Predicting wildfires is critical for natural disaster prevention and mitigation. Advances in data processing and communication technologies have enabled access to remote sensing data. With the remote sensing data, valuable spatiotemporal statistical models can be created and used for resource management practices. This paper proposes a distributed learning framework that shares local data collected in ten locations in the western USA among the local agents. The local agents aim to predict wildfire grid maps one, two, three, and four weeks in advance while processing the remote sensing data stream online. The proposed model has distinct features that address the characteristic need in prediction evaluations, including dynamic online estimation and time-series modeling. Local fire event triggers are not isolated between locations, and there are confounding factors when local data is analyzed due to incomplete state observations. Compared to existing approaches that do not account for incomplete state observation within wildfire time-series data, on average, we can achieve higher prediction performance.

Sampling Complexity of Path Integral Methods for Trajectory Optimization

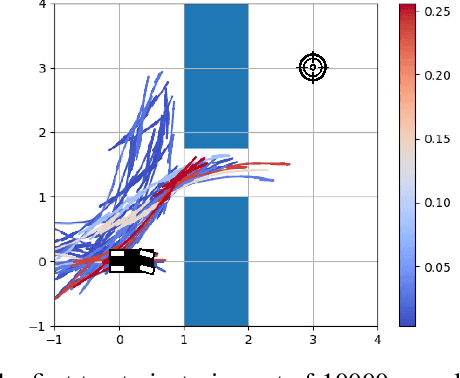

Mar 18, 2022

Abstract:The use of random sampling in decision-making and control has become popular with the ease of access to graphics processing units that can generate and calculate multiple random trajectories for real-time robotic applications. In contrast to sequential optimization, the sampling-based method can take advantage of parallel computing to maintain constant control loop frequencies. Inspired by its wide applicability in robotic applications, we calculate a sampling complexity result applicable to general nonlinear systems considered in the path integral method, which is a sampling-based method. The result determines the number of samples required for the estimated control signal to stay within the given error bounds of the optimal value with the predefined risk probability. The sampling complexity result shows that the variance of the estimated control value is upper-bounded in terms of the expectation of the cost. Then we apply the result to a linear time-varying dynamical system with quadratic cost and an indicator function cost to avoid constraint sets.
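
For readers unfamiliar with the estimator whose sample count is being bounded, the following is a minimal sketch of the commonly used path integral control update: each sampled control perturbation is weighted by the exponentiated negative cost of its trajectory, and the weighted average gives the control estimate. It is a generic illustration, not the paper's derivation; the function name and the `temperature` parameter are assumptions.

```python
import math
from typing import Sequence


def path_integral_update(
    costs: Sequence[float],          # trajectory cost S_i of each sampled rollout
    perturbations: Sequence[float],  # control perturbation eps_i applied in rollout i
    temperature: float = 1.0,        # assumed scaling of the exponent
) -> float:
    """Return sum_i w_i * eps_i / sum_i w_i with w_i = exp(-S_i / temperature)."""
    if not costs or len(costs) != len(perturbations):
        raise ValueError("costs and perturbations must be non-empty and equal in length")
    best = min(costs)
    # Subtracting the minimum cost keeps the exponentials numerically stable
    # and leaves the normalized weights unchanged.
    weights = [math.exp(-(c - best) / temperature) for c in costs]
    total = sum(weights)
    return sum(w * e for w, e in zip(weights, perturbations)) / total
```

Because the result is a Monte Carlo average over random rollouts, its variance shrinks as more samples are drawn, which is why a bound on the required number of samples is useful for fixed-rate control loops.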

Learning Wildfire Model from Incomplete State Observations

Nov 28, 2021

Abstract:As wildfires are expected to become more frequent and severe, improved prediction models are vital to mitigating risk and allocating resources. With remote sensing data, valuable spatiotemporal statistical models can be created and used for resource management practices. In this paper, we create a dynamic model for future wildfire predictions of five locations within the western United States through a deep neural network via historical burned area and climate data. The proposed model has distinct features that address the characteristic need in prediction evaluations, including dynamic online estimation and time-series modeling. Between locations, local fire event triggers are not isolated, and there are confounding factors when local data is analyzed due to incomplete state observations. When compared to existing approaches that do not account for incomplete state observation within wildfire time-series data, on average, we are able to achieve higher prediction performance.

Learning Image Attacks toward Vision Guided Autonomous Vehicles

May 17, 2021

Abstract:While adversarial neural networks have been shown to be successful for static image attacks, very few approaches have been developed for attacking online image streams while taking into account the underlying physical dynamics of autonomous vehicles, their mission, and environment. This paper presents an online adversarial machine learning framework that can effectively misguide autonomous vehicles' missions. In the existing image attack methods devised toward autonomous vehicles, optimization steps are repeated for every image frame. This framework removes the need for fully converged optimization at every frame to realize image attacks in real-time. Using reinforcement learning, a generative neural network is trained over a set of image frames to obtain an attack policy that is more robust to dynamic and uncertain environments. A state estimator is introduced for processing image streams to reduce the attack policy's sensitivity to physical variables such as unknown position and velocity. A simulation study is provided to validate the results.
