Aman Rana

Promptformer: Prompted Conformer Transducer for ASR

Jan 14, 2024

Abstract: Context cues carry information that can improve multi-turn interactions in automatic speech recognition (ASR) systems. In this paper, we introduce a novel mechanism inspired by hyper-prompting to fuse textual context with acoustic representations in the attention mechanism. Results on a test set with multi-turn interactions show that our method achieves a 5.9% relative word error rate reduction (rWERR) over a strong baseline. We show that our method does not degrade in the absence of context and leads to improvements even if the model is trained without context. We further show that leveraging a pre-trained sentence-piece model for context embedding generation can outperform an external BERT model.
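For readers unfamiliar with the rWERR metric quoted in this abstract, here is a minimal sketch of how word error rate and its relative reduction are commonly computed. The function names and the example WER values are hypothetical illustrations; the abstract reports only the 5.9% relative figure, not the underlying absolute WERs or the authors' scoring code.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the number of reference words."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # Standard dynamic-programming edit distance, one row at a time.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (0 if ref_word == hyp_word else 1)
            deletion = prev[j] + 1
            insertion = curr[j - 1] + 1
            curr.append(min(substitution, deletion, insertion))
        prev = curr
    return prev[-1] / len(ref)


def relative_werr(baseline_wer: float, new_wer: float) -> float:
    """Relative WER reduction, in percent, of new_wer over baseline_wer."""
    if baseline_wer <= 0:
        raise ValueError("baseline WER must be positive")
    return 100.0 * (baseline_wer - new_wer) / baseline_wer


# Hypothetical numbers chosen only to illustrate the formula:
# a baseline WER of 10.0% and a new WER of 9.41% give about 5.9% rWERR.
example_rwerr = relative_werr(10.0, 9.41)
```

Because rWERR is relative to the baseline, the same 5.9% figure corresponds to a different absolute WER change depending on how strong the baseline is.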

High Accuracy Tumor Diagnoses and Benchmarking of Hematoxylin and Eosin Stained Prostate Core Biopsy Images Generated by Explainable Deep Neural Networks

Aug 02, 2019

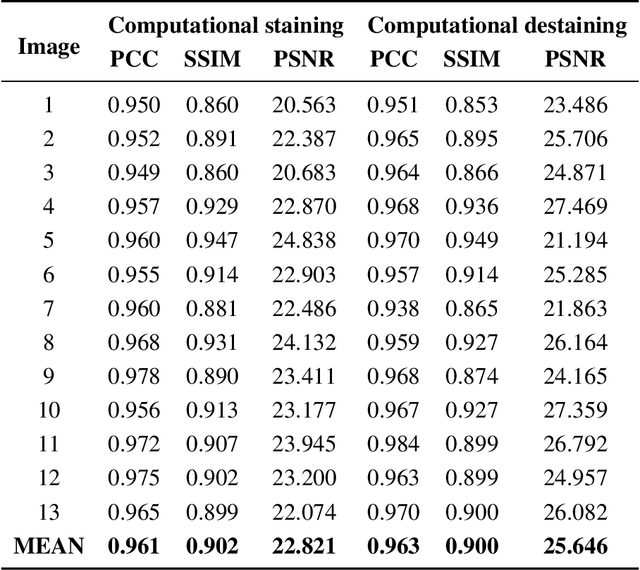

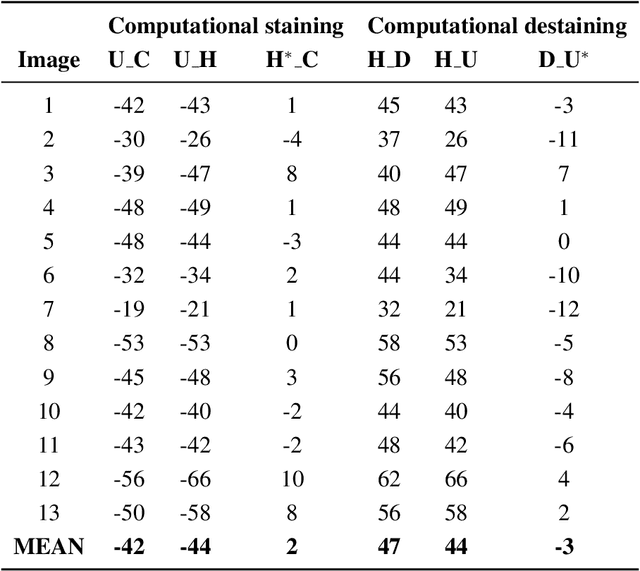

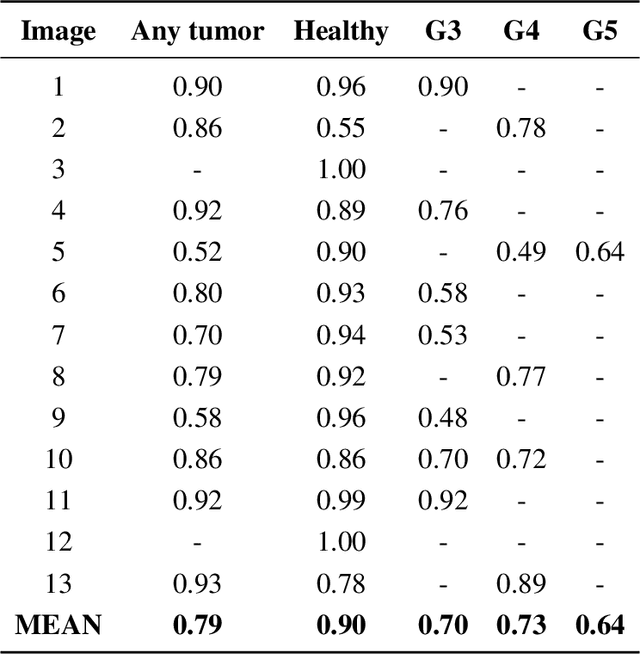

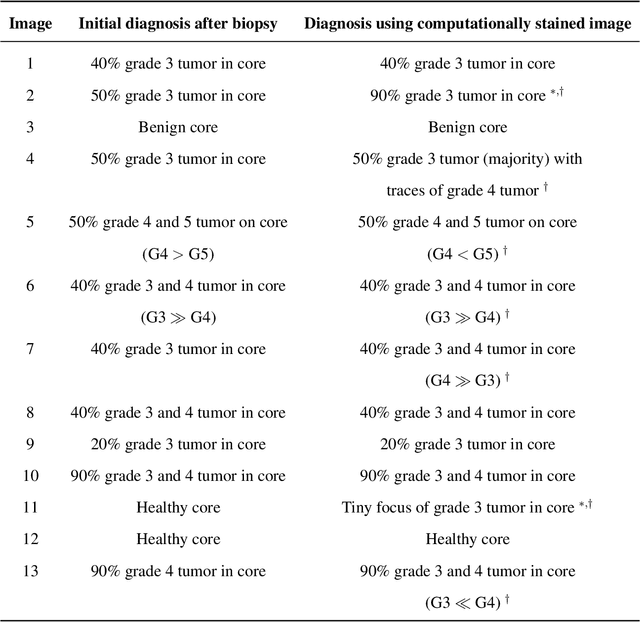

Abstract: Histopathological diagnosis of tumors in tissue biopsies after Hematoxylin and Eosin (H&E) staining is the gold standard for oncology care. H&E staining is slow and uses dyes, reagents and precious tissue samples that cannot be reused. Thousands of native nonstained RGB Whole Slide Image (RWSI) patches of prostate core tissue biopsies were registered with their H&E stained versions. Conditional Generative Adversarial Neural Networks (cGANs) that automate conversion of native nonstained RWSI to computational H&E stained images were then trained. High similarities between computational and H&E dye stained images were calculated, with Structural Similarity Index (SSIM) 0.902, Pearson's Correlation Coefficient (CC) 0.962 and Peak Signal to Noise Ratio (PSNR) 22.821 dB. A second cGAN performed accurate computational destaining of H&E dye stained images back to their native nonstained form with SSIM 0.9, CC 0.963 and PSNR 25.646 dB. A single-blind study computed more than 95% pixel-by-pixel overlap between prostate tumor annotations on computationally stained images, provided by five board-certified MD pathologists, and those on H&E dye stained counterparts. We report the first visualization and explanation of neural network kernel activation maps during H&E staining and destaining of RGB images by cGANs. High similarities between kernel activation maps of computational and H&E stained images (Mean-Squared Errors <0.0005) provide additional mathematical and mechanistic validation of the staining system. Our neural network framework is thus automated and explainable, performs high-precision H&E staining and destaining of low-cost native RGB images, and is computer vision and physician authenticated for rapid and accurate tumor diagnoses.
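Two of the image similarity metrics named in this abstract, PSNR and Pearson's correlation coefficient, can be sketched in a few lines. This is an illustrative sketch only, not the authors' evaluation code: it treats each image as a flat list of pixel intensities, and the 8-bit peak value of 255 is an assumption that the abstract does not state. SSIM is omitted because it needs windowed local statistics.

```python
import math


def _check_same_length(a, b):
    if len(a) != len(b) or len(a) == 0:
        raise ValueError("inputs must be non-empty and of equal length")


def psnr(reference, generated, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two flat pixel sequences.

    max_value=255.0 assumes 8-bit intensities (an assumption, not from the source).
    """
    _check_same_length(reference, generated)
    mse = sum((r - g) ** 2 for r, g in zip(reference, generated)) / len(reference)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)


def pearson_cc(x, y):
    """Pearson correlation coefficient between two flat pixel sequences."""
    _check_same_length(x, y)
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant input")
    return cov / math.sqrt(var_x * var_y)
```

PSNR penalizes absolute pixel differences, while the correlation coefficient is insensitive to a global brightness or contrast shift, which is one reason the two are often reported together.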

Automated Process Incorporating Machine Learning Segmentation and Correlation of Oral Diseases with Systemic Health

Oct 25, 2018

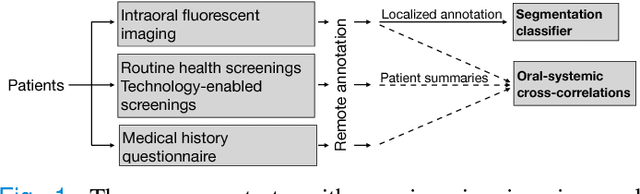

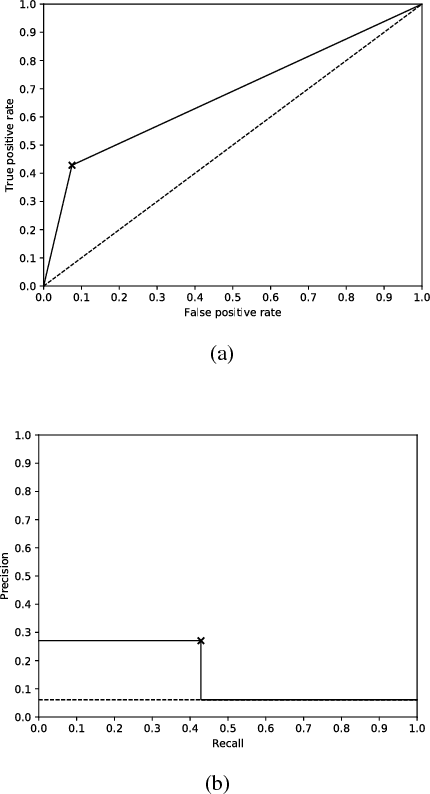

Abstract: Imaging fluorescent disease biomarkers in tissues and skin is a non-invasive method to screen for health conditions. We report an automated process that combines intraoral fluorescent porphyrin biomarker imaging, clinical examinations and machine learning for correlation of systemic health conditions with periodontal disease. 1215 intraoral fluorescent images, from 284 consenting adults aged 18-90, were analyzed using a machine learning classifier that can segment periodontal inflammation. The classifier achieved an AUC of 0.677 with precision and recall of 0.271 and 0.429, respectively, indicating a learned association between disease signatures in collected images. Periodontal diseases were more prevalent among males (p=0.0012) and older subjects (p=0.0224) in the screened population. Physicians independently examined the collected images, assigning localized modified gingival indices (MGIs). MGIs and periodontal disease were then cross-correlated with responses to a medical history questionnaire, blood pressure and body mass index measurements, and optic nerve, tympanic membrane, neurological, and cardiac rhythm imaging examinations. Gingivitis and early periodontal disease were associated with subjects diagnosed with optic nerve abnormalities (p<0.0001) in their retinal scans. We also report significant co-occurrences of periodontal disease in subjects reporting swollen joints (p=0.0422) and a family history of eye disease (p=0.0337). These results indicate cross-correlation of poor periodontal health with systemic health outcomes and stress the importance of oral health screenings at the primary care level. Our screening process and analysis method, using images and machine learning, can be generalized for automated diagnoses and systemic health screenings for other diseases.
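As a reminder of what the precision and recall figures in this abstract measure, the sketch below computes both from a predicted and a reference binary mask. It is a generic illustration, not the study's pipeline: the function name and the flat 0/1 mask representation are assumptions, and the abstract does not say whether the reported values were computed per pixel or per image.

```python
def precision_recall(predicted, actual):
    """Precision and recall for two equal-length binary (0/1) masks.

    predicted: model output, 1 where inflammation is predicted (hypothetical layout).
    actual:    reference annotation, 1 where inflammation is labeled.
    """
    if len(predicted) != len(actual):
        raise ValueError("masks must have the same length")

    true_pos = sum(1 for p, a in zip(predicted, actual) if p and a)
    false_pos = sum(1 for p, a in zip(predicted, actual) if p and not a)
    false_neg = sum(1 for p, a in zip(predicted, actual) if a and not p)

    # Return 0.0 when a ratio is undefined (no predicted or no actual positives).
    precision = true_pos / (true_pos + false_pos) if (true_pos + false_pos) else 0.0
    recall = true_pos / (true_pos + false_neg) if (true_pos + false_neg) else 0.0
    return precision, recall
```

Precision below recall, as reported here (0.271 versus 0.429), means the classifier produced more false positives than false negatives relative to its true positives.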
