Visual-Thermal Landmarks and Inertial Fusion for Navigation in Degraded Visual Environments


Mar 05, 2019

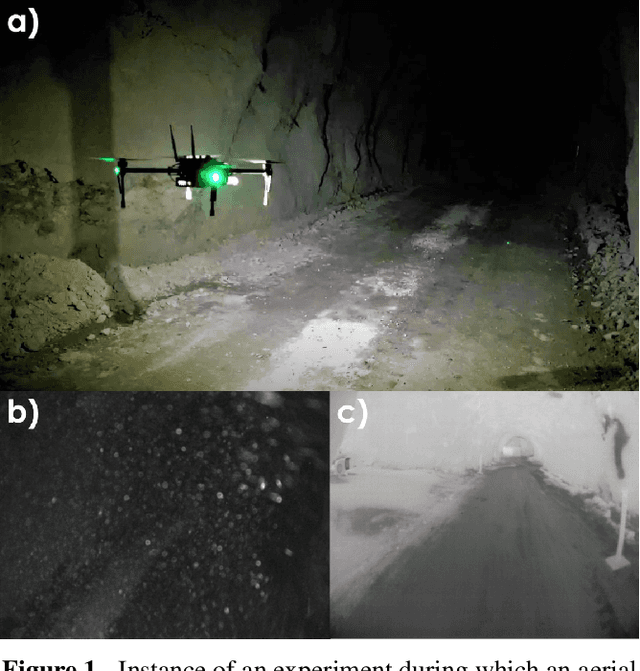

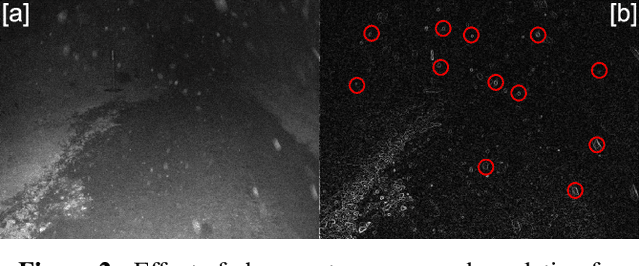

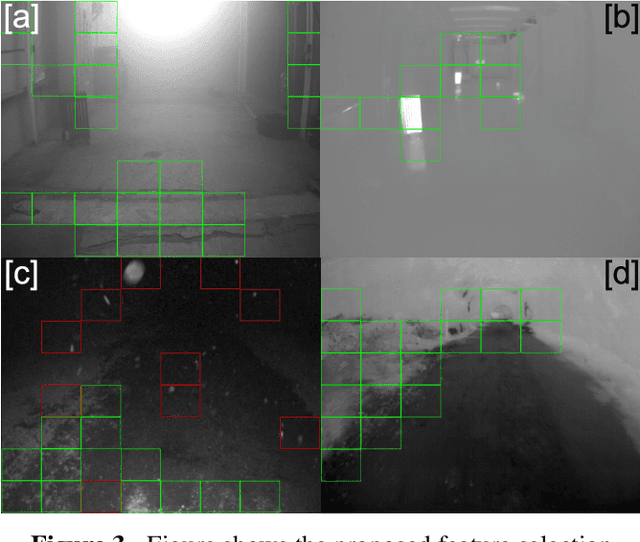

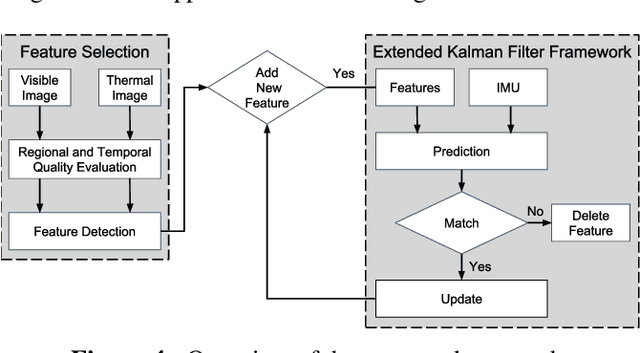

With an ever-widening domain of aerial robotic applications, including many mission-critical tasks such as disaster response operations, search and rescue missions, and infrastructure inspections taking place in GPS-denied environments, the need for reliable autonomous operation of aerial robots has become crucial. Operating in GPS-denied areas, aerial robots rely on a multitude of sensors to localize and navigate. Visible spectrum cameras are the most commonly used sensors due to their low cost and weight. However, in visually degraded environments, such as conditions of poor illumination, low texture, or the presence of obscurants including fog, smoke, and dust, the reliability of visible light cameras deteriorates significantly. Nevertheless, maintaining reliable robot navigation in such conditions is essential. In contrast to visible light cameras, thermal cameras offer visibility in the infrared spectrum and can be used in a complementary manner with visible spectrum cameras for robot localization and navigation tasks, without paying the significant weight and power penalty typically associated with carrying other sensors. Exploiting this fact, in this work we present a multi-sensor fusion algorithm for reliable odometry estimation in GPS-denied and degraded visual environments. The proposed method utilizes information from both the visible and thermal spectra for landmark selection and prioritizes feature extraction from informative image regions based on a metric over spatial entropy. Furthermore, inertial sensing cues are integrated to improve the robustness of the odometry estimation process. To verify our solution, a set of challenging experiments was conducted inside a) an obscurant-filled, machine shop-like industrial environment, and b) a dark subterranean mine in the presence of heavy airborne dust.
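To make the idea of prioritizing informative image regions concrete, the following is a minimal sketch of one possible spatial-entropy score. The abstract does not specify the exact metric, so everything here is an assumption for illustration: the tile size, the histogram bin count, and the hypothetical helper names `block_entropy` and `rank_tiles`. It computes the Shannon entropy of the intensity histogram in each tile of an 8-bit grayscale frame (visible or thermal) and ranks tiles so that feature extraction could start in the highest-scoring ones.

```python
import numpy as np

def block_entropy(image, block=32, bins=32):
    """Shannon entropy (in bits) of the intensity histogram of each block x block tile.

    Assumes an 8-bit single-channel image; partial tiles at the border are ignored.
    """
    rows, cols = image.shape[0] // block, image.shape[1] // block
    scores = np.zeros((rows, cols))
    for r in range(rows):
        for c in range(cols):
            tile = image[r * block:(r + 1) * block, c * block:(c + 1) * block]
            hist, _ = np.histogram(tile, bins=bins, range=(0, 256))
            p = hist[hist > 0] / tile.size      # empirical bin probabilities
            scores[r, c] = -np.sum(p * np.log2(p))
    return scores

def rank_tiles(scores, top_k):
    """Return (row, col) indices of the top_k highest-entropy tiles, best first."""
    flat_order = np.argsort(scores, axis=None)[::-1][:top_k]
    return [tuple(int(v) for v in np.unravel_index(idx, scores.shape))
            for idx in flat_order]

# Synthetic frame: textureless left half, noisy (high-entropy) right half.
rng = np.random.default_rng(0)
frame = np.zeros((64, 128), dtype=np.uint8)
frame[:, 64:] = rng.integers(0, 256, size=(64, 64))

scores = block_entropy(frame)
best = rank_tiles(scores, top_k=4)
```

In this synthetic frame the flat tiles score zero and the four selected tiles all fall in the textured half. A histogram-based score like this is only one plausible reading of "a metric over spatial entropy"; the method described above may weight or combine the visible and thermal spectra differently.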
