Renaud Dubé

Fast Image-Anomaly Mitigation for Autonomous Mobile Robots

Sep 04, 2021

Abstract:Camera anomalies like rain or dust can severely degrade image quality and its related tasks, such as localization and segmentation. In this work we address this important issue by implementing a pre-processing step that can effectively mitigate such artifacts in a real-time fashion, thus supporting the deployment of autonomous systems with limited compute capabilities. We propose a shallow generator with aggregation, trained in an adversarial setting to solve the ill-posed problem of reconstructing the occluded regions. We add an enhancer to further preserve high-frequency details and image colorization. We also produce one of the largest publicly available datasets to train our architecture and use realistic synthetic raindrops to obtain an improved initialization of the model. We benchmark our framework on existing datasets and on our own images, obtaining state-of-the-art results while enabling real-time performance, with up to 40x faster inference time than existing approaches.

Dynamic Object Aware LiDAR SLAM based on Automatic Generation of Training Data

Apr 08, 2021

Abstract:Highly dynamic environments, with moving objects such as cars or humans, can pose a performance challenge for LiDAR SLAM systems that assume largely static scenes. To overcome this challenge and support the deployment of robots in real-world scenarios, we propose a complete solution for a dynamic object aware LiDAR SLAM algorithm. This is achieved by leveraging a real-time capable neural network that can detect dynamic objects, thus allowing our system to deal with them explicitly. To efficiently generate the necessary training data, which is key to our approach, we present a novel end-to-end occupancy-grid-based pipeline that can automatically label a wide variety of arbitrary dynamic objects. Our solution can thus generalize to different environments without the need for expensive manual labeling and at the same time avoids assumptions about the presence of a predefined set of known objects in the scene. Using this technique, we automatically label over 12000 LiDAR scans collected in an urban environment with a large number of pedestrians and use this data to train a neural network, achieving an average segmentation IoU of 0.82. We show that explicitly dealing with dynamic objects can improve the LiDAR SLAM odometry performance by 39.6% while yielding maps which better represent the environments. A supplementary video as well as our test data are available online.
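For readers unfamiliar with the segmentation IoU quoted in the abstract above, the following is a minimal sketch of how intersection-over-union can be computed for per-point dynamic/static labels. The function name `segmentation_iou` and the boolean-list representation are assumptions made for illustration; the abstract does not describe how the authors compute or average the metric.

```python
def segmentation_iou(predicted, labeled):
    """Intersection-over-union of two per-point boolean label sequences.

    Both arguments are equally long sequences where True marks a point
    as dynamic. This representation is an illustrative assumption.
    """
    if len(predicted) != len(labeled):
        raise ValueError("label sequences must have the same length")
    # Points marked dynamic in both sequences.
    intersection = sum(1 for p, l in zip(predicted, labeled) if p and l)
    # Points marked dynamic in at least one sequence.
    union = sum(1 for p, l in zip(predicted, labeled) if p or l)
    # Convention assumed here: two empty masks agree perfectly.
    return intersection / union if union else 1.0
```

An "average" IoU would then be the mean of this value over scans or classes, depending on the evaluation protocol.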

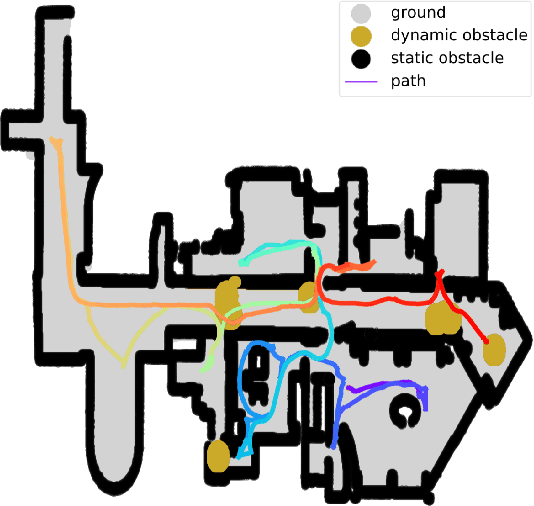

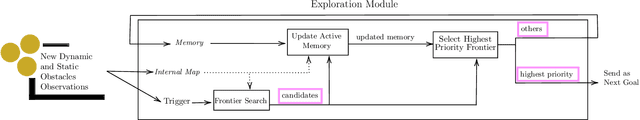

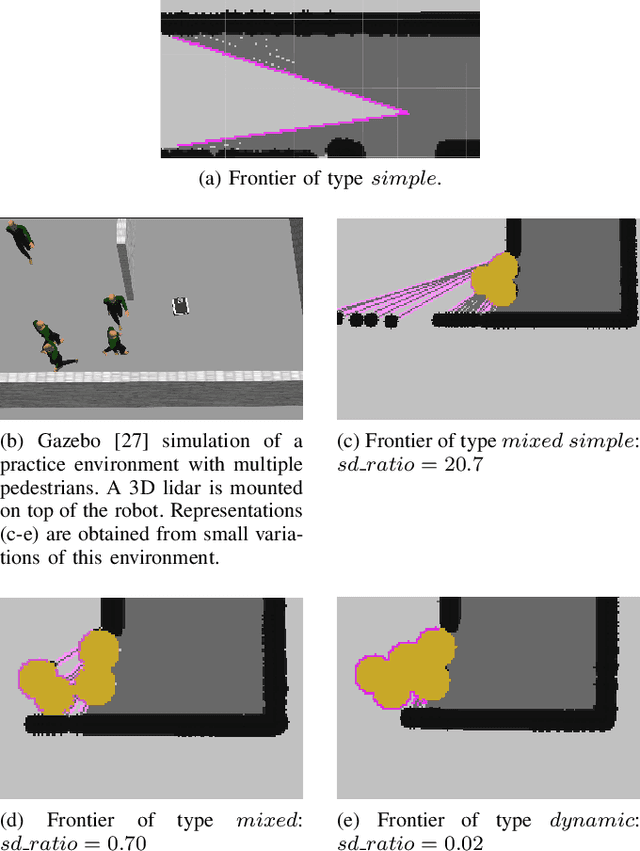

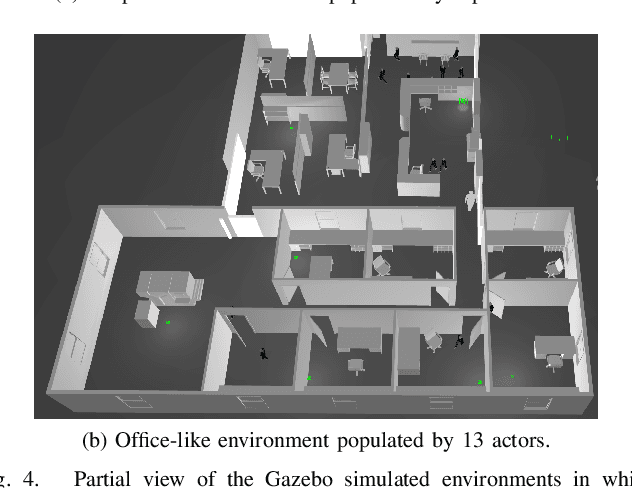

Dynamic-Aware Autonomous Exploration in Populated Environments

Apr 06, 2021

Abstract:Autonomous exploration allows mobile robots to navigate in initially unknown territories in order to build complete representations of the environments. In many real-life applications, environments often contain dynamic obstacles which can compromise the exploration process by temporarily blocking passages, narrow paths, exits or entrances to other areas yet to be explored. In this work, we formulate a novel exploration strategy capable of explicitly handling dynamic obstacles, thus leading to complete and reliable exploration outcomes in populated environments. We introduce the concept of dynamic frontiers to represent unknown regions at the boundaries with dynamic obstacles together with a cost function which allows the robot to make informed decisions about when to revisit such frontiers. We evaluate the proposed strategy in challenging simulated environments and show that it outperforms a state-of-the-art baseline in these populated scenarios.

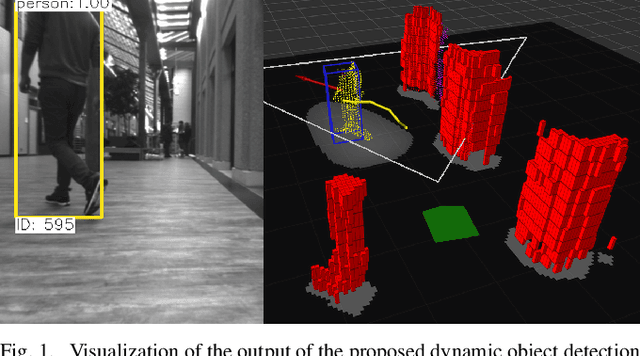

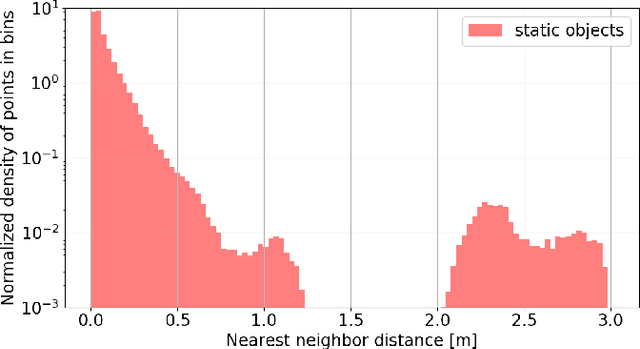

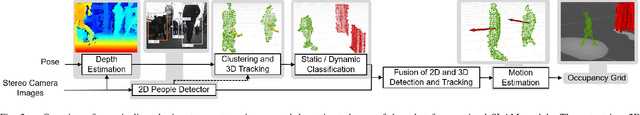

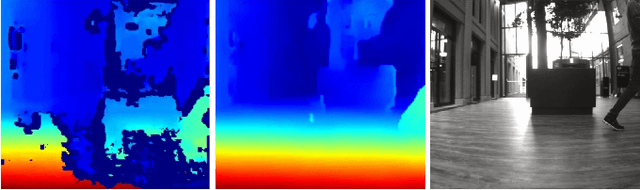

Leveraging Stereo-Camera Data for Real-Time Dynamic Obstacle Detection and Tracking

Jul 21, 2020

Abstract:Dynamic obstacle avoidance is a crucial component for compliant navigation in crowded environments. In this paper we present a system for accurate and reliable detection and tracking of dynamic objects using noisy point cloud data generated by stereo cameras. Our solution is real-time capable and specifically designed for deployment on computationally constrained unmanned ground vehicles. The proposed approach identifies individual objects in the robot's surroundings and classifies them as either static or dynamic. The dynamic objects are labeled as either a person or a generic dynamic object. We then estimate their velocities to generate a 2D occupancy grid that is suitable for performing obstacle avoidance. We evaluate the system in indoor and outdoor scenarios and achieve real-time performance on a consumer-grade computer. On our test dataset, we reach a MOTP of $0.07 \pm 0.07\,\mathrm{m}$, and a MOTA of $85.3\%$ for the detection and tracking of dynamic objects. We reach a precision of $96.9\%$ for the detection of static objects.
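The MOTA and MOTP figures in the abstract above are the standard CLEAR MOT tracking metrics. As a rough sketch, assuming the error counts and per-match distances have already been accumulated over all frames, they can be computed as follows. The function and parameter names are illustrative and do not come from the paper.

```python
def mota(false_negatives, false_positives, id_switches, ground_truth_objects):
    """Multiple Object Tracking Accuracy: 1 - (FN + FP + IDSW) / GT.

    All counts are totals summed over every frame of the sequence.
    """
    if ground_truth_objects <= 0:
        raise ValueError("need at least one ground-truth object")
    errors = false_negatives + false_positives + id_switches
    return 1.0 - errors / ground_truth_objects


def motp(match_distances):
    """Multiple Object Tracking Precision as a mean position error.

    match_distances holds one distance (here in metres) for every
    matched track/ground-truth pair across all frames.
    """
    if not match_distances:
        raise ValueError("need at least one matched pair")
    return sum(match_distances) / len(match_distances)
```

With this distance-based definition of MOTP, lower values are better, whereas higher MOTA values are better.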

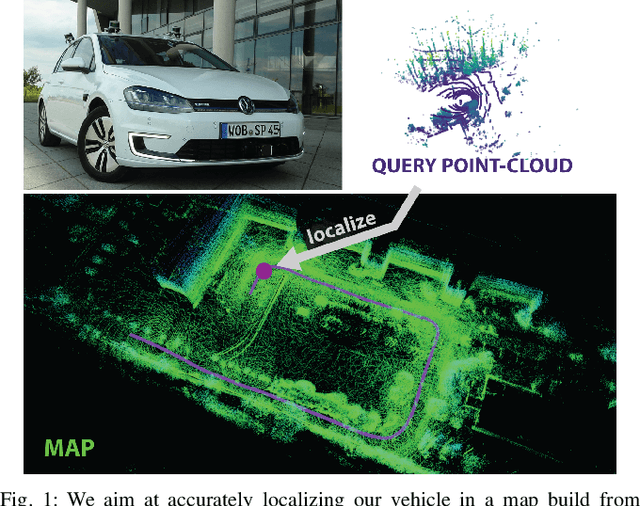

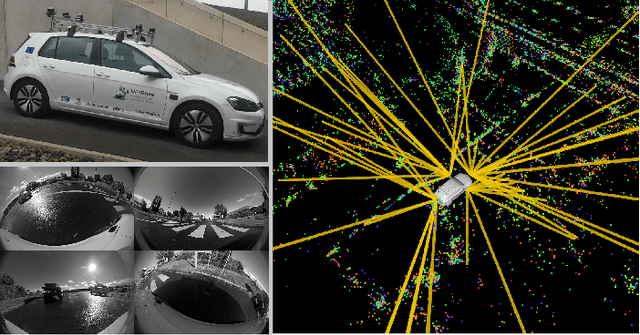

OneShot Global Localization: Instant LiDAR-Visual Pose Estimation

Mar 31, 2020

Abstract:Globally localizing in a given map is a crucial ability for robots to perform a wide range of autonomous navigation tasks. This paper presents OneShot - a global localization algorithm that uses only a single 3D LiDAR scan at a time, while outperforming approaches based on integrating a sequence of point clouds. Our approach, which does not require the robot to move, relies on learning-based descriptors of point cloud segments and computes the full 6 degree-of-freedom pose in a map. The segments are extracted from the current LiDAR scan and are matched against a database using the computed descriptors. Candidate matches are then verified with a geometric consistency test. We additionally present a strategy to further improve the performance of the segment descriptors by augmenting them with visual information provided by a camera. For this purpose, a custom-tailored neural network architecture is proposed. We demonstrate that our LiDAR-only approach outperforms a state-of-the-art baseline on a sequence of the KITTI dataset and also evaluate its performance on the challenging NCLT dataset. Finally, we show that fusing in visual information boosts segment retrieval rates by up to 26% compared to LiDAR-only description.
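The geometric consistency test mentioned in the abstract above can be illustrated with a simplified sketch. It assumes each candidate match is a pair of 3D segment centroids (one from the scan, one from the map) and keeps the matches whose distances to a seed match are preserved between scan and map, as they would be under a rigid transformation. The function name, the centroid representation, and the `tolerance` value are assumptions for illustration; the abstract does not specify the actual verification procedure.

```python
import math


def largest_consistent_set(matches, tolerance=0.4):
    """Return indices of the largest seed-consistent group of matches.

    matches: list of (scan_centroid, map_centroid) pairs, each centroid
    a 3-tuple of floats. A match j is consistent with seed i when the
    scan-side and map-side centroid distances differ by at most
    `tolerance` (hypothetical value, in metres).
    """
    best = []
    for i, (scan_i, map_i) in enumerate(matches):
        group = [i]
        for j, (scan_j, map_j) in enumerate(matches):
            if j == i:
                continue
            scan_dist = math.dist(scan_i, scan_j)
            map_dist = math.dist(map_i, map_j)
            if abs(scan_dist - map_dist) <= tolerance:
                group.append(j)
        if len(group) > len(best):
            best = group
    return sorted(best)
```

This seed-based check is weaker than requiring every pair in the group to be mutually consistent; a stricter variant would search for a maximal clique in the consistency graph.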

SegMap: Segment-based mapping and localization using data-driven descriptors

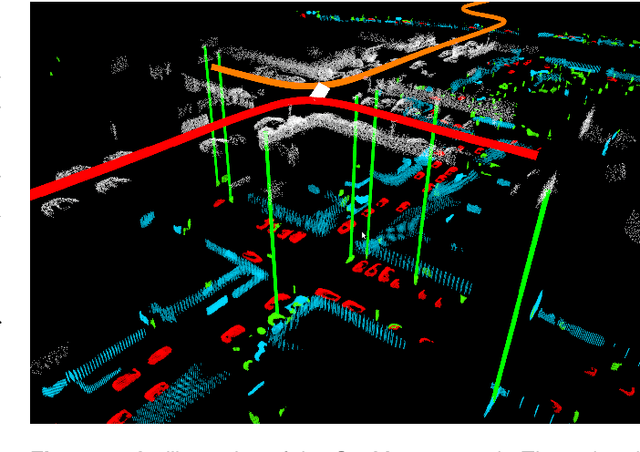

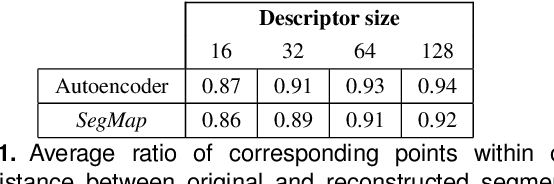

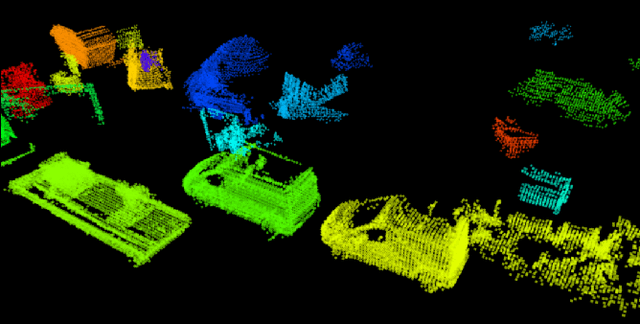

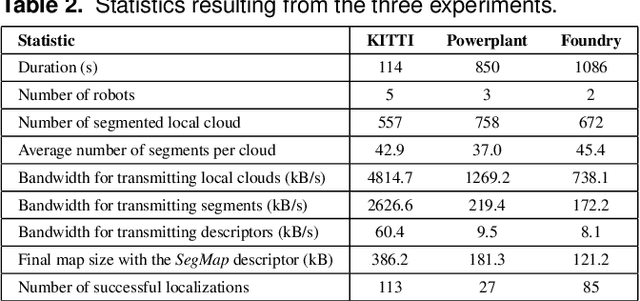

Sep 27, 2019

Abstract:Precisely estimating a robot's pose in a prior, global map is a fundamental capability for mobile robotics, e.g. autonomous driving or exploration in disaster zones. This task, however, remains challenging in unstructured, dynamic environments, where local features are not discriminative enough and global scene descriptors only provide coarse information. We therefore present SegMap: a map representation solution for localization and mapping based on the extraction of segments in 3D point clouds. Working at the level of segments offers increased invariance to view-point and local structural changes, and facilitates real-time processing of large-scale 3D data. SegMap exploits a single compact data-driven descriptor for performing multiple tasks: global localization, 3D dense map reconstruction, and semantic information extraction. The performance of SegMap is evaluated in multiple urban driving and search and rescue experiments. We show that the learned SegMap descriptor has superior segment retrieval capabilities, compared to state-of-the-art handcrafted descriptors. As a consequence, we achieve a higher localization accuracy and a 6% increase in recall over the state of the art. These segment-based localizations allow us to reduce the open-loop odometry drift by up to 50%. SegMap is available as open source, along with easy-to-run demonstrations.

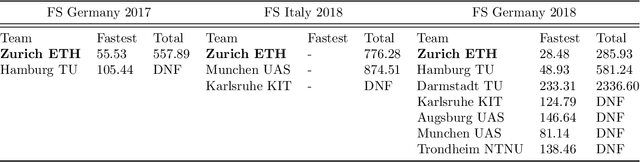

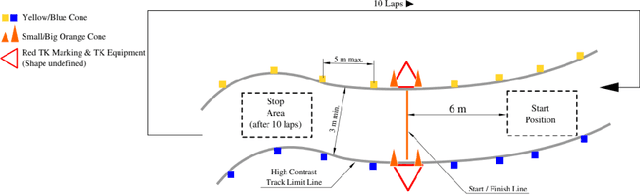

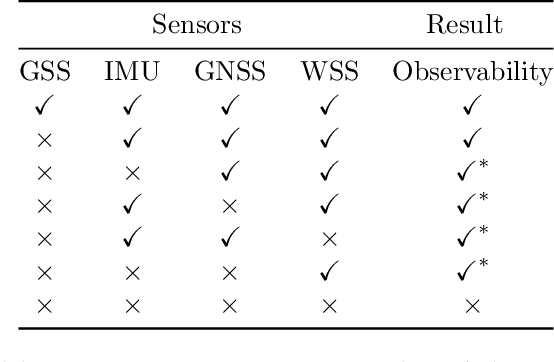

AMZ Driverless: The Full Autonomous Racing System

May 13, 2019

Abstract:This paper presents the algorithms and system architecture of an autonomous racecar. The introduced vehicle is powered by a software stack designed for robustness, reliability, and extensibility. In order to autonomously race around a previously unknown track, the proposed solution combines state-of-the-art techniques from different fields of robotics. Specifically, perception, estimation, and control are incorporated into one high-performance autonomous racecar. This complex robotic system, developed by AMZ Driverless and ETH Zurich, finished 1st overall at each competition we attended: Formula Student Germany 2017, Formula Student Italy 2018 and Formula Student Germany 2018. We discuss the findings and learnings from these competitions and present an experimental evaluation of each module of our solution.

OREOS: Oriented Recognition of 3D Point Clouds in Outdoor Scenarios

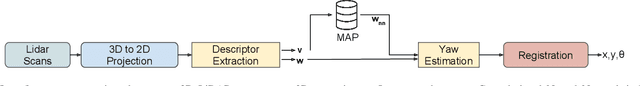

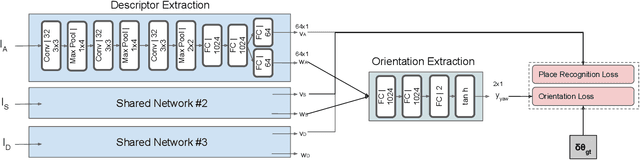

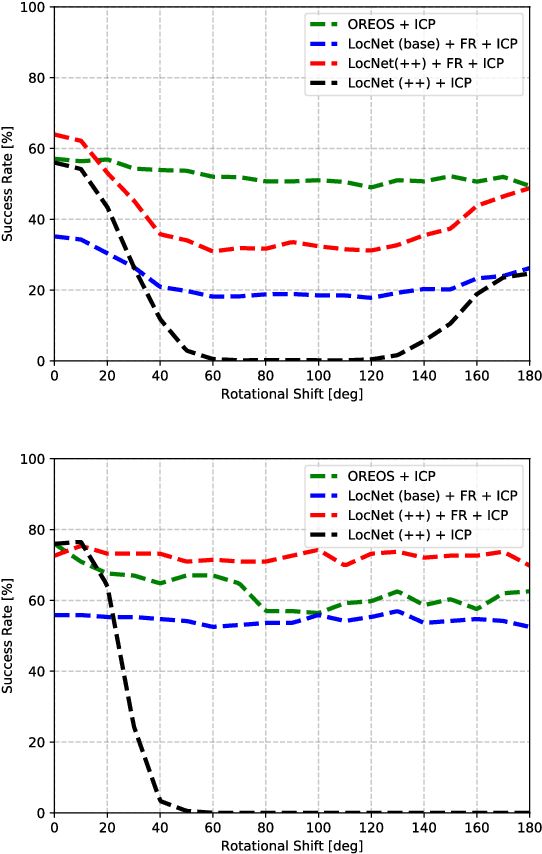

Mar 19, 2019

Abstract:We introduce a novel method for oriented place recognition with 3D LiDAR scans. A Convolutional Neural Network is trained to extract compact descriptors from single 3D LiDAR scans. These can be used both to retrieve near-by place candidates from a map, and to estimate the yaw discrepancy needed for bootstrapping local registration methods. We employ a triplet loss function for training and use a hard-negative mining strategy to further increase the performance of our descriptor extractor. In an evaluation on the NCLT and KITTI datasets, we demonstrate that our method outperforms related state-of-the-art approaches based on both data-driven and handcrafted data representation in challenging long-term outdoor conditions.
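To make the training objective named in the abstract above concrete, here is a minimal sketch of a triplet loss combined with a simple form of hard-negative mining, written for plain Python lists standing in for descriptors. The Euclidean distance, the `margin` value, and the mining rule (pick the negative closest to the anchor) are common choices assumed here for illustration; the abstract does not state which variants the authors use.

```python
import math


def euclidean(a, b):
    """Euclidean distance between two equally long descriptor vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def hardest_negative(anchor, negatives):
    """Pick the negative descriptor closest to the anchor.

    The closest negative is the one most easily confused with a true
    match, so it yields the largest loss.
    """
    return min(negatives, key=lambda n: euclidean(anchor, n))


def triplet_loss(anchor, positive, negative, margin=1.0):
    """Hinge-style triplet loss: max(0, d(a, p) - d(a, n) + margin)."""
    return max(0.0, euclidean(anchor, positive) - euclidean(anchor, negative) + margin)
```

In practice the descriptors would come from the network and the loss would be averaged over a batch; an easy negative that is already farther than the positive by more than the margin contributes zero loss, which is why mining hard negatives helps training.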

VIZARD: Reliable Visual Localization for Autonomous Vehicles in Urban Outdoor Environments

Feb 12, 2019

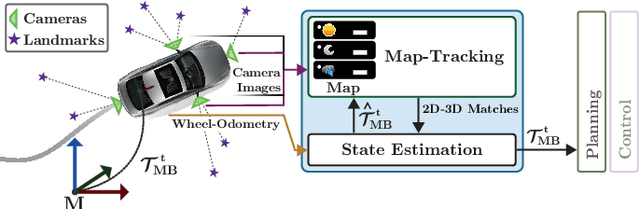

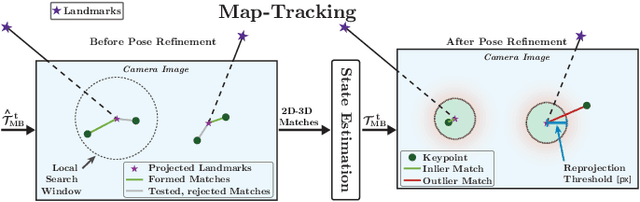

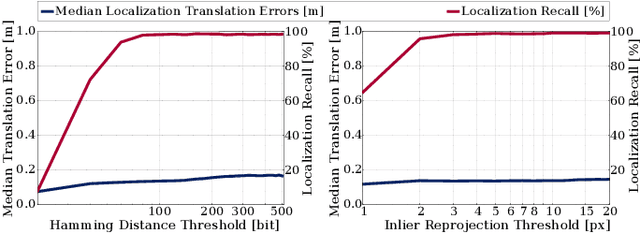

Abstract:Changes in appearance are one of the main sources of failure in visual localization systems in outdoor environments. To address this challenge, we present VIZARD, a visual localization system for urban outdoor environments. By combining a local localization algorithm with the use of multi-session maps, a high localization recall can be achieved across vastly different appearance conditions. The fusion of the visual localization constraints with wheel-odometry in a state estimation framework further guarantees smooth and accurate pose estimates. In an extensive experimental evaluation on several hundreds of driving kilometers in challenging urban outdoor environments, we analyze the recall and accuracy of our localization system, investigate its key parameters and boundary conditions, and compare different types of feature descriptors. Our results show that VIZARD is able to achieve nearly 100% recall with a localization accuracy below 0.5m under varying outdoor appearance conditions, including at night-time.

SegMatch: Segment based loop-closure for 3D point clouds

Jan 15, 2019

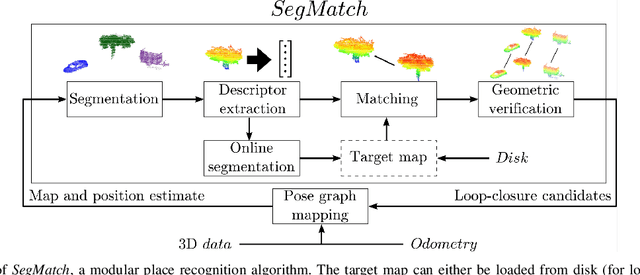

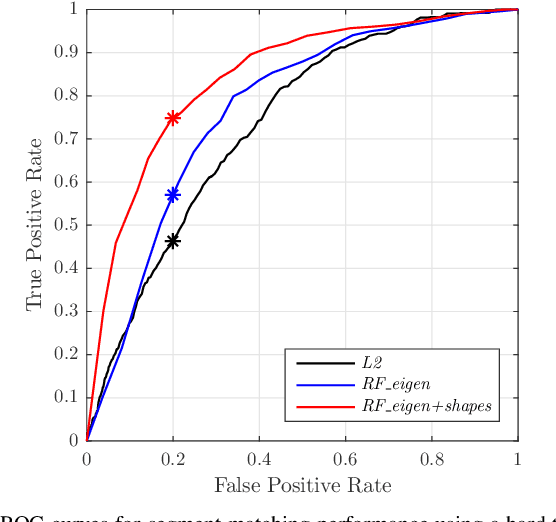

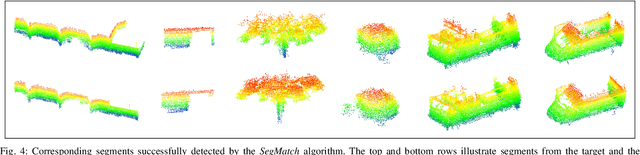

Abstract:Loop-closure detection on 3D data is a challenging task that has been commonly approached by adapting image-based solutions. Methods based on local features suffer from ambiguity and from a lack of robustness to environment changes, while methods based on global features are viewpoint dependent. We propose SegMatch, a reliable loop-closure detection algorithm based on the matching of 3D segments. Segments provide a good compromise between local and global descriptions, incorporating their strengths while reducing their individual drawbacks. SegMatch does not rely on assumptions of "perfect segmentation", or on the existence of "objects" in the environment, which allows for reliable execution on large-scale, unstructured environments. We quantitatively demonstrate that SegMatch can achieve accurate localization at a frequency of 1Hz on the largest sequence of the KITTI odometry dataset. We furthermore show how this algorithm can reliably detect and close loops in real-time, during online operation. In addition, the source code for the SegMatch algorithm will be made available after publication.
