Mehak Gupta

Personality Prediction from Life Stories using Language Models

Jun 24, 2025

Abstract:Natural Language Processing (NLP) offers new avenues for personality assessment by leveraging rich, open-ended text, moving beyond traditional questionnaires. In this study, we address the challenge of modeling long narrative interviews, each exceeding 2000 tokens, to predict Five-Factor Model (FFM) personality traits. We propose a two-step approach: first, we extract contextual embeddings using sliding-window fine-tuning of pretrained language models; then, we apply Recurrent Neural Networks (RNNs) with attention mechanisms to integrate long-range dependencies and enhance interpretability. This hybrid method effectively bridges the strengths of pretrained transformers and sequence modeling to handle long-context data. Through ablation studies and comparisons with state-of-the-art long-context models such as LLaMA and Longformer, we demonstrate improvements in prediction accuracy, efficiency, and interpretability. Our results highlight the potential of combining language-based features with long-context modeling to advance personality assessment from life narratives.
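The sliding-window step can be pictured with a minimal sketch. The abstract does not give the window size or overlap, so the function name `sliding_windows` and the values `window=512` and `stride=256` are assumptions chosen only for illustration; each resulting chunk would then be embedded by a pretrained model and the sequence of chunk embeddings passed to the RNN.

```python
from typing import List, Sequence


def sliding_windows(tokens: Sequence[int], window: int = 512, stride: int = 256) -> List[List[int]]:
    """Split a long token sequence into overlapping fixed-size windows.

    Hypothetical helper: window and stride values are illustrative assumptions.
    """
    if window <= 0 or stride <= 0:
        raise ValueError("window and stride must be positive")
    if stride > window:
        raise ValueError("a stride larger than the window would skip tokens")
    chunks = []
    start = 0
    while True:
        chunks.append(list(tokens[start:start + window]))
        # Stop once the current window reaches the end of the sequence.
        if start + window >= len(tokens):
            break
        start += stride
    return chunks


# A hypothetical 2000-token interview, represented by dummy token ids.
interview = list(range(2000))
chunks = sliding_windows(interview, window=512, stride=256)
```

With a stride of half the window, every token except those at the very start and end appears in two chunks, which gives each chunk some surrounding context at the cost of roughly doubling the number of model calls.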

Equitable Electronic Health Record Prediction with FAME: Fairness-Aware Multimodal Embedding

Jun 16, 2025

Abstract:Electronic Health Record (EHR) data encompass diverse modalities -- text, images, and medical codes -- that are vital for clinical decision-making. To process these complex data, multimodal AI (MAI) has emerged as a powerful approach for fusing such information. However, most existing MAI models optimize for better prediction performance, potentially reinforcing biases across patient subgroups. Although bias-reduction techniques for multimodal models have been proposed, the individual strengths of each modality and their interplay in both reducing bias and optimizing performance remain underexplored. In this work, we introduce FAME (Fairness-Aware Multimodal Embeddings), a framework that explicitly weights each modality according to its fairness contribution. FAME optimizes both performance and fairness by incorporating a combined loss function. We leverage the Error Distribution Disparity Index (EDDI) to measure fairness across subgroups and propose a sign-agnostic aggregation method to balance fairness across subgroups, ensuring equitable model outcomes. We evaluate FAME with BEHRT and BioClinicalBERT, combining structured and unstructured EHR data, and demonstrate its effectiveness in terms of performance and fairness compared with other baselines across multiple EHR prediction tasks.
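To illustrate why a sign-agnostic aggregation matters, here is a rough sketch. The abstract does not define EDDI or the aggregation, so the per-subgroup formula below (subgroup error rate minus overall error rate, normalized) and the root-sum-of-squares aggregate are assumptions made for illustration, and all function names are hypothetical.

```python
import math
from typing import Dict, List


def subgroup_disparities(y_true: List[int], y_pred: List[int], groups: List[str]) -> Dict[str, float]:
    """Signed error-rate gap of each subgroup relative to the overall error rate.

    Assumed definition for illustration; not taken from the abstract.
    """
    errors = [int(t != p) for t, p in zip(y_true, y_pred)]
    overall = sum(errors) / len(errors)
    norm = max(overall, 1.0 - overall)  # always >= 0.5, so never zero
    result = {}
    for g in sorted(set(groups)):
        group_errors = [e for e, gg in zip(errors, groups) if gg == g]
        result[g] = (sum(group_errors) / len(group_errors) - overall) / norm
    return result


def sign_agnostic_aggregate(disparities: Dict[str, float]) -> float:
    """Aggregate so that positive and negative gaps cannot cancel (assumed form)."""
    return math.sqrt(sum(d * d for d in disparities.values())) / len(disparities)


y_true = [1, 0, 1, 0, 1, 0, 1, 0]
y_pred = [0, 1, 1, 0, 1, 0, 1, 0]  # both errors fall in subgroup "a"
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

gaps = subgroup_disparities(y_true, y_pred, groups)
signed_total = sum(gaps.values())          # gaps cancel out
fairness_penalty = sign_agnostic_aggregate(gaps)  # stays positive
```

In this toy example the signed gaps of the two subgroups sum to zero even though one subgroup receives all the errors, while the sign-agnostic aggregate remains positive and could be added to a task loss as a penalty term.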

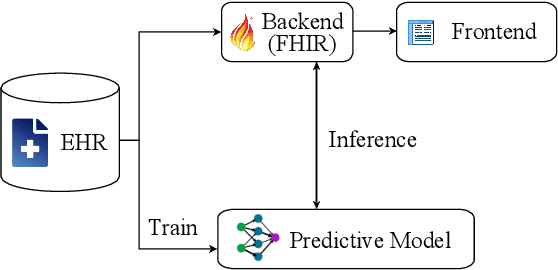

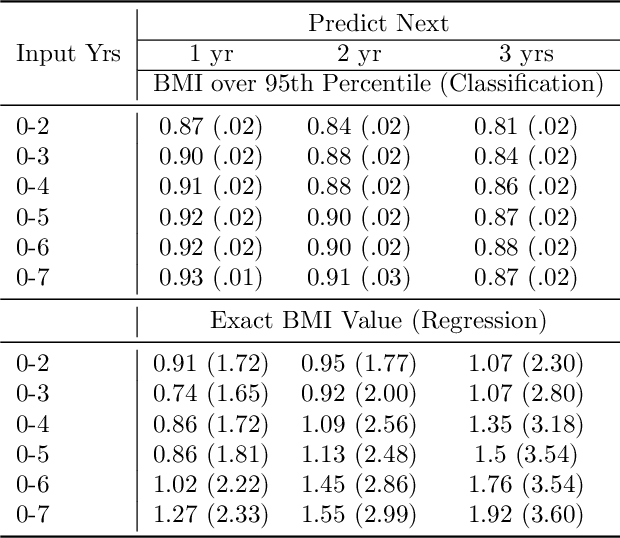

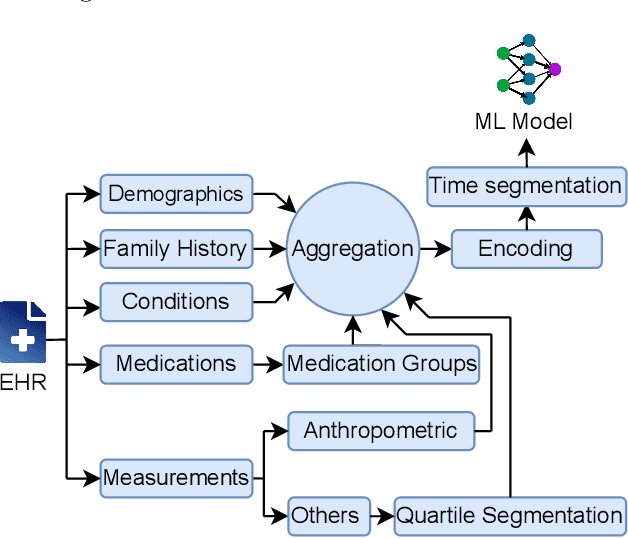

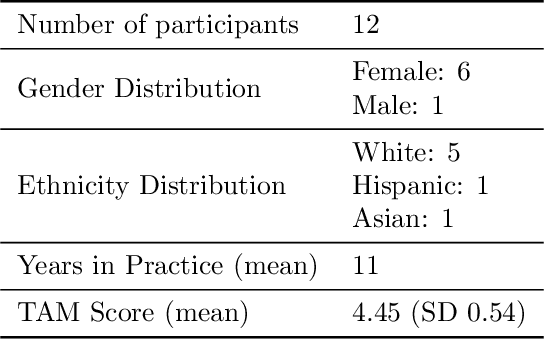

An Interoperable Machine Learning Pipeline for Pediatric Obesity Risk Estimation

Dec 12, 2024

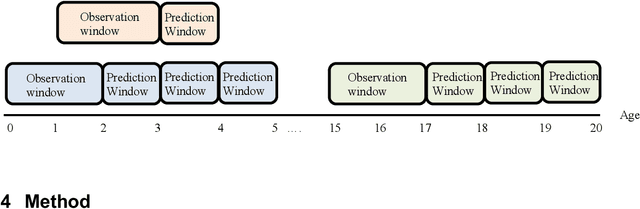

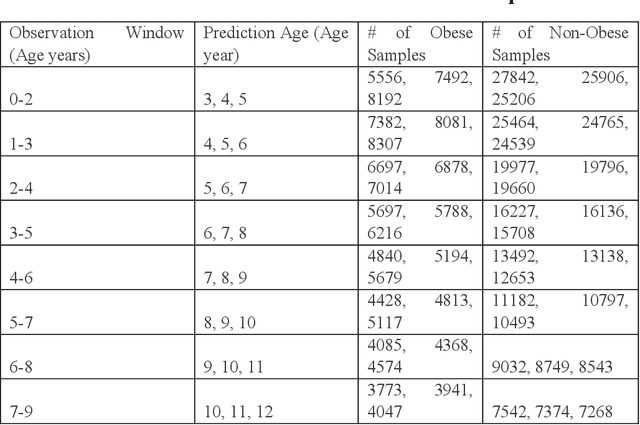

Abstract:Reliable prediction of pediatric obesity can offer a valuable resource to providers, helping them engage in timely preventive interventions before the disease is established. Many efforts have been made to develop ML-based predictive models of obesity, and some studies have reported high predictive performance. However, no commonly used clinical decision support tool based on existing ML models currently exists. This study presents a novel end-to-end pipeline specifically designed for pediatric obesity prediction, which supports the entire process of data extraction, inference, and communication via an API or a user interface. While focusing only on routinely recorded data in pediatric electronic health records (EHRs), our pipeline uses a diverse expert-curated list of medical concepts to predict the risk of developing obesity within 1-3 years. Furthermore, by using the Fast Healthcare Interoperability Resources (FHIR) standard in our design procedure, we specifically target low-effort integration of our pipeline with different EHR systems. In our experiments, we report the effectiveness of the predictive model as well as its alignment with the feedback from various stakeholders, including ML scientists, providers, health IT personnel, health administration representatives, and patient group representatives.

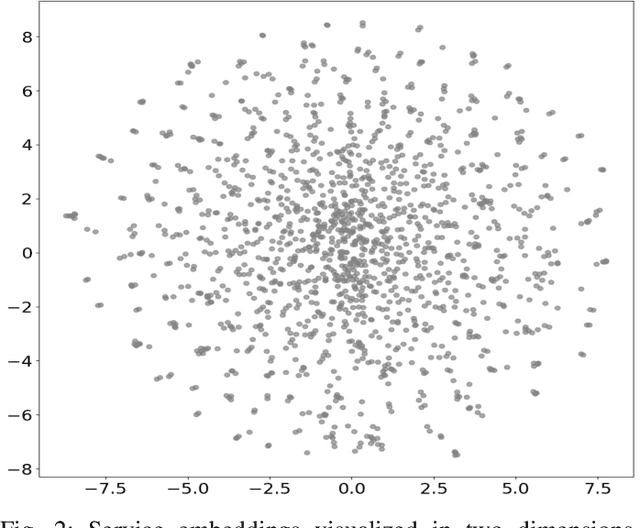

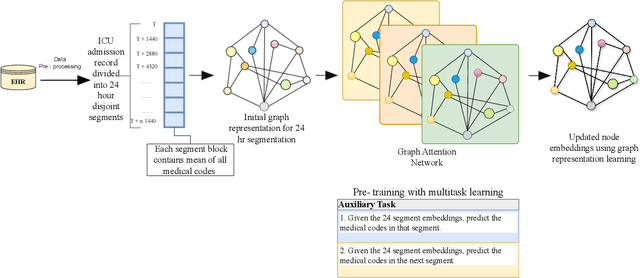

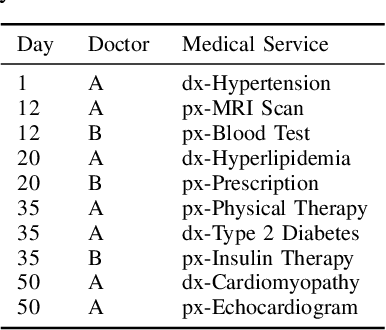

HealthGAT: Node Classifications in Electronic Health Records using Graph Attention Networks

Mar 26, 2024

Abstract:While electronic health records (EHRs) are widely used across various applications in healthcare, most applications use the EHRs in their raw (tabular) format. Relying on raw or simple data pre-processing can greatly limit the performance or even applicability of downstream tasks using EHRs. To address this challenge, we present HealthGAT, a novel graph attention network framework that utilizes a hierarchical approach to generate embeddings from EHR, surpassing traditional graph-based methods. Our model iteratively refines the embeddings for medical codes, resulting in improved EHR data analysis. We also introduce customized EHR-centric auxiliary pre-training tasks to leverage the rich medical knowledge embedded within the data. This approach provides a comprehensive analysis of complex medical relationships and offers significant advancement over standard data representation techniques. HealthGAT has demonstrated its effectiveness in various healthcare scenarios through comprehensive evaluations against established methodologies. Specifically, our model shows outstanding performance in node classification and downstream tasks such as predicting readmissions and diagnosis classifications. Our code is available at https://github.com/healthylaife/HealthGAT

An Extensive Data Processing Pipeline for MIMIC-IV

Apr 29, 2022

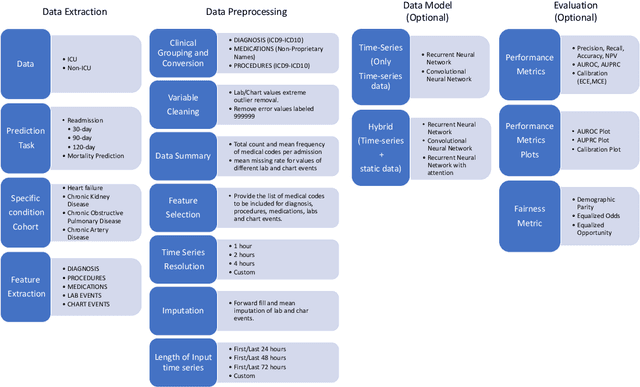

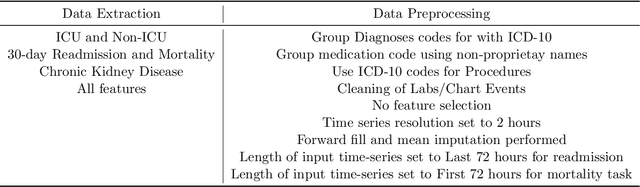

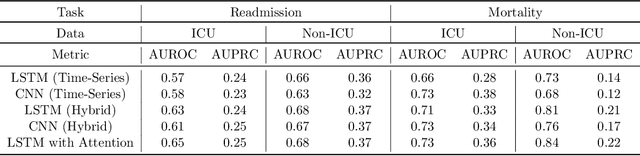

Abstract:An increasing amount of research is being devoted to applying machine learning methods to electronic health record (EHR) data for various clinical tasks. This growing area of research has exposed the limitation of accessibility of EHR datasets for all, as well as the reproducibility of different modeling frameworks. One reason for these limitations is the lack of standardized pre-processing pipelines. MIMIC is a freely available EHR dataset in a raw format that has been used in numerous studies. The absence of standardized pre-processing steps serves as a major barrier to the wider adoption of the dataset. It also leads to different cohorts being used in downstream tasks, limiting the ability to compare the results among similar studies. Contrasting studies also use various distinct performance metrics, which can greatly reduce the ability to compare model results. In this work, we provide an end-to-end fully customizable pipeline to extract, clean, and pre-process data; and to predict and evaluate the fourth version of the MIMIC dataset (MIMIC-IV) for ICU and non-ICU-related clinical time-series prediction tasks.

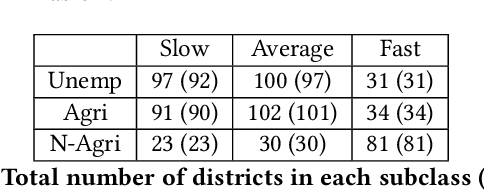

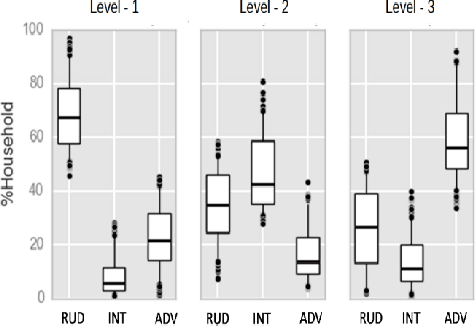

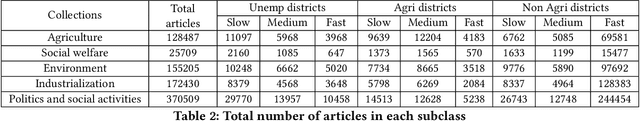

Exploring the Scope of Using News Articles to Understand Development Patterns of Districts in India

Jul 03, 2021

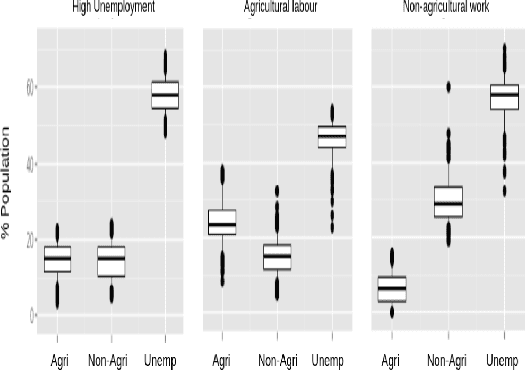

Abstract:Efforts to understand what factors bring about socio-economic development often suffer from the streetlight effect: analyzing the effect of only those variables that have been measured and are therefore available for analysis. How do we check whether all worthwhile variables have been instrumented and considered when building an econometric development model? We attempt to address this question by building unsupervised learning methods to identify and rank news articles about diverse events occurring in different districts of India, which can provide insights about what may have transpired in the districts. This can help determine whether variables related to these events are indeed available to model the development of these districts. We also describe several other applications that emerge from this approach, such as using news articles to understand why pairs of districts that had similar socio-economic indicators approximately ten years ago ended up at different levels of development today, and generating a newsfeed of unusual news articles that do not conform to news articles about typical districts with a similar socio-economic profile. These applications outline the need for qualitative data to augment models based on quantitative data, and are meant to open up research on new ways to mine information from unstructured qualitative data to understand development.

* 11 pages of main text, 4 pages of supplementary material

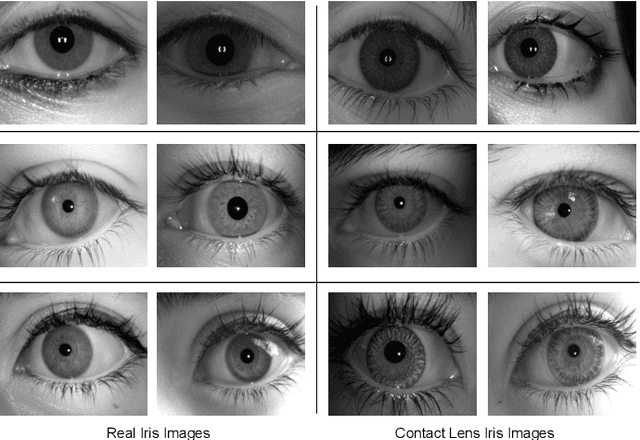

Generalized Iris Presentation Attack Detection Algorithm under Cross-Database Settings

Oct 25, 2020

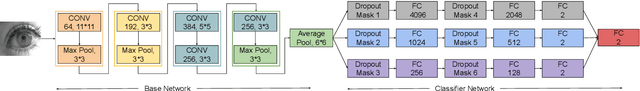

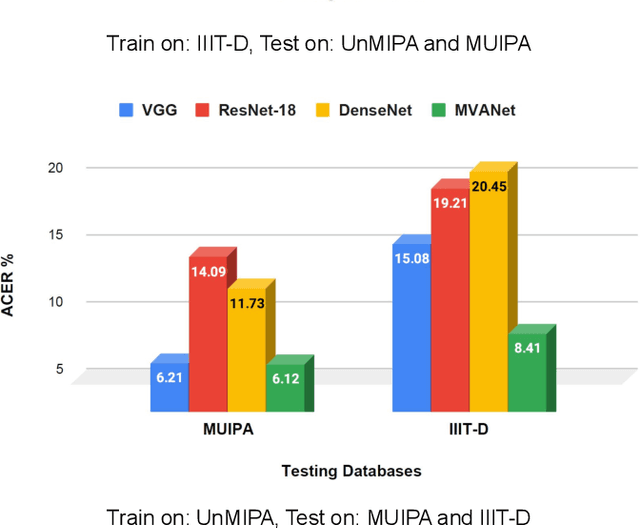

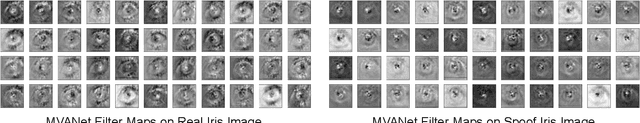

Abstract:Presentation attacks pose major challenges to most biometric modalities. Iris recognition, which is considered one of the most accurate biometric modalities for person identification, has also been shown to be vulnerable to advanced presentation attacks such as 3D contact lenses and textured lenses. Although several presentation attack detection (PAD) algorithms have been presented in the literature, a significant limitation is their generalizability to unseen databases, unseen sensors, and different imaging environments. To address this challenge, we propose a generalized deep learning-based PAD network, MVANet, which utilizes multiple representation layers. It is inspired by the simplicity and success of hybrid algorithms, or the fusion of multiple detection networks. Computational complexity is an essential factor in training deep neural networks; therefore, to reduce it while learning multiple feature representation layers, a fixed base model is used. The performance of the proposed network is demonstrated on multiple databases, such as the IIITD-WVU MUIPA and IIITD-CLI databases, under cross-database training-testing settings to assess the generalizability of the proposed algorithm.

Time-series Imputation and Prediction with Bi-Directional Generative Adversarial Networks

Sep 18, 2020

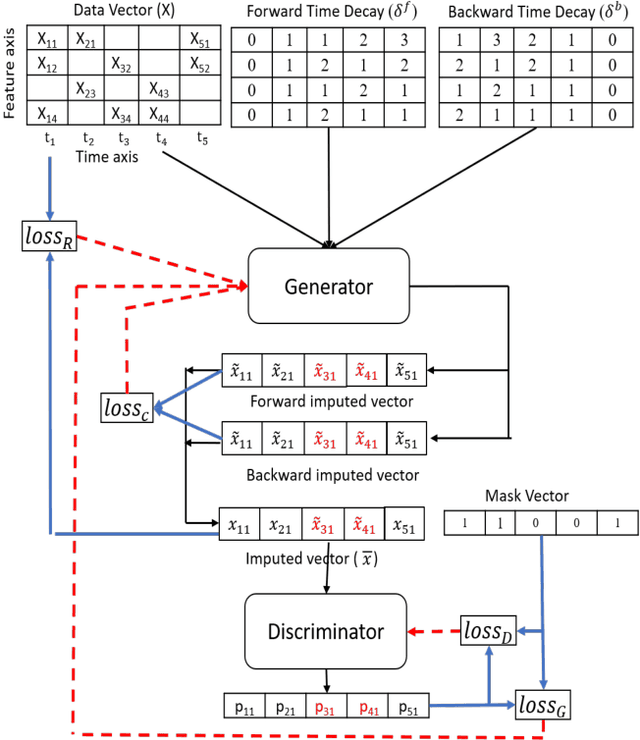

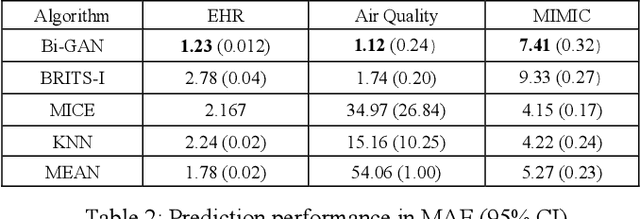

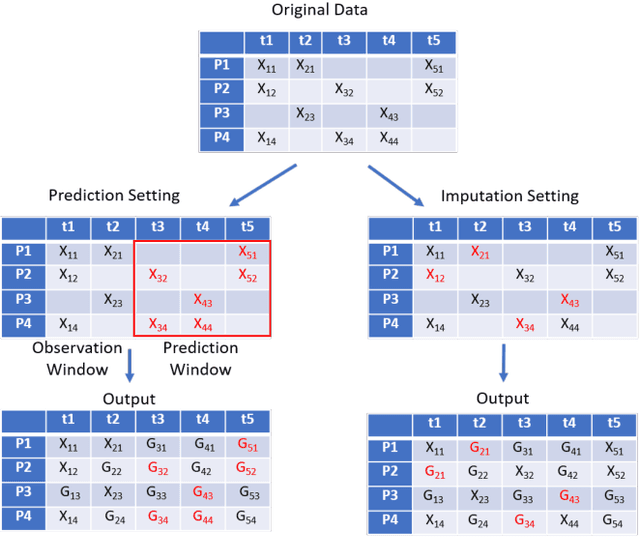

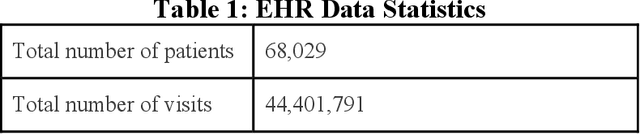

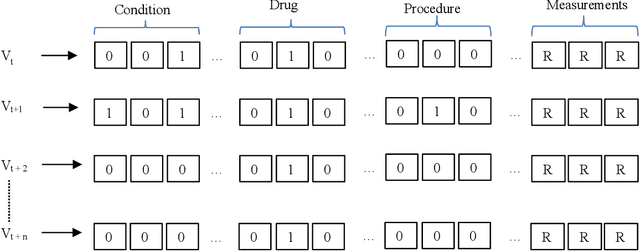

Abstract:Multivariate time-series data are used in many classification and regression predictive tasks, and recurrent models have been widely used for such tasks. Most common recurrent models assume that time-series data elements are of equal length and that the ordered observations are recorded at regular intervals. However, real-world time-series data have neither a similar length nor the same number of observations. They also have missing entries, which hinder the performance of predictive tasks. In this paper, we approach these issues by presenting a model for the combined task of imputing and predicting values for irregularly observed, varying-length time-series data with missing entries. Our proposed model (Bi-GAN) uses a bidirectional recurrent network in a generative adversarial setting. The generator is a bidirectional recurrent network that receives actual incomplete data and imputes the missing values. The discriminator attempts to discriminate between the actual and the imputed values in the output of the generator. Our model learns how to impute missing elements in between (imputation) or outside of the input time steps (prediction), hence working as an effective any-time prediction tool for time-series data. Our method has three advantages over the state-of-the-art methods in the field: (a) a single model can be used for both imputation and prediction tasks; (b) it can perform prediction for time series of varying length with missing data; (c) it does not require knowing the observation and prediction time windows during training, which provides a flexible prediction window length for both long-term and short-term predictions. We evaluate our model on two public datasets and on another large real-world electronic health records dataset to impute and predict body mass index (BMI) values in children and show its superior performance in both settings.
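The idea of keeping observed values and letting a model fill only the gaps can be sketched as follows. This is not the Bi-GAN generator: a trivial mean imputer stands in for it, and the names `mean_imputer` and `fill_missing`, as well as the sample BMI values, are hypothetical and used only for illustration.

```python
from typing import List, Optional


def mean_imputer(series: List[Optional[float]]) -> List[float]:
    """Stand-in for a learned generator: proposes the observed mean at every step."""
    observed = [v for v in series if v is not None]
    if not observed:
        raise ValueError("series has no observed values")
    mean = sum(observed) / len(observed)
    return [mean] * len(series)


def fill_missing(series: List[Optional[float]], proposals: List[float]) -> List[float]:
    """Keep every observed value; use the proposal only where the entry is missing."""
    return [p if v is None else v for v, p in zip(series, proposals)]


# Hypothetical BMI measurements with gaps (None marks a missing visit).
bmi = [16.0, None, 17.0, None, None, 18.0]
completed = fill_missing(bmi, mean_imputer(bmi))
```

In an adversarial setup, a discriminator would then try to tell which entries of `completed` were observed and which were filled in, pushing the generator toward more plausible proposals than a constant mean.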

Obesity Prediction with EHR Data: A deep learning approach with interpretable elements

Jan 30, 2020

Abstract:Childhood obesity is a major public health challenge. Obesity in early childhood and adolescence can lead to obesity and other health problems in adulthood. Early prediction and identification of children at high risk of developing childhood obesity may help in engaging earlier and more effective interventions to prevent and manage this and other related health conditions. Existing predictive tools designed for childhood obesity primarily rely on traditional regression-type methods without exploiting longitudinal patterns of children's data (ignoring data temporality). In this paper, we present a machine learning model specifically designed for predicting future obesity patterns from generally available items in children's medical history. To do this, we have used a large unaugmented EHR (Electronic Health Record) dataset from a major pediatric health system in the US. We adopt a general LSTM (long short-term memory) network architecture for our model for training over dynamic (sequential) and static (demographic) EHR data. We have additionally included a set of embedding and attention layers to compute the feature ranking of each timestamp and the attention scores of each hidden layer corresponding to each input timestamp. These feature rankings and attention scores add interpretability at both the feature and timestamp levels.
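How attention scores over timestamps can support interpretation is shown in the minimal sketch below. It uses generic dot-product attention with a softmax, which is an assumption and not necessarily the layer used in the paper; the hidden states, the query vector, and the function names are hypothetical.

```python
import math
from typing import List, Tuple


def softmax(scores: List[float]) -> List[float]:
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_pool(hidden_states: List[List[float]], query: List[float]) -> Tuple[List[float], List[float]]:
    """Return per-timestamp attention weights and the weighted sum of hidden states."""
    scores = [sum(h_d * q_d for h_d, q_d in zip(h, query)) for h in hidden_states]
    weights = softmax(scores)
    dim = len(query)
    context = [sum(w * h[d] for w, h in zip(weights, hidden_states)) for d in range(dim)]
    return weights, context


# Hypothetical hidden states for three visits (timestamps), two dimensions each.
hidden = [[0.1, 0.0], [0.9, 0.4], [0.2, 0.1]]
query = [1.0, 1.0]  # assumed learned vector, fixed here for illustration

weights, context = attention_pool(hidden, query)
most_influential_visit = weights.index(max(weights))
```

Because the weights sum to one, they can be read as the relative contribution of each visit to the pooled representation, which is what makes a timestamp-level ranking possible.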

AMZ Driverless: The Full Autonomous Racing System

May 13, 2019

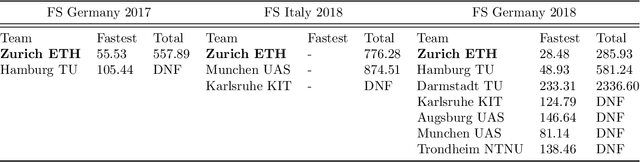

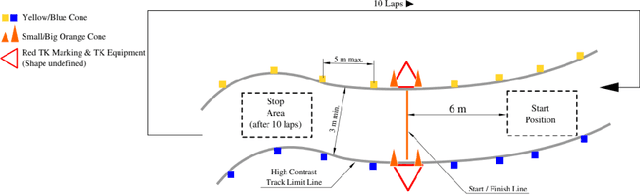

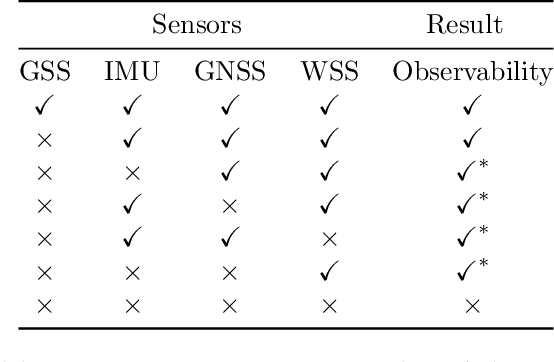

Abstract:This paper presents the algorithms and system architecture of an autonomous racecar. The introduced vehicle is powered by a software stack designed for robustness, reliability, and extensibility. In order to autonomously race around a previously unknown track, the proposed solution combines state of the art techniques from different fields of robotics. Specifically, perception, estimation, and control are incorporated into one high-performance autonomous racecar. This complex robotic system, developed by AMZ Driverless and ETH Zurich, finished 1st overall at each competition we attended: Formula Student Germany 2017, Formula Student Italy 2018 and Formula Student Germany 2018. We discuss the findings and learnings from these competitions and present an experimental evaluation of each module of our solution.
