Hubertus Franciscus Cornelis Hendrikx

Dynamic Object Aware LiDAR SLAM based on Automatic Generation of Training Data

Apr 08, 2021

Abstract: Highly dynamic environments, with moving objects such as cars or humans, can pose a performance challenge for LiDAR SLAM systems that assume largely static scenes. To overcome this challenge and support the deployment of robots in real-world scenarios, we propose a complete solution for a dynamic object aware LiDAR SLAM algorithm. This is achieved by leveraging a real-time capable neural network that can detect dynamic objects, thus allowing our system to deal with them explicitly. To efficiently generate the necessary training data, which is key to our approach, we present a novel end-to-end occupancy-grid-based pipeline that can automatically label a wide variety of arbitrary dynamic objects. Our solution can thus generalize to different environments without the need for expensive manual labeling and at the same time avoids assumptions about the presence of a predefined set of known objects in the scene. Using this technique, we automatically label over 12000 LiDAR scans collected in an urban environment with a large number of pedestrians and use this data to train a neural network, achieving an average segmentation IoU of 0.82. We show that explicitly dealing with dynamic objects can improve the LiDAR SLAM odometry performance by 39.6% while yielding maps that better represent the environments. A supplementary video as well as our test data are available online.

AMZ Driverless: The Full Autonomous Racing System

May 13, 2019

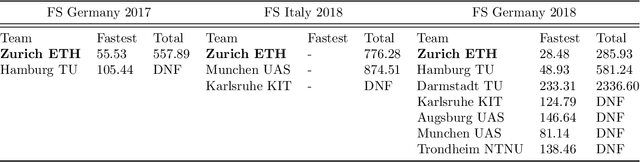

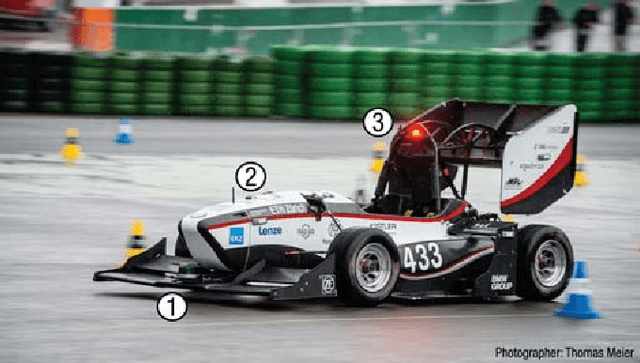

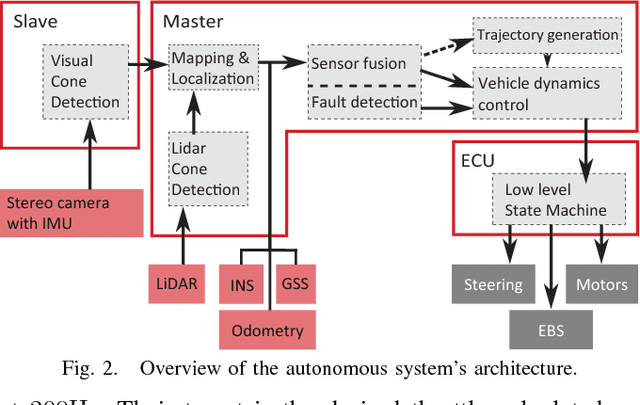

Abstract: This paper presents the algorithms and system architecture of an autonomous racecar. The introduced vehicle is powered by a software stack designed for robustness, reliability, and extensibility. In order to autonomously race around a previously unknown track, the proposed solution combines state-of-the-art techniques from different fields of robotics. Specifically, perception, estimation, and control are incorporated into one high-performance autonomous racecar. This complex robotic system, developed by AMZ Driverless and ETH Zurich, finished 1st overall at each competition we attended: Formula Student Germany 2017, Formula Student Italy 2018 and Formula Student Germany 2018. We discuss the findings and learnings from these competitions and present an experimental evaluation of each module of our solution.

Design of an Autonomous Racecar: Perception, State Estimation and System Integration

Apr 09, 2018

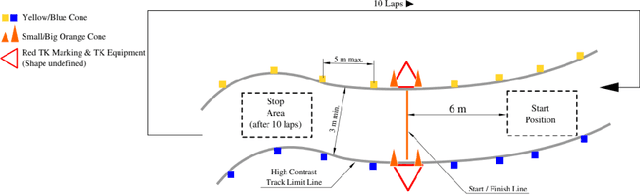

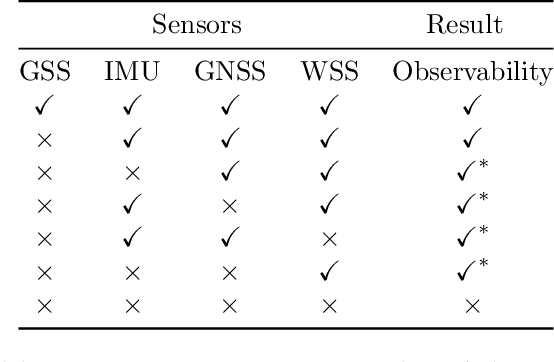

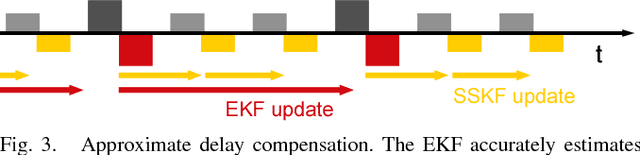

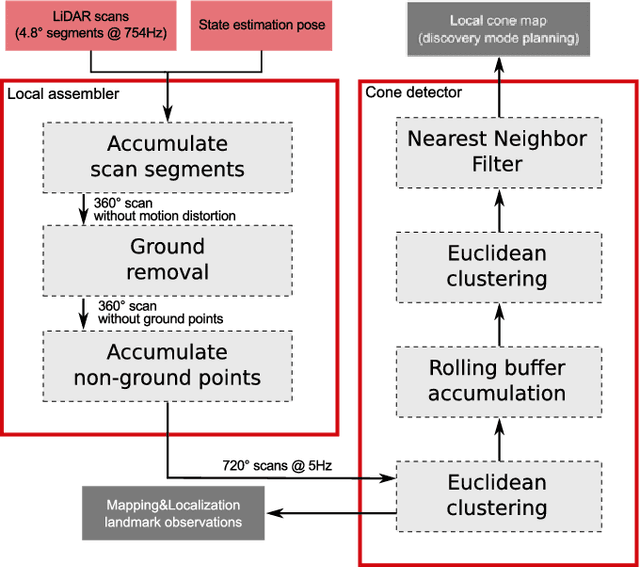

Abstract: This paper introduces flüela driverless: the first autonomous racecar to win a Formula Student Driverless competition. In this competition, among other challenges, an autonomous racecar is tasked to complete 10 laps of a previously unknown racetrack as fast as possible and using only onboard sensing and computing. The key components of flüela's design are its modular redundant sub-systems that allow robust performance despite challenging perceptual conditions or partial system failures. The paper presents the integration of key components of our autonomous racecar, i.e., system design, EKF-based state estimation, LiDAR-based perception, and particle filter-based SLAM. We perform an extensive experimental evaluation on real-world data, demonstrating the system's effectiveness by outperforming the next-best ranking team by almost half the time required to finish a lap. The autonomous racecar reaches lateral and longitudinal accelerations comparable to those achieved by experienced human drivers.
