Hannes Sommer

Multiple Hypothesis Semantic Mapping for Robust Data Association

Dec 08, 2020

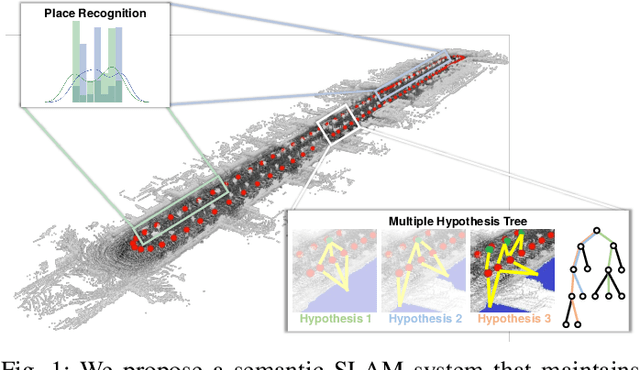

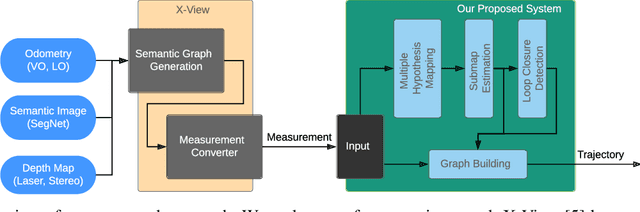

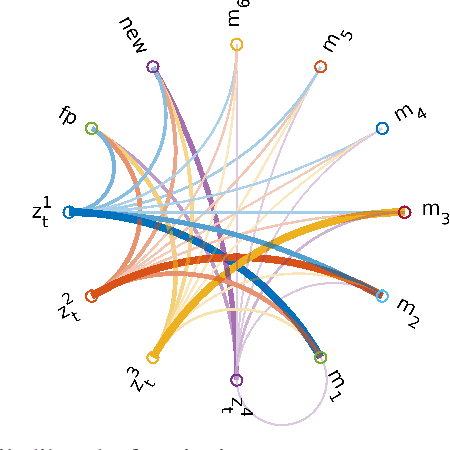

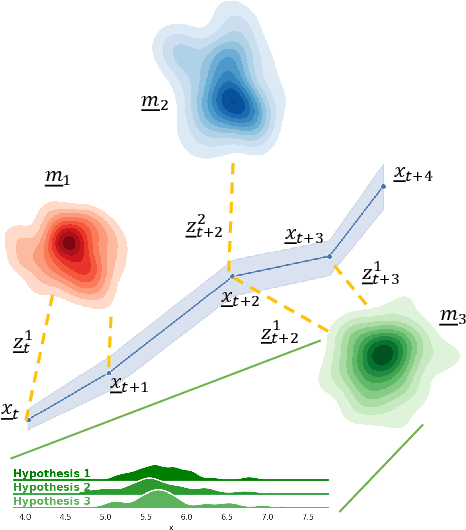

Abstract: In this paper, we present a semantic mapping approach with multiple hypothesis tracking for data association. As semantic information has the potential to overcome ambiguity in measurements and place recognition, it forms an eminent modality for autonomous systems. This is particularly evident in urban scenarios with several similar-looking surroundings. Nevertheless, it requires the handling of a non-Gaussian and discrete random variable coming from object detectors. Previous methods use semantic information for global localization and data association to reduce the instance ambiguity between the landmarks. However, many of these approaches do not deal with the creation of complete globally consistent representations of the environment and typically do not scale well. We utilize multiple hypothesis trees to derive a probabilistic data association for semantic measurements by means of position, instance and class to create a semantic representation. We propose an optimized mapping method and make use of a pose graph to derive a novel semantic SLAM solution. Furthermore, we show that semantic covisibility graphs allow for a precise place recognition in urban environments. We verify our approach using a real-world outdoor dataset and demonstrate an average drift reduction of 33 % w.r.t. the raw odometry source. Moreover, our approach produces 55 % fewer hypotheses on average than a regular multiple hypotheses approach.

SegMap: Segment-based mapping and localization using data-driven descriptors

Sep 27, 2019

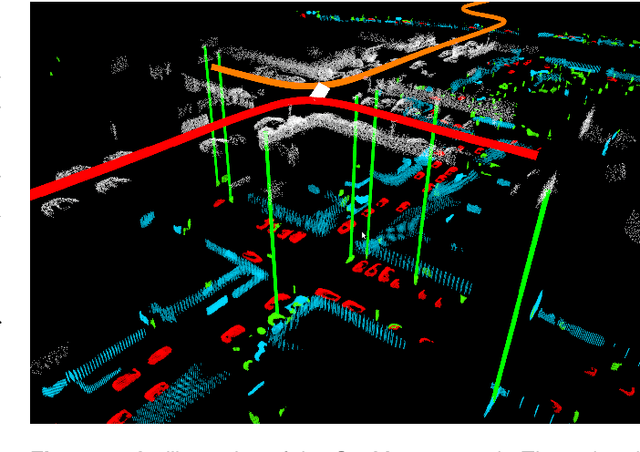

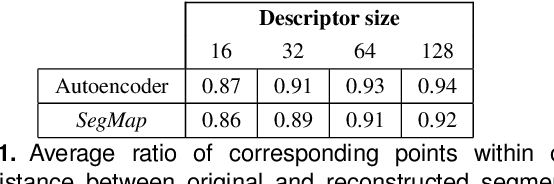

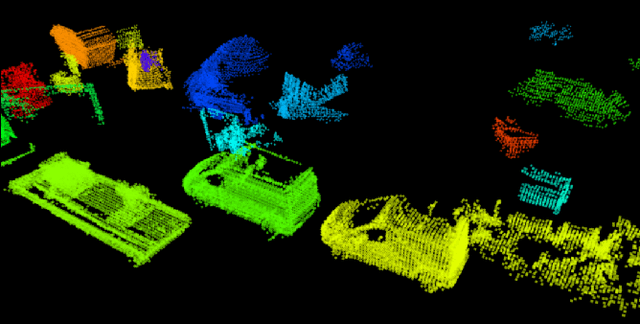

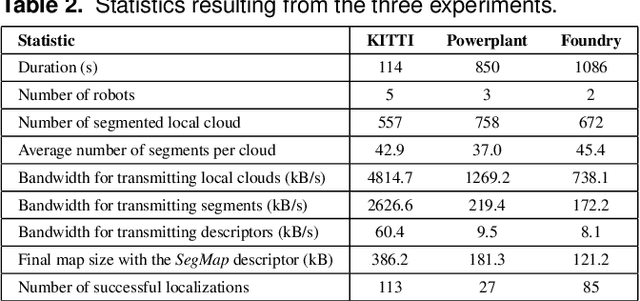

Abstract: Precisely estimating a robot's pose in a prior, global map is a fundamental capability for mobile robotics, e.g. autonomous driving or exploration in disaster zones. This task, however, remains challenging in unstructured, dynamic environments, where local features are not discriminative enough and global scene descriptors only provide coarse information. We therefore present SegMap: a map representation solution for localization and mapping based on the extraction of segments in 3D point clouds. Working at the level of segments offers increased invariance to view-point and local structural changes, and facilitates real-time processing of large-scale 3D data. SegMap exploits a single compact data-driven descriptor for performing multiple tasks: global localization, 3D dense map reconstruction, and semantic information extraction. The performance of SegMap is evaluated in multiple urban driving and search and rescue experiments. We show that the learned SegMap descriptor has superior segment retrieval capabilities compared to state-of-the-art handcrafted descriptors. In consequence, we achieve a higher localization accuracy and a 6% increase in recall over state-of-the-art. These segment-based localizations allow us to reduce the open-loop odometry drift by up to 50%. SegMap is available open source, along with easy-to-run demonstrations.

Learning 3D Segment Descriptors for Place Recognition

Apr 24, 2018

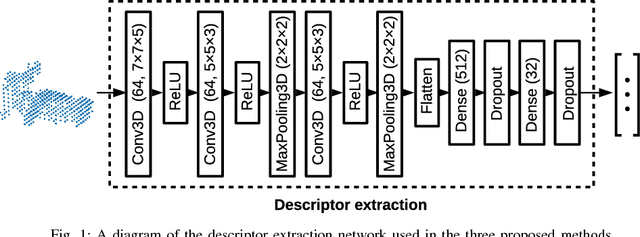

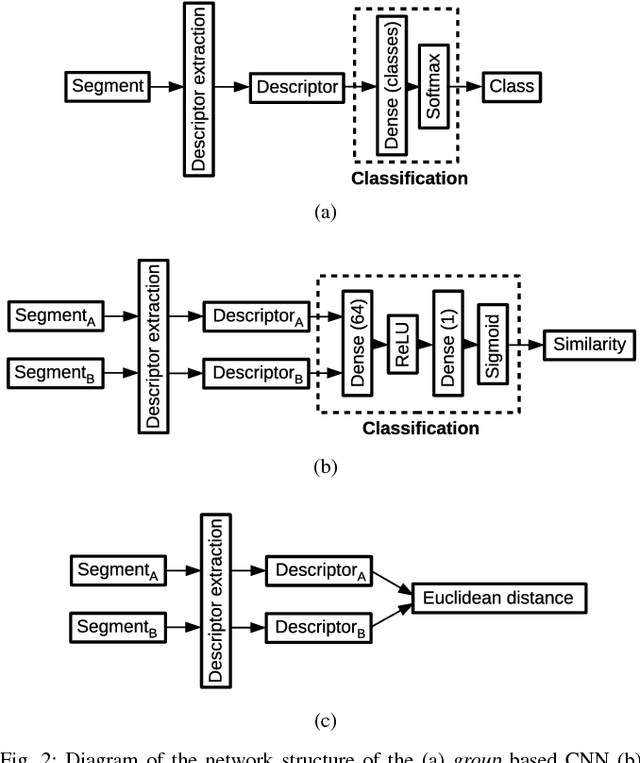

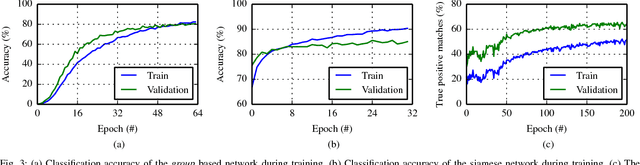

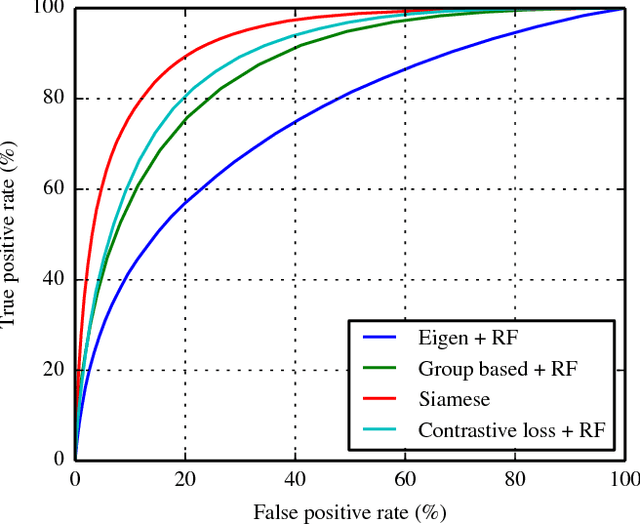

Abstract: In the absence of global positioning information, place recognition is a key capability for enabling localization, mapping and navigation in any environment. Most place recognition methods rely on images, point clouds, or a combination of both. In this work we leverage a segment extraction and matching approach to achieve place recognition in Light Detection and Ranging (LiDAR) based 3D point cloud maps. One challenge related to this approach is the recognition of segments despite changes in point of view or occlusion. We propose using a learning-based method in order to reach a higher recall accuracy than previously proposed methods. Using Convolutional Neural Networks (CNNs), which are state-of-the-art classifiers, we propose a new approach to segment recognition based on learned descriptors. In this paper we compare the effectiveness of three different structures and training methods for CNNs. We demonstrate through several experiments on real-world data collected in an urban driving scenario that the proposed learning-based methods outperform hand-crafted descriptors.

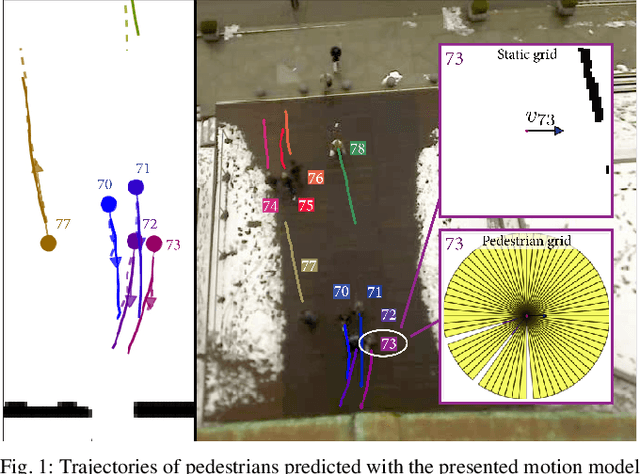

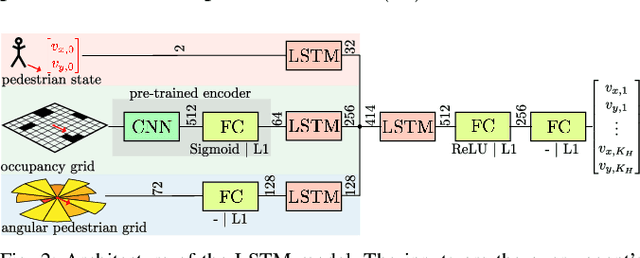

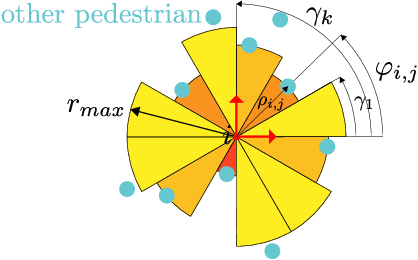

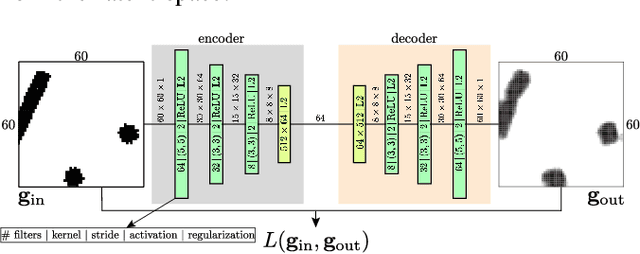

A Data-driven Model for Interaction-aware Pedestrian Motion Prediction in Object Cluttered Environments

Feb 26, 2018

Abstract: This paper reports on a data-driven, interaction-aware motion prediction approach for pedestrians in environments cluttered with static obstacles. When navigating in such workspaces shared with humans, robots need accurate motion predictions of the surrounding pedestrians. Human navigation behavior is mostly influenced by the surrounding pedestrians and by the static obstacles in their vicinity. In this paper we introduce a new model based on Long Short-Term Memory (LSTM) neural networks, which is able to learn human motion behavior from demonstrated data. To the best of our knowledge, this is the first approach using LSTMs that incorporates both static obstacles and surrounding pedestrians for trajectory forecasting. As part of the model, we introduce a new way of encoding surrounding pedestrians based on a 1D grid in polar angle space. We evaluate the benefit of interaction-aware motion prediction and the added value of incorporating static obstacles on both simulation and real-world datasets by comparing with state-of-the-art approaches. The results show that our new approach outperforms the other approaches while being very computationally efficient, and that taking into account static obstacles for motion predictions significantly improves the prediction accuracy, especially in cluttered environments.
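The abstract does not spell out how the 1D grid in polar angle space is built. One plausible reading, offered here only as a minimal sketch, is to split the directions around the ego pedestrian into equal angular sectors and store the distance to the closest neighbor in each sector. The function name `angular_pedestrian_grid`, the parameters `num_bins` and `max_range`, and the choice of "closest distance per sector" are all assumptions made for illustration, not details taken from the paper.

```python
import math

def angular_pedestrian_grid(ego_xy, ego_heading, others_xy, num_bins=8, max_range=6.0):
    """Encode neighbors as a 1D grid over polar angle (hypothetical sketch).

    Each cell holds the distance to the closest neighbor whose bearing,
    measured relative to the ego heading, falls into that angular sector.
    Empty sectors keep the value max_range.
    """
    grid = [max_range] * num_bins
    bin_width = 2.0 * math.pi / num_bins
    for ox, oy in others_xy:
        dx, dy = ox - ego_xy[0], oy - ego_xy[1]
        dist = math.hypot(dx, dy)
        if dist >= max_range:
            continue  # neighbor is outside the sensing range (assumed cutoff)
        # Bearing relative to the ego heading, wrapped into [0, 2*pi).
        angle = (math.atan2(dy, dx) - ego_heading) % (2.0 * math.pi)
        # Clamp guards against rounding that lands exactly on 2*pi.
        idx = min(int(angle / bin_width), num_bins - 1)
        grid[idx] = min(grid[idx], dist)
    return grid

# Two neighbors straight ahead (only the closer one counts), one to the rear left.
grid = angular_pedestrian_grid((0.0, 0.0), 0.0, [(1.0, 0.0), (2.0, 0.0), (-3.0, 4.0)], num_bins=4)
```

A fixed-length vector like this stays the same size no matter how many pedestrians are nearby, which is convenient as input to a recurrent network.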

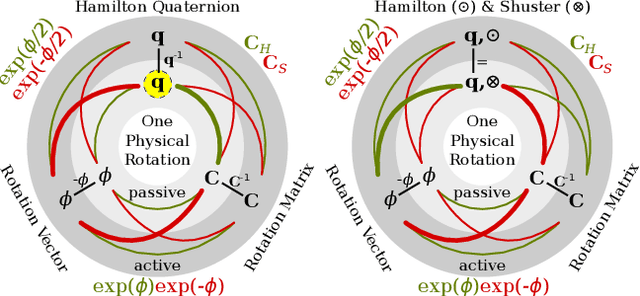

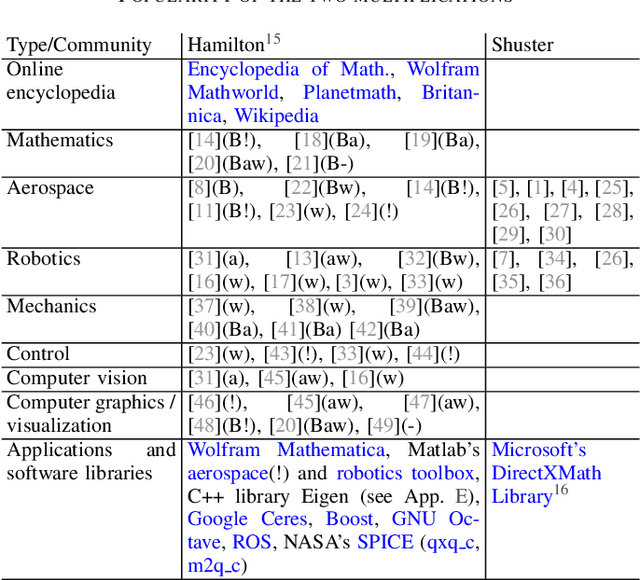

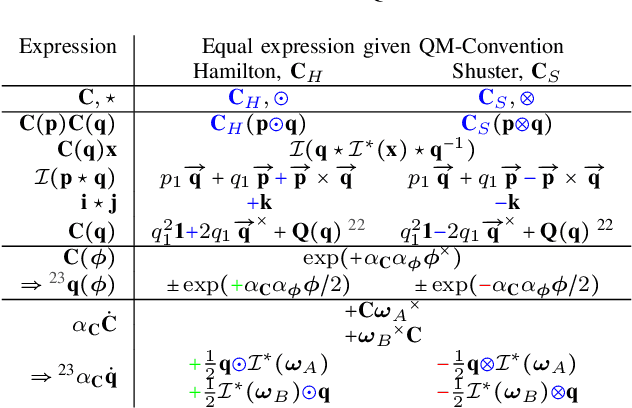

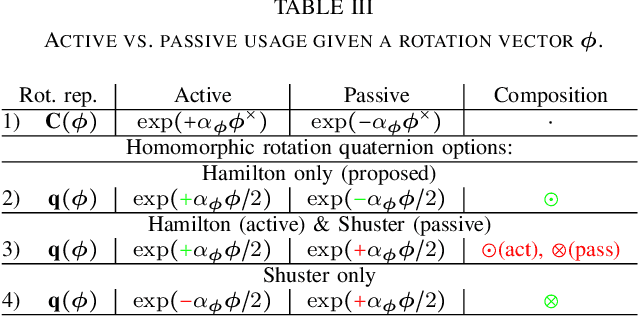

Why and How to Avoid the Flipped Quaternion Multiplication

Feb 05, 2018

Abstract: Over the last decades quaternions have become a crucial and very successful tool for attitude representation in robotics and aerospace. However, there is a major problem that is continuously causing trouble in practice when it comes to exchanging formulas or implementations: there are two quaternion multiplications in common use, Hamilton's original multiplication and its flipped version, which is often associated with NASA's Jet Propulsion Laboratory. We believe that this particular issue is completely avoidable and only exists today due to a lack of understanding. This paper explains the underlying problem for the popular passive world-to-body usage of rotation quaternions, and derives an alternative solution compatible with Hamilton's multiplication. Furthermore, it argues for entirely discontinuing the flipped multiplication. Additionally, it provides recipes for efficiently detecting relevant conventions and migrating formulas or algorithms between them.
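To make the difference between the two multiplications concrete, the following minimal sketch implements Hamilton's product and, taking "flipped" to mean the same product with swapped operands, the flipped variant. The `(w, x, y, z)` storage order and the function names are assumptions for illustration. A simple way to tell which convention an implementation uses is to multiply the basis elements i and j: Hamilton's product yields k, the flipped one yields -k.

```python
def hamilton_product(p, q):
    """Hamilton product p * q for quaternions stored as (w, x, y, z)."""
    pw, px, py, pz = p
    qw, qx, qy, qz = q
    return (
        pw * qw - px * qx - py * qy - pz * qz,
        pw * qx + px * qw + py * qz - pz * qy,
        pw * qy - px * qz + py * qw + pz * qx,
        pw * qz + px * qy - py * qx + pz * qw,
    )

def flipped_product(p, q):
    """Flipped multiplication, assumed here to be Hamilton's with operands swapped."""
    return hamilton_product(q, p)

# Basis quaternions in (w, x, y, z) order.
i = (0, 1, 0, 0)
j = (0, 0, 1, 0)
k = (0, 0, 0, 1)

def uses_hamilton_convention(product):
    """Convention check: under Hamilton's multiplication, i * j equals k."""
    return product(i, j) == k
```

Running `uses_hamilton_convention` on an unknown library's product function, after adapting the storage order, is one quick way to detect its convention before mixing formulas from different sources.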

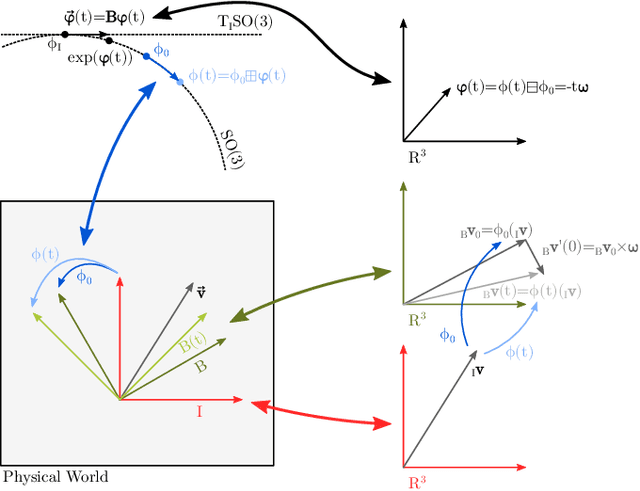

A Primer on the Differential Calculus of 3D Orientations

Oct 31, 2016

Abstract: The proper handling of 3D orientations is a central element in many optimization problems in engineering. Unfortunately, many researchers and engineers struggle with the formulation of such problems and often fall back to suboptimal solutions. The existence of many different conventions further complicates this issue, especially when interfacing multiple differing implementations. This document discusses an alternative approach which makes use of a more abstract notion of 3D orientations. The relative orientation between two coordinate systems is primarily identified by the coordinate mapping it induces. This is combined with the standard exponential map in order to introduce representation-independent and minimal differentials, which are very convenient in optimization-based methods.
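As a rough illustration of the exponential map mentioned in the abstract, the sketch below maps a three-component rotation vector to a rotation matrix using the Rodrigues formula. A three-component vector of this kind is the sort of minimal parameterization of a small orientation change that optimization methods typically work with. The helper names (`hat`, `matmul`, `apply`, `exp_so3`) and the use of rotation matrices as the concrete representation are assumptions for this example, not the primer's notation.

```python
import math

def hat(v):
    """Skew-symmetric matrix of v, so that hat(v) applied to u equals v x u."""
    x, y, z = v
    return [[0.0, -z, y], [z, 0.0, -x], [-y, x, 0.0]]

def matmul(A, B):
    """Product of two 3x3 matrices given as nested lists."""
    return [[sum(A[r][m] * B[m][c] for m in range(3)) for c in range(3)] for r in range(3)]

def apply(R, v):
    """Apply the 3x3 matrix R to the 3-vector v."""
    return [sum(R[r][c] * v[c] for c in range(3)) for r in range(3)]

def exp_so3(phi):
    """Exponential map from a rotation vector phi to a rotation matrix (Rodrigues)."""
    theta = math.sqrt(sum(c * c for c in phi))
    K = hat(phi)
    K2 = matmul(K, K)
    if theta < 1e-8:
        a, b = 1.0, 0.5  # limits of sin(t)/t and (1 - cos(t))/t^2 as t -> 0
    else:
        a = math.sin(theta) / theta
        b = (1.0 - math.cos(theta)) / (theta * theta)
    return [[(1.0 if r == c else 0.0) + a * K[r][c] + b * K2[r][c] for c in range(3)]
            for r in range(3)]

# A quarter turn about the z-axis maps the x-axis onto the y-axis.
R = exp_so3((0.0, 0.0, math.pi / 2.0))
rotated_x = apply(R, [1.0, 0.0, 0.0])
```

In an optimizer, an update step would compose `exp_so3(delta)` with the current orientation estimate; whether that composition is applied on the left or the right is itself a convention that has to be fixed consistently.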
