Michael Burri

Instant Visual Odometry Initialization for Mobile AR

Jul 30, 2021

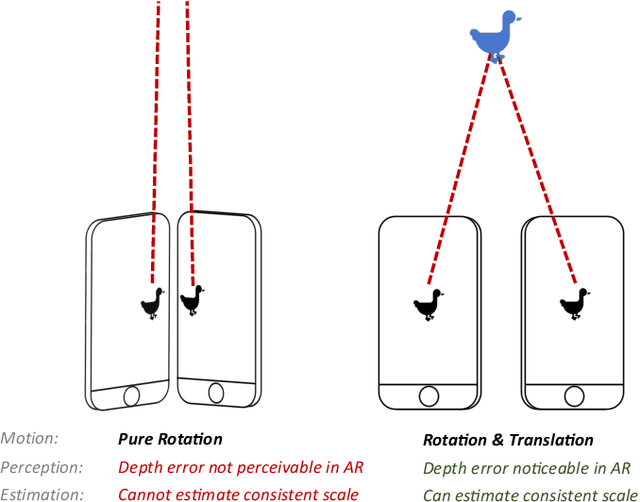

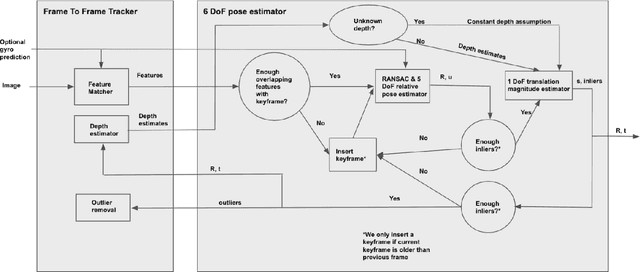

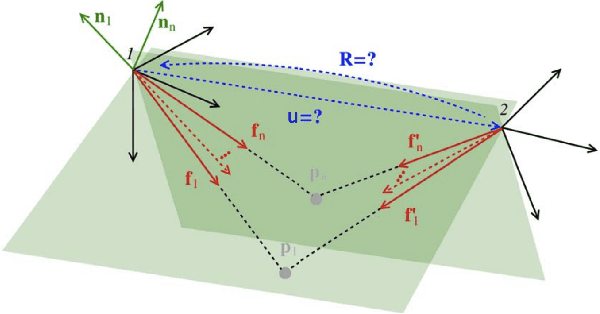

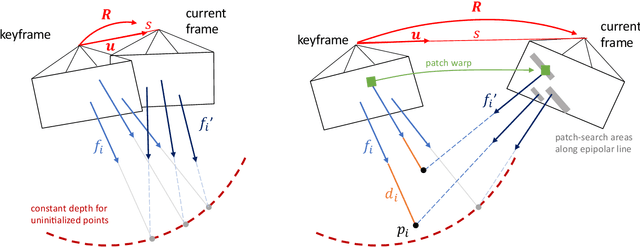

Abstract: Mobile AR applications benefit from fast initialization to display world-locked effects instantly. However, standard visual odometry or SLAM algorithms require motion parallax to initialize (see Figure 1) and, therefore, suffer from delayed initialization. In this paper, we present a 6-DoF monocular visual odometry that initializes instantly and without motion parallax. Our main contribution is a pose estimator that decouples estimating the 5-DoF relative rotation and translation direction from the 1-DoF translation magnitude. While scale is not observable in a monocular vision-only setting, it is still paramount to estimate a consistent scale over the whole trajectory (even if not physically accurate) to avoid AR effects moving erroneously along depth. In our approach, we leverage the fact that depth errors are not perceivable to the user during rotation-only motion. However, as the user starts translating the device, depth becomes perceivable and so does the capability to estimate consistent scale. Our proposed algorithm naturally transitions between these two modes. We perform extensive validations of our contributions with both a publicly available dataset and synthetic data. We show that the proposed pose estimator outperforms the classical approaches for 6-DoF pose estimation used in the literature in low-parallax configurations. We release a dataset of real data for the relative pose problem to facilitate comparison with future solutions. Our solution is used either as a full odometry or as a pre-SLAM component of any supported SLAM system (ARKit, ARCore) in world-locked AR effects on platforms such as Instagram and Facebook.

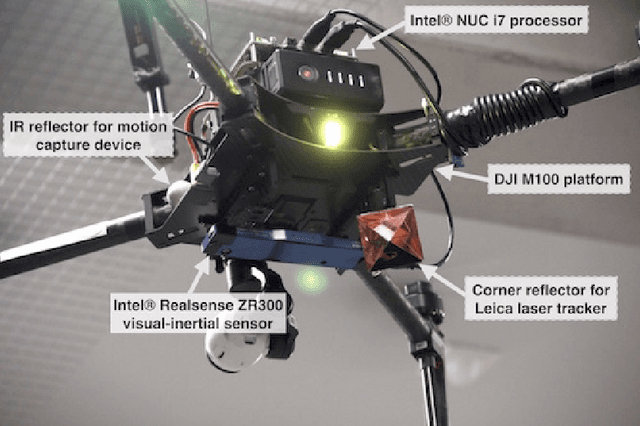

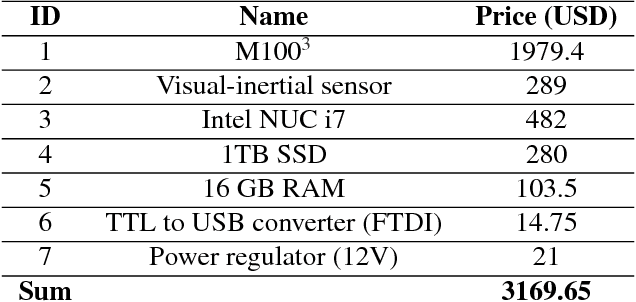

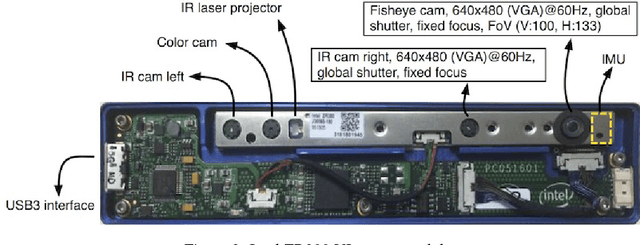

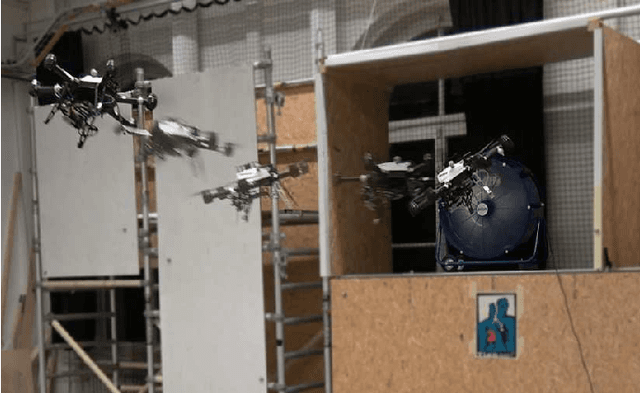

Build Your Own Visual-Inertial Drone: A Cost-Effective and Open-Source Autonomous Drone

Sep 06, 2018

Abstract: This paper describes an approach to building a cost-effective and research-grade visual-inertial odometry-aided vertical take-off and landing (VTOL) platform. We utilize an off-the-shelf visual-inertial sensor, an onboard computer, and a quadrotor platform that are factory-calibrated and mass-produced, thereby sharing similar hardware and sensor specifications (e.g., mass, dimensions, intrinsics and extrinsics of camera-IMU systems, and signal-to-noise ratio). We then perform a system calibration and identification enabling the use of our visual-inertial odometry, multi-sensor fusion, and model predictive control frameworks with the off-the-shelf products. This implies that we can partially avoid tedious parameter tuning procedures for building a full system. The complete system is extensively evaluated both indoors using a motion capture system and outdoors using a laser tracker while performing hover and step responses, and trajectory following tasks in the presence of external wind disturbances. We achieve root-mean-square (RMS) pose errors between the reference and actual trajectories of 0.036 m while performing hover. We also conduct relatively long-distance flight (~180 m) experiments on a farm site and achieve a drift error of 0.82% of the total flight distance. This paper conveys the insights we acquired about the platform and sensor module and returns them to the community as open-source code with tutorial documentation.
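To put the reported figures in perspective, the following is a minimal sketch of how an RMS error and a drift percentage are commonly computed. The abstract does not state the exact definitions used in the paper, so the end-point-error definition of drift, the function names `rms` and `drift_percent`, and the sample hover errors are all assumptions made for illustration.

```python
import math


def rms(errors):
    """Root-mean-square of a sequence of scalar errors."""
    return math.sqrt(sum(e * e for e in errors) / len(errors))


def drift_percent(final_position_error_m, distance_travelled_m):
    """Drift as a percentage of the distance flown.

    Assumption: drift is the end-point position error divided by
    the total path length; the paper may define it differently.
    """
    return 100.0 * final_position_error_m / distance_travelled_m


# Hypothetical per-sample position errors (metres) during hover.
hover_errors = [0.03, -0.04, 0.035, -0.038]
hover_rms = rms(hover_errors)

# Under the assumption above, 0.82 % drift over a ~180 m flight
# corresponds to an end-point error of about 1.48 m.
implied_drift_m = 0.0082 * 180.0
```

Under this reading, the 0.82% figure over roughly 180 m implies an accumulated position error on the order of one and a half metres.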

* 21 pages, 10 figures, accepted to IEEE Robotics & Automation Magazine

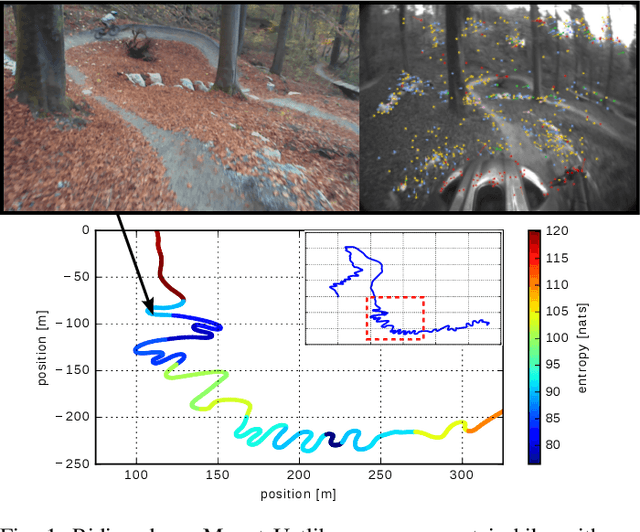

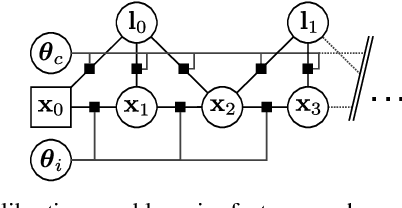

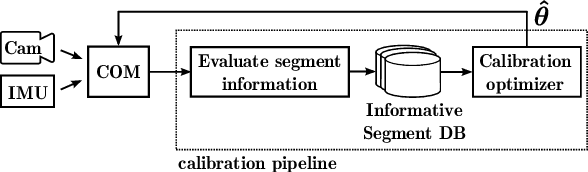

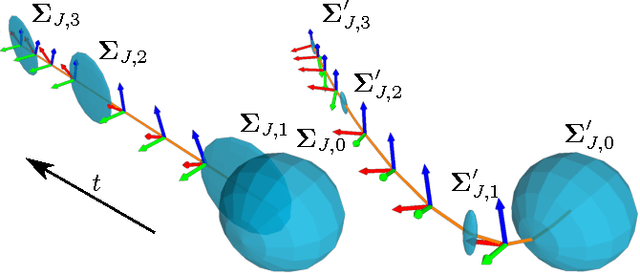

Visual-inertial self-calibration on informative motion segments

Aug 08, 2017

Abstract: Environmental conditions and external effects, such as shocks, have a significant impact on the calibration parameters of visual-inertial sensor systems. Thus, long-term operation of these systems cannot fully rely on factory calibration. Since the observability of certain parameters is highly dependent on the motion of the device, using short data segments at device initialization may yield poor results. When such systems are additionally subject to energy constraints, it is also infeasible to use full-batch approaches on a big dataset, and careful selection of the data is of high importance. In this paper, we present a novel approach for resource-efficient self-calibration of visual-inertial sensor systems. This is achieved by casting the calibration as a segment-based optimization problem that can be run on a small subset of informative segments. Consequently, the computational burden is limited, as only a predefined number of segments is used. We also propose an efficient information-theoretic selection to identify such informative motion segments. In evaluations on a challenging dataset, we show that our approach significantly outperforms the state of the art in terms of computational burden while maintaining comparable accuracy.
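The idea of keeping only a predefined number of informative segments can be illustrated with a small sketch. The abstract does not specify the selection criterion, so this example assumes a D-optimality style score (the log-determinant of each segment's information matrix over the calibration parameters); the function names and the example matrices are hypothetical.

```python
import heapq
import math


def log_det_spd(matrix):
    """Log-determinant of a symmetric positive-definite matrix via Cholesky."""
    n = len(matrix)
    lower = [[0.0] * n for _ in range(n)]
    log_det = 0.0
    for i in range(n):
        for j in range(i + 1):
            s = matrix[i][j] - sum(lower[i][k] * lower[j][k] for k in range(j))
            if i == j:
                if s <= 0.0:
                    raise ValueError("matrix is not positive definite")
                lower[i][j] = math.sqrt(s)
                log_det += 2.0 * math.log(lower[i][j])
            else:
                lower[i][j] = s / lower[j][j]
    return log_det


def select_informative_segments(segment_information, max_segments):
    """Return the indices of the max_segments highest-scoring segments.

    Assumption: each segment is summarized by an information matrix,
    and a larger log-determinant means a more informative segment.
    """
    scored = [(log_det_spd(info), idx) for idx, info in enumerate(segment_information)]
    best = heapq.nlargest(max_segments, scored)
    return sorted(idx for _, idx in best)


# Hypothetical 2x2 information matrices for three motion segments.
segments = [
    [[1.0, 0.0], [0.0, 1.0]],  # det = 1
    [[4.0, 1.0], [1.0, 3.0]],  # det = 11
    [[2.0, 0.0], [0.0, 2.0]],  # det = 4
]
selected = select_informative_segments(segments, max_segments=2)
```

Because the number of retained segments is fixed, the cost of the subsequent optimization stays bounded no matter how long the device has been running.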

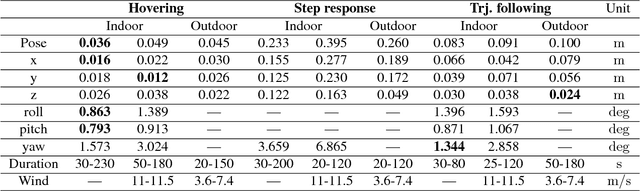

Linear vs Nonlinear MPC for Trajectory Tracking Applied to Rotary Wing Micro Aerial Vehicles

Apr 21, 2017

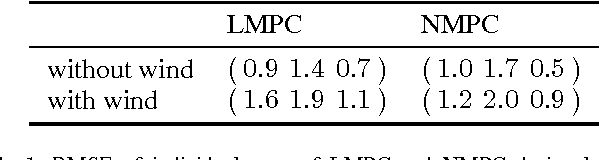

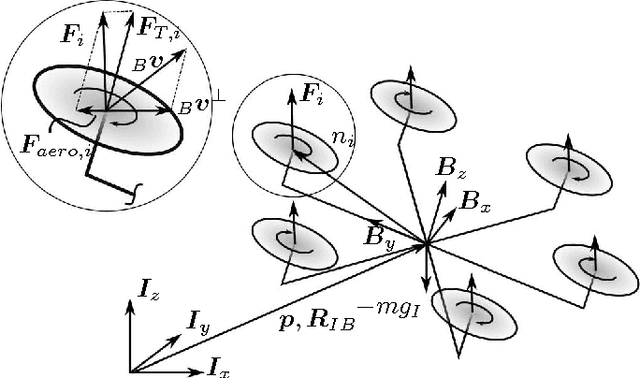

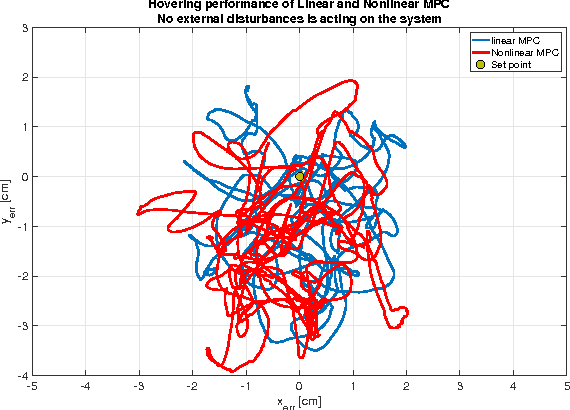

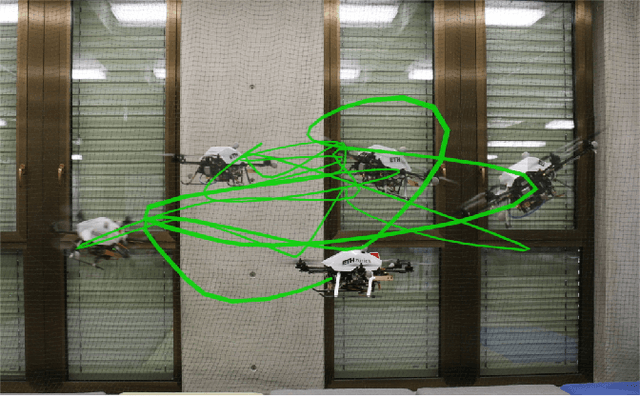

Abstract: Precise trajectory tracking is a crucial property for micro aerial vehicles (MAVs) to operate in cluttered environments or under disturbances. In this paper, we present a detailed comparison between two state-of-the-art model-based control techniques for MAV trajectory tracking. A classical linear model predictive controller (LMPC) is presented and compared against a more advanced nonlinear model predictive controller (NMPC) that considers the full system model. In a careful analysis, we show the advantages and disadvantages of the two implementations in terms of speed and tracking performance. This is achieved by evaluating hovering performance, step response, and aggressive trajectory tracking under nominal conditions and under external wind disturbances.

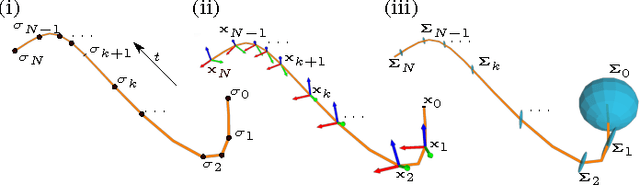

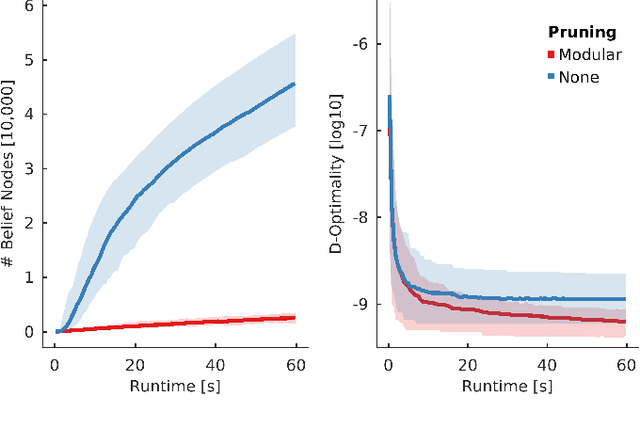

Sampling-based Motion Planning for Active Multirotor System Identification

Feb 05, 2017

Abstract: This paper reports on an algorithm for planning trajectories that allow a multirotor micro aerial vehicle (MAV) to quickly identify a set of unknown parameters. In many problems, such as self-calibration or model parameter identification, some states are only observable under a specific motion. These motions are often hard to find, especially for inexperienced users. Therefore, we consider system model identification in an active setting, where the vehicle autonomously decides what actions to take in order to quickly identify the model. Our algorithm approximates the belief dynamics of the system around a candidate trajectory using an extended Kalman filter (EKF). It uses sampling-based motion planning to explore the space of possible beliefs and find a maximally informative trajectory within a user-defined budget. We validate our method in simulation and on a real system, showing the feasibility and repeatability of the proposed approach. Our planner creates trajectories which reduce model parameter convergence time and uncertainty by a factor of four.

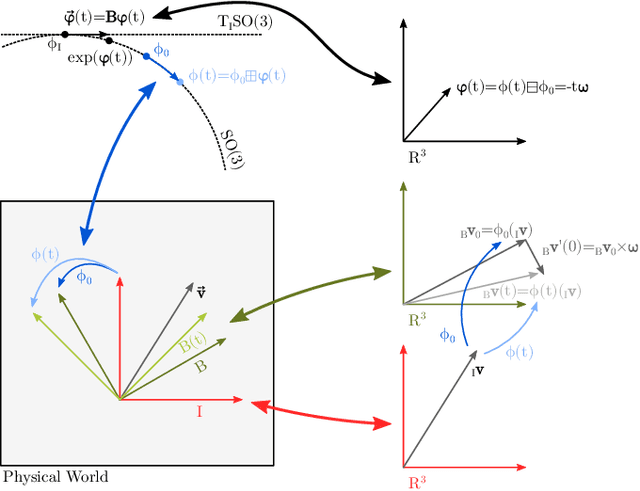

A Primer on the Differential Calculus of 3D Orientations

Oct 31, 2016

Abstract: The proper handling of 3D orientations is a central element in many optimization problems in engineering. Unfortunately, many researchers and engineers struggle with the formulation of such problems and often fall back to suboptimal solutions. The existence of many different conventions further complicates this issue, especially when interfacing multiple differing implementations. This document discusses an alternative approach which makes use of a more abstract notion of 3D orientations. The relative orientation between two coordinate systems is primarily identified by the coordinate mapping it induces. This is combined with the standard exponential map in order to introduce representation-independent and minimal differentials, which are very convenient in optimization-based methods.
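As a rough illustration of the exponential map and a minimal (three-parameter) orientation update, the sketch below uses Rodrigues' formula on plain 3x3 rotation matrices. The choice of a left-multiplicative update and the names `exp_so3` and `boxplus` are assumptions made here; the primer itself may adopt a different convention or notation.

```python
import math


def matmul(a, b):
    """Product of two 3x3 matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def exp_so3(phi):
    """Rodrigues' formula: rotation vector phi (radians) to a rotation matrix."""
    x, y, z = phi
    theta = math.sqrt(x * x + y * y + z * z)
    skew = [[0.0, -z, y], [z, 0.0, -x], [-y, x, 0.0]]
    if theta < 1e-8:
        # Small-angle limits of sin(t)/t and (1 - cos(t))/t^2.
        a, b = 1.0, 0.5
    else:
        a = math.sin(theta) / theta
        b = (1.0 - math.cos(theta)) / (theta * theta)
    skew_sq = matmul(skew, skew)
    return [[(1.0 if i == j else 0.0) + a * skew[i][j] + b * skew_sq[i][j]
             for j in range(3)] for i in range(3)]


def boxplus(rotation, delta):
    """Apply a minimal 3-vector update to a rotation.

    Assumption: a left-multiplicative convention, exp(delta) * rotation.
    """
    return matmul(exp_so3(delta), rotation)


# A quarter turn about the z-axis maps the x-axis onto the y-axis.
quarter_turn = exp_so3([0.0, 0.0, math.pi / 2.0])
rotated_x = [row[0] for row in quarter_turn]  # image of the vector [1, 0, 0]
```

An optimizer working with such an update only ever sees the three components of `delta`, regardless of how the orientation itself is stored.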
