Raghav Khanna

Building an Aerial-Ground Robotics System for Precision Farming

Nov 08, 2019

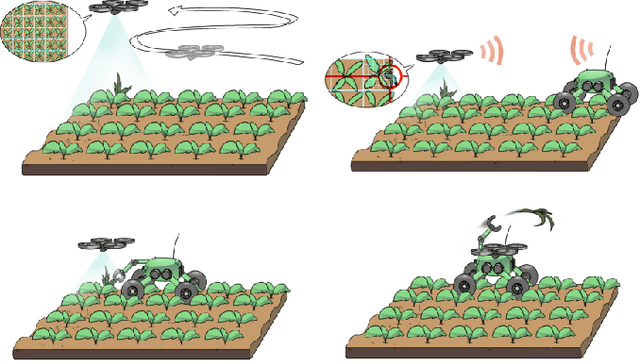

Abstract: The application of autonomous robots in agriculture is gaining popularity thanks to the high impact it may have on food security, sustainability, resource use efficiency, reduction of chemical treatments, minimization of human effort, and maximization of yield. The Flourish research project addressed this challenge by developing an adaptable robotic solution for precision farming that combines the aerial survey capabilities of small autonomous unmanned aerial vehicles (UAVs) with flexible targeted intervention performed by multi-purpose agricultural unmanned ground vehicles (UGVs). This paper presents an exhaustive overview of the scientific and technological advances and outcomes obtained in the Flourish project. We introduce multi-spectral perception algorithms and aerial and ground-based systems developed to monitor crop density, weed pressure, and crop nitrogen nutrition status, and to accurately classify and locate weeds. We then introduce the navigation and mapping systems that deal with the specificity of the employed robots and of the agricultural environment, highlighting the collaborative modules that enable the UAVs and UGVs to collect and share information in a unified environment model. We finally present the ground intervention hardware, software solutions, and interfaces we implemented and tested in different field conditions and with different crops. We describe a real use case in which a UAV collaborates with a UGV to monitor the field and to perform selective spraying treatments fully autonomously.

An Efficient Sampling-based Method for Online Informative Path Planning in Unknown Environments

Sep 20, 2019

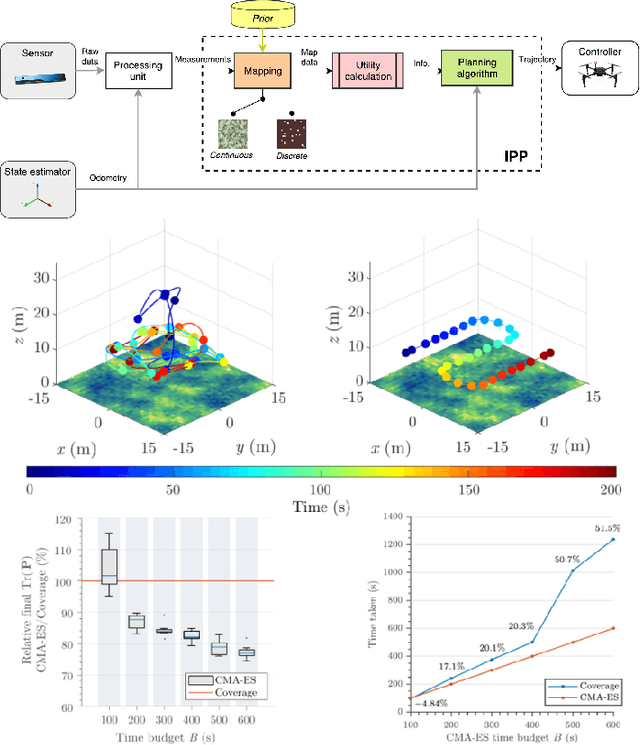

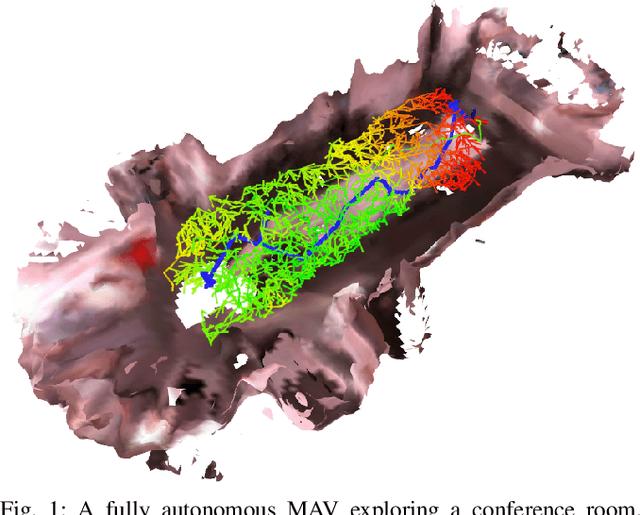

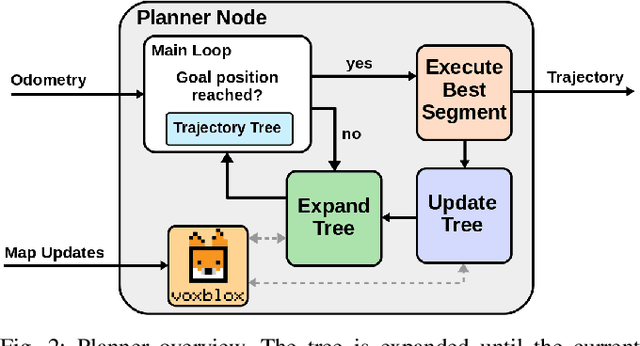

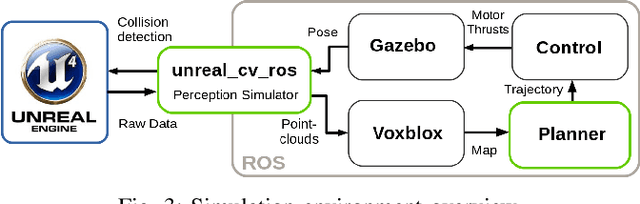

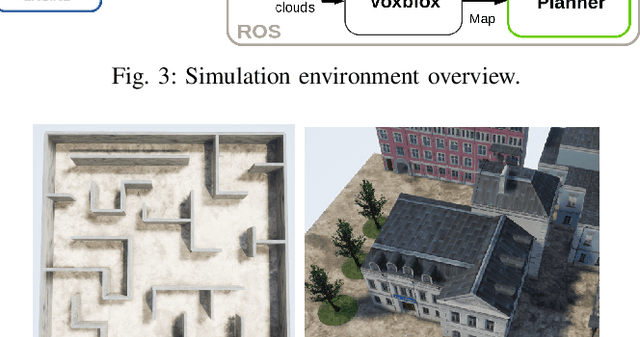

Abstract: The ability to plan informative paths online is essential to robot autonomy. In particular, sampling-based approaches are often used as they are capable of using arbitrary information gain formulations. However, they are prone to local minima, resulting in sub-optimal trajectories, and sometimes do not reach global coverage. In this paper, we present a new RRT*-inspired online informative path planning algorithm. Our method continuously expands a single tree of candidate trajectories and rewires segments to maintain the tree and refine intermediate trajectories. This allows the algorithm to achieve global coverage and maximize the utility of a path in a global context, using a single objective function. We demonstrate the algorithm's capabilities in the applications of autonomous indoor exploration as well as accurate Truncated Signed Distance Field (TSDF)-based 3D reconstruction on-board a Micro Aerial Vehicle (MAV). We study the impact of commonly used information gain and cost formulations in these scenarios and propose a novel TSDF-based 3D reconstruction gain and cost-utility formulation. Detailed evaluation in realistic simulation environments shows that our approach outperforms state-of-the-art methods in these tasks. Experiments on a real MAV demonstrate the ability of our method to robustly plan in real-time, exploring an indoor environment solely with on-board sensing and computation. We make our framework available for future research.

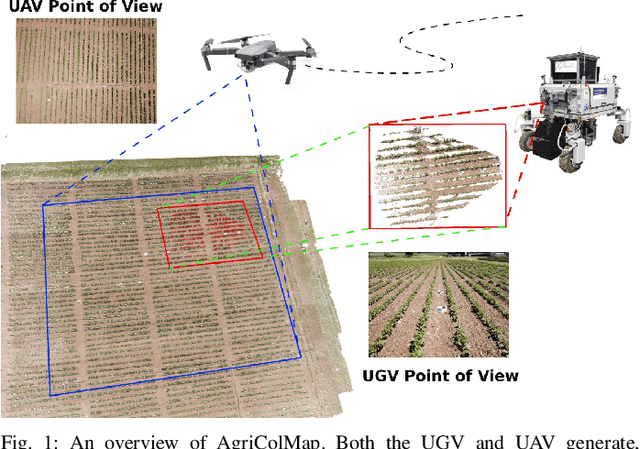

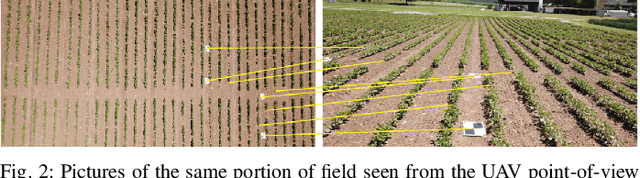

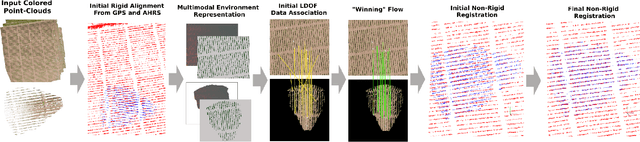

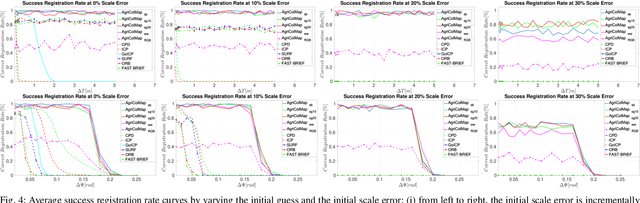

AgriColMap: Aerial-Ground Collaborative 3D Mapping for Precision Farming

Mar 14, 2019

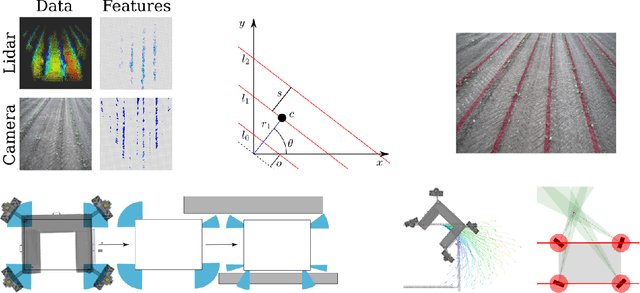

Abstract: The combination of aerial survey capabilities of Unmanned Aerial Vehicles with targeted intervention abilities of agricultural Unmanned Ground Vehicles can significantly improve the effectiveness of robotic systems applied to precision agriculture. In this context, building and updating a common map of the field is an essential but challenging task. The maps built using robots of different types show differences in size, resolution, and scale; the associated geolocation data may be inaccurate and biased; and the repetitiveness of both visual appearance and geometric structures found within agricultural contexts renders classical map merging techniques ineffective. In this paper we propose AgriColMap, a novel map registration pipeline that leverages a grid-based multimodal environment representation which includes a vegetation index map and a Digital Surface Model. We cast the data association problem between maps built from UAVs and UGVs as a multimodal, large displacement dense optical flow estimation. The dominant, coherent flows, selected using a voting scheme, are used as point-to-point correspondences to infer a preliminary non-rigid alignment between the maps. A final refinement is then performed by exploiting only meaningful parts of the registered maps. We evaluate our system using real-world data for 3 fields with different crop species. The results show that our method outperforms several state-of-the-art map registration and matching techniques by a large margin, and has a higher tolerance to large initial misalignments. We release an implementation of the proposed approach along with the acquired datasets with this paper.

* Published in IEEE Robotics and Automation Letters, 2019

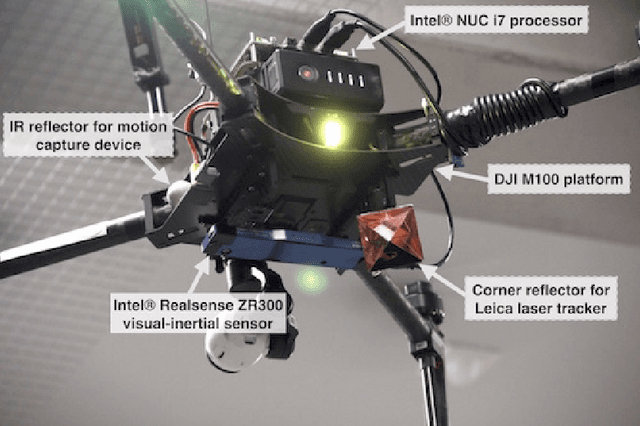

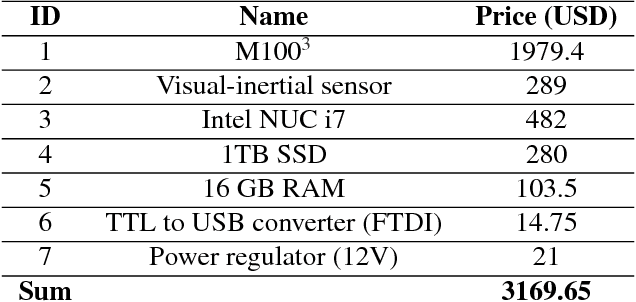

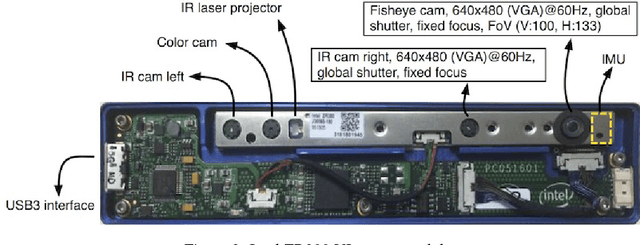

Build Your Own Visual-Inertial Drone: A Cost-Effective and Open-Source Autonomous Drone

Sep 06, 2018

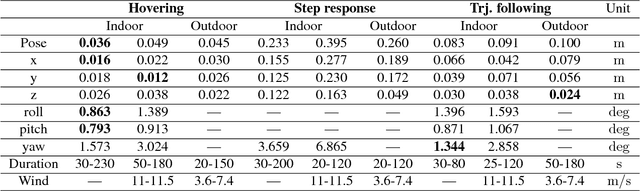

Abstract: This paper describes an approach to building a cost-effective and research-grade visual-inertial odometry aided vertical take-off and landing (VTOL) platform. We utilize an off-the-shelf visual-inertial sensor, an onboard computer, and a quadrotor platform that are factory-calibrated and mass-produced, thereby sharing similar hardware and sensor specifications (e.g., mass, dimensions, intrinsics and extrinsics of camera-IMU systems, and signal-to-noise ratio). We then perform a system calibration and identification enabling the use of our visual-inertial odometry, multi-sensor fusion, and model predictive control frameworks with the off-the-shelf products. This implies that we can partially avoid tedious parameter tuning procedures for building a full system. The complete system is extensively evaluated both indoors using a motion capture system and outdoors using a laser tracker while performing hover and step responses, and trajectory following tasks in the presence of external wind disturbances. We achieve a root-mean-square (RMS) pose error between the reference and actual trajectories of 0.036 m while hovering. We also conduct relatively long distance flight (~180 m) experiments on a farm site and achieve a drift error of 0.82% of the total flight distance. This paper conveys the insights we acquired about the platform and sensor module, which we return to the community as open-source code with tutorial documentation.

* 21 pages, 10 figures, accepted to IEEE Robotics & Automation Magazine

WeedMap: A large-scale semantic weed mapping framework using aerial multispectral imaging and deep neural network for precision farming

Sep 06, 2018

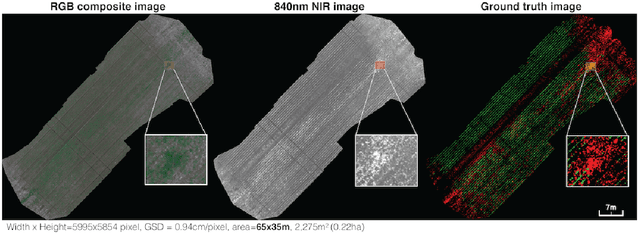

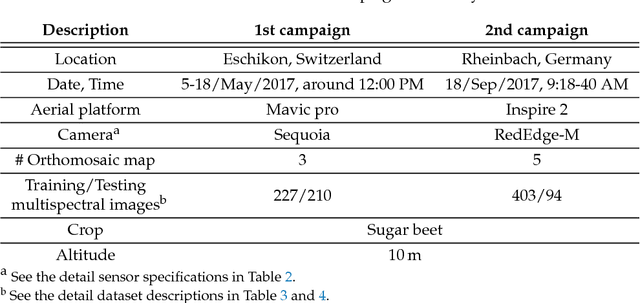

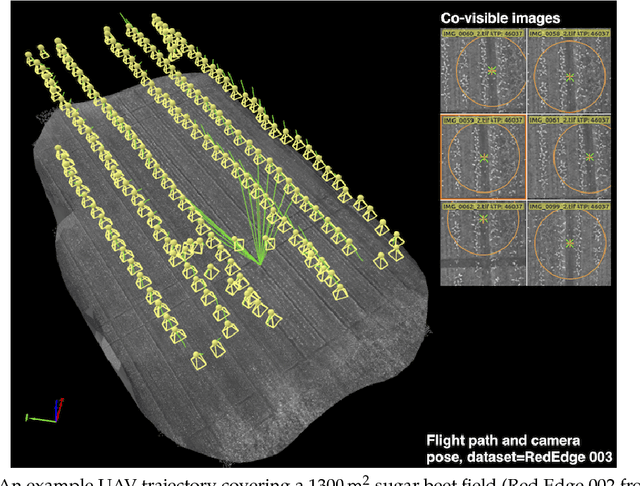

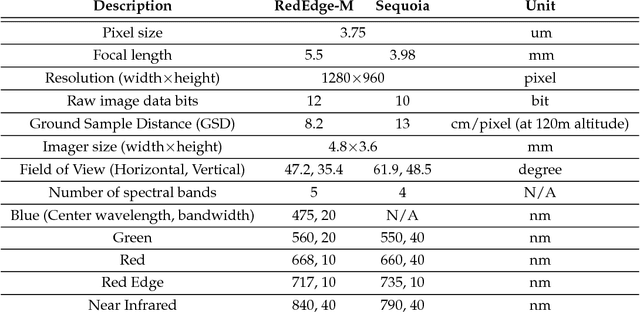

Abstract: We present a novel weed segmentation and mapping framework that processes multispectral images obtained from an unmanned aerial vehicle (UAV) using a deep neural network (DNN). Most studies on crop/weed semantic segmentation only consider single images for processing and classification. Images taken by UAVs often cover only a few hundred square meters with either color only or color and near-infrared (NIR) channels. Computing a single large and accurate vegetation map (e.g., crop/weed) using a DNN is non-trivial due to difficulties arising from: (1) limited ground sample distances (GSDs) in high-altitude datasets, (2) sacrificed resolution resulting from downsampling high-fidelity images, and (3) multispectral image alignment. To address these issues, we adopt a standard sliding window approach that operates on only small portions of multispectral orthomosaic maps (tiles), which are channel-wise aligned and calibrated radiometrically across the entire map. We define the tile size to be the same as that of the DNN input to avoid resolution loss. Compared to our baseline model (i.e., SegNet with 3-channel RGB inputs) yielding an area under the curve (AUC) of [background=0.607, crop=0.681, weed=0.576], our proposed model with 9 input channels achieves [0.839, 0.863, 0.782]. Additionally, we provide an extensive analysis of 20 trained models, both qualitatively and quantitatively, in order to evaluate the effects of varying input channels and tunable network hyperparameters. Furthermore, we release a large sugar beet/weed aerial dataset with expertly guided annotations for further research in the fields of remote sensing, precision agriculture, and agricultural robotics.
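The sliding-window tiling described in this abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the function names `tile_starts` and `tile_windows` are hypothetical, and the edge handling (shifting the last tile back so it stays inside the map) is an assumption, since the abstract does not say how map borders are treated.

```python
from typing import List, Tuple


def tile_starts(length: int, tile: int) -> List[int]:
    """Start offsets of windows of size `tile` that cover [0, length)."""
    if tile <= 0:
        raise ValueError("tile size must be positive")
    if length <= tile:
        # Map is smaller than one tile: a single window starting at 0.
        return [0]
    starts = list(range(0, length - tile + 1, tile))
    if starts[-1] + tile < length:
        # Assumption: shift the final window back so it stays in bounds
        # (it then overlaps its neighbour instead of being padded).
        starts.append(length - tile)
    return starts


def tile_windows(map_h: int, map_w: int,
                 tile_h: int, tile_w: int) -> List[Tuple[int, int, int, int]]:
    """Return (row, col, height, width) windows at the DNN input size."""
    return [(r, c, tile_h, tile_w)
            for r in tile_starts(map_h, tile_h)
            for c in tile_starts(map_w, tile_w)]


if __name__ == "__main__":
    # Hypothetical sizes: an 8 x 10 pixel map cut into 4 x 4 tiles.
    for window in tile_windows(8, 10, 4, 4):
        print(window)
```

Because each window already has the network's input size, tiles can be passed to the DNN without downsampling, which is the point the abstract makes about avoiding resolution loss.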

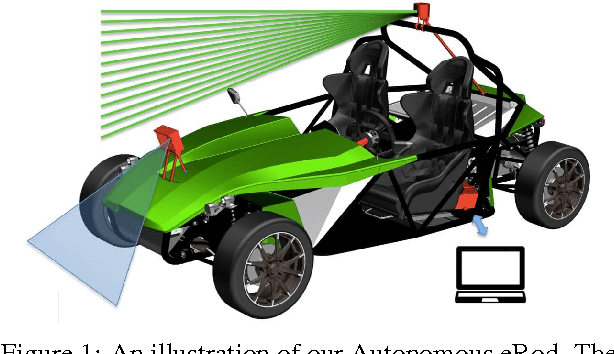

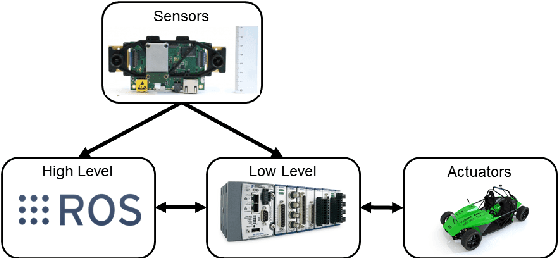

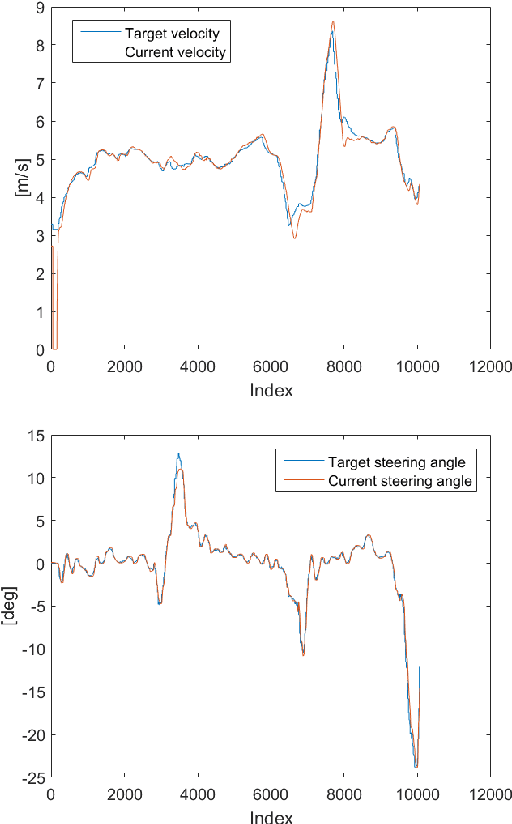

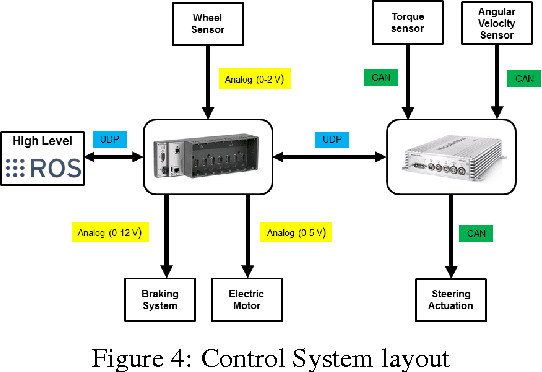

Autonomous Electric Race Car Design

Nov 01, 2017

Abstract: Autonomous driving and electric vehicles are currently very active research and development areas. In this paper we present the conversion of a standard Kyburz eRod into an autonomous vehicle that can be operated in challenging environments such as Swiss mountain passes. The overall hardware and software architectures are described in detail with a special emphasis on the sensor requirements for autonomous vehicles operating in partially structured environments. Furthermore, the design process itself and the finalized system architecture are presented. The work shows state-of-the-art results in localization and controls for self-driving high-performance electric vehicles. Test results of the overall system are presented, which show the importance of generalizable state estimation algorithms to handle a plethora of conditions.

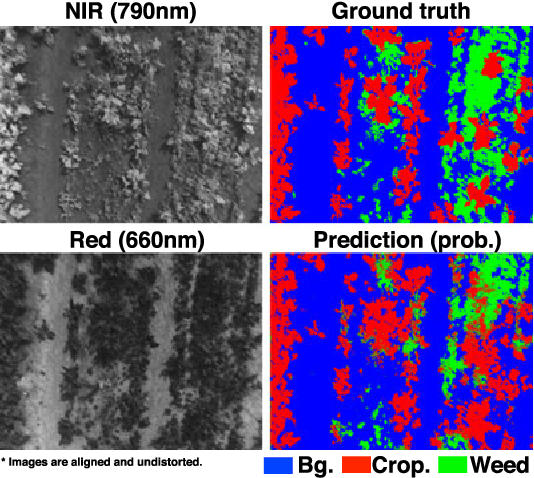

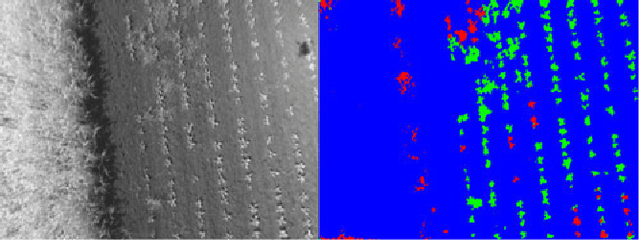

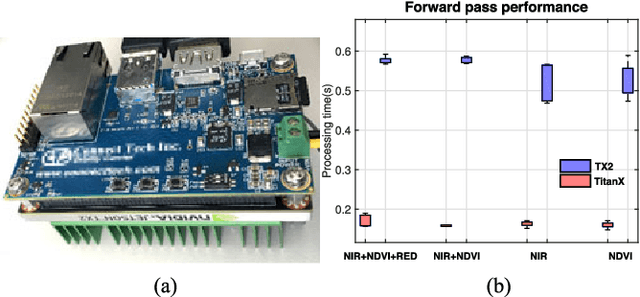

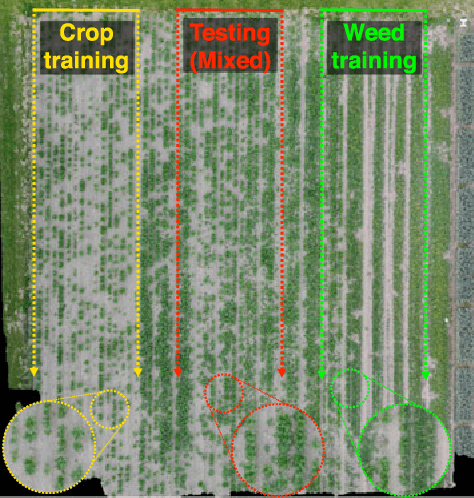

weedNet: Dense Semantic Weed Classification Using Multispectral Images and MAV for Smart Farming

Sep 11, 2017

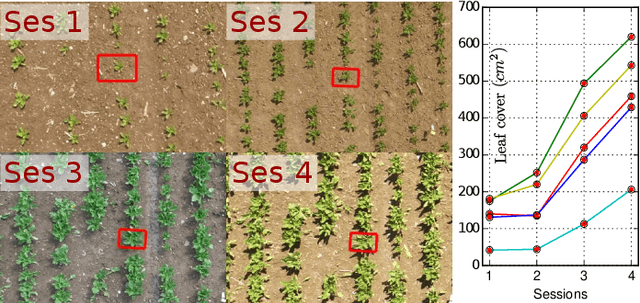

Abstract: Selective weed treatment is a critical step in autonomous crop management as related to crop health and yield. However, a key challenge is reliable and accurate weed detection to minimize damage to surrounding plants. In this paper, we present an approach for dense semantic weed classification with multispectral images collected by a micro aerial vehicle (MAV). We use the recently developed encoder-decoder cascaded Convolutional Neural Network (CNN), SegNet, that infers dense semantic classes while allowing any number of input image channels and class balancing with our sugar beet and weed datasets. To obtain training datasets, we established an experimental field with varying herbicide levels resulting in field plots containing only either crop or weed, enabling us to use the Normalized Difference Vegetation Index (NDVI) as a distinguishable feature for automatic ground truth generation. We train 6 models with different numbers of input channels and condition (fine-tune) them to achieve about 0.8 F1-score and 0.78 Area Under the Curve (AUC) classification metrics. For model deployment, an embedded GPU system (Jetson TX2) is tested for MAV integration. The dataset used in this paper is released to support the community and future work.
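NDVI is conventionally computed per pixel as (NIR - Red) / (NIR + Red). The sketch below shows how such an index could be thresholded to separate vegetation from soil, which is the kind of feature the abstract uses for automatic ground truth generation. It is only an illustration: the function names and the 0.5 threshold are assumptions, not values taken from the paper.

```python
from typing import List


def ndvi(nir: float, red: float, eps: float = 1e-9) -> float:
    """Normalized Difference Vegetation Index for one pixel."""
    denom = nir + red
    if abs(denom) < eps:
        # Avoid division by zero on completely dark pixels.
        return 0.0
    return (nir - red) / denom


def vegetation_mask(nir_img: List[List[float]],
                    red_img: List[List[float]],
                    threshold: float = 0.5) -> List[List[bool]]:
    """Mark pixels whose NDVI exceeds `threshold` (hypothetical value)."""
    return [[ndvi(n, r) > threshold for n, r in zip(nir_row, red_row)]
            for nir_row, red_row in zip(nir_img, red_img)]


if __name__ == "__main__":
    # Hypothetical reflectance values for a 1 x 2 image.
    nir = [[0.8, 0.3]]
    red = [[0.2, 0.3]]
    print(vegetation_mask(nir, red))
```

In a plot that contains only crop or only weed, every pixel flagged by such a mask can be given the plot's class label without manual annotation.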

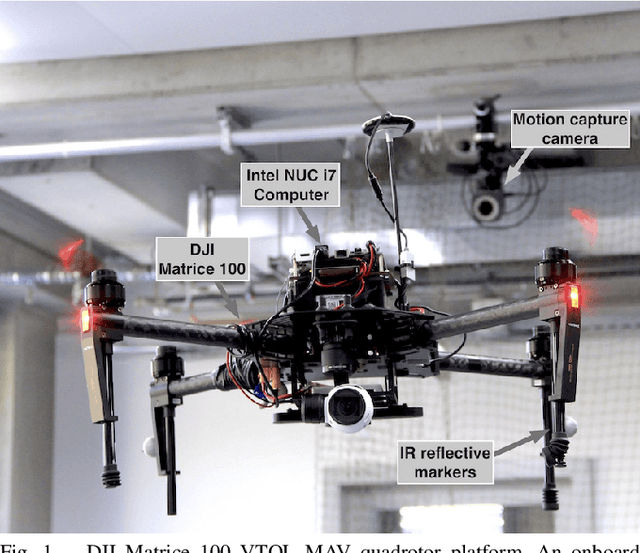

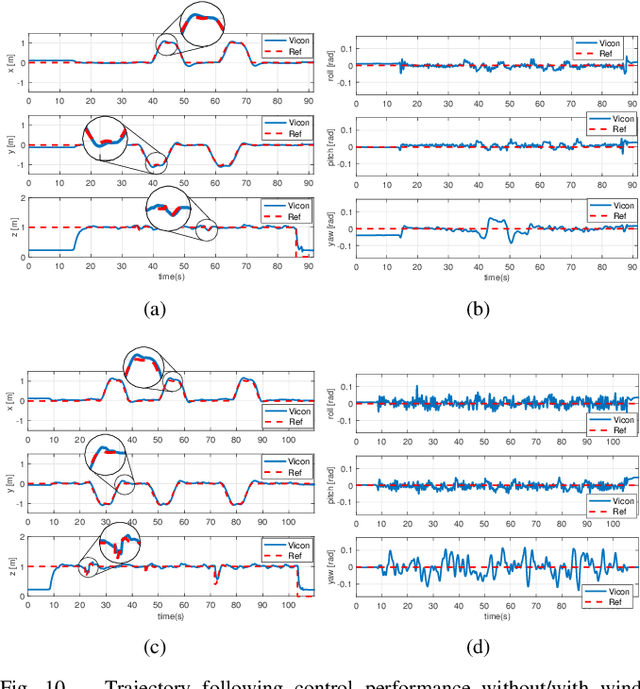

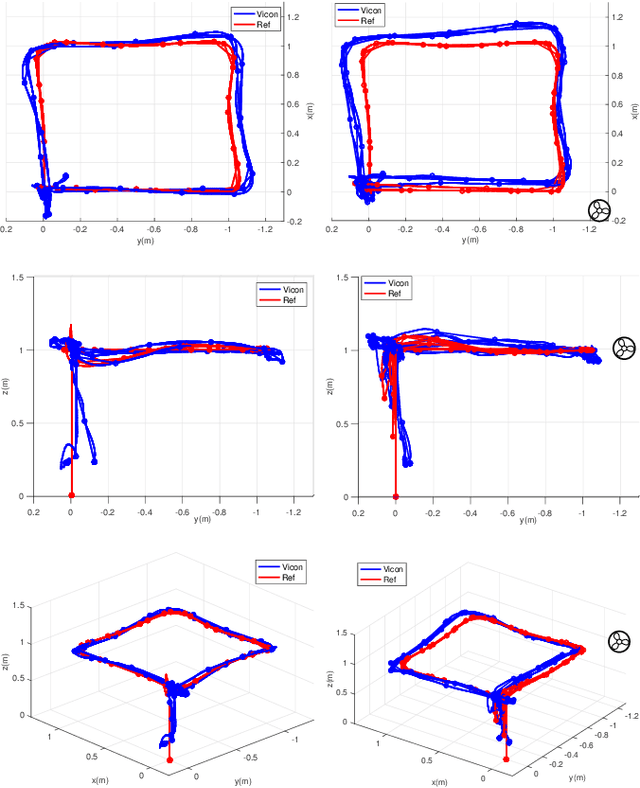

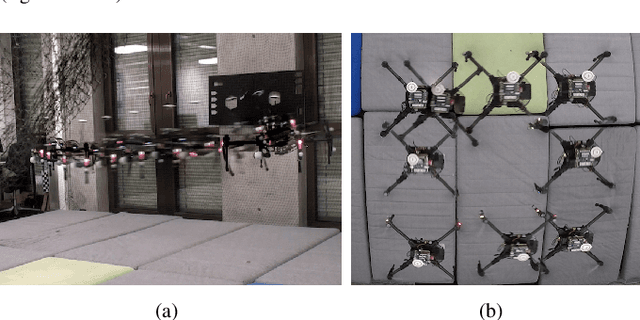

Dynamic System Identification, and Control for a cost effective open-source VTOL MAV

Mar 09, 2017

Abstract: This paper describes dynamic system identification and full control of a cost-effective vertical take-off and landing (VTOL) multi-rotor micro-aerial vehicle (MAV), the DJI Matrice 100. The dynamics of the vehicle and autopilot controllers are identified using only a built-in IMU and utilized to design a subsequent model predictive controller (MPC). Experimental results for the control performance are evaluated using a motion capture system while performing hover, step responses, and trajectory following tasks in the presence of external wind disturbances. We achieve root-mean-square (RMS) errors between the reference and actual trajectory of x=0.021 m, y=0.016 m, z=0.029 m, roll=0.392 deg, pitch=0.618 deg, and yaw=1.087 deg while performing hover. This paper also conveys the insights we have gained about the platform, which we return to the community through open-source code and documentation.
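The per-axis RMS errors quoted in this abstract follow the usual definition: the square root of the mean squared difference between reference and measured samples. A minimal sketch, assuming the two trajectories are already time-aligned and sampled at the same instants (the function name and sample values are hypothetical):

```python
import math
from typing import Sequence


def rms_error(reference: Sequence[float], actual: Sequence[float]) -> float:
    """Root-mean-square error between two equally long sample sequences."""
    if len(reference) != len(actual) or len(reference) == 0:
        raise ValueError("sequences must be non-empty and of equal length")
    squared = [(r - a) ** 2 for r, a in zip(reference, actual)]
    return math.sqrt(sum(squared) / len(squared))


if __name__ == "__main__":
    # Hypothetical hover data: commanded x position vs. measured x (metres).
    x_ref = [0.0, 0.0, 0.0, 0.0]
    x_meas = [0.02, -0.01, 0.03, -0.02]
    print(f"RMS x error: {rms_error(x_ref, x_meas):.3f} m")
```

Applying the same function separately to x, y, z, roll, pitch, and yaw yields one figure per axis, in the form the abstract reports.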
