Philipp Lottes

Building an Aerial-Ground Robotics System for Precision Farming

Nov 08, 2019

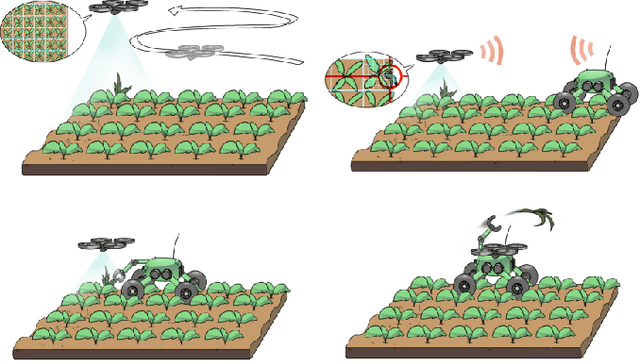

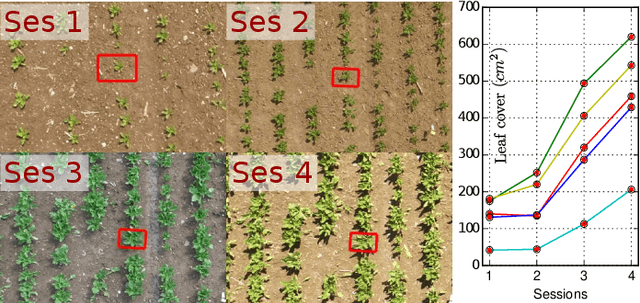

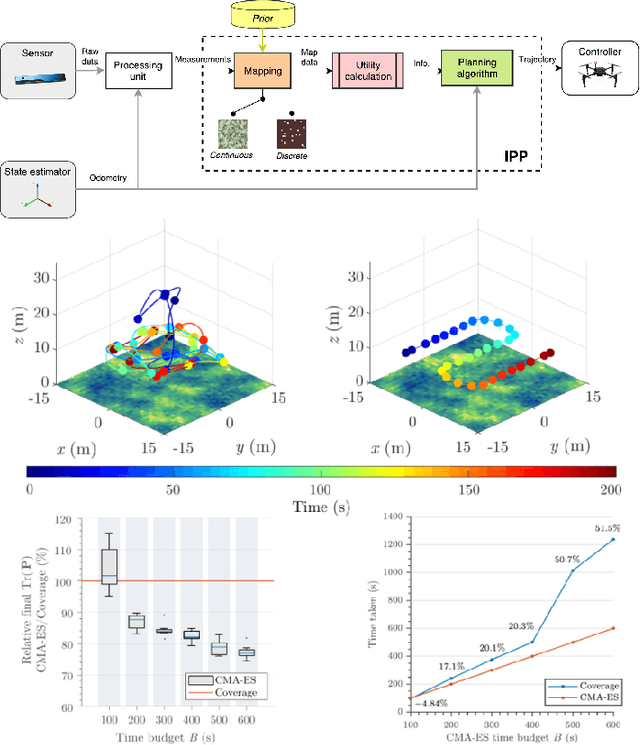

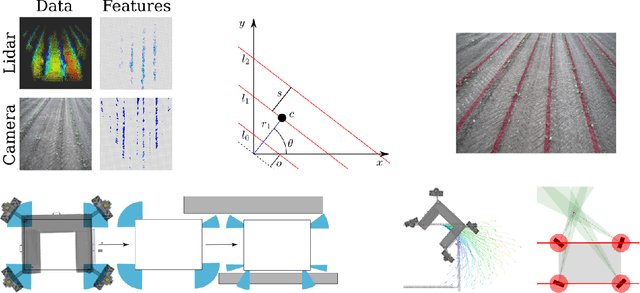

Abstract: Autonomous robots are becoming increasingly popular in agriculture thanks to the high impact they may have on food security, sustainability, resource use efficiency, reduction of chemical treatments, minimization of human effort, and maximization of yield. The Flourish research project addressed this challenge by developing an adaptable robotic solution for precision farming that combines the aerial survey capabilities of small autonomous unmanned aerial vehicles (UAVs) with flexible targeted intervention performed by multi-purpose agricultural unmanned ground vehicles (UGVs). This paper presents an exhaustive overview of the scientific and technological advances and outcomes obtained in the Flourish project. We introduce multi-spectral perception algorithms and aerial and ground-based systems developed to monitor crop density, weed pressure, and crop nitrogen nutrition status, and to accurately classify and locate weeds. We then introduce the navigation and mapping systems designed to deal with the specifics of the employed robots and of the agricultural environment, highlighting the collaborative modules that enable the UAVs and UGVs to collect and share information in a unified environment model. Finally, we present the ground intervention hardware, software solutions, and interfaces we implemented and tested in different field conditions and with different crops. We describe a real use case in which a UAV collaborates with a UGV to monitor the field and to perform selective spraying treatments fully autonomously.

ReFusion: 3D Reconstruction in Dynamic Environments for RGB-D Cameras Exploiting Residuals

May 20, 2019

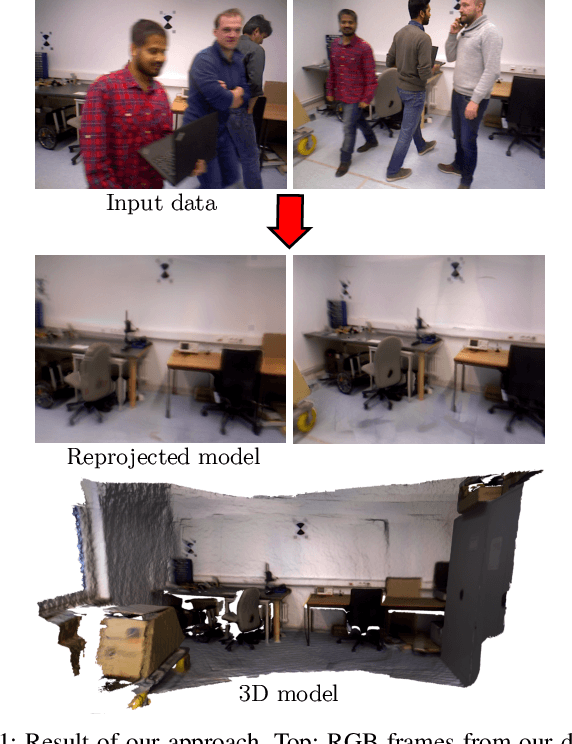

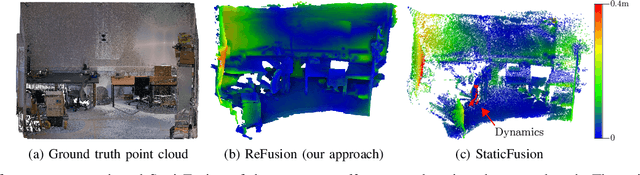

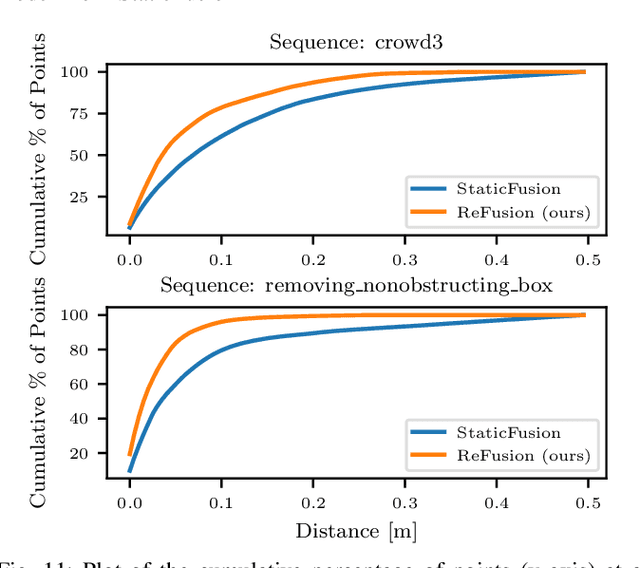

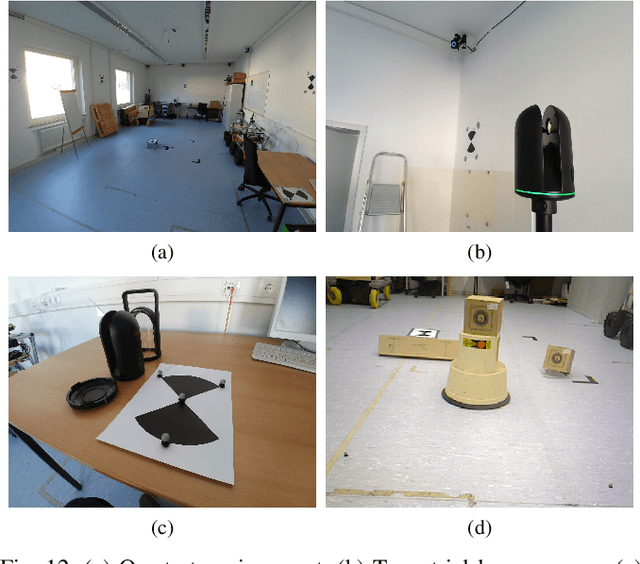

Abstract: Mapping and localization are essential capabilities of robotic systems. Although the majority of mapping systems focus on static environments, deployment in real-world situations requires them to handle dynamic objects. In this paper, we propose an approach for an RGB-D sensor that is able to consistently map scenes containing multiple dynamic elements. For localization and mapping, we employ efficient direct tracking on the truncated signed distance function (TSDF) and leverage color information encoded in the TSDF to estimate the pose of the sensor. The TSDF is efficiently represented using voxel hashing, with most computations parallelized on a GPU. For detecting dynamics, we exploit the residuals obtained after an initial registration, together with the explicit modeling of free space in the model. We evaluate our approach on existing datasets and provide a new dataset showing highly dynamic scenes. These experiments show that our approach often surpasses other state-of-the-art dense SLAM methods. We make our dataset available with ground truth for both the trajectory of the RGB-D sensor, obtained by a motion capture system, and the model of the static environment, obtained with a high-precision terrestrial laser scanner. Finally, we release our approach as open source code.

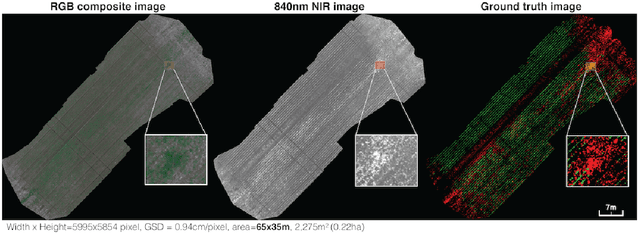

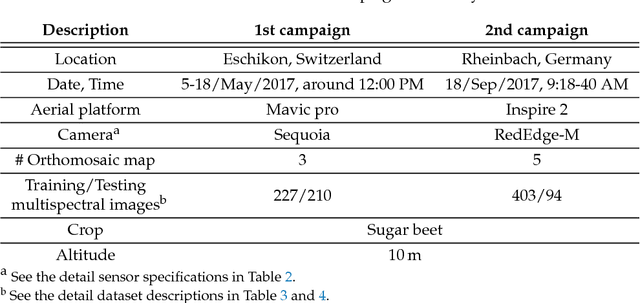

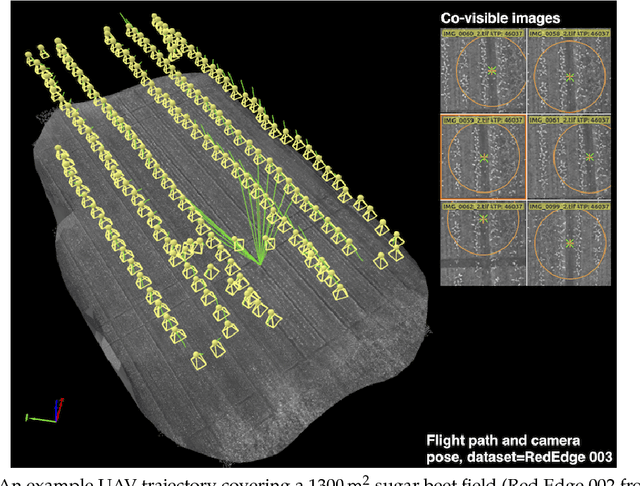

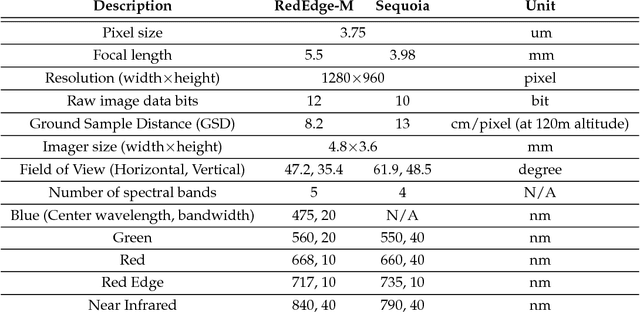

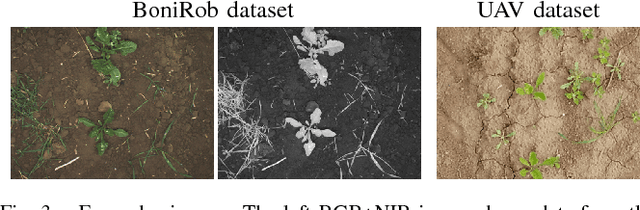

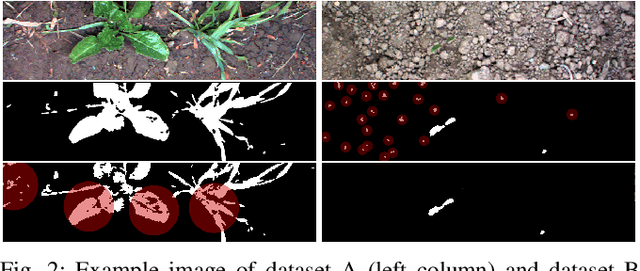

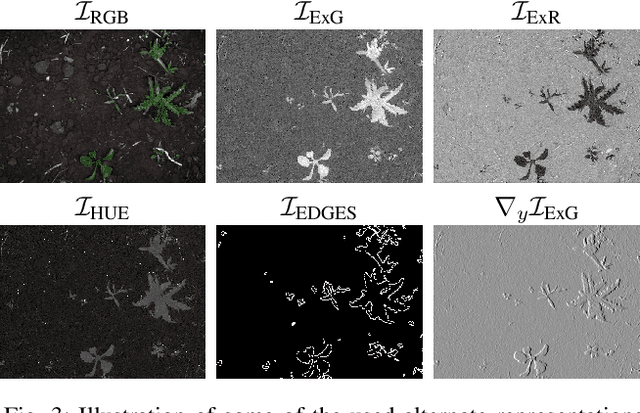

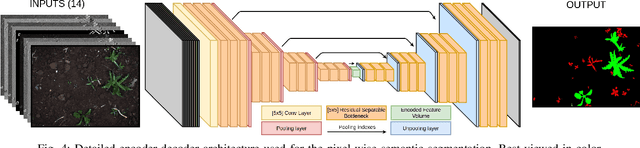

WeedMap: A large-scale semantic weed mapping framework using aerial multispectral imaging and deep neural network for precision farming

Sep 06, 2018

Abstract: We present a novel weed segmentation and mapping framework that processes multispectral images obtained from an unmanned aerial vehicle (UAV) using a deep neural network (DNN). Most studies on crop/weed semantic segmentation only consider single images for processing and classification. Images taken by UAVs often cover only a few hundred square meters with either color only or color and near-infrared (NIR) channels. Computing a single large and accurate vegetation map (e.g., crop/weed) using a DNN is non-trivial due to difficulties arising from: (1) limited ground sample distances (GSDs) in high-altitude datasets, (2) sacrificed resolution resulting from downsampling high-fidelity images, and (3) multispectral image alignment. To address these issues, we adopt a standard sliding window approach that operates on only small portions of multispectral orthomosaic maps (tiles), which are channel-wise aligned and calibrated radiometrically across the entire map. We define the tile size to be the same as that of the DNN input to avoid resolution loss. Compared to our baseline model (i.e., SegNet with 3-channel RGB inputs) yielding an area under the curve (AUC) of [background=0.607, crop=0.681, weed=0.576], our proposed model with 9 input channels achieves [0.839, 0.863, 0.782]. Additionally, we provide an extensive analysis of 20 trained models, both qualitatively and quantitatively, in order to evaluate the effects of varying input channels and tunable network hyperparameters. Furthermore, we release a large sugar beet/weed aerial dataset with expertly guided annotations for further research in the fields of remote sensing, precision agriculture, and agricultural robotics.
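As a rough illustration of the tiling idea in this abstract, the sketch below computes tile origins for a large orthomosaic so that each tile has exactly the network's input size and no downsampling is needed. The function name `tile_origins`, the example sizes, and the edge handling (shifting the last tile inward so it stays full-size) are assumptions made for illustration, not details taken from the paper.

```python
def tile_origins(height, width, tile_h, tile_w):
    """Return (row, col) top-left corners of full-size tiles covering a map.

    The stride equals the tile size. If the map size is not a multiple of
    the tile size, the last tile in that direction is shifted inward, so it
    may overlap its neighbour (an assumed edge policy, not from the paper).
    """
    if height < tile_h or width < tile_w:
        raise ValueError("map is smaller than a single tile")

    def starts(length, tile):
        s = list(range(0, length - tile + 1, tile))
        if s[-1] + tile < length:
            s.append(length - tile)  # shifted final tile covers the remainder
        return s

    return [(r, c) for r in starts(height, tile_h) for c in starts(width, tile_w)]


def crop_tile(image, row, col, tile_h, tile_w):
    """Cut one tile out of a 2D image given as a list of rows."""
    return [line[col:col + tile_w] for line in image[row:row + tile_h]]


# Hypothetical 10 x 7 map and 4 x 4 network input
origins = tile_origins(10, 7, 4, 4)
image = [[r * 7 + c for c in range(7)] for r in range(10)]
first_tile = crop_tile(image, *origins[0], 4, 4)
```

Each tile would then be passed to the segmentation network and the per-tile predictions written back to the same coordinates in the output map.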

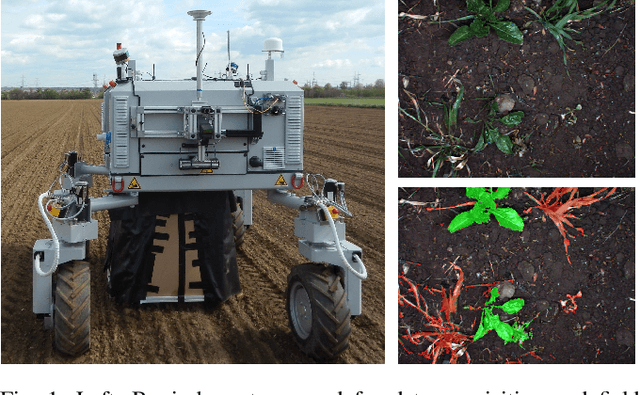

Joint Stem Detection and Crop-Weed Classification for Plant-specific Treatment in Precision Farming

Jun 09, 2018

Abstract: Applying agrochemicals is the default procedure for conventional weed control in crop production, but has negative impacts on the environment. Robots have the potential to treat every plant in the field individually and thus can reduce the required use of such chemicals. To achieve that, robots need the ability to identify crops and weeds in the field and must additionally select effective treatments. While certain types of weed can be treated mechanically, other types need to be treated by (selective) spraying. In this paper, we present an approach that provides the necessary information for effective plant-specific treatment. It outputs the stem location for weeds, which allows for mechanical treatments, and the covered area of the weed for selective spraying. Our approach uses an end-to-end trainable fully convolutional network that simultaneously estimates stem positions as well as the covered area of crops and weeds. It jointly learns the class-wise stem detection and the pixel-wise semantic segmentation. Experimental evaluations on different real-world datasets show that our approach is able to reliably solve this problem. Compared to state-of-the-art approaches, our approach not only substantially improves the stem detection accuracy, i.e., distinguishing crop and weed stems, but also provides an improvement in the semantic segmentation performance.

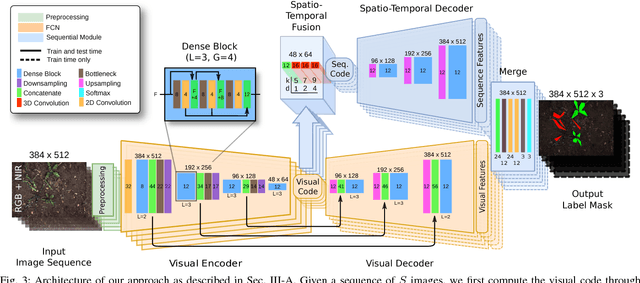

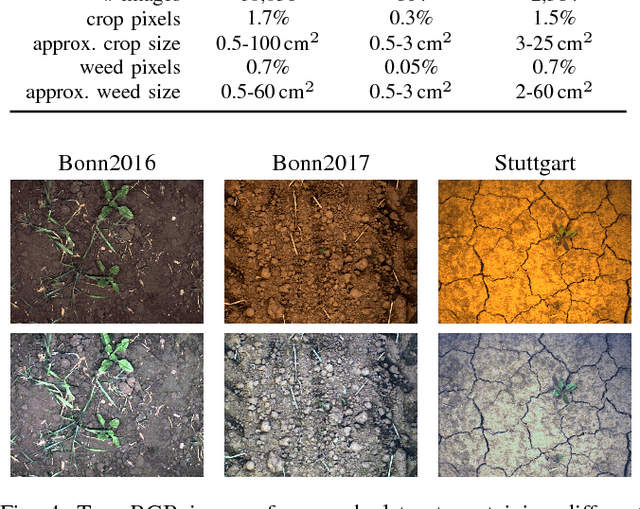

Fully Convolutional Networks with Sequential Information for Robust Crop and Weed Detection in Precision Farming

Jun 09, 2018

Abstract: Reducing the use of agrochemicals is an important component of sustainable agriculture. Robots that can perform targeted weed control offer the potential to contribute to this goal, for example, through specialized weeding actions such as selective spraying or mechanical weed removal. A prerequisite of such systems is a reliable and robust plant classification system that is able to distinguish crop and weed in the field. A major challenge in this context is that different fields show large variability. Thus, classification systems have to robustly cope with substantial environmental changes with respect to weed pressure and weed types, growth stages of the crop, visual appearance, and soil conditions. In this paper, we propose a novel crop-weed classification system that relies on a fully convolutional network with an encoder-decoder structure and incorporates spatial information by considering image sequences. Exploiting the crop arrangement information that is observable from the image sequences enables our system to robustly estimate a pixel-wise labeling of the images into crop and weed, i.e., a semantic segmentation. We provide a thorough experimental evaluation, which shows that our system generalizes well to previously unseen fields under varying environmental conditions, a key capability for actually using such systems in precision farming. We provide comparisons to other state-of-the-art approaches and show that our system substantially improves the accuracy of crop-weed classification without requiring a retraining of the model.

Real-time Semantic Segmentation of Crop and Weed for Precision Agriculture Robots Leveraging Background Knowledge in CNNs

Mar 02, 2018

Abstract: Precision farming robots, which aim to reduce the amount of herbicides that need to be applied in the fields, must be able to identify crops and weeds in real time to trigger weeding actions. In this paper, we address the problem of CNN-based semantic segmentation of crop fields, separating sugar beet plants, weeds, and background based solely on RGB data. We propose a CNN that exploits existing vegetation indexes and provides a classification in real time. Furthermore, it can be effectively retrained for previously unseen fields with a comparably small amount of training data. We implemented and thoroughly evaluated our system on a real agricultural robot operating in different fields in Germany and Switzerland. The results show that our system generalizes well, can operate at around 20 Hz, and is suitable for online operation in the fields.
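To make "exploits existing vegetation indexes" more concrete, here is a minimal sketch of one widely used RGB vegetation index, Excess Green (ExG = 2g - r - b on chromatic coordinates), appended to a pixel as an extra input channel. Whether ExG is among the indexes used in the paper, and how the paper feeds them to the network, are assumptions here; the function names are hypothetical.

```python
def excess_green(r, g, b):
    """Excess Green index computed on chromatic coordinates.

    r, g, b are raw channel intensities (any non-negative scale).
    Returns a value in [-1, 2]; vegetation tends to score high.
    """
    total = r + g + b
    if total == 0:
        return 0.0  # black pixel: no chromatic information
    rn, gn, bn = r / total, g / total, b / total
    return 2 * gn - rn - bn


def with_index_channel(pixel):
    """Append ExG to an (R, G, B) pixel as an extra input channel (assumed layout)."""
    r, g, b = pixel
    return (r, g, b, excess_green(r, g, b))


# A greenish plant pixel versus a brownish soil pixel (made-up values)
plant = with_index_channel((60, 160, 50))
soil = with_index_channel((120, 90, 70))
```

In this toy comparison the plant pixel receives a clearly higher index value than the soil pixel, which is the kind of background knowledge an index channel can hand to a network up front.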
