Christian Dornhege

Building an Aerial-Ground Robotics System for Precision Farming

Nov 08, 2019

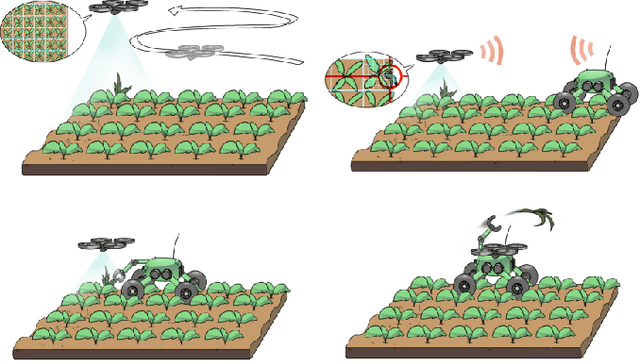

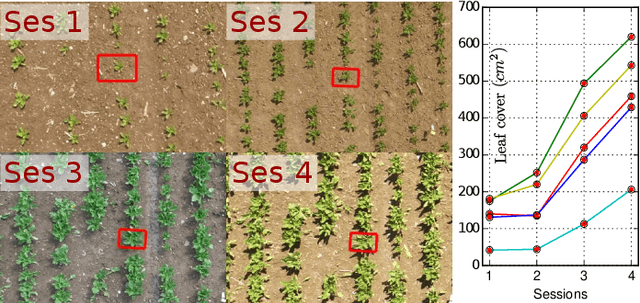

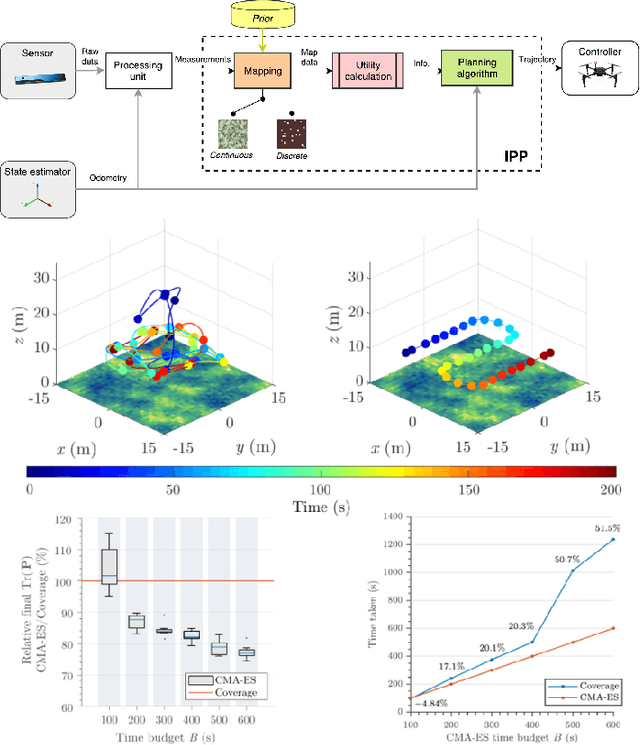

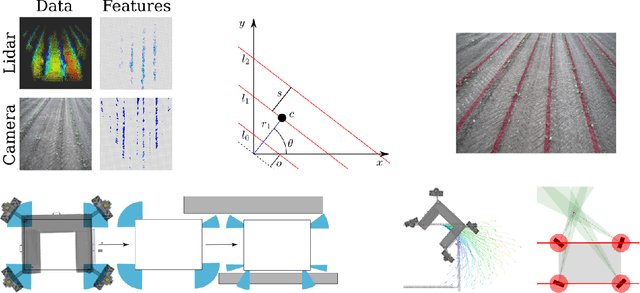

Abstract: The application of autonomous robots in agriculture is gaining more and more popularity thanks to the high impact it may have on food security, sustainability, resource use efficiency, reduction of chemical treatments, minimization of the human effort and maximization of yield. The Flourish research project faced this challenge by developing an adaptable robotic solution for precision farming that combines the aerial survey capabilities of small autonomous unmanned aerial vehicles (UAVs) with flexible targeted intervention performed by multi-purpose agricultural unmanned ground vehicles (UGVs). This paper presents an exhaustive overview of the scientific and technological advances and outcomes obtained in the Flourish project. We introduce multi-spectral perception algorithms and aerial and ground based systems developed to monitor crop density, weed pressure, crop nitrogen nutrition status, and to accurately classify and locate weeds. We then introduce the navigation and mapping systems to deal with the specificity of the employed robots and of the agricultural environment, highlighting the collaborative modules that enable the UAVs and UGVs to collect and share information in a unified environment model. We finally present the ground intervention hardware, software solutions, and interfaces we implemented and tested in different field conditions and with different crops. We describe here a real use case in which a UAV collaborates with a UGV to monitor the field and to perform selective spraying treatments in a totally autonomous way.

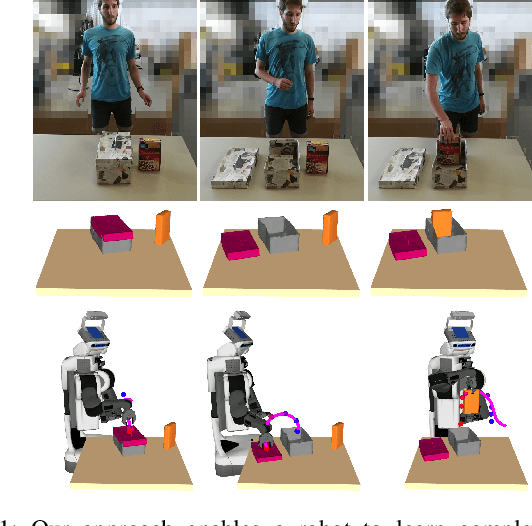

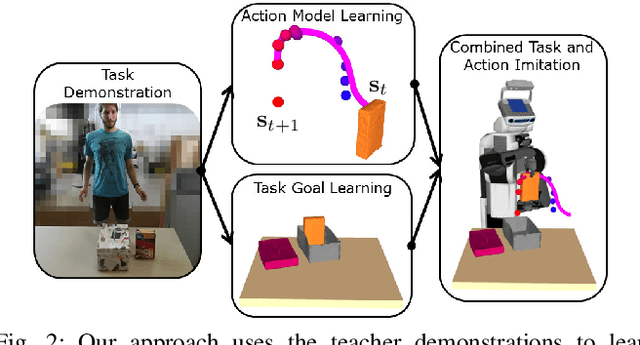

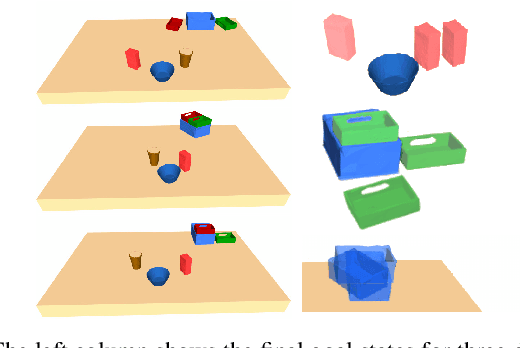

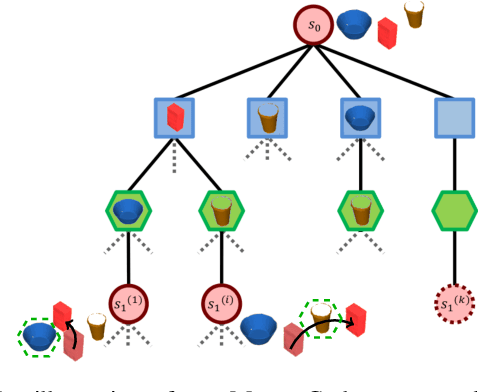

Combined Task and Action Learning from Human Demonstrations for Mobile Manipulation Applications

Aug 25, 2019

Abstract: Learning from demonstrations is a promising paradigm for transferring knowledge to robots. However, learning mobile manipulation tasks directly from a human teacher is a complex problem as it requires learning models of both the overall task goal and of the underlying actions. Additionally, learning from a small number of demonstrations often introduces ambiguity with respect to the intention of the teacher, making it challenging to commit to one model for generalizing the task to new settings. In this paper, we present an approach to learning flexible mobile manipulation action models and task goal representations from teacher demonstrations. Our action models enable the robot to consider different likely outcomes of each action and to generate feasible trajectories for achieving them. Accordingly, we leverage a probabilistic framework based on Monte Carlo tree search to compute sequences of feasible actions imitating the teacher intention in new settings without requiring the teacher to specify an explicit goal state. We demonstrate the effectiveness of our approach in complex tasks carried out in real-world settings.

3D Human Pose Estimation in RGBD Images for Robotic Task Learning

Mar 13, 2018

Abstract: We propose an approach to estimate 3D human pose in real-world units from a single RGBD image and show that it exceeds the performance of monocular 3D pose estimation approaches from color as well as pose estimation exclusively from depth. Our approach builds on robust human keypoint detectors for color images and incorporates depth for lifting into 3D. We combine the system with our learning from demonstration framework to instruct a service robot without the need for markers. Experiments in real-world settings demonstrate that our approach enables a PR2 robot to imitate manipulation actions observed from a human teacher.
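The abstract does not say how depth is used to lift the 2D keypoints, so the following is only a minimal sketch of the simplest possible variant: direct back-projection through a pinhole camera model. It is not the authors' method. The function name `lift_keypoints`, the intrinsics `fx`, `fy`, `cx`, `cy`, and the convention that depth is a row-major grid in metres with `0` marking missing readings are all assumptions made for illustration.

```python
from typing import List, Optional, Tuple

Keypoint2D = Tuple[float, float]      # (u, v) pixel coordinates
Point3D = Tuple[float, float, float]  # (x, y, z) in metres, camera frame


def lift_keypoints(
    keypoints: List[Keypoint2D],
    depth_m: List[List[float]],
    fx: float,
    fy: float,
    cx: float,
    cy: float,
) -> List[Optional[Point3D]]:
    """Back-project 2D keypoints into 3D with a pinhole camera model.

    Hypothetical helper: depth_m[row][col] is assumed to hold metric depth,
    with values <= 0 meaning "no reading". Keypoints that fall outside the
    image or on a missing depth pixel are returned as None.
    """
    height, width = len(depth_m), len(depth_m[0])
    lifted: List[Optional[Point3D]] = []
    for u, v in keypoints:
        col, row = int(round(u)), int(round(v))
        if not (0 <= row < height and 0 <= col < width):
            lifted.append(None)  # keypoint lies outside the depth image
            continue
        z = depth_m[row][col]
        if z <= 0.0:
            lifted.append(None)  # sensor returned no depth at this pixel
            continue
        # Pinhole model: x = (u - cx) * z / fx, y = (v - cy) * z / fy
        lifted.append(((u - cx) * z / fx, (v - cy) * z / fy, z))
    return lifted
```

A single-pixel lookup like this is sensitive to depth noise and occlusion at the keypoint location, which is one reason a practical system would need something more robust than this sketch.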

Why did the Robot Cross the Road? - Learning from Multi-Modal Sensor Data for Autonomous Road Crossing

Sep 18, 2017

Abstract: We consider the problem of developing robots that navigate like pedestrians on sidewalks through city centers for performing various tasks including delivery and surveillance. One particular challenge for such robots is crossing streets without pedestrian traffic lights. To solve this task, the robot has to decide based on its sensory input whether the road is clear. In this work, we propose a novel multi-modal learning approach for the problem of autonomous street crossing. Our approach relies solely on laser and radar data and learns a classifier based on Random Forests to predict when it is safe to cross the road. We present extensive experimental evaluations using real-world data collected from multiple street crossing situations, which demonstrate that our approach yields safe and accurate street crossing behavior and generalizes well over different types of situations. A comparison to alternative methods demonstrates the advantages of our approach.
