Nichola Abdo

Scalable Scene Flow from Point Clouds in the Real World

Mar 15, 2021

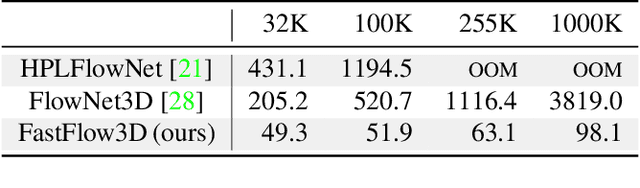

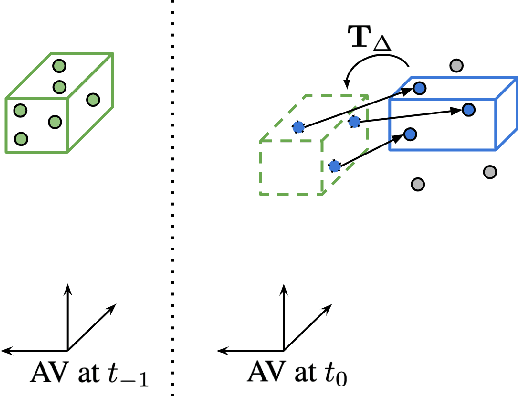

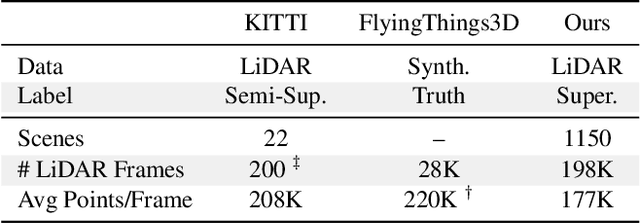

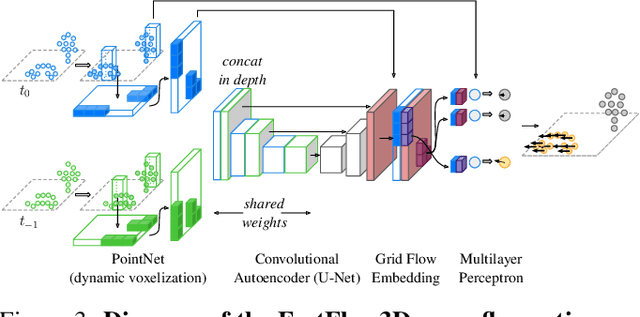

Abstract: Autonomous vehicles operate in highly dynamic environments, necessitating an accurate assessment of which aspects of a scene are moving and where they are moving to. A popular approach to 3D motion estimation -- termed scene flow -- is to employ 3D point cloud data from consecutive LiDAR scans, although such approaches have been limited by the small size of real-world, annotated LiDAR data. In this work, we introduce a new large-scale benchmark for scene flow based on the Waymo Open Dataset. The dataset is $\sim$1,000$\times$ larger than previous real-world datasets in terms of the number of annotated frames and is derived from the corresponding tracked 3D objects. We demonstrate how previous works were bounded based on the amount of real LiDAR data available, suggesting that larger datasets are required to achieve state-of-the-art predictive performance. Furthermore, we show how previous heuristics for operating on point clouds such as artificial down-sampling heavily degrade performance, motivating a new class of models that are tractable on the full point cloud. To address this issue, we introduce the model architecture FastFlow3D that provides real-time inference on the full point cloud. Finally, we demonstrate that this problem is amenable to techniques from semi-supervised learning by highlighting open problems for generalizing methods for predicting motion on unlabeled objects. We hope that this dataset may provide new opportunities for developing real-world scene flow systems and motivate a new class of machine learning problems.
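
The abstract states that the flow annotations are derived from tracked 3D objects. As a rough illustration only, the sketch below assumes each tracked box is a rigid body described by a hypothetical pose tuple `(cx, cy, cz, yaw)` in a common world frame, and that a point's flow is its displacement under the box's motion divided by the frame interval. The function name `rigid_flow`, the pose layout, and the default `dt` are assumptions for this example, not the dataset's actual format or the paper's exact procedure (ego-motion compensation is ignored here).

```python
import math


def rigid_flow(point, pose_prev, pose_curr, dt=0.1):
    """Velocity of a point that rides on a rigidly moving tracked box.

    point:      (x, y, z) of the point at the current time, world frame.
    pose_prev:  hypothetical (cx, cy, cz, yaw) of the box at the previous time.
    pose_curr:  hypothetical (cx, cy, cz, yaw) of the box at the current time.
    dt:         assumed time between the two frames, in seconds.
    """
    px, py, pz = point
    cx0, cy0, cz0, yaw0 = pose_prev
    cx1, cy1, cz1, yaw1 = pose_curr

    # Express the point in the box's local frame at the current time
    # (inverse of the current pose: translate, then rotate by -yaw).
    dx, dy = px - cx1, py - cy1
    c, s = math.cos(-yaw1), math.sin(-yaw1)
    lx, ly, lz = c * dx - s * dy, s * dx + c * dy, pz - cz1

    # Place that same local point using the previous pose to find
    # where the point was one frame earlier.
    c, s = math.cos(yaw0), math.sin(yaw0)
    qx = cx0 + c * lx - s * ly
    qy = cy0 + s * lx + c * ly
    qz = cz0 + lz

    # Flow is the displacement over the frame interval.
    return ((px - qx) / dt, (py - qy) / dt, (pz - qz) / dt)


# A box that moved 1 m along x between frames gives every point on it
# a velocity of roughly (10, 0, 0) m/s at dt = 0.1 s.
flow = rigid_flow((1.5, 0.2, 0.3), (0.0, 0.0, 0.0, 0.0), (1.0, 0.0, 0.0, 0.0))
```

Points that fall outside every tracked box have no object motion to inherit under this scheme, which is one way to read the abstract's remark about predicting motion on unlabeled objects.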

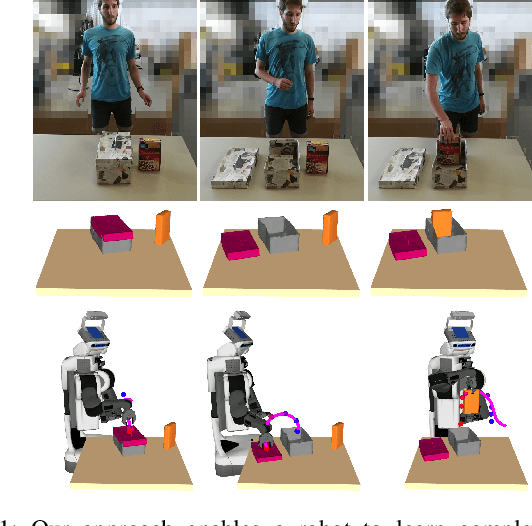

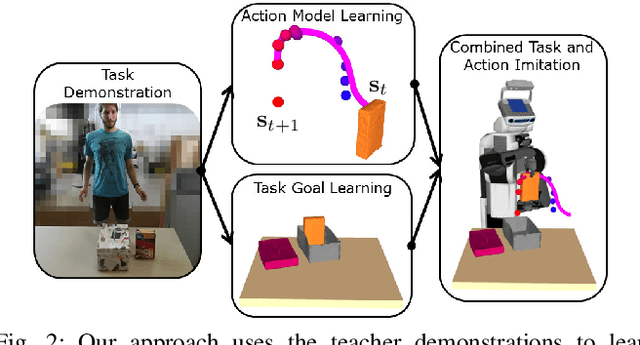

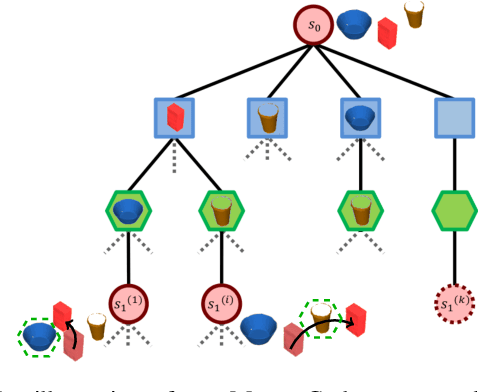

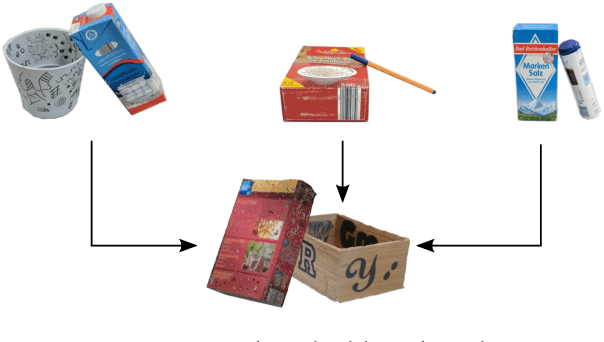

Combined Task and Action Learning from Human Demonstrations for Mobile Manipulation Applications

Aug 25, 2019

Abstract: Learning from demonstrations is a promising paradigm for transferring knowledge to robots. However, learning mobile manipulation tasks directly from a human teacher is a complex problem as it requires learning models of both the overall task goal and of the underlying actions. Additionally, learning from a small number of demonstrations often introduces ambiguity with respect to the intention of the teacher, making it challenging to commit to one model for generalizing the task to new settings. In this paper, we present an approach to learning flexible mobile manipulation action models and task goal representations from teacher demonstrations. Our action models enable the robot to consider different likely outcomes of each action and to generate feasible trajectories for achieving them. Accordingly, we leverage a probabilistic framework based on Monte Carlo tree search to compute sequences of feasible actions imitating the teacher intention in new settings without requiring the teacher to specify an explicit goal state. We demonstrate the effectiveness of our approach in complex tasks carried out in real-world settings.

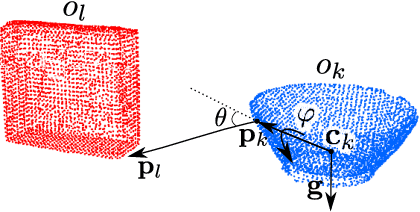

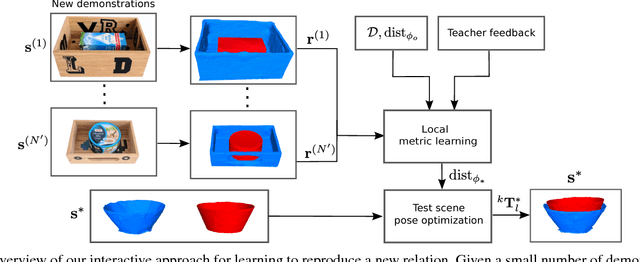

Optimization Beyond the Convolution: Generalizing Spatial Relations with End-to-End Metric Learning

Mar 24, 2018

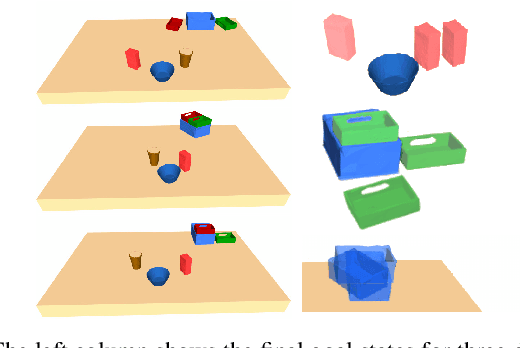

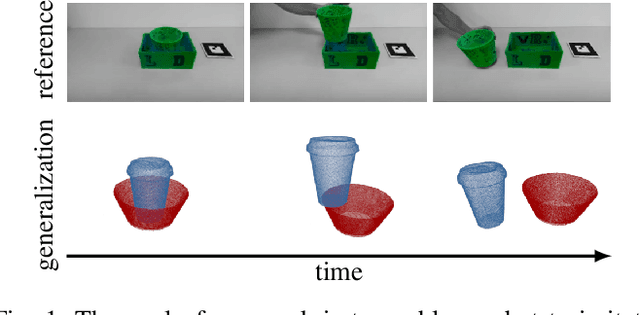

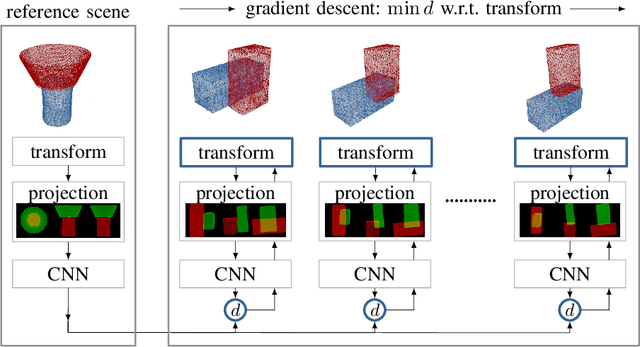

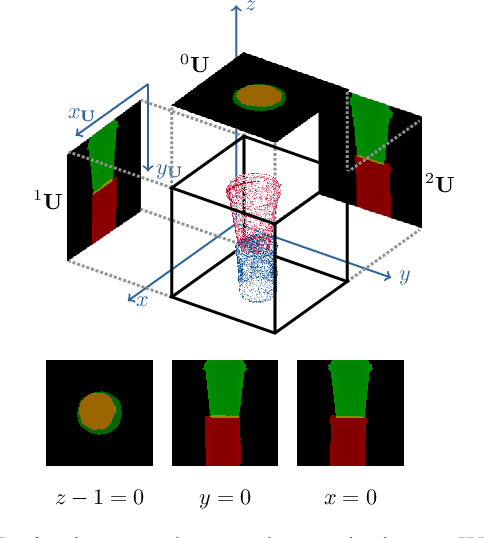

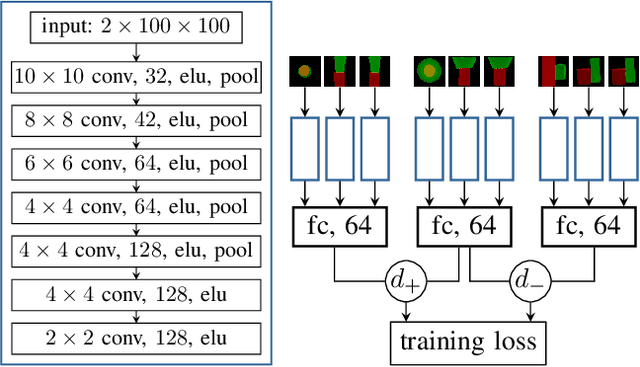

Abstract: To operate intelligently in domestic environments, robots require the ability to understand arbitrary spatial relations between objects and to generalize them to objects of varying sizes and shapes. In this work, we present a novel end-to-end approach to generalize spatial relations based on distance metric learning. We train a neural network to transform 3D point clouds of objects to a metric space that captures the similarity of the depicted spatial relations, using only geometric models of the objects. Our approach employs gradient-based optimization to compute object poses in order to imitate an arbitrary target relation by reducing the distance to it under the learned metric. Our results based on simulated and real-world experiments show that the proposed method enables robots to generalize spatial relations to unknown objects over a continuous spectrum.

Metric Learning for Generalizing Spatial Relations to New Objects

Jul 24, 2017

Abstract: Human-centered environments are rich with a wide variety of spatial relations between everyday objects. For autonomous robots to operate effectively in such environments, they should be able to reason about these relations and generalize them to objects with different shapes and sizes. For example, having learned to place a toy inside a basket, a robot should be able to generalize this concept using a spoon and a cup. This requires a robot to have the flexibility to learn arbitrary relations in a lifelong manner, making it challenging for an expert to pre-program it with sufficient knowledge to do so beforehand. In this paper, we address the problem of learning spatial relations by introducing a novel method from the perspective of distance metric learning. Our approach enables a robot to reason about the similarity between pairwise spatial relations, thereby enabling it to use its previous knowledge when presented with a new relation to imitate. We show how this makes it possible to learn arbitrary spatial relations from non-expert users using a small number of examples and in an interactive manner. Our extensive evaluation with real-world data demonstrates the effectiveness of our method in reasoning about a continuous spectrum of spatial relations and generalizing them to new objects.

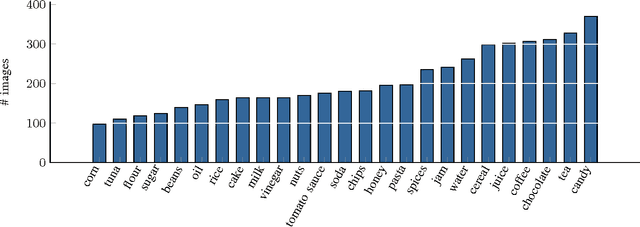

The Freiburg Groceries Dataset

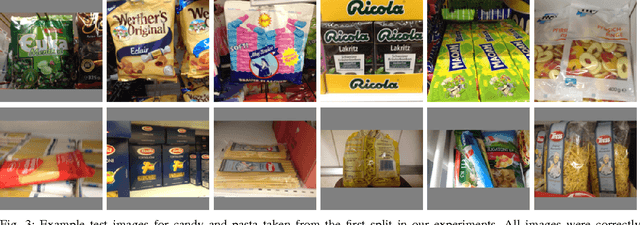

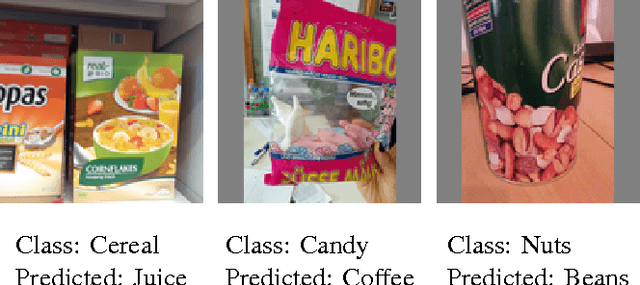

Nov 17, 2016

Abstract: With the increasing performance of machine learning techniques in the last few years, the computer vision and robotics communities have created a large number of datasets for benchmarking object recognition tasks. These datasets cover a large spectrum of natural images and object categories, making them not only useful as a testbed for comparing machine learning approaches, but also a great resource for bootstrapping different domain-specific perception and robotic systems. One such domain is domestic environments, where an autonomous robot has to recognize a large variety of everyday objects such as groceries. This is a challenging task due to the large variety of objects and products, and there is a great need for real-world training data that goes beyond product images available online. In this paper, we address this issue and present a dataset consisting of 5,000 images covering 25 different classes of groceries, with at least 97 images per class. We collected all images from real-world settings at different stores and apartments. In contrast to existing groceries datasets, our dataset includes a large variety of perspectives, lighting conditions, and degrees of clutter. Overall, our images contain thousands of different object instances. It is our hope that machine learning and robotics researchers find this dataset of use for training, testing, and bootstrapping their approaches. As a baseline classifier to facilitate comparison, we re-trained the CaffeNet architecture (an adaptation of the well-known AlexNet) on our dataset and achieved a mean accuracy of 78.9%. We release this trained model along with the code and data splits we used in our experiments.

Collaborative Filtering for Predicting User Preferences for Organizing Objects

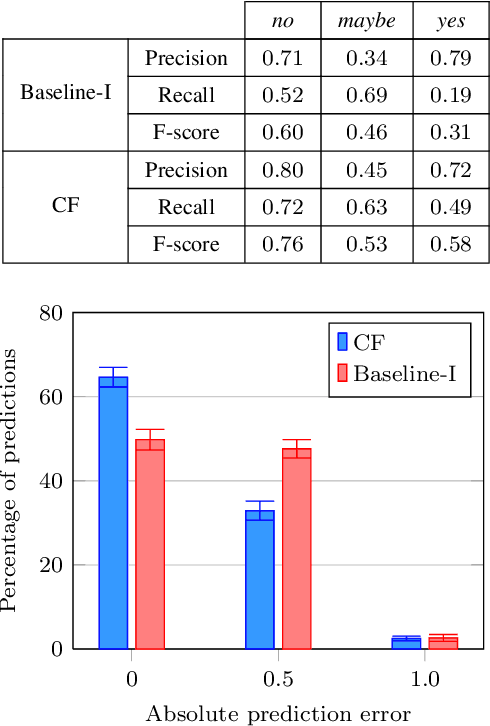

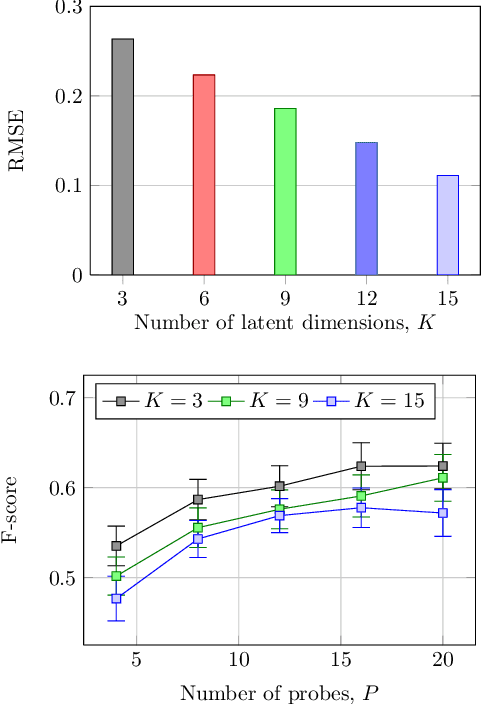

Dec 20, 2015

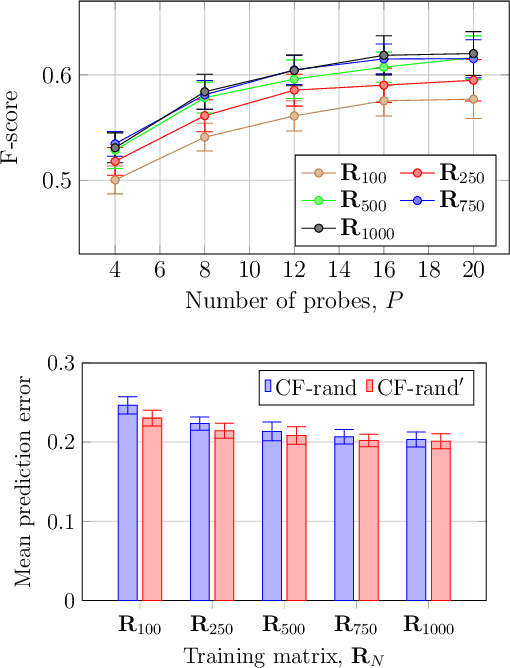

Abstract: As service robots become more and more capable of performing useful tasks for us, there is a growing need to teach robots how we expect them to carry out these tasks. However, different users typically have their own preferences, for example with respect to arranging objects on different shelves. As many of these preferences depend on a variety of factors including personal taste, cultural background, or common sense, it is challenging for an expert to pre-program a robot in order to accommodate all potential users. At the same time, it is impractical for robots to constantly query users about how they should perform individual tasks. In this work, we present an approach to learn patterns in user preferences for the task of tidying up objects in containers, e.g., shelves or boxes. Our method builds upon the paradigm of collaborative filtering for making personalized recommendations and relies on data from different users that we gather using crowdsourcing. To deal with novel objects for which we have no data, we propose a method that complements standard collaborative filtering by leveraging information mined from the Web. When solving a tidy-up task, we first predict pairwise object preferences of the user. Then, we subdivide the objects in containers by modeling a spectral clustering problem. Our solution is easy to update, does not require complex modeling, and improves with the amount of user data. We evaluate our approach using crowdsourcing data from over 1,200 users and demonstrate its effectiveness for two tidy-up scenarios. Additionally, we show that a real robot can reliably predict user preferences using our approach.
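
To make the idea of predicting pairwise object preferences from other users' data more concrete, here is a minimal sketch of a user-based (neighborhood) collaborative filter. This is a deliberately simple stand-in and not necessarily the formulation used in the paper. The rating scale (0 = keep apart, 1 = place together), the object pairs, and the helper names `cosine` and `predict` are all hypothetical, chosen for this example only.

```python
import math


def cosine(u, v):
    """Cosine similarity of two users over the object pairs both have rated."""
    common = [pair for pair in u if pair in v]
    if not common:
        return 0.0
    dot = sum(u[p] * v[p] for p in common)
    norm_u = math.sqrt(sum(u[p] ** 2 for p in common))
    norm_v = math.sqrt(sum(v[p] ** 2 for p in common))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0


def predict(target, others, pair):
    """Similarity-weighted average of other users' ratings for `pair`.

    Returns None when no positively similar user has rated the pair.
    """
    num = den = 0.0
    for other in others:
        if pair in other:
            weight = cosine(target, other)
            if weight > 0:
                num += weight * other[pair]
                den += weight
    return num / den if den else None


# Hypothetical ratings: how strongly a user wants two objects on the same shelf.
salt_pepper = ("salt", "pepper")
salt_soap = ("salt", "soap")
pepper_oil = ("pepper", "oil")

new_user = {salt_pepper: 0.9, salt_soap: 0.1}
crowd = [
    {salt_pepper: 0.9, salt_soap: 0.1, pepper_oil: 0.8},  # similar tastes
    {salt_pepper: 0.1, salt_soap: 0.9, pepper_oil: 0.2},  # different tastes
]

# The estimate leans toward the rating of the more similar user.
estimate = predict(new_user, crowd, pepper_oil)
```

In the pipeline the abstract describes, predicted pairwise preferences like `estimate` would then feed a grouping step (spectral clustering in the paper) that assigns objects to containers; that step is not shown here.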
